Top 10 Linear Regression Projects for Beginners (with Source Code)

Oct 10, 2025 (Last Updated)

In the vast universe of data science and artificial intelligence, linear regression is a foundational method. It is often the first algorithm that aspiring data scientists and machine learning engineers learn, and for good reason: it is simple, interpretable, and widely applicable. But how do you go from theory to implementation? The answer is practical projects.

This guide is a collection of linear regression projects in machine learning. We start with a simple linear regression problem and then move on to more complex situations. The project ideas range from mini projects for students to projects built around a defined real-world problem.

Whether you are looking for beginner projects or more involved case studies, by the end of this article you will have both the insight and the practical support you need to work through a linear regression project yourself.

Table of contents

- What is Linear Regression and Why is it Important?

- Essential Tools and Concepts

- Beginner-Friendly Linear Regression Projects with Source Code

- Predicting Student Grades

- Advertising Sales Prediction

- House Price Prediction

- Salary Prediction

- Stock Price Prediction

- Diabetes Progression Prediction

- Startup Profit Prediction

- Car Price Prediction

- CO₂ Emissions Prediction

- Life Expectancy Prediction

- Datasets for Linear Regression Projects

- Best Practices for Linear Regression Projects

- Final thoughts:

- Can linear regression be used for classification problems?

- How do I know if linear regression is the right model for my dataset?

- How do I choose evaluation metrics for linear regression projects?

- What is overfitting in linear regression?

- Can I deploy a linear regression model in real-world applications?

What is Linear Regression and Why is it Important?

Before we dive into the linear regression projects, let’s take a moment for a quick review.

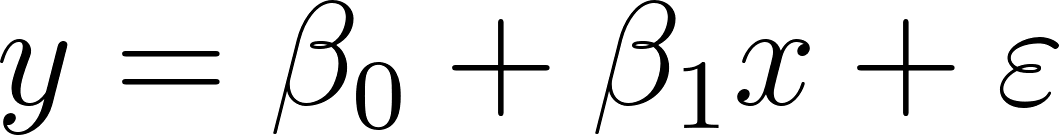

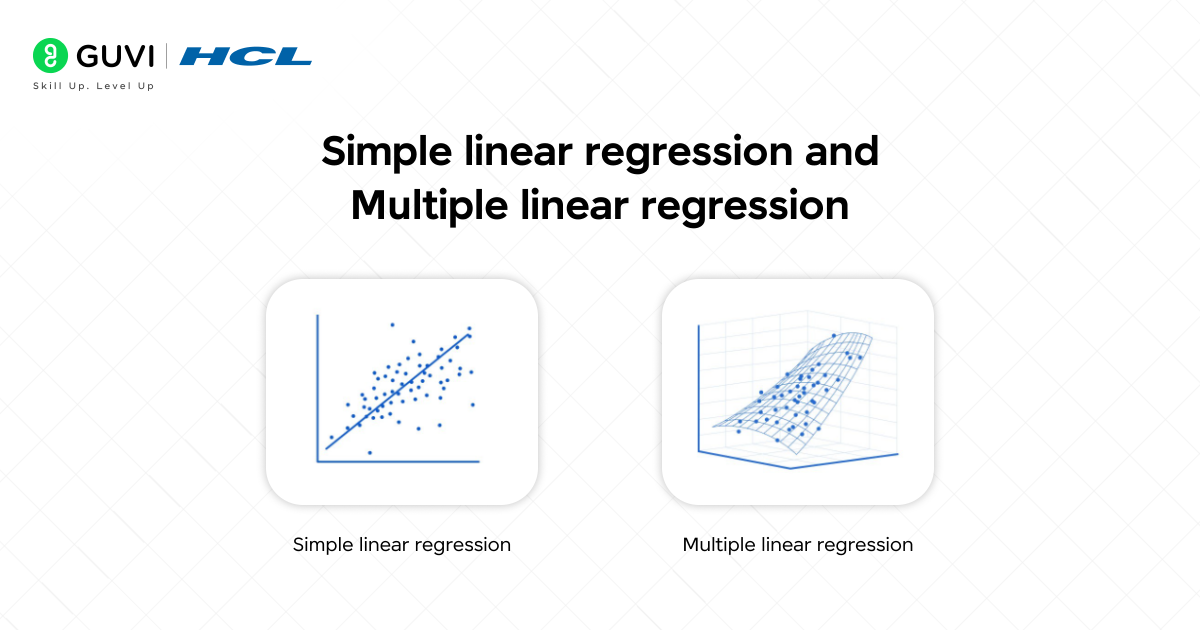

Simple Linear Regression: Contains one independent variable. For example, predicting a student’s exam score based on the number of hours they studied.

Equation: y = β₀ + β₁x + ε

- y: Dependent variable (Target we want to predict)

- x: Independent variable (Feature we use for prediction)

- β₀: y-intercept (The value of y when x is 0)

- β₁: Slope of the line (The change in y for a one-unit change in x)

- ε: Error term (The difference between the observed and predicted value)
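To make the roles of β₀ and β₁ concrete, here is a minimal sketch that estimates both with the ordinary least squares closed form. The `hours` and `scores` arrays are made-up illustration values, not data from a real dataset.

```python
import numpy as np

# Hypothetical study hours and exam scores (illustrative values only)
hours = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
scores = np.array([52.0, 55.0, 61.0, 64.0, 68.0])

# Ordinary least squares estimates for y = b0 + b1 * x
x_mean, y_mean = hours.mean(), scores.mean()
b1 = np.sum((hours - x_mean) * (scores - y_mean)) / np.sum((hours - x_mean) ** 2)
b0 = y_mean - b1 * x_mean

# The residuals are the observed counterpart of the error term
residuals = scores - (b0 + b1 * hours)

print(f"slope b1 = {b1:.2f}, intercept b0 = {b0:.2f}")
# prints: slope b1 = 4.10, intercept b0 = 47.70
```

Under these toy numbers, each extra hour of study is associated with about 4.1 more points, and the fitted line crosses the y-axis at 47.7.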

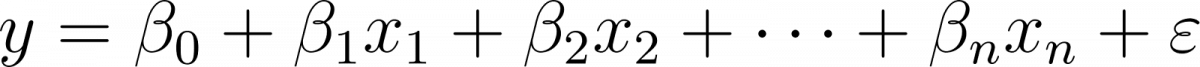

Multiple Linear Regression: Contains two or more independent variables. For instance, predicting a house price based on its size, number of bedrooms, and location.

Equation: y = β₀ + β₁x₁ + β₂x₂ + … + βₙxₙ + ε

Here, each x₁, x₂, …, xₙ represents a different feature, and each β coefficient represents the respective feature’s contribution to the target, assuming all other features are held constant.
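As a rough illustration of what "one coefficient per feature" means, the sketch below generates house prices from known coefficients and then recovers them with a least squares fit. The sizes, bedroom counts, and coefficient values are invented for the example.

```python
import numpy as np

# Synthetic houses: columns are size (sq ft) and number of bedrooms (made-up values)
X = np.array(
    [[1000, 2], [1500, 3], [1800, 3], [2400, 4], [3000, 5]], dtype=float
)

# Prices generated from assumed coefficients so the fit can be checked:
# price = 50000 + 100 * size + 5000 * bedrooms (no noise)
y = 50_000 + 100 * X[:, 0] + 5_000 * X[:, 1]

# Add a column of ones so the first coefficient acts as the intercept
X_design = np.column_stack([np.ones(len(X)), X])

# Solve the least squares problem for [b0, b1, b2]
beta, *_ = np.linalg.lstsq(X_design, y, rcond=None)

print("intercept, size, bedrooms:", np.round(beta, 2))
```

Because the toy data contains no noise, the fit returns the generating coefficients: holding bedrooms fixed, each extra square foot adds 100 to the predicted price.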

Essential Tools and Concepts

Programming Language: Python and R are the two most popular choices. In Python, the usual libraries are Scikit-learn and Statsmodels; in R, you can use the built-in lm() function.

Datasets: A dataset is essential to any machine learning project. You can find datasets on Kaggle, the UCI Machine Learning Repository, and data.gov.

IDE: Jupyter Notebooks and Google Colab are great environments for interactive programming, letting you code, visualize, and document your findings in one place.

Model Evaluation: Understand key metrics like R-squared (which measures the proportion of variance in the dependent variable that can be predicted from the independent variables) and Mean Squared Error (MSE) to assess your model’s performance.
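The sketch below computes MSE, RMSE, and R-squared by hand on a few invented numbers and compares the results with scikit-learn's `mean_squared_error` and `r2_score`. It is only meant to show what the metrics measure.

```python
import numpy as np
from sklearn.metrics import mean_squared_error, r2_score

# Made-up true values and predictions
y_true = np.array([3.0, 5.0, 7.0, 9.0])
y_pred = np.array([2.5, 5.5, 7.0, 8.0])

# MSE: average squared difference between observed and predicted values
mse = np.mean((y_true - y_pred) ** 2)

# RMSE: square root of MSE, expressed in the same units as the target
rmse = np.sqrt(mse)

# R-squared: 1 minus (unexplained variation / total variation)
ss_res = np.sum((y_true - y_pred) ** 2)
ss_tot = np.sum((y_true - y_true.mean()) ** 2)
r2 = 1 - ss_res / ss_tot

print(mse, r2)  # prints: 0.375 0.925
print(np.isclose(mse, mean_squared_error(y_true, y_pred)))  # prints: True
print(np.isclose(r2, r2_score(y_true, y_pred)))  # prints: True
```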

Beginner-Friendly Linear Regression Projects with Source Code

1. Predicting Student Grades

This is a classic linear regression project for students. The goal is simply to predict a student’s grade from a number of variables.

- Goal: To predict a student’s final grade.

- Dataset: The Student Performance Dataset from the UCI Repository is a good option.

- Approach: First develop a simple linear regression (e.g. predict grades from study time) and then build out to a multiple regression project by adding additional predictors.

- Why it is good for beginners: This is an excellent example of a linear regression project for practice as the dataset is organized and easy to understand.

Source Code: Student Grade Prediction (GitHub)

```python
# Import libraries
import pandas as pd
import matplotlib.pyplot as plt
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error, r2_score

# Load dataset
data = pd.read_csv("student_scores.csv")
print(data.head())

# Features and target
X = data[['Hours']]
y = data['Scores']

# Split data
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Train model
model = LinearRegression()
model.fit(X_train, y_train)

# Predictions
y_pred = model.predict(X_test)

# Evaluate
print("MSE:", mean_squared_error(y_test, y_pred))
print("R2 Score:", r2_score(y_test, y_pred))

# Visualization
plt.scatter(X, y, color="blue")
plt.plot(X, model.predict(X), color="red")
plt.xlabel("Study Hours")
plt.ylabel("Scores")
plt.title("Student Scores Prediction")
plt.show()
```

2. Advertising Sales Prediction

This is a very popular linear regression project in data science, explaining how different advertising budgets impact product sales.

- Goal: To predict sales based on advertising spending.

- Dataset: Advertising dataset with TV, Radio, and Newspaper columns.

- Approach:

- Use multiple linear regression with the predictor variables being TV, Radio, and Newspaper ad spending.

- Evaluate how much each medium contributes to the overall sales figure.

- Why is it good for beginners?

- It only has 3 features, so the dataset is easy to digest.

- You can easily visualize the relationships.

Source Code: Advertising Sales Prediction (GitHub)

```python
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error, r2_score

# Load dataset
data = pd.read_csv("Advertising.csv")

# Features and target
X = data[['TV', 'Radio', 'Newspaper']]
y = data['Sales']

# Train-test split
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Train model
model = LinearRegression()
model.fit(X_train, y_train)

# Predict
y_pred = model.predict(X_test)

# Evaluation
print("MSE:", mean_squared_error(y_test, y_pred))
print("R2 Score:", r2_score(y_test, y_pred))
```
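One way to see how much each medium contributes is to read the fitted coefficients. The sketch below does this on synthetic data; the budgets and the relationship used to generate `sales` are assumptions made up for illustration, not values from the Advertising dataset.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LinearRegression

rng = np.random.default_rng(0)
n = 200

# Synthetic advertising budgets (made-up ranges)
ads = pd.DataFrame({
    "TV": rng.uniform(0, 300, n),
    "Radio": rng.uniform(0, 50, n),
    "Newspaper": rng.uniform(0, 100, n),
})

# Assumed relationship used only to generate toy data:
# newspaper spend has no effect on sales here
sales = 3.0 + 0.05 * ads["TV"] + 0.2 * ads["Radio"] + rng.normal(0, 1, n)

model = LinearRegression().fit(ads, sales)

# One coefficient per medium: change in sales per unit of extra spend,
# holding the other two budgets fixed
coef_table = pd.Series(model.coef_, index=ads.columns).sort_values(ascending=False)
print(coef_table)
```

Each coefficient is expressed per unit of spend, so coefficients are only directly comparable when the features share the same unit, as the three budgets do here.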

3. House Price Prediction

Housing prices are one of the most common regression problems in real-world machine learning.

- Goal: Predict house prices using features such as number of rooms, area, and location.

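- Dataset: Boston Housing Dataset (formerly built into scikit-learn; load_boston was removed in version 1.2, but the raw data is still available from its original source).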

- Approach:

- Use multiple linear regression.

- Train the model on features like RM (average number of rooms), LSTAT (percentage of lower status population), etc.

- Why it is good for beginners: A classic case study in machine learning.

- Widely used in interviews and tutorials.

Source Code: House Price Prediction (GitHub)

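```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error, r2_score

# load_boston was removed in scikit-learn 1.2, so read the raw data from its
# original source. Each record is spread over two lines of the file.
data_url = "http://lib.stat.cmu.edu/datasets/boston"
raw_df = pd.read_csv(data_url, sep=r"\s+", skiprows=22, header=None)
feature_names = ["CRIM", "ZN", "INDUS", "CHAS", "NOX", "RM", "AGE",
                 "DIS", "RAD", "TAX", "PTRATIO", "B", "LSTAT"]
X = pd.DataFrame(
    np.hstack([raw_df.values[::2, :], raw_df.values[1::2, :2]]),
    columns=feature_names,
)
y = raw_df.values[1::2, 2]  # MEDV: median home value in $1000s

# Train-test split
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Train model
model = LinearRegression()
model.fit(X_train, y_train)

# Predict
y_pred = model.predict(X_test)

# Evaluation
print("MSE:", mean_squared_error(y_test, y_pred))
print("R2 Score:", r2_score(y_test, y_pred))
```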

4. Salary Prediction

A beginner-friendly linear regression project for practice, where we predict salaries based on years of experience.

- Goal: To predict an employee’s salary from years of experience.

- Dataset: Salary Data CSV.

- Approach: Train a simple linear regression model using “YearsExperience” as input and “Salary” as output.

- Why is it good for beginners?

- Very small and clean dataset.

- A perfect linear regression mini project.

Source Code: Salary Prediction (GitHub)

```python
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error, r2_score
import matplotlib.pyplot as plt

# Load dataset
data = pd.read_csv("Salary_Data.csv")

# Features and target
X = data[['YearsExperience']]
y = data['Salary']

# Train-test split
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Train model
model = LinearRegression()
model.fit(X_train, y_train)

# Predict
y_pred = model.predict(X_test)

# Evaluation
print("MSE:", mean_squared_error(y_test, y_pred))
print("R2 Score:", r2_score(y_test, y_pred))

# Visualization
plt.scatter(X, y, color="blue")
plt.plot(X, model.predict(X), color="red")
plt.xlabel("Years of Experience")
plt.ylabel("Salary")
plt.title("Salary Prediction using Linear Regression")
plt.show()
```

5. Stock Price Prediction

This project shows how linear regression can be applied to financial data.

- Goal: Predict the stock closing price from the previous day’s open, high, and low.

- Dataset: Yahoo Finance (via yfinance library).

- Approach:

- Collect stock data for a company.

- Train a regression model using the previous day’s Open, High, and Low as features.

- Why is it good for beginners?

- Real-world financial dataset.

- Useful for beginners exploring linear regression case studies.

Source Code: Stock Price Prediction (GitHub)

```python
import yfinance as yf
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error, r2_score

# Download stock data
df = yf.download("AAPL", start="2020-01-01", end="2021-01-01")

# Some yfinance versions return MultiIndex columns (field, ticker); flatten them
if isinstance(df.columns, pd.MultiIndex):
    df.columns = df.columns.get_level_values(0)

# Features: the previous day's Open, High, and Low; target: today's Close
X = df[['Open', 'High', 'Low']].shift(1).iloc[1:]
y = df['Close'].iloc[1:]

# Train-test split (shuffle=False keeps the test period after the training period)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, shuffle=False)

# Train model
model = LinearRegression()
model.fit(X_train, y_train)

# Predict
y_pred = model.predict(X_test)

# Evaluation
print("MSE:", mean_squared_error(y_test, y_pred))
print("R2 Score:", r2_score(y_test, y_pred))
```

6. Diabetes Progression Prediction

This linear regression project shows how you can predict diabetes progression from a patient’s medical data.

- Goal: Predict diabetes progression from patient medical data.

- Dataset: Scikit-learn’s built-in Diabetes dataset.

- Approach:

- Use regression on 10 medical predictors (BMI, blood pressure, age, etc.).

- Predict the diabetes progression score.

- Why it’s good for beginners: A healthcare-related dataset that helps understand feature importance and evaluation.

Source Code: Diabetes Progression Prediction (GitHub)

```python
import matplotlib.pyplot as plt
from sklearn.datasets import load_diabetes
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error, r2_score

# Load dataset
diabetes = load_diabetes()
X, y = diabetes.data, diabetes.target

# Train-test split
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Train model
model = LinearRegression()
model.fit(X_train, y_train)

# Predictions
y_pred = model.predict(X_test)

# Evaluation
print("MSE:", mean_squared_error(y_test, y_pred))
print("R2 Score:", r2_score(y_test, y_pred))

# Visualization
plt.scatter(y_test, y_pred, color="purple")
plt.xlabel("Actual")
plt.ylabel("Predicted")
plt.title("Diabetes Progression Prediction")
plt.show()
```

7. Startup Profit Prediction

- Goal: Predict startup profits based on R&D spend, administration, and marketing spend.

- Dataset: 50 Startups Dataset

- Approach:

- Perform multiple regression using different budget features.

- Compare the contribution of each spending category.

- Why it’s good for beginners: Shows real-world application in business finance with a small, simple dataset.

Source Code: Startup Profit Prediction (GitHub)

```python
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error, r2_score

# Load dataset
data = pd.read_csv("50_Startups.csv")
print(data.head())

# Convert categorical column 'State' using one-hot encoding
data = pd.get_dummies(data, drop_first=True)

# Features and target
X = data.drop('Profit', axis=1)
y = data['Profit']

# Train-test split
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Train model
model = LinearRegression()
model.fit(X_train, y_train)

# Predictions
y_pred = model.predict(X_test)

# Evaluation
print("MSE:", mean_squared_error(y_test, y_pred))
print("R2 Score:", r2_score(y_test, y_pred))
```

- The term “Machine Learning” was coined way back in 1959 by Arthur Samuel!

- A simple Logistic Regression model can sometimes outperform deep neural networks on clean, structured data.

- Random Forest got its name because it’s literally a “forest” of random decision trees!

8. Car Price Prediction

- Goal: Predict car prices using mileage, year, and horsepower.

- Dataset: Car Dataset

- Approach:

- Use multiple regression to analyze price dependency on different car features.

- Clean categorical variables and encode them.

- Why it’s good for beginners: A practical project related to a familiar domain — easy to connect theory with real-world usage.

Source Code: Car Price Prediction (GitHub)

```python
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error, r2_score

# Load dataset
data = pd.read_csv("CarPrice.csv")
print(data.head())

# Assumption: drop ID-like or free-text columns if your CSV has them, since
# one-hot encoding them adds a near-unique column per car. The names
# 'car_ID' and 'CarName' are guesses; adjust them to match your file.
data = data.drop(columns=['car_ID', 'CarName'], errors='ignore')

# Convert categorical columns
data = pd.get_dummies(data, drop_first=True)

# Features and target
X = data.drop('price', axis=1)
y = data['price']

# Train-test split
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Train model
model = LinearRegression()
model.fit(X_train, y_train)

# Predictions
y_pred = model.predict(X_test)

# Evaluation
print("MSE:", mean_squared_error(y_test, y_pred))
print("R2 Score:", r2_score(y_test, y_pred))
```

9. CO₂ Emissions Prediction

- Goal: Predict CO₂ emissions of cars from engine size, cylinders, and fuel consumption.

- Dataset: CO₂ Emissions Dataset (Kaggle)

- Approach:

- Perform simple & multiple regression on car specifications.

- Visualize emissions vs engine size.

- Why it’s good for beginners: A simple dataset with few features, well suited to visualizations and to understanding regression basics.

Source Code: CO2 Emission Prediction (GitHub)

```python
import pandas as pd
import matplotlib.pyplot as plt
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error, r2_score

# Load dataset (adjust the column names below to match your CSV)
data = pd.read_csv("CO2_emissions.csv")
print(data.head())

# Features and target
X = data[['Engine_Size', 'Cylinders', 'Fuel_Consumption']]
y = data['CO2_Emissions']

# Train-test split
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Train model
model = LinearRegression()
model.fit(X_train, y_train)

# Predictions
y_pred = model.predict(X_test)

# Evaluation
print("MSE:", mean_squared_error(y_test, y_pred))
print("R2 Score:", r2_score(y_test, y_pred))

# Visualization: the model uses three features, so its predictions do not
# form a single line against engine size; plot them as points instead
plt.scatter(X_test['Engine_Size'], y_test, color="blue", label="Actual")
plt.scatter(X_test['Engine_Size'], y_pred, color="red", label="Predicted")
plt.xlabel("Engine Size")
plt.ylabel("CO2 Emissions")
plt.title("Engine Size vs CO2 Emissions")
plt.legend()
plt.show()
```

10. Life Expectancy Prediction

- Goal: Predict the life expectancy of a country based on socio-economic and health factors.

- Dataset: WHO Life Expectancy Dataset (Kaggle)

- Approach:

- Use multiple regression to analyze features like GDP, schooling, BMI, alcohol consumption, etc.

- Train the model to predict life expectancy values.

- Why it’s good for beginners: It connects machine learning with public health and economics. Students get hands-on practice cleaning messy datasets with missing values.

Source Code: Life Expectancy Prediction (GitHub)

```python
import pandas as pd
import matplotlib.pyplot as plt
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error, r2_score

# Load dataset
data = pd.read_csv("Life Expectancy Data.csv")

# Some column names in this CSV carry stray leading or trailing spaces; strip them
data.columns = data.columns.str.strip()
print("Dataset Shape:", data.shape)
print("Columns:", data.columns)

# Drop missing values for simplicity (can use imputation for advanced work)
data = data.dropna()

# Features and target
X = data[['GDP', 'Schooling', 'BMI', 'Alcohol', 'Adult Mortality']]
y = data['Life expectancy']

# Train-test split
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Train model
model = LinearRegression()
model.fit(X_train, y_train)

# Predictions
y_pred = model.predict(X_test)

# Evaluation
print("MSE:", mean_squared_error(y_test, y_pred))
print("R2 Score:", r2_score(y_test, y_pred))

# Visualization: Predicted vs Actual
plt.scatter(y_test, y_pred, alpha=0.6, color="teal")
plt.xlabel("Actual Life Expectancy")
plt.ylabel("Predicted Life Expectancy")
plt.title("Life Expectancy Prediction")
plt.show()
```
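Dropping every row with a missing value can discard a large share of a messy dataset. As one possible alternative, the sketch below fills gaps with the column median using scikit-learn's `SimpleImputer` inside a pipeline. The `toy` frame and its values are invented for illustration and only mimic the shape of the life expectancy data.

```python
import numpy as np
import pandas as pd
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LinearRegression
from sklearn.pipeline import make_pipeline

# Tiny made-up frame with gaps (not real WHO figures)
toy = pd.DataFrame({
    "GDP": [500.0, np.nan, 3000.0, 12000.0, 40000.0, np.nan],
    "Schooling": [8.0, 9.5, np.nan, 13.0, 16.0, 11.0],
    "Life expectancy": [58.0, 62.0, 68.0, 74.0, 81.0, 70.0],
})

X = toy[["GDP", "Schooling"]]
y = toy["Life expectancy"]

# dropna() would keep only the rows with no missing values
print("Rows kept by dropna:", len(toy.dropna()), "of", len(toy))

# The pipeline learns the medians during fit and reuses them at predict time
model = make_pipeline(SimpleImputer(strategy="median"), LinearRegression())
model.fit(X, y)
preds = model.predict(X)
print(preds.round(1))
```

Keeping the imputer inside the pipeline means the medians are computed from the training data only, which avoids leaking information from a test split.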

Datasets for Linear Regression Projects

Here are some excellent sources:

- Kaggle: Massive collection of real-life datasets.

- UCI Machine Learning Repository: Classic datasets (like Boston Housing, Diabetes).

- GitHub: Many developers share the linear regression projects they have built, including source code.

Best Practices for Linear Regression Projects

- Always visualize your data before you fit models.

- Check for multicollinearity for multiple regression.

- Evaluate models with MSE, RMSE, and R².

- Document your projects clearly: state the dataset, the features used, and the results.
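A common way to check for multicollinearity is the variance inflation factor (VIF): regress each feature on the others and compute 1 / (1 − R²). The sketch below is one possible implementation with scikit-learn; the `vif_table` helper and the `demo` columns are hypothetical names created for this example, and the rule of thumb that a VIF above roughly 10 signals trouble is a convention, not a hard limit.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LinearRegression

def vif_table(features: pd.DataFrame) -> pd.Series:
    """Return the variance inflation factor for each column."""
    vifs = {}
    for col in features.columns:
        others = features.drop(columns=col)
        # R-squared from predicting this feature using all the other features
        r2 = LinearRegression().fit(others, features[col]).score(others, features[col])
        vifs[col] = 1.0 / (1.0 - r2)
    return pd.Series(vifs)

# Synthetic data: size in square metres is almost a copy of size in square feet
rng = np.random.default_rng(42)
size = rng.normal(1500, 300, 200)
demo = pd.DataFrame({
    "size_sqft": size,
    "size_sqm": size * 0.0929 + rng.normal(0, 2, 200),
    "age_years": rng.uniform(0, 50, 200),
})

vifs = vif_table(demo)
print(vifs.round(1))
```

In this toy data the two size columns get very large VIFs while `age_years` stays near 1, which suggests dropping one of the size columns before fitting.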


Final thoughts:

One of the best ways to get started in AI and data science is to work on linear regression projects in machine learning. The case studies here range from simple linear regression projects, like predicting student scores, to multiple linear regression projects, like predicting house prices, and working through them is critical to your learning.

As you grow, build linear regression projects with your own datasets and source code in R or Python, and showcase them on GitHub.

1. Can linear regression be used for classification problems?

No. Linear regression is strictly for predicting continuous values. For classification, you should use logistic regression or other classification algorithms like decision trees or random forests.

2. How do I know if linear regression is the right model for my dataset?

If your target variable is continuous, the relationship between the features and the target is approximately linear, the residuals are roughly normally distributed with constant variance, and the features are not strongly multicollinear, then linear regression is a good choice.
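One quick way to test the linearity assumption is to fit a line and look for leftover structure in the residuals. The sketch below uses synthetic data and a hypothetical helper, `residual_curvature`, that measures how strongly the residuals follow a squared term; a residual plot would serve the same purpose.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

rng = np.random.default_rng(1)
x = np.linspace(0, 10, 100).reshape(-1, 1)

# Two synthetic targets: one truly linear in x, one curved
y_linear = 2.0 * x.ravel() + 1.0 + rng.normal(0, 1, 100)
y_curved = 0.5 * x.ravel() ** 2 + rng.normal(0, 1, 100)

def residual_curvature(x, y):
    """Fit a line, then return |correlation| between residuals and a centered squared term."""
    residuals = y - LinearRegression().fit(x, y).predict(x)
    centered_sq = (x.ravel() - x.mean()) ** 2
    return abs(np.corrcoef(residuals, centered_sq)[0, 1])

print("linear target:", round(residual_curvature(x, y_linear), 2))
print("curved target:", round(residual_curvature(x, y_curved), 2))
```

A value near 0 means the residuals look like noise; a value near 1 means the line is missing a curve, so a transformed feature or a different model may fit better.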

3. How do I choose evaluation metrics for linear regression projects?

The most common metrics are Mean Squared Error (MSE), Root Mean Squared Error (RMSE), and R² Score. Choose based on whether you want error in raw units (RMSE) or variance explained (R²).

4. What is overfitting in linear regression?

Overfitting happens when your regression model captures noise in the training data and performs poorly on new data. Using cross-validation and regularization helps prevent it.
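The sketch below illustrates both remedies on synthetic data with many features and few samples, a setting where plain linear regression tends to overfit. The data, the choice of `Ridge`, and `alpha=10.0` are assumptions picked for the example rather than recommended settings.

```python
import numpy as np
from sklearn.linear_model import LinearRegression, Ridge
from sklearn.model_selection import cross_val_score

rng = np.random.default_rng(0)
n_samples, n_features = 40, 30

# Only the first feature actually drives the target; the rest are noise
X = rng.normal(size=(n_samples, n_features))
y = 3.0 * X[:, 0] + rng.normal(0, 1.0, n_samples)

# R-squared on the training data looks excellent for plain linear regression
train_r2 = LinearRegression().fit(X, y).score(X, y)

# 5-fold cross-validation scores each model on data it was not trained on
ols_scores = cross_val_score(LinearRegression(), X, y, cv=5, scoring="r2")
ridge_scores = cross_val_score(Ridge(alpha=10.0), X, y, cv=5, scoring="r2")

print("OLS training R2:", round(train_r2, 3))
print("OLS cross-validated R2:", round(ols_scores.mean(), 3))
print("Ridge cross-validated R2:", round(ridge_scores.mean(), 3))
```

The gap between the training score and the cross-validated score is the symptom of overfitting; Ridge shrinks the coefficients, which narrows that gap on this toy data.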

5. Can I deploy a linear regression model in real-world applications?

Yes. Linear regression models can be easily deployed via Flask, FastAPI, or Django in Python and integrated into business apps for real-time predictions.
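Whatever web framework you choose, the core of a deployment is usually the same: save the trained model to disk, load it once when the service starts, and call it from a request handler. The sketch below shows only that core using joblib; the toy salary data, the file name, and the `predict_salary` function are hypothetical, and the web layer itself is left out.

```python
import os
import tempfile

import joblib
import numpy as np
from sklearn.linear_model import LinearRegression

# Train a toy model (years of experience -> salary, made-up numbers)
X = np.array([[1.0], [2.0], [3.0], [4.0]])
y = np.array([40_000.0, 45_000.0, 50_000.0, 55_000.0])
model = LinearRegression().fit(X, y)

# Persist the trained model to disk
model_path = os.path.join(tempfile.mkdtemp(), "salary_model.joblib")
joblib.dump(model, model_path)

# In a service, load the model once at startup rather than on every request
loaded_model = joblib.load(model_path)

def predict_salary(years_experience: float) -> float:
    """Function a Flask or FastAPI route handler could call."""
    features = np.array([[years_experience]])
    return float(loaded_model.predict(features)[0])

print(round(predict_salary(5.0), 2))  # prints: 60000.0
```

A route handler would parse the request, pass the value to `predict_salary`, and return the result as JSON.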
