Complete Machine Learning Syllabus: Roadmap with Resources

Last Updated: Jul 03, 2025

Machine learning (ML) has become a critical skill set for industries such as healthcare, autonomous vehicles, and finance, fueled by advances in computational power and data availability.

To fully master ML, learners must navigate a comprehensive and structured curriculum, which includes a blend of theoretical foundations and hands-on implementation.

This article lays out a detailed machine learning syllabus and roadmap for 2025, combining diverse resources to help learners understand complex algorithms, deploy models in real-world settings, and solve advanced problems.

Table of contents

- What is Machine Learning?

- How Machine Learning Works

- Core Foundations

- Machine Learning Fundamentals

- Advanced Topics

- Model Evaluation and Optimization

- Machine Learning Projects for Practical Application

- Roadmap: Step-by-Step Learning Path

- Concluding Thoughts…

- FAQs

- What is the subject of machine learning?

- Is machine learning full of math?

- Is machine learning all coding?

- Which language is best for ML?

- Can we learn NLP without ML?

What is Machine Learning?

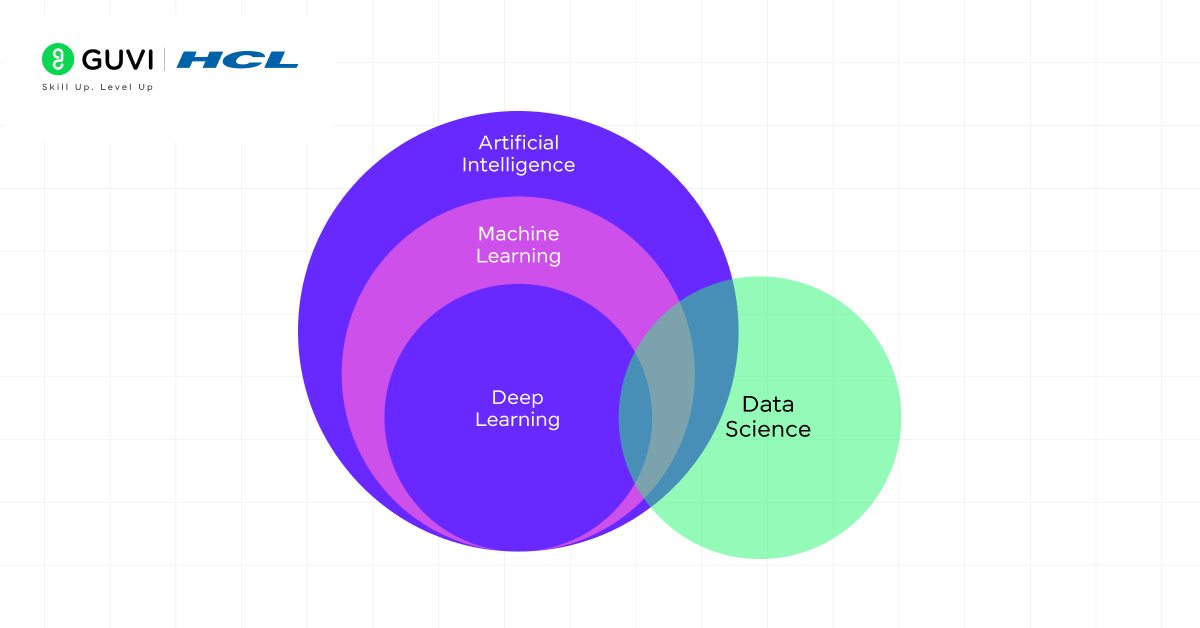

Machine Learning (ML) is a subset of artificial intelligence (AI) that focuses on creating algorithms and statistical models that enable computers to learn patterns from data without explicit programming.

Unlike traditional programming, where rules and logic are explicitly coded, machine learning allows systems to learn and improve from experience automatically. This is particularly useful for tasks like image recognition, natural language processing, and predictive analytics.

PRO TIP: Don’t waste time comparing courses. Pick one and start, because you learn by doing.

That is the approach we follow at GUVI, so I’d suggest GUVI’s Artificial Intelligence and Machine Learning Courses, which offer updated syllabi, tools, and industry-grade projects led by expert machine learning practitioners!

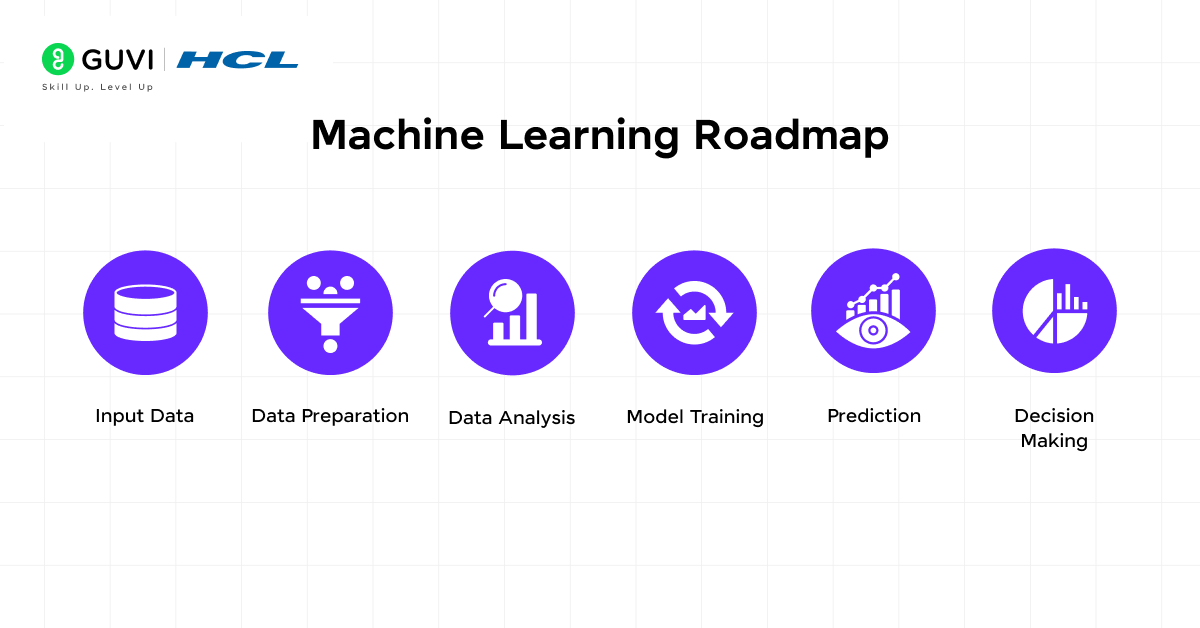

How Machine Learning Works

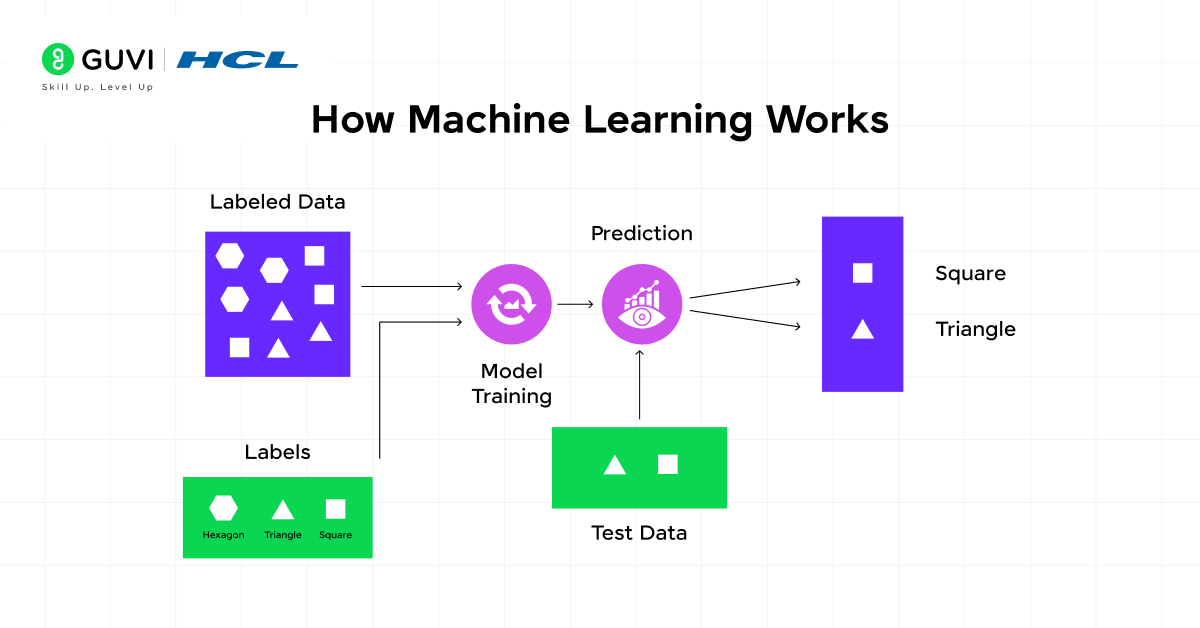

Machine learning works through a systematic process that involves data collection, preprocessing, training, and model evaluation. Below is an in-depth explanation of its workflow:

- Data Collection

- Machine learning relies heavily on vast amounts of data. This data can be structured (like databases) or unstructured (such as images, text, or video). High-quality, labeled datasets are critical for training effective models, especially in supervised learning.

- Data Preprocessing

- Before feeding data into ML algorithms, it’s crucial to clean and prepare it. This stage includes:

- Data Cleaning: Handling missing values, removing outliers, and addressing noise in the dataset.

- Data Transformation: Normalization or standardization to ensure that features have comparable scales.

- Feature Engineering: Creating new features from existing ones to improve model performance.

- Dimensionality Reduction: Techniques like PCA (Principal Component Analysis) reduce the number of features without losing significant information, making the model more efficient.

- Choosing the Algorithm

- The choice of algorithm depends on the problem type (e.g., regression, classification) and the nature of the data. For example:

- Supervised Learning Algorithms: Used when data is labeled. Popular choices include Linear Regression, Support Vector Machines (SVM), and Random Forests.

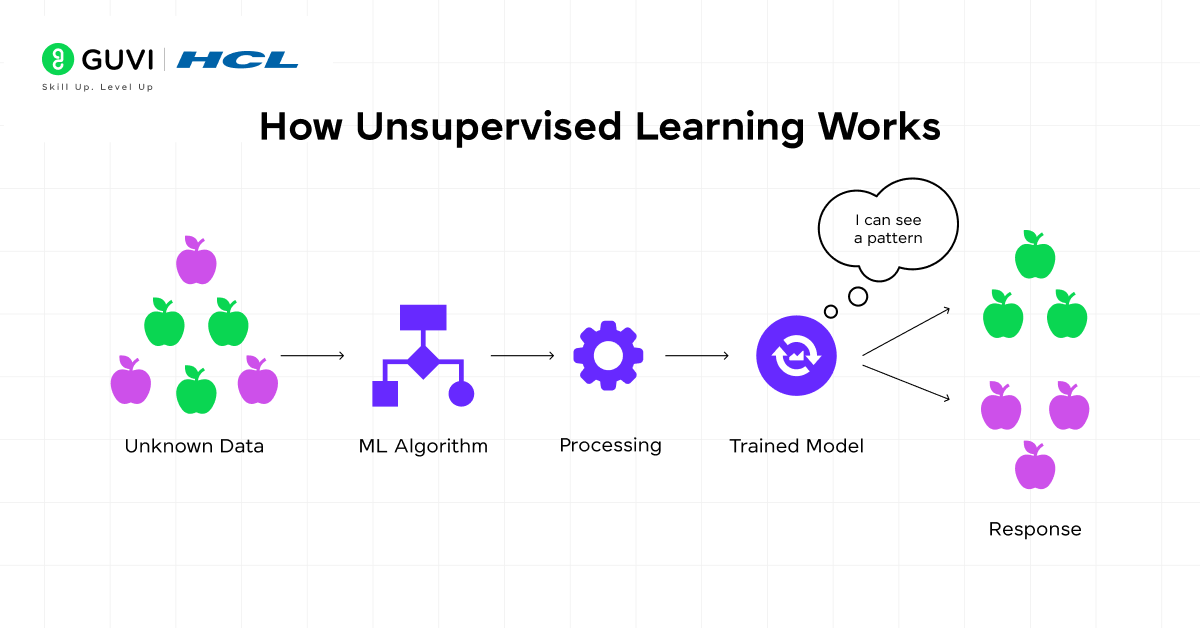

- Unsupervised Learning Algorithms: Applied to unlabeled data. Examples include K-Means Clustering and Hierarchical Clustering.

- Reinforcement Learning: Here, the model learns by interacting with an environment and receiving feedback in the form of rewards and penalties.

- Model Training

- In this step, the algorithm is trained using a portion of the data. The model learns patterns and relationships by optimizing a cost or loss function. In deep learning, for example, backpropagation computes the gradient of the error between predicted and actual values with respect to each weight, and gradient descent uses those gradients to adjust the weights.

- Model Evaluation

- Once trained, the model’s performance is evaluated using unseen test data to ensure it generalizes well. Key metrics include:

- Accuracy for balanced datasets.

- Precision, Recall, and F1-Score for imbalanced datasets.

- Confusion Matrix for visualizing true positives, false positives, true negatives, and false negatives.

- Hyperparameter Tuning

- After evaluating the model, fine-tuning is performed by adjusting hyperparameters like learning rate, batch size, and depth of the tree (in decision trees) to enhance performance. Techniques such as Grid Search or Random Search are often employed here.

- Deployment and Monitoring

- Once the model is optimized, it is deployed into a production environment. Continuous monitoring is essential to detect when performance degrades as data patterns shift, so the model can be retrained or updated, especially in dynamic environments like fraud detection or recommendation systems.
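
The workflow above can be sketched end to end with scikit-learn. This is a minimal illustration rather than a production recipe: the bundled breast cancer dataset, the choice of logistic regression, the F1 scoring, and the grid of `C` values are all assumptions made for the example.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import classification_report
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Data collection: a small labeled dataset bundled with scikit-learn.
X, y = load_breast_cancer(return_X_y=True)

# Hold out unseen test data for the final evaluation.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)

# Preprocessing and algorithm choice, chained so the scaler is fit
# only on the training portion of each cross-validation split.
pipeline = Pipeline([
    ("scale", StandardScaler()),
    ("clf", LogisticRegression(max_iter=1000)),
])

# Training plus hyperparameter tuning: grid search with 5-fold cross-validation.
search = GridSearchCV(
    pipeline,
    param_grid={"clf__C": [0.01, 0.1, 1.0, 10.0]},  # illustrative grid
    cv=5,
    scoring="f1",
)
search.fit(X_train, y_train)

# Model evaluation on the held-out test set.
y_pred = search.predict(X_test)
test_f1 = search.score(X_test, y_test)  # uses the "f1" scorer chosen above
print("Best C:", search.best_params_["clf__C"])
print(classification_report(y_test, y_pred))
```

Deployment and monitoring are outside the scope of this sketch. In practice the fitted `search.best_estimator_` would be saved and its predictions tracked over time.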

Now that we’ve established what machine learning is and how it works, let us get into the core foundations and everything else you need to learn throughout your machine learning journey.

Core Foundations

- Mathematics and Statistics for Machine Learning

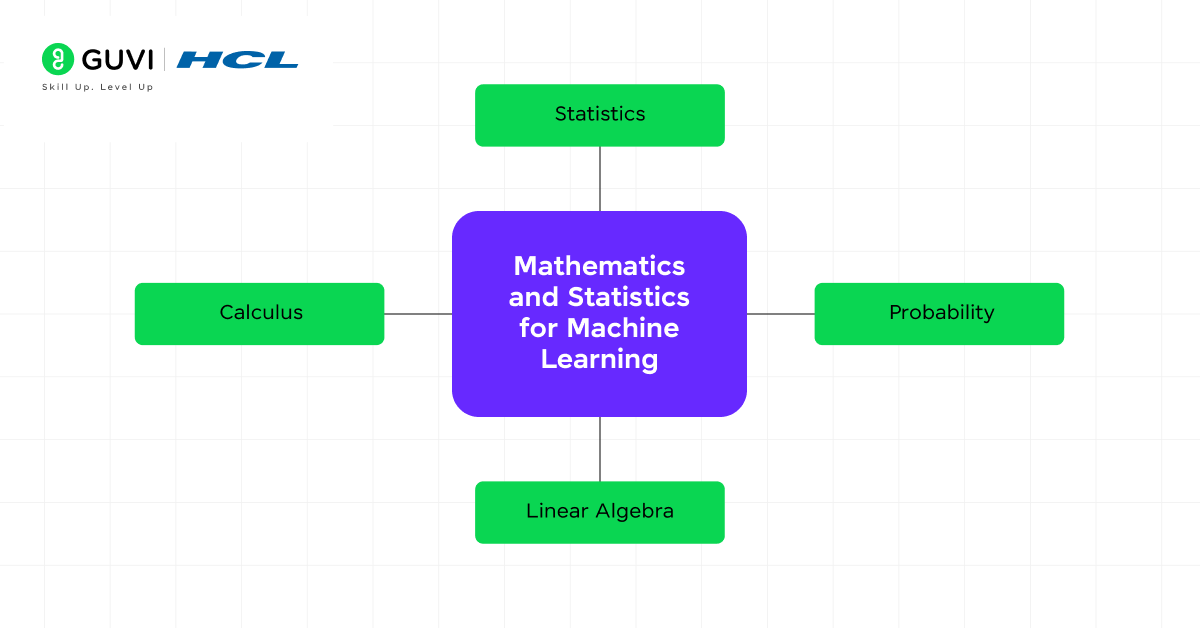

Mathematics is the backbone of machine learning algorithms. Mastery in linear algebra, calculus, probability, and statistics is essential for understanding and optimizing ML models. Let’s delve deeper into how these topics integrate with machine learning and explore specific applications with detailed explanations.

| Concept | Description | Examples | Advanced Resources |

| Linear Algebra | Deals with vector spaces and matrix operations. Essential for representing and manipulating data. | Eigenvalues and eigenvectors are used in Principal Component Analysis (PCA) for dimensionality reduction, improving computational efficiency. | Deep Learning by Ian Goodfellow, Yoshua Bengio, and Aaron Courville; Matrix Computations by Gene H. Golub and Charles F. Van Loan |

| Calculus | Focuses on derivatives and integrals, crucial for optimization. Gradient-based optimization methods use derivatives to find optimal model parameters. | In neural networks, backpropagation computes gradients to minimize the loss function through gradient descent. | Calculus for Machine Learning by Jason Brownlee, Coursera: Mathematics for ML |

| Probability | Provides the framework to quantify uncertainty in predictions. Central to models like Bayesian networks and Gaussian processes. | Bayes’ theorem plays a key role in Naive Bayes classifiers, while Gaussian distributions are used in Gaussian Mixture Models for clustering. | Probability for Machine Learning by Jason Brownlee |

| Statistics | Essential for understanding data distribution, hypothesis testing, and model evaluation. | Hypothesis testing and p-values are fundamental in determining model performance and validating hypotheses in A/B testing. | All of Statistics by Larry Wasserman, ISLR by James et al. |
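
To make the calculus row concrete, here is a small sketch of gradient descent fitting a straight line by minimizing mean squared error. The function name `fit_line`, the learning rate, the step count, and the toy data are assumptions chosen for illustration.

```python
def fit_line(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y ≈ w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Residuals of the current predictions.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of MSE = (1/n) * sum(error^2) with respect to w and b.
        grad_w = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2.0 / n) * sum(errors)
        # Step against the gradient to reduce the loss.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1
w, b = fit_line(xs, ys)
print(round(w, 3), round(b, 3))
```

On this noise-free data the estimates converge toward the generating values, w = 2 and b = 1. Neural networks apply the same update rule, with backpropagation supplying the gradients for many more parameters.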

- Programming Foundations: Python for Machine Learning

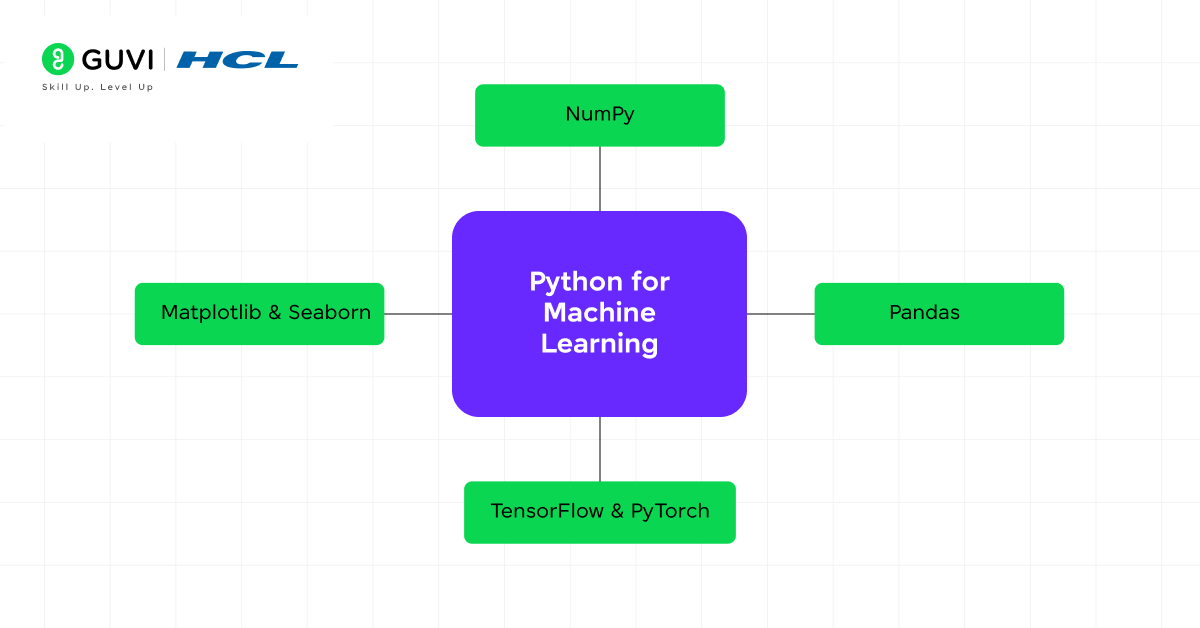

Python’s ecosystem of libraries is the bedrock of machine learning. Libraries like NumPy, Pandas, and Matplotlib make data manipulation and visualization seamless, while Scikit-learn and TensorFlow offer tools to implement machine learning algorithms.

| Library | Description | Common Use Cases | Advanced Resources |

| NumPy | Provides high-performance operations on arrays and matrices. Essential for linear algebra operations in machine learning. | Vectorized numerical computation, matrix operations, and array manipulation. | Python Data Science Handbook by Jake VanderPlas |

| Pandas | A library for data manipulation, especially useful for handling structured data (e.g., tabular data). | Data cleaning, feature engineering, working with time series data. | Python for Data Analysis by Wes McKinney |

| Matplotlib & Seaborn | Libraries for plotting and statistical data visualization. | Visualizing loss curves, confusion matrices, and ROC curves for model evaluation. | Python Data Visualization Cookbook by Igor Milovanovic |

| TensorFlow & PyTorch | Deep learning frameworks that offer tools to build and train neural networks at scale. | Building custom deep neural networks, deploying models in production environments. | Deep Learning with Python by François Chollet, PyTorch documentation |
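
As a small taste of what NumPy's array operations look like, the sketch below standardizes the columns of a feature matrix without any explicit loops. The feature values and their meaning (age, income) are hypothetical.

```python
import numpy as np

# Hypothetical feature matrix: rows are samples, columns are (age, income).
X = np.array([
    [25.0, 40_000.0],
    [32.0, 52_000.0],
    [47.0, 88_000.0],
    [51.0, 61_000.0],
])

# Column-wise mean and standard deviation, computed without Python loops.
mean = X.mean(axis=0)
std = X.std(axis=0)

# Broadcasting applies the (2,)-shaped statistics to every row of the (4, 2) matrix.
X_standardized = (X - mean) / std
print(X_standardized)
```

After this step each column has zero mean and unit standard deviation, which puts features measured in very different units on a comparable scale.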

Machine Learning Fundamentals

- Supervised Learning

In supervised learning, the model learns from labeled data. The table below covers algorithms that are widely used in practice, with a short description, typical applications, and resources for each.

| Algorithm | Description | Applications | Advanced Resources |

| Linear Regression | Predicts continuous outputs based on linear relationships between input features and target values. | Predicting stock prices, housing market trends. | Elements of Statistical Learning (ESL) |

| Logistic Regression | A classification algorithm that models the probability of a binary outcome. | Medical diagnosis (predicting disease presence). | Hands-On ML with Scikit-learn & TensorFlow |

| Decision Trees | Tree-like structures for making decisions based on feature splits. | Credit scoring, fraud detection. | Scikit-learn Documentation: Decision Trees |

| Random Forest | An ensemble method combining multiple decision trees to reduce overfitting and variance in predictions. | Predicting customer churn, product recommendations. | Random Forests Explained: Towards Data Science |
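
The "feature splits" behind decision trees can be illustrated with a deliberately simplified sketch: it scores candidate thresholds on a single feature using Gini impurity and keeps the best one. The helper names and the credit data are hypothetical, and real libraries add many refinements (multiple features, recursive splitting, stopping rules).

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 0.0 for a pure node, higher for more mixed labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_split(values, labels):
    """Return (threshold, weighted_impurity) of the best 'value <= threshold' split."""
    best = (None, gini(labels))
    # Splitting at the maximum value would leave the right side empty, so skip it.
    for threshold in sorted(set(values))[:-1]:
        left = [l for v, l in zip(values, labels) if v <= threshold]
        right = [l for v, l in zip(values, labels) if v > threshold]
        weighted = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if weighted < best[1]:
            best = (threshold, weighted)
    return best


# Hypothetical credit data: annual income (in thousands) and whether the loan defaulted.
incomes = [20, 25, 30, 45, 60, 80]
defaulted = [1, 1, 1, 0, 0, 0]
threshold, impurity = best_split(incomes, defaulted)
print(threshold, impurity)  # prints: 30 0.0
```

A full decision tree repeats this search on each resulting subset, and a random forest trains many such trees on resampled data and averages their votes.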

- Unsupervised Learning

Unsupervised learning finds hidden patterns in data without explicit labels, essential for tasks like clustering and anomaly detection.

| Algorithm | Description | Applications | Advanced Resources |

| K-Means Clustering | Groups data into k clusters by assigning each point to the nearest centroid and minimizing within-cluster squared distances. | Customer segmentation, image compression. | An Introduction to Statistical Learning (ISLR) |

| Principal Component Analysis (PCA) | A dimensionality reduction technique that transforms features into a smaller set of components while preserving variance. | Used to speed up algorithms by reducing data dimensionality (e.g., image recognition). | Pattern Recognition and Machine Learning by Bishop |

| DBSCAN (Density-Based Clustering) | Clusters data based on the density of points, better suited for handling noise and irregular clusters. | Anomaly detection, spatial data analysis. | DBSCAN Algorithm Explained: Towards Data Science |
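
The PCA row can be sketched directly from the linear algebra it rests on: center the data, take the eigenvectors of the covariance matrix, and project onto the ones with the largest eigenvalues. The function name `pca` and the synthetic data are assumptions for illustration. In practice a library implementation such as scikit-learn's would normally be used.

```python
import numpy as np


def pca(X, n_components):
    """Project X onto its top principal components via eigendecomposition."""
    X_centered = X - X.mean(axis=0)
    covariance = np.cov(X_centered, rowvar=False)
    # eigh is intended for symmetric matrices and returns eigenvalues in ascending order.
    eigenvalues, eigenvectors = np.linalg.eigh(covariance)
    order = np.argsort(eigenvalues)[::-1][:n_components]
    components = eigenvectors[:, order]
    explained_ratio = eigenvalues[order] / eigenvalues.sum()
    return X_centered @ components, components, explained_ratio


rng = np.random.default_rng(0)
x = rng.normal(size=200)
# Two strongly correlated features: the second is roughly twice the first.
X = np.column_stack([x, 2.0 * x + rng.normal(scale=0.1, size=200)])
projected, components, explained_ratio = pca(X, n_components=1)
print(projected.shape, explained_ratio)
```

Because the two features are almost perfectly correlated, a single component captures nearly all of the variance, which is the situation where dimensionality reduction loses the least information.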

Advanced Topics

- Deep Learning

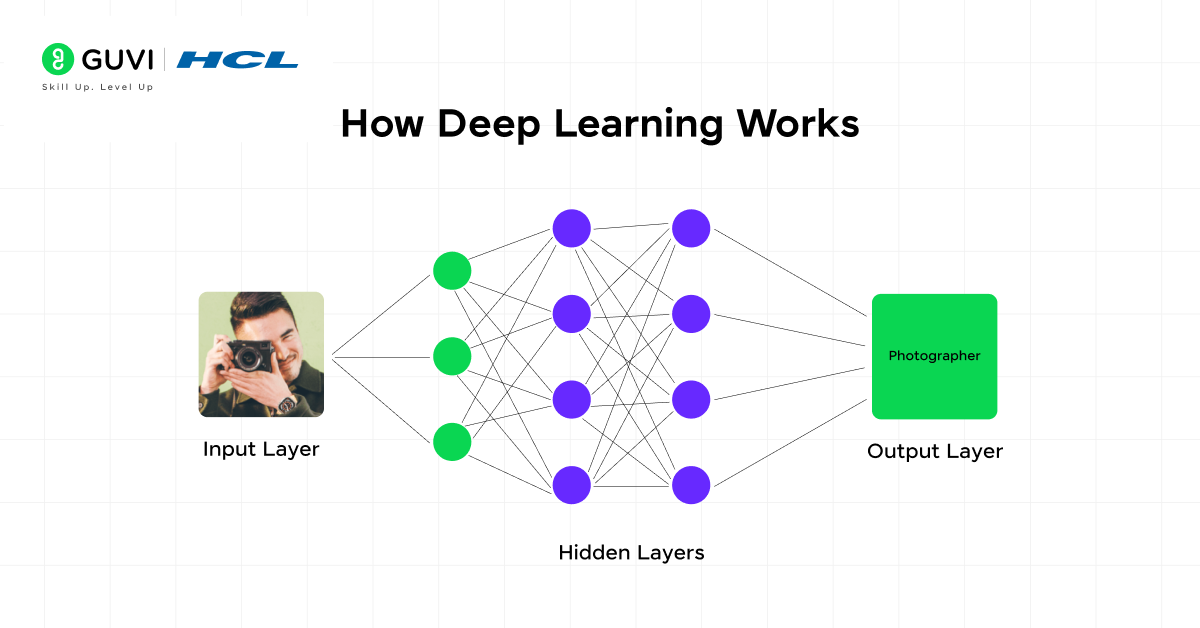

Deep learning techniques use neural networks with multiple layers to model complex patterns in high-dimensional data. As of 2025, neural architectures like CNNs (for image processing) and Transformers (for NLP) dominate many fields.

| Topic | Description | Applications | Advanced Resources |

| Neural Networks | Basic building blocks of deep learning models that use layers of neurons to learn representations. | Speech recognition, recommendation systems. | Deep Learning by Ian Goodfellow |

| Convolutional Neural Networks (CNNs) | Specialized for grid-like data (e.g., images). CNNs use convolution layers to extract features hierarchically. | Image classification, object detection. | Stanford CS231n: CNN for Visual Recognition |

| Recurrent Neural Networks (RNNs) | Designed for sequential data, capturing dependencies across time steps. | Time-series prediction, language modeling. | Coursera: Deep Learning Specialization by Andrew Ng |

| Transformers | State-of-the-art architectures for NLP tasks that replace the recurrence of RNNs with attention mechanisms to model long-range dependencies. | Text generation (GPT-3), language understanding (BERT), machine translation. | Attention Is All You Need by Vaswani et al. |
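
The attention mechanism at the heart of Transformers is compact enough to sketch in NumPy. The code below implements scaled dot-product attention, softmax(QKᵀ / √d_k) V, as defined in the Vaswani et al. paper. The sequence length, model width, and the reuse of the same matrix for Q, K, and V are simplifications made for this example.

```python
import numpy as np


def softmax(scores):
    """Row-wise softmax, shifted by the row max for numerical stability."""
    shifted = scores - scores.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def scaled_dot_product_attention(Q, K, V):
    """Return (output, weights) for softmax(Q K^T / sqrt(d_k)) V."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)
    weights = softmax(scores)
    return weights @ V, weights


rng = np.random.default_rng(0)
seq_len, d_model = 4, 8  # illustrative sizes, not taken from any particular model
X = rng.normal(size=(seq_len, d_model))
# In a real Transformer, Q, K, and V come from learned linear projections of X;
# here X is reused directly to keep the sketch short.
output, weights = scaled_dot_product_attention(X, X, X)
print(output.shape, weights.sum(axis=1))
```

Each row of `weights` sums to 1 and says how much every position contributes to that position's output, which is how a token can draw on context far away in the sequence.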

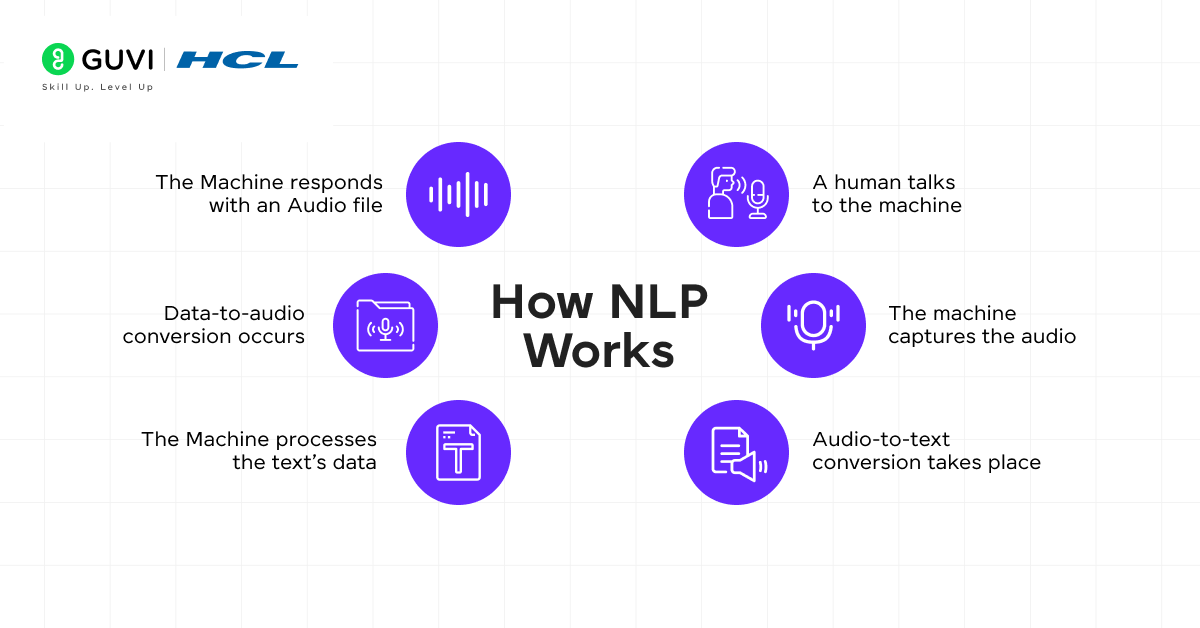

- Natural Language Processing (NLP)

NLP has grown rapidly, leveraging deep learning techniques for understanding human language. Key models like BERT and GPT represent massive advancements in language representation.

| Technique | Description | Applications | Advanced Resources |

| Text Preprocessing | Techniques for cleaning and transforming raw text into a structured format for models. | Tokenization, stop-word removal, stemming, and lemmatization are crucial for NLP tasks like sentiment analysis. | Speech and Language Processing by Daniel Jurafsky and James Martin |

| Word Embeddings | Methods for transforming words into numerical vectors to capture semantic meaning. | Pre-trained models like Word2Vec, GloVe, and FastText represent words in a continuous vector space, improving the quality of downstream NLP tasks like sentiment analysis. | Coursera: NLP with Deep Learning |

| Transformers in NLP | Neural architectures using self-attention for tasks like text classification and summarization. | BERT (Bidirectional Encoder Representations from Transformers) for masked language modeling and GPT-3 for text generation. | Attention Is All You Need by Vaswani et al.; BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding by Devlin et al. |
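
The text preprocessing row can be illustrated with the standard library alone. This sketch lowercases a review, tokenizes it, removes stop words, and counts the remaining tokens. The stop-word list is a tiny hand-picked assumption, and stemming and lemmatization are left out because they usually rely on an NLP library.

```python
import re
from collections import Counter

# A tiny illustrative stop-word list; real projects typically use a library-provided one.
STOP_WORDS = {"a", "an", "and", "the", "is", "it", "was", "this", "of", "to"}


def preprocess(text):
    """Lowercase, tokenize on letters and apostrophes, and drop stop words."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return [t for t in tokens if t not in STOP_WORDS]


def bag_of_words(tokens):
    """Count how often each remaining token occurs."""
    return Counter(tokens)


review = "The plot was thin, but the acting was great and the ending was great."
tokens = preprocess(review)
counts = bag_of_words(tokens)
print(tokens)
# prints: ['plot', 'thin', 'but', 'acting', 'great', 'ending', 'great']
```

Counts like these are the simplest numerical representation of text. Word embeddings replace them with dense vectors that also capture similarity between words.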

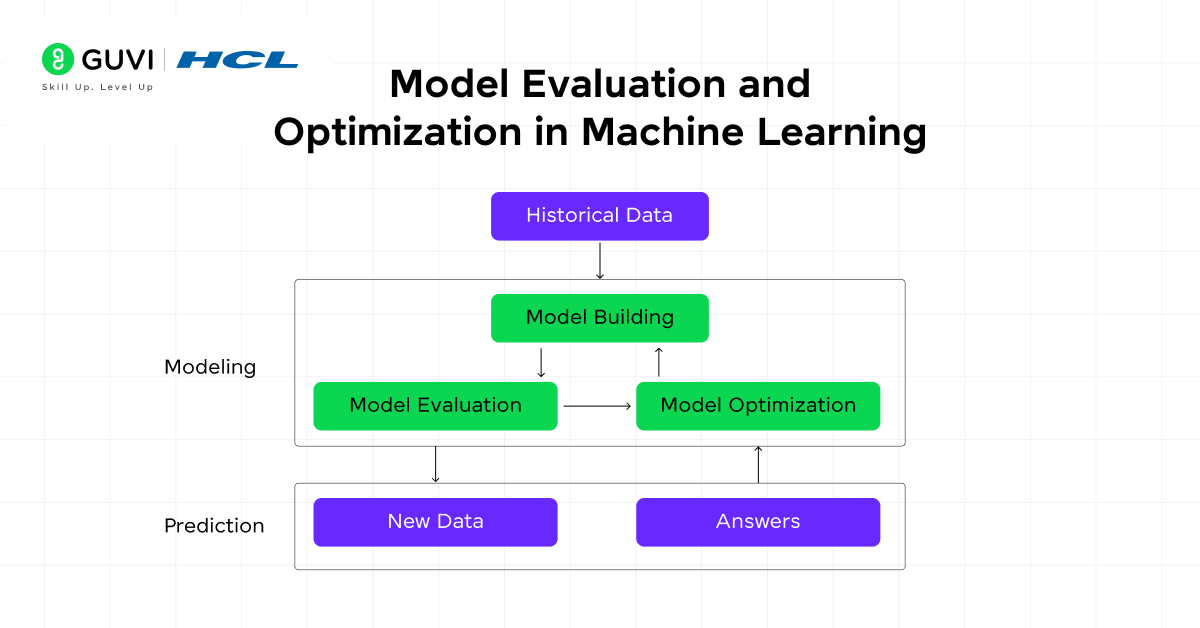

Model Evaluation and Optimization

Model evaluation is essential for assessing the performance and generalization capabilities of machine learning models. Understanding metrics and tuning models to avoid overfitting or underfitting is key to practical implementation.

- Model Evaluation Metrics

| Metric | Description | Use Cases | Advanced Resources |

| Accuracy | Percentage of correct predictions out of total predictions, typically used for balanced datasets. | Used in classification problems like spam detection, image classification. | Pattern Recognition and Machine Learning by Christopher M. Bishop |

| Precision & Recall | Precision measures the accuracy of positive predictions, while recall measures the proportion of actual positives identified. | Used in medical diagnostics to evaluate the trade-off between false positives and false negatives. | Precision-Recall Explained: Towards Data Science |

| F1-Score | The harmonic mean of precision and recall. Best used on imbalanced datasets where one class is more prevalent. | Classifying rare diseases where positive cases are far fewer than negatives. | Coursera: Evaluating Machine Learning Models |

| AUC-ROC | Area under the receiver operating characteristic curve, measuring the trade-off between true positive and false positive rates. | Fraud detection, medical tests. | AUC-ROC Curve: Towards Data Science |
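
To see why accuracy alone can mislead on imbalanced data, the sketch below computes accuracy, precision, recall, and F1 from scratch for a binary problem. The function names and the toy labels are assumptions for illustration.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, fp, fn, tn) for binary labels where 1 is the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return tp, fp, fn, tn


def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, recall, and F1, guarding against division by zero."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Imbalanced toy labels: only 2 positives among 10 samples.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 0, 0, 0, 0, 0, 0, 0, 0, 1]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
# prints: {'accuracy': 0.8, 'precision': 0.5, 'recall': 0.5, 'f1': 0.5}
```

The model is right 80% of the time, yet it finds only half of the positives and half of its positive predictions are wrong, which is exactly what precision, recall, and F1 expose.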

- Optimization Techniques

Tuning machine learning models involves a combination of hyperparameter optimization and regularization methods to achieve optimal performance.

| Technique | Description | Example | Advanced Resources |

| Cross-Validation | A method of splitting data into multiple training and testing sets to validate model performance. | K-Fold Cross Validation helps evaluate model stability and detect overfitting. | Python Machine Learning by Sebastian Raschka |

| Grid Search & Random Search | Techniques for systematically or randomly searching through hyperparameter combinations to find optimal settings. | Hyperparameter tuning in Random Forest or XGBoost can dramatically improve accuracy on classification tasks. | Hyperparameter Tuning: Sklearn Documentation |

| Regularization (L1/L2) | Methods like Lasso (L1) and Ridge (L2) penalize model complexity to prevent overfitting. | Lasso regression can shrink some coefficients exactly to zero, keeping only the most important features and making models more interpretable. | Statistical Learning with Sparsity by Hastie, Tibshirani, and Wainwright |
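
The splitting logic behind K-Fold Cross Validation can be sketched in a few lines of plain Python. The generator name `k_fold_indices` is hypothetical, and the sketch skips shuffling, which is usually applied first in practice.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for k contiguous, near-equal folds."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size


folds = list(k_fold_indices(n_samples=10, k=3))
for train, test in folds:
    print("test fold:", test)
# prints:
# test fold: [0, 1, 2, 3]
# test fold: [4, 5, 6]
# test fold: [7, 8, 9]
```

Every sample lands in exactly one test fold, so training and scoring the model once per fold gives k performance estimates whose spread indicates how stable the model is.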

Machine Learning Projects for Practical Application

Machine learning cannot be mastered through theory alone. Hands-on projects solidify understanding, build a portfolio, and develop skills needed for the industry. I’m listing a few popular ones below:

| Project | Description | Tools & Resources |

| Image Classification with CNNs | Build a convolutional neural network using TensorFlow or PyTorch to classify images from the CIFAR-10 dataset. | Kaggle: CIFAR-10 Dataset, TensorFlow Documentation |

| Sentiment Analysis on Movie Reviews | Implement NLP techniques and a recurrent neural network (RNN) to predict movie review sentiments using pre-trained word embeddings. | Kaggle: Sentiment Analysis Dataset, PyTorch NLP Tutorials |

| Reinforcement Learning with OpenAI Gym | Build an agent to solve an environment from OpenAI Gym using deep reinforcement learning. | OpenAI Gym, Deep Reinforcement Learning Course |

| Predicting Housing Prices with XGBoost | Train a regression model using XGBoost to predict house prices based on location, size, and amenities. | Kaggle: House Price Dataset, XGBoost Documentation |

Roadmap: Step-by-Step Learning Path

This roadmap outlines a structured approach to progressing from beginner to advanced machine learning proficiency, with emphasis on practical applications, theoretical foundations, and projects.

| Stage | Topics | Suggested Duration | Outcome |

| Stage 1: Foundations | Mathematics (Linear Algebra, Probability), Python programming. | 1-2 months | Develop foundational knowledge for ML models. |

| Stage 2: Supervised Learning | Linear Regression, Logistic Regression, Decision Trees, Random Forests, and model evaluation. | 2-3 months | Build and evaluate fundamental ML models. |

| Stage 3: Unsupervised Learning | Techniques like K-Means, DBSCAN, and PCA. | 1-2 months | Learn to identify patterns without labeled data. |

| Stage 4: Deep Learning | Neural Networks, CNNs, RNNs, Transfer Learning. | 3-4 months | Implement state-of-the-art deep learning models. |

| Stage 5: Advanced Topics | Reinforcement Learning, NLP, GANs, Transformers. | 2-3 months | Handle complex real-world tasks. |

| Stage 6: Capstone Projects | Hands-on projects in areas like Computer Vision, NLP, and Reinforcement Learning. | Ongoing | Build a portfolio to showcase expertise. |

Concluding Thoughts…

The machine learning syllabus that we discussed above is designed to guide you from foundational concepts to advanced applications in fields like computer vision, NLP, and reinforcement learning.

By following a structured, resource-driven roadmap, you will not only gain theoretical knowledge but also hands-on experience through real-world projects.

I hope you have thoroughly gone through the whole blog and have found what you need to begin your machine-learning journey. Do let me know what you thought of it and any doubts you may have in the comments section below.

FAQs

What is the subject of machine learning?

Machine learning focuses on creating algorithms that enable computers to learn from data and make predictions or decisions without explicit programming.

Is machine learning full of math?

Yes, machine learning relies heavily on mathematical concepts like statistics, linear algebra, and calculus to build and optimize models. Math is only part of the picture, though: programming, data handling, and model evaluation matter just as much.

Is machine learning all coding?

No. While coding is essential, machine learning also involves data analysis, model selection, and understanding algorithms, so it requires both coding and theoretical knowledge.

Which language is best for ML?

Python is the most widely used language for machine learning due to its vast libraries and ease of use, though R and Java are also popular.

Can we learn NLP without ML?

While some NLP concepts can be learned independently, machine learning is essential for more advanced tasks like text generation and language models.
