What is ChatGPT, DALL-E, and Generative AI?

Last updated: Jun 06, 2025

Today, we live in a world where machines can create art, write stories, and engage in human-like conversations, thanks to generative AI tools such as ChatGPT and DALL-E. These technologies are changing artificial intelligence, offering new ways to interact with and create content using computers.

In this article, we will discuss generative AI at length and explore two of its most talked-about applications: ChatGPT and DALL-E. We’ll break down what these technologies are, how they work, and the impact they’re having on various industries.

Table of contents

- What is Generative AI?

- Core Concepts:

- Brief History of AI

- Types of Generative AI

- How Generative AI Works

- Applications Across Industries

- ChatGPT

- What is ChatGPT?

- How ChatGPT Works

- Technical Architecture

- Applications and Use Cases

- DALL-E

- The Concept Behind DALL-E

- DALL-E's Image Generation Process

- Comparing DALL-E with Other Image Generation Tools

- Real-World Applications

- Table: ChatGPT vs DALL-E

- Concluding Thoughts…

- FAQs

- Is ChatGPT considered a type of generative AI?

- What is DALL-E in ChatGPT?

- How does AI differ from generative AI?

- What category of AI does ChatGPT belong to?

What is Generative AI?

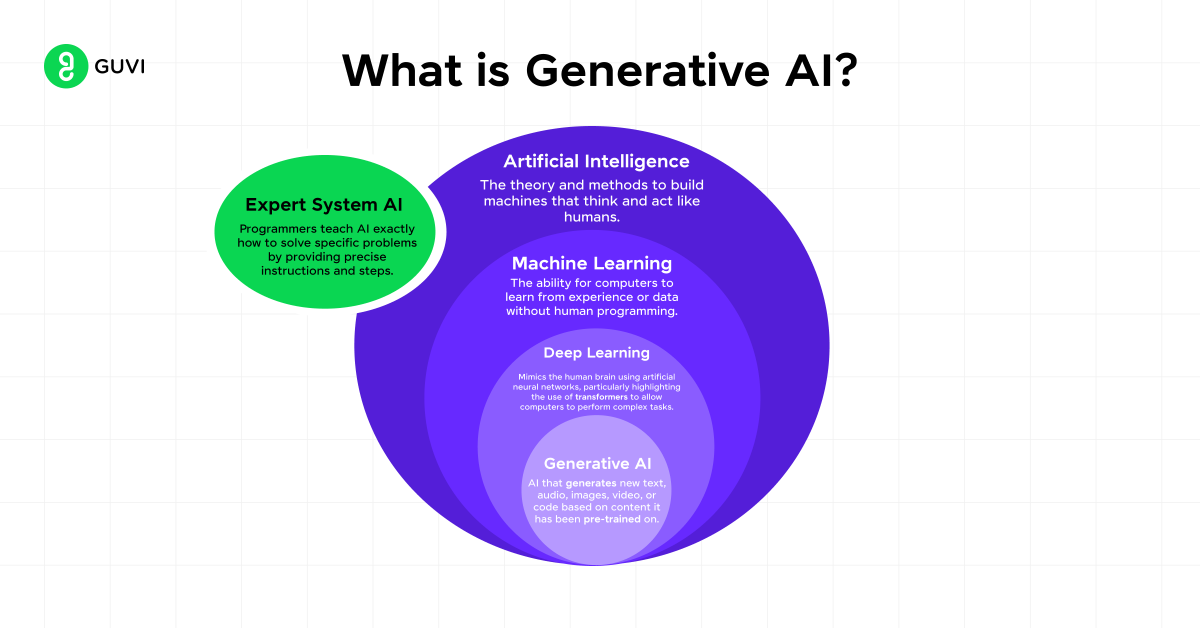

Generative AI is a subset of artificial intelligence focused on creating new content, whether text, images, or other forms of data, that resembles the data it was trained on. It is changing how machines are used to produce content.

Core Concepts:

- Deep Learning: Utilizes deep neural networks with multiple layers to capture complex patterns in data.

- Neural Networks: Computational models inspired by the human brain, consisting of interconnected nodes (neurons) that process information in layers.

- Unsupervised Learning: Generative models often employ unsupervised or self-supervised learning, where they learn the underlying structure of input data without explicit human-provided labels.

- Models: Common models include Variational Autoencoders (VAEs), Generative Adversarial Networks (GANs), and Transformers like GPT.
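To make the "layers of interconnected neurons" idea concrete, here is a minimal sketch of a forward pass through a two-layer network in plain Python. The weights, layer sizes, and function names are hypothetical values chosen for illustration; a real model learns its weights from data and has many more of them.

```python
import math


def relu(x):
    """Pass positive values through, clamp negatives to zero."""
    return max(0.0, x)


def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dense(inputs, weights, biases, activation):
    """One fully connected layer: each neuron takes a weighted sum of all inputs."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hypothetical hand-picked weights: 2 inputs -> 3 hidden neurons -> 1 output.
HIDDEN_W = [[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]]
HIDDEN_B = [0.0, 0.1, 0.2]
OUTPUT_W = [[0.7, -0.4, 0.9]]
OUTPUT_B = [0.05]


def forward(inputs):
    """Run the inputs through the hidden layer, then the output layer."""
    hidden = dense(inputs, HIDDEN_W, HIDDEN_B, relu)
    return dense(hidden, OUTPUT_W, OUTPUT_B, sigmoid)[0]


print(forward([1.0, 2.0]))
```

"Deep" learning stacks many such layers, so later layers can combine the simpler patterns detected by earlier ones.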

Brief History of AI

Let us look at how AI has evolved and learn about the difference between traditional AI and the AI we have today.

1950s-1980s (Early AI):

- Focused on symbolic AI and rule-based systems, which were rigid and unable to generalize beyond their programming.

- Key Developments: The Turing Test, expert systems, and the first neural network models (Perceptrons).

1990s-2010s (Machine Learning):

- Introduction of data-driven approaches where algorithms learned from examples rather than explicit programming.

- Key Developments: Decision trees, support vector machines, and the resurgence of neural networks.

2010s-Present (Deep Learning):

- Rapid advancements in neural networks with deeper architectures have led to breakthroughs in computer vision, NLP, and generative tasks.

- Key Developments: AlexNet (for image classification), GPT (for text generation), and GANs (for image synthesis).

Types of Generative AI

There are several types of generative AI models, each with its unique approach:

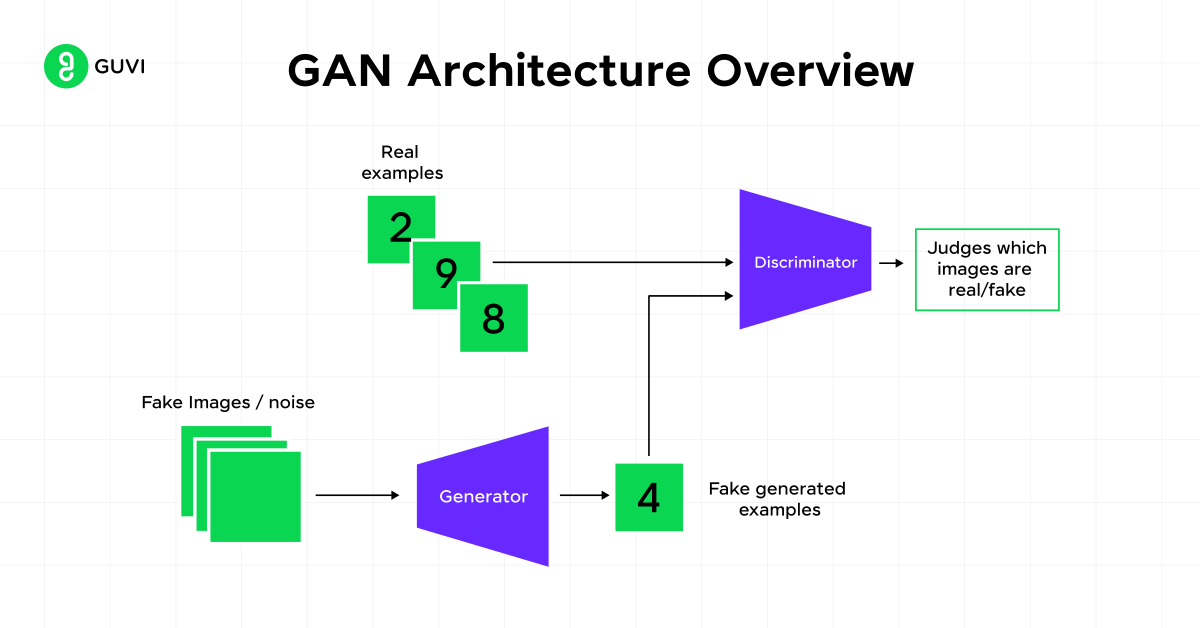

- Generative Adversarial Networks (GANs): GANs consist of two neural networks, a generator and a discriminator, that compete against each other. The generator creates new data instances, while the discriminator evaluates them. The goal is for the generator to produce data indistinguishable from real data, fooling the discriminator.

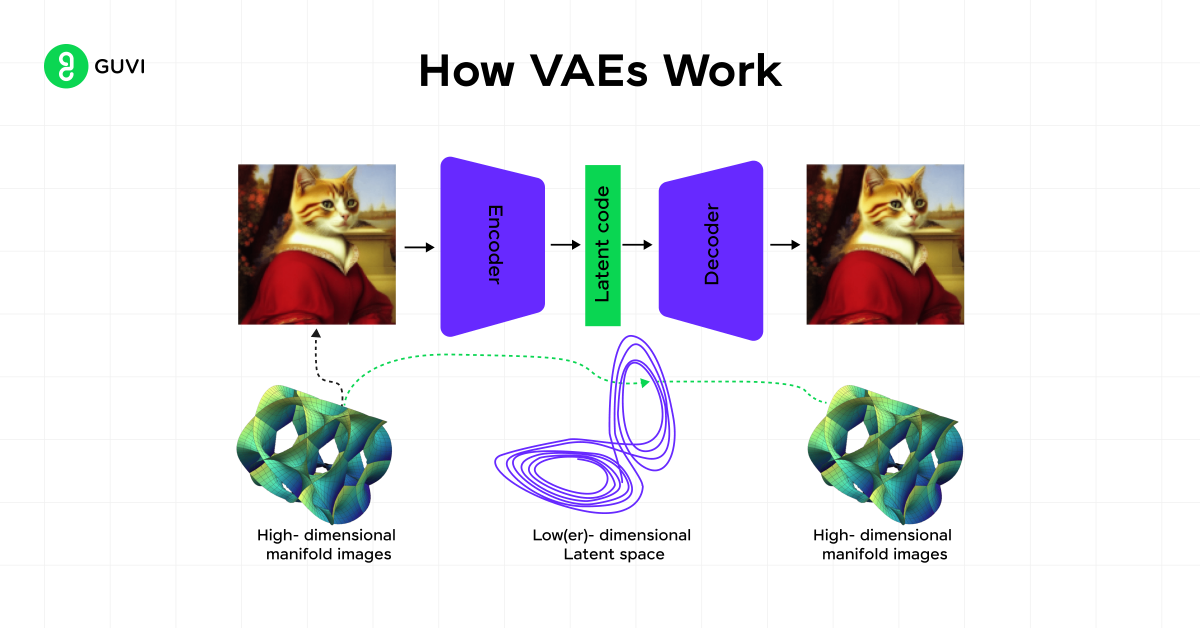

- Variational Autoencoders (VAEs): VAEs encode input data into a latent space and then decode it back to the original data domain. Unlike traditional autoencoders, VAEs impose a probabilistic structure on the latent space, allowing them to generate new data by sampling from this space.

- Transformer-based Models: Transformer-based models, like GPT, use self-attention mechanisms to process input data, allowing them to capture long-range dependencies in sequences. These models are particularly effective for text generation, where context and coherence are crucial.

- Diffusion Models: Diffusion models generate data by iteratively refining noise into structured output, reversing a diffusion process. These models are known for producing high-quality images and are gaining traction in fields where fine detail is crucial.

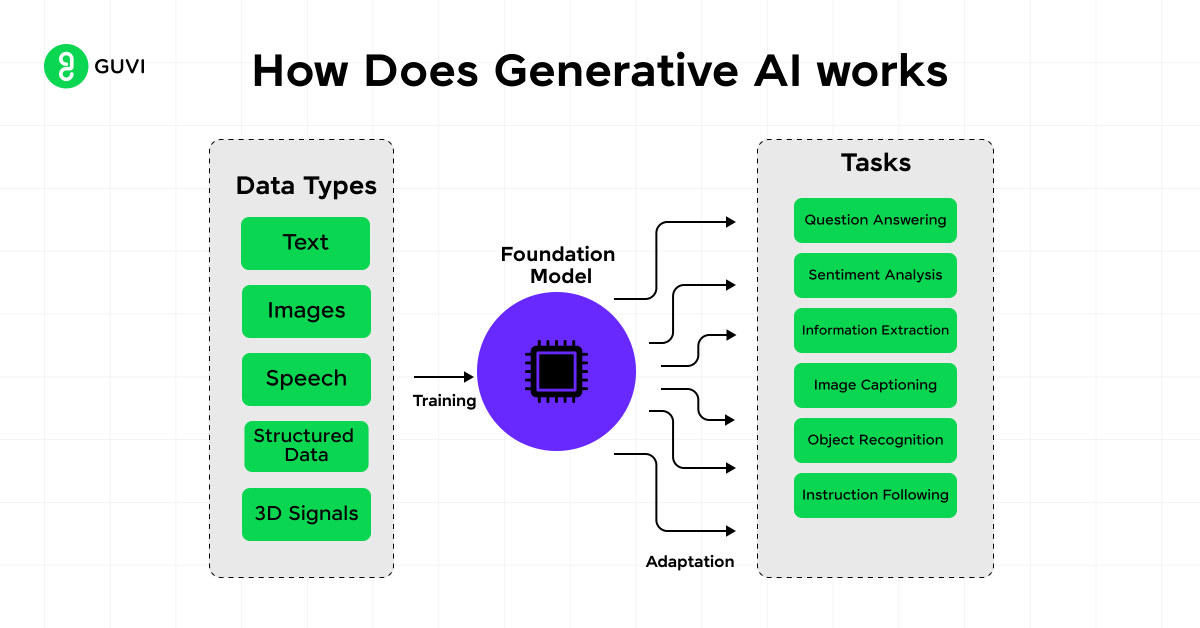
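The "refining noise into structured output" idea behind diffusion models can be sketched in a few lines. The example below assumes one common formulation, in which clean data is blended with Gaussian noise according to a linear noise schedule; the schedule values and function names are illustrative assumptions, not the settings of any particular product. In a real model, a trained neural network predicts the noise; here the true noise is passed back in to show that the step is invertible.

```python
import math
import random


def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear noise schedule (num_steps >= 2)."""
    alpha_bars, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, alpha_bar, rng):
    """Forward process: blend the clean signal with Gaussian noise."""
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [
        math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * e
        for x, e in zip(x0, noise)
    ]
    return xt, noise


def remove_noise(xt, predicted_noise, alpha_bar):
    """Reverse direction: estimate the clean signal from a noise prediction."""
    return [
        (x - math.sqrt(1.0 - alpha_bar) * e) / math.sqrt(alpha_bar)
        for x, e in zip(xt, predicted_noise)
    ]


rng = random.Random(0)
alpha_bars = make_alpha_bars(1000)
clean = [1.0, -0.5, 0.25]  # stand-in for pixel values
noisy, true_noise = add_noise(clean, alpha_bars[499], rng)
# Stand-in for a trained network: we hand back the exact noise that was added.
restored = remove_noise(noisy, true_noise, alpha_bars[499])
print(noisy)
print(restored)
```

The quality of a diffusion model comes down to how well its network predicts the noise at each step; generation repeats the reverse step many times, starting from pure noise.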

How Generative AI Works

Generative AI typically uses deep learning algorithms to learn patterns and features from large datasets. Let’s look at the process in detail:

1. Training Phase:

- Data Collection: Massive datasets are collected to train models, such as text from the internet for language models or images for visual models.

- Model Training: The AI model learns to recognize patterns in the data by adjusting the weights of connections between neurons in the network.

- Loss Function: During training, the model uses a loss function to measure how far its predictions are from the actual data, iteratively refining itself to reduce this loss.

2. Generation Phase:

- Sampling: Once trained, the model generates new content by sampling from the learned probability distributions.

- Coherence: Decoding techniques like beam search and sampling settings like temperature scaling control the trade-off between predictable, coherent output and varied, creative output.

3. Feedback Loop:

- Human-in-the-Loop: Human feedback is often incorporated to fine-tune models, especially in sensitive applications like content moderation.

- Continuous Learning: Models can be continuously updated with new data to improve performance and adapt to changing contexts.
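The training phase described above (adjust weights, measure the loss, repeat) can be sketched with the smallest possible model: a line with one weight and one bias, fitted by gradient descent on mean squared error. The data, learning rate, and step count are illustrative assumptions; generative models apply the same loop to billions of weights.

```python
def mse(weight, bias, data):
    """Loss function: average squared gap between predictions and targets."""
    return sum((weight * x + bias - y) ** 2 for x, y in data) / len(data)


def train(data, learning_rate=0.05, steps=2000):
    """Repeatedly nudge weight and bias in the direction that lowers the loss."""
    weight, bias = 0.0, 0.0
    n = len(data)
    for _ in range(steps):
        # Gradients of the mean squared error with respect to each parameter.
        grad_w = sum(2 * (weight * x + bias - y) * x for x, y in data) / n
        grad_b = sum(2 * (weight * x + bias - y) for x, y in data) / n
        weight -= learning_rate * grad_w
        bias -= learning_rate * grad_b
    return weight, bias


# Toy dataset generated from y = 2x + 1.
DATA = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

print("loss before training:", mse(0.0, 0.0, DATA))
w, b = train(DATA)
print("learned weight and bias:", w, b)
print("loss after training:", mse(w, b, DATA))
```

The learned weight and bias should land close to 2 and 1, the values used to generate the toy data.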

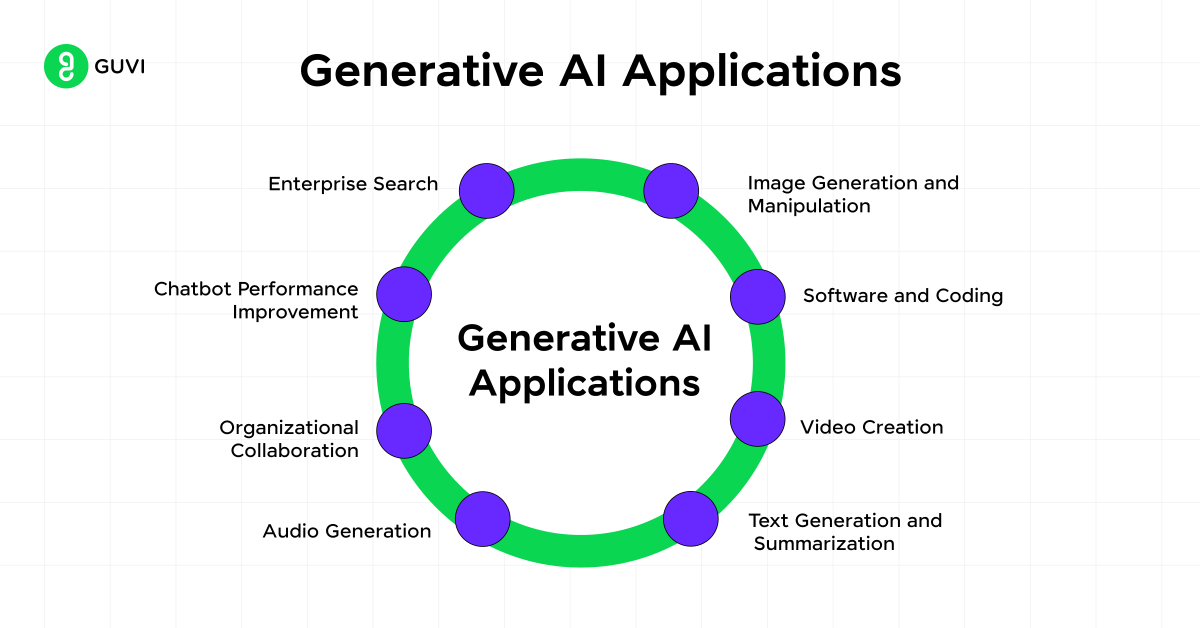
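For the generation phase, temperature scaling is easy to show in isolation. This sketch assumes the model has already produced a raw score (logit) for each candidate token; the three-word vocabulary and the scores are made up for illustration.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(vocab, logits, temperature, rng):
    """Draw one token at random according to the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]


VOCAB = ["cat", "dog", "fish"]  # hypothetical candidate tokens
LOGITS = [2.0, 1.0, 0.1]        # hypothetical model scores

rng = random.Random(0)
for temperature in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(LOGITS, temperature)
    print(temperature, [round(p, 3) for p in probs], sample_token(VOCAB, LOGITS, temperature, rng))
```

A low temperature concentrates probability on the top-scoring token, which gives more predictable text; a high temperature flattens the distribution, which gives more varied text.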

Applications Across Industries

You’ll find generative AI being applied in various fields:

- Content Creation (text, images, videos): Automates the generation of creative content, including writing, imagery, and video production, tailored to specific requirements.

- Code Generation: Assists developers by automatically generating code snippets, reducing development time and effort.

- Data Augmentation: Enhances training datasets by creating additional synthetic data, improving the performance of AI models.

- Personalized Customer Service: Provides tailored customer interactions through AI-driven chatbots and virtual assistants, improving user experience.

- Drug Discovery: Accelerates the identification and development of new drugs by predicting molecular structures and simulating biological processes.

As this technology continues to evolve, its impact on industries and our daily lives is bound to grow.

ChatGPT

What is ChatGPT?

ChatGPT is an artificial intelligence language model designed to understand and generate human-like responses to various prompts. Developed by OpenAI, it can hold natural language conversations on a wide range of topics.

You can think of it as a highly sophisticated computer program trained on massive amounts of data, enabling it to simulate human-like interactions. It engages in open-ended conversations, answering questions, providing explanations, and generating creative content.

It can also adapt to various tones and styles, making it suitable for customer service, content creation, and more.

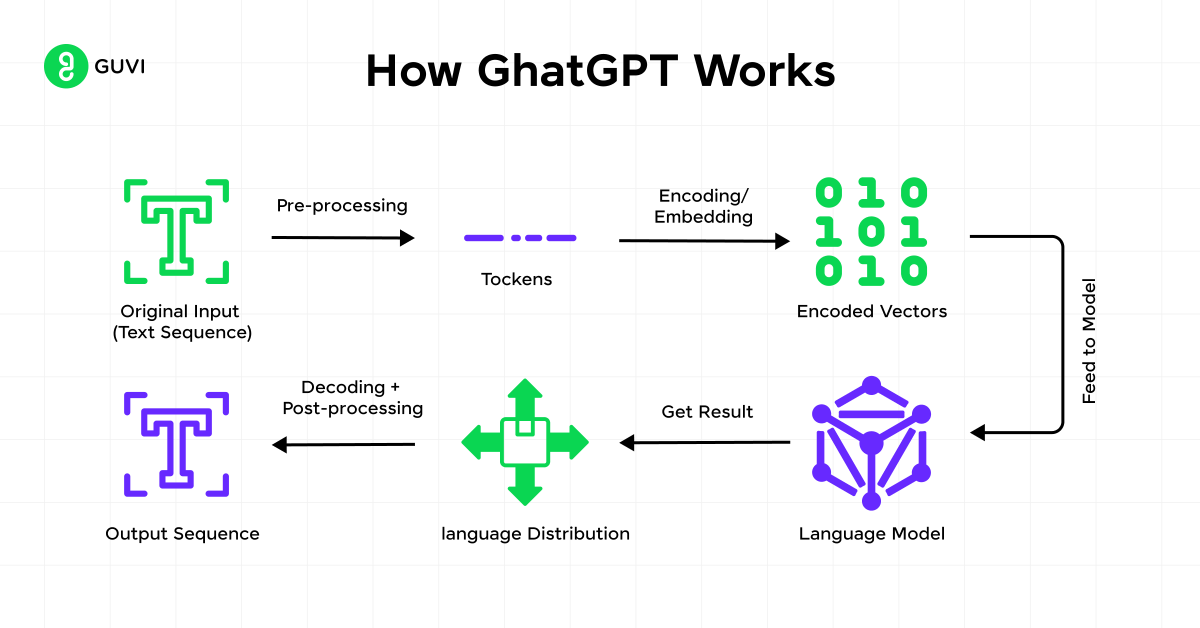

How ChatGPT Works

At its core, ChatGPT uses deep learning techniques to process and generate text.

- Transformer Architecture: ChatGPT is based on the Transformer model, which uses self-attention mechanisms to process input text in parallel, capturing complex dependencies and context.

- Pre-training: The model is pre-trained on a massive dataset of internet text, learning to predict the next word in a sequence. This allows it to understand and generate coherent text across diverse topics.

- Fine-tuning: After pre-training, ChatGPT is fine-tuned on specific tasks or datasets, with adjustments made based on human feedback to improve its conversational abilities.

- Contextual Understanding: ChatGPT maintains context across multiple turns in a conversation, allowing for more natural and coherent dialogue.
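The pre-training objective above, predicting the next word, can be illustrated with a deliberately tiny stand-in. ChatGPT itself uses a Transformer over subword tokens, not word counts; the bigram counter below is only an assumption-laden sketch of the same objective, and the corpus and function names are made up.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for every word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(counts, start, max_words=5):
    """Generate text one word at a time, feeding each prediction back in."""
    output = [start.lower()]
    for _ in range(max_words):
        next_word = predict_next(counts, output[-1])
        if next_word is None:
            break
        output.append(next_word)
    return " ".join(output)


CORPUS = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram(CORPUS)
print(predict_next(model, "the"))
print(generate(model, "the"))
```

The bigram model looks at only one previous word. A Transformer conditions each prediction on the whole conversation so far, which is what allows context to carry across turns.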

Technical Architecture

ChatGPT is built on the GPT (Generative Pre-trained Transformer) architecture. This neural network design uses self-attention mechanisms to weigh the importance of different words in a sequence when making predictions.

- Attention Mechanism: For each token, self-attention scores how relevant every other token in the context is and uses those scores to build a context-aware representation, so the model can focus on the relevant parts of the text while generating responses.

- Multi-head Self-Attention: This technique allows the model to consider multiple aspects of the input simultaneously, leading to richer and more nuanced text generation.

- Decoder-Only Model: ChatGPT uses only the decoder stack of the Transformer architecture. Each token attends only to the tokens before it, which suits left-to-right text generation. The same stack also processes the prompt, so no separate encoder is needed.

- Tokenization: Input text is broken down into tokens (words or word fragments). The model processes these tokens and generates output one token at a time, with each new token conditioned on everything produced so far.
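The attention mechanism described above can be sketched as scaled dot-product attention with a causal mask, the variant used in decoder-only models. This is a simplified illustration: the token vectors are made up, and they are used directly as queries, keys, and values, whereas a real model first passes them through learned projection matrices.

```python
import math


def softmax(scores):
    """Convert raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(queries, keys, values):
    """Scaled dot-product attention where position i sees only positions <= i."""
    dim = len(keys[0])
    outputs, all_weights = [], []
    for i, query in enumerate(queries):
        # Score the current token against itself and every earlier token.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in keys[: i + 1]
        ]
        weights = softmax(scores)
        # Weighted average of the visible value vectors.
        mixed = [
            sum(w * value[d] for w, value in zip(weights, values))
            for d in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical 2-dimensional vectors for a three-token input.
TOKENS = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

outputs, weights = causal_self_attention(TOKENS, TOKENS, TOKENS)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row shows how much one token attends to itself and to the tokens before it. Multi-head attention runs several such computations in parallel, each with its own projections, and combines the results.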

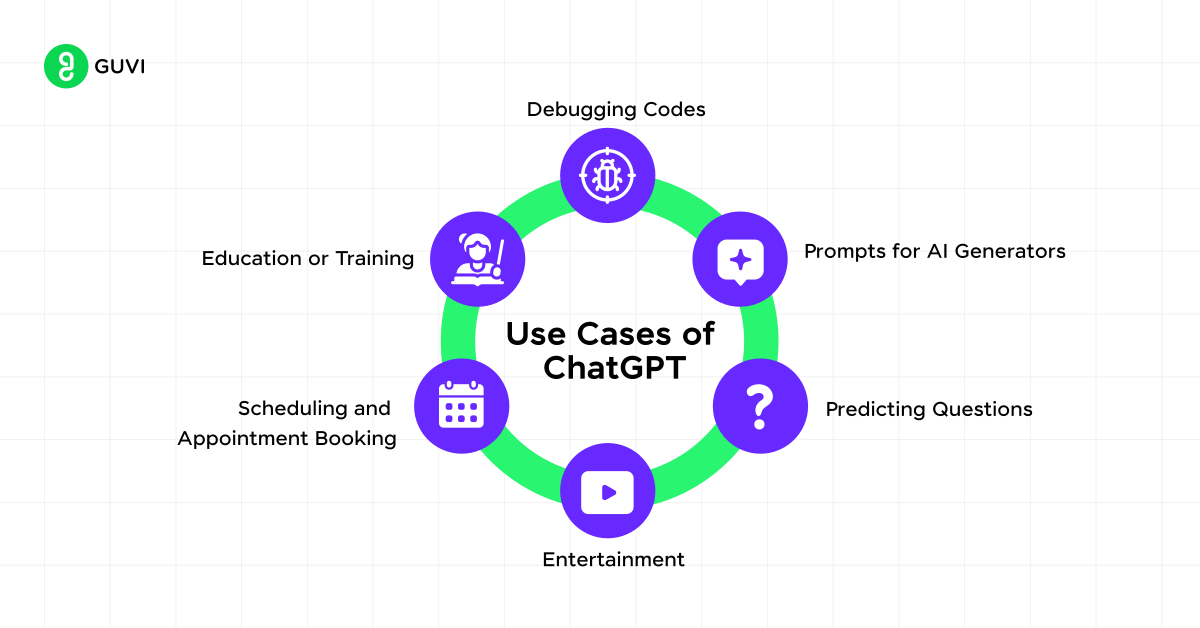

Applications and Use Cases

You’ll find ChatGPT useful in various fields:

- Education: Automating grading, answering student queries, and creating personalized learning experiences.

- Content Creation: Generating articles, social media posts, and video scripts.

- Programming: Debugging and writing code.

- Business: Automating customer service and improving workflow efficiency.

- Creative Tasks: Composing music and assisting with art generation.

ChatGPT’s versatility makes it a valuable tool for both individuals and businesses seeking to enhance productivity and creativity in today’s interconnected world.

DALL-E

The Concept Behind DALL-E

DALL-E is an AI-powered image generation platform created by OpenAI. It’s designed to produce incredibly realistic and detailed pictures based on textual descriptions. You can think of it as a digital artist that brings your words to life.

DALL-E uses deep learning techniques, combining large language models (LLMs), diffusion models, and natural language processing to understand text and create visual content.

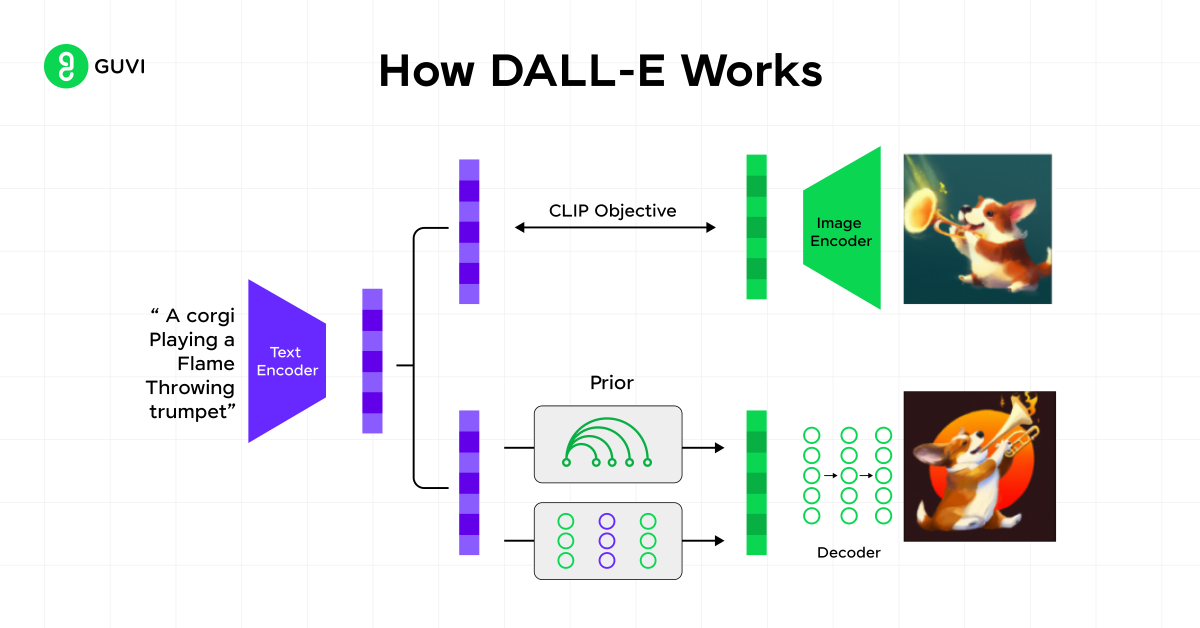

DALL-E’s Image Generation Process

The process begins when you input a text prompt. DALL-E’s encoder-decoder approach first encodes your text, analyzes it, and then decodes it to produce a visual image:

- Text Encoder: Transforms the input text into a series of embeddings that capture the semantic meaning of the description, laying the groundwork for image creation.

- Image Decoder: Using the encoded text, the image decoder generates images by predicting pixel values or patches, guided by patterns learned during training.

- Variational Autoencoders (VAE): The original DALL-E used a discrete VAE to compress each image into a grid of tokens that a Transformer could then predict from the text. Later versions rely on diffusion-based decoders instead.

- Training Process: The model is trained on a large dataset of image-text pairs, learning to map textual descriptions to corresponding images. This training allows it to generalize and create new images from unseen prompts.

Comparing DALL-E with Other Image Generation Tools

DALL-E stands out for its ability to interpret complex prompts and generate highly detailed images. However, other tools like Stable Diffusion and Midjourney have made significant strides in image quality.

Traditional tools require manual editing and manipulation of existing images, while DALL-E generates images from scratch, offering a more automated and creative approach. DALL-E’s integration with ChatGPT gives it an edge in prompt interpretation, making it more user-friendly for both short and long descriptions.

Real-World Applications

You’ll find DALL-E useful in various fields:

- Advertising: DALL-E can create unique and tailored visual content for ad campaigns, aligning imagery closely with brand messaging.

- Design: Assists designers by generating concept art, mockups, and other visual ideas based on simple text prompts, streamlining the creative process.

- Education: Provides visual representations of complex concepts, historical events, or scientific phenomena, enhancing educational materials and learning experiences.

- Healthcare: Used to generate medical imagery from textual descriptions, aiding in training and educational content creation.

DALL-E’s versatility makes it a valuable tool for both creative professionals and businesses looking to enhance their visual content creation process.

Table: ChatGPT vs DALL-E

To help you understand the key differences between ChatGPT and DALL-E, here’s a comparison table highlighting their main features and capabilities:

| Feature | ChatGPT | DALL-E |
| --- | --- | --- |
| Primary Function | Text generation and conversation | Image generation |
| Input Type | Text prompts | Text descriptions |
| Output Type | Text responses | Visual images |
| AI Technology | Large Language Model (LLM) | Text-to-Image Generation |
| Pricing | Free version available; Plus ($20/month), Team ($25/month), Enterprise (Custom) | Contact for custom pricing |
| Key Features | Natural Language Processing; Conversation Intelligence; Multilingual Interface; Text-to-Text Generation | Text-to-Image Generation; Deep Learning; Neural Networks |
| Use Cases | Content creation; Customer service; Programming assistance; Education | Visual content creation; Product design; Architectural visualization |
| Usability Score | 7.2 | 5.5 |
| Overall Score | 8.0 | 5.9 |

As you can see, while both ChatGPT and DALL-E use advanced AI technologies, they serve different purposes. ChatGPT excels in text-based tasks and conversations, while DALL-E specializes in creating visual content from textual descriptions.

Concluding Thoughts…

The rapid advancement of generative AI technologies like ChatGPT and DALL-E has changed both how many industries operate and how we go about our daily lives. These powerful tools are changing the way we interact with computers, create content, and solve complex problems. From generating human-like text responses to producing stunning visual art based on textual descriptions, the potential applications of these AI systems are vast and continue to grow.

As we look to the future, it’s clear that generative AI will play an increasingly important role in shaping our digital landscape. While these technologies offer exciting possibilities, they also bring new challenges and ethical considerations to address.

To make the most of these innovations, it’s crucial to stay informed about their capabilities and limitations and to use them responsibly to enhance human creativity and productivity rather than replace them.

FAQs

Is ChatGPT considered a type of generative AI?

ChatGPT is a specific application within the broader field of generative AI, which is focused on creating new content or generating information. While generative AI encompasses a wide range of technologies, ChatGPT is specifically tailored for conversational applications like chatbots or virtual assistants.

What is DALL-E in ChatGPT?

DALL-E is a generative AI model developed by OpenAI that creates images from textual descriptions. It is often integrated with ChatGPT to generate visual content based on user prompts.

How does AI differ from generative AI?

Artificial intelligence (AI) broadly refers to machines designed to perform tasks that typically require human intelligence. Generative AI, a subset of AI, focuses specifically on content creation and is distinguished by its ability to produce new content from user prompts.

What category of AI does ChatGPT belong to?

ChatGPT falls under generative AI, a type of artificial intelligence that generates human-like responses in forms such as text, images, or videos based on user prompts. This makes it particularly useful for applications requiring natural language processing.
