What is Semantic Segmentation? An Exclusive Beginner’s Guide

Sep 10, 2025 (Last Updated) | 4 Min Read

If you’ve just started learning computer vision, there’s one phrase you’re probably hearing a lot: Semantic Segmentation. Everyone’s talking about it. But what exactly is it, and why is it such a big deal?

In this guide, I’ll walk you through everything you need to know about semantic segmentation in the simplest, most interactive way possible. By the end of this blog, you’ll know what semantic segmentation is, how it works, where it’s used, and how you can try it out yourself, even as a beginner. Let’s begin!

Table of contents

- What is Semantic Segmentation?

- Why Semantic Segmentation?

- How does it work?

- Architectures for Semantic Segmentation:

- PixelLib:

- Semantic Segmentation using PixelLib:

- Prerequisites:

- Code:

- Input:

- Output:

- Output with Overlay:

- The code for applying semantic segmentation to a video is given below.

- Input:

- Output with Overlay:

- Concluding Thoughts…

What is Semantic Segmentation?

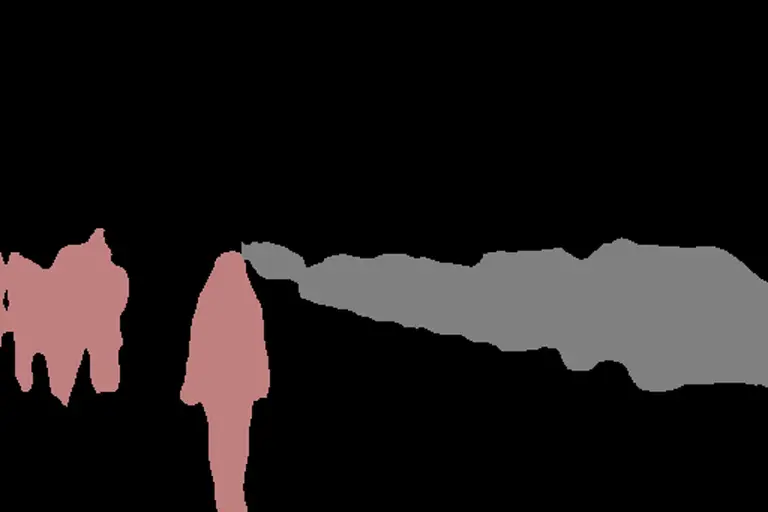

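Semantic segmentation is the process of dividing an image or video frame into regions according to their meaning, by predicting a semantic category (such as person, car, or sky) for every pixel. In a way, we can think of it as a combination of classification and object detection: like classification, it assigns class labels, and like detection, it tells us where objects are. The difference is that it does this pixel by pixel.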

- For example, in a picture containing multiple objects like cars, people, trees, and sky, every pixel belonging to a person gets the same label (shown in one color), every pixel belonging to a car gets another label (another color), and so on.

- The main point to note is that semantic segmentation works at the class level, not the instance level. Even if an image contains several instances of the class ‘Person’ (several people), semantic segmentation will not give each person a different label (color).

- Instead, all pixels belonging to the class ‘Person’ share the same label and color. Different instances of the same object are not distinguished.

Note: If we want to segment at the instance level (i.e., give each instance of an object its own color or label), we can use instance segmentation instead.
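To make the class-level vs. instance-level difference concrete, here is a small NumPy sketch. It treats a segmentation result as a 2D array holding one label per pixel. The toy array and the class IDs (0 = background, 1 = person) are made up for illustration, and the instance IDs are written by hand rather than predicted by any model.

```python
import numpy as np

# Toy 4 x 6 label map: two people side by side on a background.
# Hypothetical class IDs: 0 = background, 1 = person.
semantic_mask = np.array([
    [0, 1, 1, 0, 1, 1],
    [0, 1, 1, 0, 1, 1],
    [0, 1, 1, 0, 1, 1],
    [0, 0, 0, 0, 0, 0],
])

# Semantic segmentation: both people share the single label 1.
print(np.unique(semantic_mask))

# Instance segmentation would also tell the two people apart.
# Hand-written instance IDs: 0 = background, 1 = first person, 2 = second person.
instance_mask = semantic_mask.copy()
instance_mask[:, 4:] *= 2   # relabel the right-hand person as instance 2
print(np.unique(instance_mask))
```

Both masks cover exactly the same pixels. The only difference is whether the two people share one label or get separate ones.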

Because it works at the pixel level, semantic segmentation captures the exact shape and extent of objects, which bounding-box detectors such as YOLO do not. This precision matters in self-driving vehicles, where a small localization error could lead to a fatal accident. Semantic segmentation is also known as a dense prediction task, because the model makes a prediction for every pixel in the image rather than one prediction per image or per object.

Why Semantic Segmentation?

Semantic segmentation is used in many fields, such as the automotive industry and biomedical imaging.

Its major applications include:

- Autonomous vehicles – To identify lanes, roads, and objects on the road such as other vehicles, pedestrians, and animals. It is also used to understand traffic signals and make driving decisions based on the segmented scene.

- Biomedical imaging and diagnostics – To identify small or hard-to-spot structures in MRI and CT scans and to help diagnose cancer and tumors. It can also be used to identify different types of cells. In one of the images above, it is used to segment white matter, grey matter, and cerebrospinal fluid.

- Aerial crop monitoring – To monitor large areas of land quickly and produce accurate field maps, including elevation information, that help farmers find irregularities in their fields.

- Geo-sensing – To identify water bodies and different land categories from satellite imagery.

- Facial recognition – To analyze faces (for example, to distinguish age, expression, and ethnicity) for applications such as camera apps and face unlock.

- Image and video manipulation – Semantic segmentation can be extended to background removal, background blur (portrait mode), and similar effects.
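Background removal is a good example of how a segmentation result gets used downstream. Here is a minimal NumPy sketch. It assumes you already have an RGB image and a per-pixel label map of the same height and width. The function name `remove_background` is hypothetical, and class ID 15 is used because it is the Pascal VOC ID for "person".

```python
import numpy as np

def remove_background(image: np.ndarray, label_map: np.ndarray, keep_class: int) -> np.ndarray:
    """Black out every pixel whose label is not keep_class.

    image: H x W x 3 uint8 array; label_map: H x W array of class IDs.
    """
    keep = label_map == keep_class                      # H x W boolean mask
    # keep[..., None] has shape H x W x 1, so it broadcasts over the 3 channels.
    return np.where(keep[..., None], image, 0).astype(image.dtype)

# Toy 2 x 2 grey image; pixels labelled 15 ("person" in Pascal VOC) are kept.
image = np.full((2, 2, 3), 200, dtype=np.uint8)
label_map = np.array([[15, 0],
                      [0, 15]])
result = remove_background(image, label_map, keep_class=15)
print(result[:, :, 0])   # person pixels keep their value, the rest become 0
```

For a portrait-mode effect, you would replace the `0` with a blurred copy of the image (for example, one produced by OpenCV's `cv2.GaussianBlur`), so the background is blurred instead of blacked out.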

How does it work?

Most deep neural network models for semantic segmentation share the same general structure. A series of downsampling steps first reduces the spatial dimensions of the image. A series of upsampling steps then increases them again until the output has the same dimensions as the input image.

For example, if the original image is 32 × 32 pixels, the final upsampled output will also be 32 × 32.
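The shape bookkeeping can be traced with a toy NumPy sketch. This is an assumption-heavy simplification: real networks use learned layers (convolutions, pooling, transposed convolutions), whereas this sketch uses fixed 2 × 2 average pooling to go down and nearest-neighbour repetition to go up. It only shows how a 32 × 32 input shrinks and then returns to 32 × 32.

```python
import numpy as np

def downsample(x: np.ndarray) -> np.ndarray:
    """2 x 2 average pooling: halves height and width."""
    h, w = x.shape
    return x.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def upsample(x: np.ndarray) -> np.ndarray:
    """Nearest-neighbour upsampling: doubles height and width."""
    return x.repeat(2, axis=0).repeat(2, axis=1)

image = np.random.default_rng(0).random((32, 32))  # single-channel 32 x 32 input

x = image
for _ in range(3):            # downsampling: 32 -> 16 -> 8 -> 4
    x = downsample(x)
    print("down:", x.shape)
for _ in range(3):            # upsampling: 4 -> 8 -> 16 -> 32
    x = upsample(x)
    print("up:  ", x.shape)
```

The final `x` has the same shape as `image`, but the fine detail lost during pooling is not recovered. The next paragraphs explain why this matters.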

The purpose of downsampling is to let the model gather information from larger portions of the image.

For example, by looking only at a small patch from the middle of an animal's body, we would not be able to tell whether it is a cat or a dog. We need to see a bigger portion of the picture.

Similarly, to understand and classify what is in the image, the model needs the bigger picture, which is why downsampling is done. However, spatial accuracy decreases as the model downsamples, so the output can lose accurate, clear location details.

To avoid this, some information from earlier (downsampling) layers is passed directly to the corresponding upsampling layers, often through what are called skip connections, so that the locations of objects in the image are preserved accurately. What data is passed between corresponding layers differs from one architecture to another.
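One common way to pass information across is U-Net's approach, sketched below with NumPy. Feature maps saved on the way down are concatenated, along the channel axis, with the upsampled maps on the way up. The channel counts and sizes here are made-up stand-ins for real network activations. Other architectures do this differently: FCN adds score maps element-wise, and SegNet passes max-pooling indices.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical feature maps in (channels, height, width) layout.
encoder_features = rng.random((64, 16, 16))   # saved during downsampling
decoder_features = rng.random((32, 8, 8))     # coarse map during upsampling

# Upsample the decoder map back to 16 x 16 (nearest neighbour).
upsampled = decoder_features.repeat(2, axis=1).repeat(2, axis=2)

# U-Net-style skip connection: place the fine-grained encoder features
# next to the upsampled features along the channel axis.
merged = np.concatenate([encoder_features, upsampled], axis=0)
print(merged.shape)
```

The merged map carries both the coarse "what is it" information from deep layers and the sharper "where is it" detail from early layers. In a real network, it would then be processed by further convolutions.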

A good semantic segmentation model therefore needs high precision in both classification (what is this?) and localization (where exactly is it?).

Once downsampling and upsampling are done, a semantic label is assigned to each pixel, as shown above. These labels are then rendered as an image with the same dimensions as the original, in the same or a different image format as required.
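Label assignment typically works as follows: the network outputs one score per class for every pixel, and each pixel takes the class with the highest score. The NumPy sketch below shows this step. The random scores stand in for real network output, and the three classes and their colors are hypothetical.

```python
import numpy as np

num_classes = 3            # hypothetical: 0 = background, 1 = person, 2 = car
height, width = 4, 4

# Stand-in for the network's output: one score per class per pixel.
rng = np.random.default_rng(0)
scores = rng.random((num_classes, height, width))

# Each pixel gets the class with the highest score.
label_map = scores.argmax(axis=0)          # shape (4, 4), values in {0, 1, 2}

# Render the label map as an RGB image: one arbitrary colour per class.
palette = np.array([[0, 0, 0],             # background -> black
                    [192, 128, 128],       # person
                    [128, 128, 128]],      # car
                   dtype=np.uint8)
color_image = palette[label_map]           # shape (4, 4, 3)
print(label_map.shape, color_image.shape)
```

Indexing the palette with the whole label map (`palette[label_map]`) colors every pixel in one vectorized step. The result can then be saved in whatever image format you need.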

Architectures for Semantic Segmentation:

As mentioned earlier, the process described above is the general structure of semantic segmentation. Different architectures handle these steps in their own ways, for example to improve accuracy or to reduce computational cost (training and inference time). Some of them are listed below.

- Fully Convolutional Network (FCN)

- U-Net

- Mask R-CNN (strictly an instance segmentation model, but closely related)

- SegNet

PixelLib:

There are multiple ways to achieve semantic segmentation. We are going to look at a simple and beginner-friendly way that uses a library called PixelLib.

Semantic Segmentation using PixelLib:

If this is your first time learning about segmentation, PixelLib is a good place to start because of its simplicity and ease of use. It is a Python library that provides out-of-the-box solutions for segmenting images and videos with minimal code. Its semantic segmentation is implemented with the DeepLabv3+ architecture.

In this architecture, downsampling and upsampling are called encoding and decoding, respectively. The model used here, DeepLabv3+ with an Xception backbone, is trained on the Pascal VOC dataset, so it classifies the objects in a given image or video as cat, dog, person, etc. This model can be downloaded from this Kaggle link.

The Pascal VOC dataset contains 20 object categories (plus a background class). The color code for each object is given below.

Note: If you want to train your own custom model, you can find many documents and video tutorials online that explain how.
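If you want to decode a segmentation output programmatically, it helps to know the standard Pascal VOC labels and palette. The sketch below lists the 21 labels (background plus 20 classes) and generates the standard VOC colormap by spreading the bits of each class index across the R, G, and B channels. We believe PixelLib's Pascal VOC model uses this same palette, but that is an assumption: compare against the color table above. Also note that channel order (RGB vs. BGR) and JPEG compression can shift the exact values in a saved file.

```python
import numpy as np

# The 21 Pascal VOC labels: index 0 is background, 1-20 are object classes.
VOC_CLASSES = [
    "background", "aeroplane", "bicycle", "bird", "boat", "bottle",
    "bus", "car", "cat", "chair", "cow", "diningtable", "dog", "horse",
    "motorbike", "person", "pottedplant", "sheep", "sofa", "train", "tvmonitor",
]

def voc_colormap(n: int = 21) -> np.ndarray:
    """Standard Pascal VOC palette as an n x 3 array of RGB values."""
    cmap = np.zeros((n, 3), dtype=np.uint8)
    for i in range(n):
        r = g = b = 0
        c = i
        for j in range(8):
            # Take the lowest 3 bits of c and place them, from the most
            # significant bit downwards, into the R, G and B channels.
            r |= ((c >> 0) & 1) << (7 - j)
            g |= ((c >> 1) & 1) << (7 - j)
            b |= ((c >> 2) & 1) << (7 - j)
            c >>= 3
        cmap[i] = [r, g, b]
    return cmap

cmap = voc_colormap()
for idx in (0, 7, 15):
    print(idx, VOC_CLASSES[idx], cmap[idx].tolist())
```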

Prerequisites:

- Python (Version 3.5 or above)

- pip (Version 19.0 or above)

- TensorFlow (Version 2.0 or above)

- opencv-python

- scikit-image

```
!pip install pixellib
!pip install tensorflow
!pip install opencv-python
!pip install scikit-image
```

(The `!` prefix runs these commands from a Jupyter or Colab notebook cell; leave it out when using a terminal.)

Note: If you run into the error below, additionally install TensorFlow 2.6.0 and Keras 2.6.0.

"cannot import name 'BatchNormalization' from 'tensorflow.python.keras.layers'"

```
!pip install tensorflow==2.6.0
!pip install keras==2.6.0
```

Code:

```python
import pixellib
import cv2
from pixellib.semantic import semantic_segmentation

# Create the segmentation object and load the pretrained Pascal VOC model
seg_img = semantic_segmentation()
seg_img.load_pascalvoc_model("deeplabv3_xception_tf_dim_ordering_tf_kernels.h5")

# Segment the image and save the colour-coded result
seg_img.segmentAsPascalvoc("Image.jpg", output_image_name="output_image.jpg")  # Change the input filename accordingly
```

Input:

Output:

Note: Since the model is trained on a dataset of only 20 object categories, only those objects will be detected and segmented. Everything else is treated as background and colored black.

If you enable the overlay option as shown below, the segmentation output is drawn semi-transparently over the input image.

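```python
import pixellib
import cv2
from pixellib.semantic import semantic_segmentation

# Create the segmentation object and load the pretrained Pascal VOC model
seg_img = semantic_segmentation()
seg_img.load_pascalvoc_model("deeplabv3_xception_tf_dim_ordering_tf_kernels.h5")

# overlay=True blends the segmentation colours with the original image
seg_img.segmentAsPascalvoc("Image.jpg", output_image_name="output_image.jpg", overlay=True)
```

Output with Overlay: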
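PixelLib does the blending internally. To show what an overlay like this generally involves, here is a NumPy sketch of alpha blending: each output pixel is a weighted average of the original pixel and its segmentation color. The function name `overlay` and the default weight of 0.5 are our own choices, not PixelLib's internals.

```python
import numpy as np

def overlay(image: np.ndarray, seg_colors: np.ndarray, alpha: float = 0.5) -> np.ndarray:
    """Blend a colour-coded segmentation map onto the original image.

    alpha is the weight of the segmentation colours (0 = image only,
    1 = segmentation only). Both inputs are H x W x 3 uint8 arrays.
    """
    blended = (1 - alpha) * image.astype(np.float32) + alpha * seg_colors.astype(np.float32)
    return np.clip(np.rint(blended), 0, 255).astype(np.uint8)

# Toy example: a grey image with one "person" pixel in the segmentation map.
image = np.full((2, 2, 3), 100, dtype=np.uint8)
seg = np.zeros((2, 2, 3), dtype=np.uint8)
seg[0, 0] = [192, 128, 128]
out = overlay(image, seg, alpha=0.5)
print(out[0, 0].tolist(), out[1, 1].tolist())
```

OpenCV offers the same operation as `cv2.addWeighted(src1, alpha, src2, beta, gamma)` if you are already working with OpenCV images.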

The code for applying semantic segmentation to a video is given below.

```python
import pixellib
from pixellib.semantic import semantic_segmentation

# Create the segmentation object and load the pretrained Pascal VOC model
seg_vid = semantic_segmentation()
seg_vid.load_pascalvoc_model("deeplabv3_xception_tf_dim_ordering_tf_kernels.h5")

# Segment every frame; frames_per_second sets the frame rate of the output video
seg_vid.process_video_pascalvoc("City.mp4", overlay=True, frames_per_second=15, output_video_name="output_video.mp4")  # Change the input filename accordingly
```

Input:

Output with Overlay:

Want to dive deeper into AI and master skills like semantic segmentation? Check out HCL GUVI’s Artificial Intelligence & Machine Learning Course, designed by industry experts with real-world projects and certification from IIT-M & GUVI.

Concluding Thoughts…

As we’ve discussed, semantic segmentation plays a vital role in real-world applications, from smart vehicles to medical imaging. The best part? You can get started with just a few lines of Python using tools like PixelLib.

I hope the easy steps in this guide have helped you get started with semantic segmentation, and that you will soon master this computer vision technique. If you have any questions, reach out to me in the comments section below.
