What is Reinforcement Learning? Top 3 Techniques for Beginners

Last Updated: Sep 10, 2025

Reinforcement Learning (RL) is one of the most exciting frontiers in machine learning, teaching agents to learn from trial and error, just like humans. Unlike supervised or unsupervised learning, where labeled or unlabeled data guide the process, RL trains models through experience, rewards, and feedback.

Let’s take a self-driving car as an example. With object detection techniques, the car can identify a traffic signal and recognize that the light is red. Once the signal and its color are detected, what action should the car take? How does it decide on its own whether it should stop or not? That’s where reinforcement learning comes into play.

In this beginner-friendly guide, you’ll discover the core RL techniques like Q‑Learning, Markov Decision Processes (MDP), and policy gradient methods, and see how they power real-world systems like robots, drones, game AI, and recommendation engines. Let’s get started!

Table of contents

- What is Reinforcement Learning?

- Three Core Approaches to Reinforcement Learning

- Key Decision Process Methods

- 1) Markov Decision Process

- 2) Q-Learning

- 3) Policy Gradient Methods

- Applications of Reinforcement Learning

- 1) Robotics

- 2) Drones

- Concluding Thoughts…

What is Reinforcement Learning?

RL is a distinct type of machine learning where an agent explores an environment, takes actions, receives rewards, and transitions between states, all to learn which behaviors yield the highest cumulative reward.

Put simply, when people say “Machine Learning,” most of us think of its two primary types, Supervised and Unsupervised. Reinforcement Learning is a third type of Machine Learning.

When we already have labeled data and we use that data to train an algorithm, it is called the Supervised Learning technique. On the other hand, it is called Unsupervised Learning when we train an algorithm using unlabeled data.

But what if there is no data? That’s when we let the machine learn on its own by allowing it to make mistakes and correct itself by learning from them.

Instead of a human, reinforcement learning has an agent! This agent explores the environment and learns to perform the desired task by taking actions. Each action can lead to a good outcome or a bad one. The goal is to avoid the actions that lead to bad outcomes, and this is where a reward is introduced for every good outcome.

Let’s understand the components in this picture:

Example: Self-driving car

Agent – The learner and decision-maker that acts in the environment

Environment – The place where the agent interacts and learns through trial and error

Action – The choice that the agent makes at every step

State – The current situation of the agent, on which it bases its next action

Reward – The feedback the agent receives after an action: high for a good outcome, low or negative for a bad one

In short, a reinforcement learning agent learns from its own experience, and over time, it is able to identify which actions lead to the best rewards.
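To make these components concrete, here is a minimal sketch of the agent-environment loop. The `LineWorld` environment, its reward values, and the purely random agent are all made up for illustration; they are not taken from any RL library.

```python
import random


class LineWorld:
    """Hypothetical toy environment: positions 0..4, the goal is position 4."""

    def __init__(self):
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # action is -1 (move left) or +1 (move right); stay inside 0..4
        self.state = min(4, max(0, self.state + action))
        done = self.state == 4
        reward = 1.0 if done else 0.0  # reward only when the goal is reached
        return self.state, reward, done


def run_episode(env, rng, max_steps=1000):
    """Run one episode with a purely random agent and return the total reward."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = rng.choice([-1, 1])            # the agent picks an action
        state, reward, done = env.step(action)  # the environment responds
        total_reward += reward
        if done:
            break
    return total_reward


total = run_episode(LineWorld(), random.Random(0))
print(total)
```

This agent does not learn anything yet. It only shows the loop of state, action, and reward that every technique later in this article builds on.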


Three Core Approaches to Reinforcement Learning

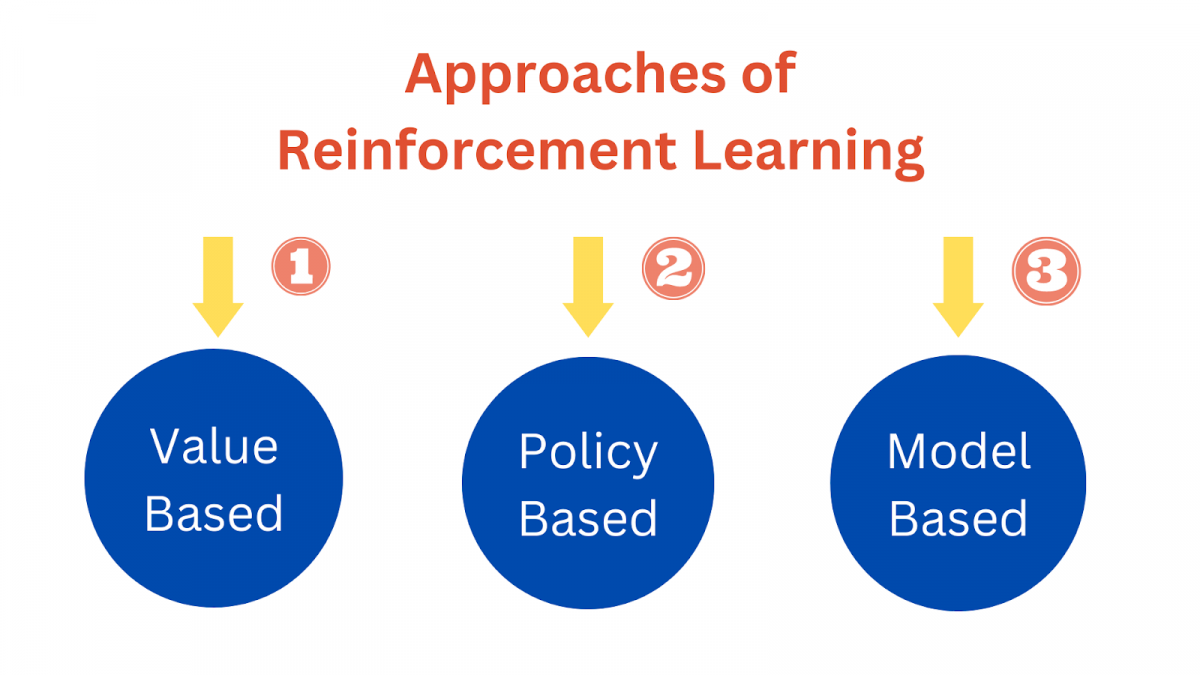

Now that we’ve understood what reinforcement learning is, let me explain the approaches you can take to solve a Reinforcement Learning problem. There are three approaches:

- Value-Based (Q‑Learning): Learns a Q‑value table or function that estimates the long-term reward of each action in each state. The agent then chooses the action with the highest Q‑value.

- Policy-Based (Policy Gradient): Learns a policy directly (e.g., a probability distribution over actions) and optimizes it to maximize expected reward.

- Model-Based: Builds an internal model of the environment and uses it to simulate future states and rewards and to plan ahead.

Key Decision Process Methods

There are many concepts in Reinforcement Learning, and a full treatment requires a fair amount of math and derivations. I’m not going to go in-depth into the derivations. Here in this article, I’m going to cover three concepts to understand how the agent works in the environment.

- Markov Decision Processes – How the agent’s transitions from one state to another are modeled

- Q-Learning – A technique that estimates the reward of each move so the agent can choose among them

- Policy Gradient – A method that directly learns which actions to take to get high rewards

1) Markov Decision Process

In the previous image, you can see there is a component called ‘State’. This refers to the state in which the agent is.

Let’s take a simple example.

I want a robot that is sitting on a chair to stand up and pick up an object.

Here, the agent has three states, and it transitions from one state to the next. The probability of each transition depends only on the current state and not on the states before it. In simple terms, State 3 depends on State 2 and not on State 1. This is called the Markov Process.

It is defined by (S, P) where S represents the states and P is the state transition probability.

The future is independent of the past, given the present!
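Here is a small sketch of a Markov Process for the robot example. The state names and the transition probabilities in `P` are assumptions chosen for illustration. Notice that `next_state` only ever looks at the current state.

```python
import random

# Hypothetical transition probabilities for the robot example.
# P[s][s2] is the probability of moving from state s to state s2.
P = {
    "sitting":  {"sitting": 0.2, "standing": 0.8},
    "standing": {"sitting": 0.1, "standing": 0.3, "holding": 0.6},
    "holding":  {"holding": 1.0},
}


def next_state(state, rng):
    """Sample the next state using only the current state (Markov property)."""
    candidates = list(P[state])
    weights = [P[state][s] for s in candidates]
    return rng.choices(candidates, weights=weights)[0]


def sample_chain(start, steps, rng):
    """Generate a sequence of states by repeatedly sampling transitions."""
    chain = [start]
    for _ in range(steps):
        chain.append(next_state(chain[-1], rng))
    return chain


chain = sample_chain("sitting", 10, random.Random(0))
print(chain)
```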

A Markov Process is a memoryless random process, that is, a sequence of random states. When this process of transitioning between states is combined with rewards, we get a Markov Reward Process, which is like a chain with values attached. When the agent also chooses an action at each step, this gives us the Markov Decision Process, and those values help the agent make the right decision.

This process is also combined with the discount factor, which tells how important future rewards are compared to immediate ones. It is a value between 0 and 1: close to 0, the agent cares mostly about immediate rewards, and close to 1, it values future rewards almost as much.
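A quick way to see what the discount factor does is to add up a sequence of rewards with different values of it. The sketch below uses made-up rewards and the standard discounted sum, where a reward received k steps in the future is multiplied by the discount factor raised to the power k.

```python
def discounted_return(rewards, gamma):
    """Return r0 + gamma*r1 + gamma^2*r2 + ... for a list of rewards."""
    total = 0.0
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


rewards = [0.0, 0.0, 10.0]  # hypothetical: the payoff arrives two steps later

print(discounted_return(rewards, 1.0))  # 10.0: the future counts fully
print(discounted_return(rewards, 0.5))  # 2.5: the future counts for less
print(discounted_return(rewards, 0.0))  # 0.0: only the immediate reward counts
```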

Not a fan of the math behind this concept? Just know this…

A Markov Decision Process (MDP) is a reward process with decisions, defined by parameters such as the states, actions, state transition probabilities, reward function, and discount factor.
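If it helps, you can think of an MDP as a simple record holding those five parameters. The `MDP` class below and the numbers for the robot are hypothetical and only meant to show how the pieces fit together.

```python
from dataclasses import dataclass


@dataclass
class MDP:
    states: list
    actions: list
    transitions: dict  # (state, action) -> {next_state: probability}
    rewards: dict      # (state, action) -> immediate reward
    gamma: float       # discount factor, between 0 and 1

    def is_valid(self):
        """Check that each transition distribution sums to 1 and gamma is in range."""
        sums_ok = all(
            abs(sum(dist.values()) - 1.0) < 1e-9
            for dist in self.transitions.values()
        )
        return sums_ok and 0.0 <= self.gamma <= 1.0


# Hypothetical numbers for the robot example.
robot = MDP(
    states=["sitting", "standing", "holding"],
    actions=["stand_up", "pick_up"],
    transitions={
        ("sitting", "stand_up"): {"standing": 0.9, "sitting": 0.1},
        ("standing", "pick_up"): {"holding": 0.8, "standing": 0.2},
    },
    rewards={("sitting", "stand_up"): 0.0, ("standing", "pick_up"): 1.0},
    gamma=0.9,
)

print(robot.is_valid())
```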


2) Q-Learning

When the agent derives an optimal policy directly from its interactions with the environment, without needing to create a model of it beforehand, this is called model-free learning.

Q-learning is a value-based model-free learning technique that can be used to find the optimal action-selection policy using a Q function.

Q here stands for Quality. We already know that the agent gets a reward when it makes the right decision. With Q-learning, the agent chooses the path where the expected reward is highest.

Let’s look at the image above. Now, according to you, where should the agent go? To get 10 points or 100 points? The answer is 100 points. The agent can work this out by making a Q table with the values of the rewards it expects to get. Based on the best values in the table, the agent can decide whether to move right, left, up, or down.
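As a rough sketch, a Q table can be stored as a dictionary with one row per state and one value per action. The state name and the numbers below are made up. Choosing the move is then just a matter of picking the largest value in the row.

```python
# Hypothetical Q-table: one row per state, one estimated value per action.
q_table = {
    "start": {"up": 1.0, "down": 0.5, "left": 10.0, "right": 100.0},
}


def greedy_action(q_table, state):
    """Return the action with the highest Q-value in the given state."""
    actions = q_table[state]
    return max(actions, key=actions.get)


best = greedy_action(q_table, "start")
print(best)  # right
```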

This is an example of a Q table based on the action the agent should take.

The agent’s job is to take the right actions to reach the end without stepping on mines, while also trying to collect the powers. This is possible with Q-Learning, and the table shows how the values can be calculated to let the agent know which way is more rewarding.
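Here is a minimal sketch of how the values in a Q table can be learned, using the standard tabular Q-learning update. To keep it short, it uses a hypothetical five-cell corridor instead of a grid with mines, and the learning rate, discount factor, and exploration rate are assumed values.

```python
import random

N_STATES = 5        # corridor cells 0..4; cell 4 is the goal
ACTIONS = [-1, +1]  # index 0 moves left, index 1 moves right
ALPHA = 0.5         # learning rate (assumed value)
GAMMA = 0.9         # discount factor (assumed value)
EPSILON = 0.2       # exploration rate (assumed value)


def step(state, action):
    """Hypothetical environment: reward 100 on reaching the goal, else 0."""
    nxt = min(N_STATES - 1, max(0, state + action))
    done = nxt == N_STATES - 1
    return nxt, (100.0 if done else 0.0), done


def pick_action(values, rng):
    """Epsilon-greedy choice with random tie-breaking."""
    if rng.random() < EPSILON:
        return rng.randrange(len(values))
    best = max(values)
    return rng.choice([i for i, v in enumerate(values) if v == best])


def train(episodes, rng):
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            a = pick_action(q[state], rng)
            nxt, reward, done = step(state, ACTIONS[a])
            # Q-learning update: move Q(s, a) toward reward + gamma * max Q(s', .)
            target = reward + (0.0 if done else GAMMA * max(q[nxt]))
            q[state][a] += ALPHA * (target - q[state][a])
            state = nxt
    return q


q = train(500, random.Random(0))
policy = [q[s].index(max(q[s])) for s in range(N_STATES - 1)]
print(policy)
```

After training, the learned policy should prefer moving right (index 1) in every cell, because that is the shortest way to the reward.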

3) Policy Gradient Methods

There is another method, like Q-Learning, that makes the agent take its decisions based on certain parameters. While Q-Learning aims at predicting the reward of certain actions taken in a certain state, Policy Gradients directly predict the action itself.

The term ‘Gradient’ means the change in the value of a quantity with a change in a given variable. As you now know, the agent’s job is to try to maximize the reward. In a policy gradient method, the policy is defined by a set of parameters. The agent works out how the expected reward changes as those parameters change (the gradient) and adjusts the parameters in the direction that increases the reward.
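To give a feel for this, here is a small sketch of REINFORCE, one of the simplest policy gradient methods, on a made-up task with two actions where only action 1 pays a reward. The task, the softmax policy, the step count, and the learning rate are all assumptions for illustration.

```python
import math
import random


def softmax(prefs):
    """Turn action preferences into a probability distribution."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def train_policy(steps, lr, rng):
    """REINFORCE on a hypothetical task: action 1 pays 1.0, action 0 pays 0.0."""
    theta = [0.0, 0.0]  # policy parameters: one preference per action
    for _ in range(steps):
        probs = softmax(theta)
        action = rng.choices([0, 1], weights=probs)[0]
        reward = 1.0 if action == 1 else 0.0
        # For a softmax policy, the gradient of log pi(action) with respect
        # to theta[i] is (1 if i == action else 0) - probs[i].
        for i in range(2):
            grad_log = (1.0 if i == action else 0.0) - probs[i]
            theta[i] += lr * reward * grad_log
    return softmax(theta)


probs = train_policy(2000, 0.1, random.Random(0))
print(probs)
```

There is no Q table here. The parameters in `theta` are nudged directly so that rewarded actions become more likely, and the probability of action 1 should end up close to 1.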

Applications of Reinforcement Learning

Let’s now understand some of the applications of Reinforcement Learning in the real world and the simulated world.

1) Robotics

Reinforcement Learning (RL) is widely used in the field of Robotics. In Robotics, the environment can be a simulation or a real-world scenario. Let’s see some of the areas where it is applied.

- Autonomous Navigation: RL algorithms can be used to train robots to navigate from one location to a target location while avoiding obstacles in the environment.

- Manipulation Tasks: We can train robots to perform tasks such as grasping objects, putting them in specific locations, or stacking blocks.

- Aerial Robots: RL algorithms have been used to control the flight of quadrotors, allowing them to perform aerial acrobatics, fly autonomously, or perform tasks such as search and rescue.

- Robotics in Manufacturing: RL can be used to optimize production processes by controlling the movement of robots in a factory.

- Human-robot Interaction: RL can be used to learn a policy that helps a robot interact well with people by making decisions such as whether to move closer to or further from a person or how to respond to different gestures.

2) Drones

Reinforcement learning (RL) is widely used in the control of drones, both for research purposes and for practical applications.

Some common ways RL is used in drones include:

- Autonomous flight: RL algorithms can be used to train drones to fly autonomously, navigate to specific locations, avoid obstacles, and perform tasks such as search and rescue.

- Flight control: RL can be used to learn control policies that stabilize a drone’s flight, making it more robust to external disturbances.

- Trajectory optimization: RL algorithms can be used to optimize the trajectory of drones, allowing them to fly more efficiently and conserve energy.

- Motion planning: RL can be used to plan the motion of drones in real time, taking into account obstacles and other constraints in the environment.

- Task allocation: RL can be used to divide tasks among multiple drones, allowing them to work together efficiently to complete a common goal.

In these examples, the drone’s environment could be a simulated or real-world scenario, and the state could include information such as the drone’s position, orientation, velocity, and so on. The actions taken by the drone could include commands to control its motors and other actuators, and the reward signal could be designed to reflect the goals of the task the drone is performing.

As with other applications of RL in robotics, the use of RL in drones is challenging and requires careful consideration of the design of the reward signal, the simulation or real-world scenario, and the algorithm used to learn the policy.


Concluding Thoughts…

Reinforcement learning (RL) is a promising area of artificial intelligence and machine learning that has the potential to revolutionize many fields and industries. RL algorithms enable agents to learn from experience, optimizing their behavior over time to achieve a desired goal. Applications of RL are wide-ranging, from controlling robots and drones to optimizing resource allocation, game playing, and human-computer interaction.

In conclusion, RL is a field with great potential, and it will be exciting to see how it continues to evolve and what new applications emerge in the future. But no matter what, I will always be here to explain all advancements as simply as possible just for you. Good Luck!
