Transfer Learning: Trending and Hottest Topic in NLP

Last updated: Sep 10, 2025

Have you ever wondered how chatbots like ChatGPT, voice assistants like Alexa, or search engines understand and respond so naturally? The answer lies in one of the hottest breakthroughs in machine learning—Transfer Learning.

It has revolutionized how we train models for Natural Language Processing (NLP) by dramatically reducing time, cost, and data requirements.

In this blog, we’ll explore Transfer Learning in NLP, its rise through pre-trained transformer models like BERT, RoBERTa, and GPT-2, and how they continue to evolve and empower applications like sentiment analysis, entity recognition, and question answering.

Table of contents

- What is Natural Language Processing?

- What is Transfer Learning?

- Why Use Transfer Learning?

- Key Pretrained Transformer Models

  - BERT – Bidirectional Encoder Representations from Transformers

  - RoBERTa – Robustly Optimized BERT Approach

  - GPT-2 and Beyond

- Fine-Tuning Methods in Transfer Learning

- Real-World NLP Tasks Using Transfer Learning

  - Sentiment Analysis

  - Named Entity Recognition (NER)

- The Future of Transfer Learning in NLP

- Conclusion

What is Natural Language Processing?

Natural language processing (NLP) is a branch of Artificial Intelligence (AI) that gives computers the ability to understand text and spoken words in the same way human beings can.

NLP resolves ambiguity in language and adds useful numeric structure to text, which supports applications such as speech recognition and text analytics. Transfer learning is one of the most actively discussed topics in NLP, and it offers an efficient way to train machine learning models.

What is Transfer Learning?

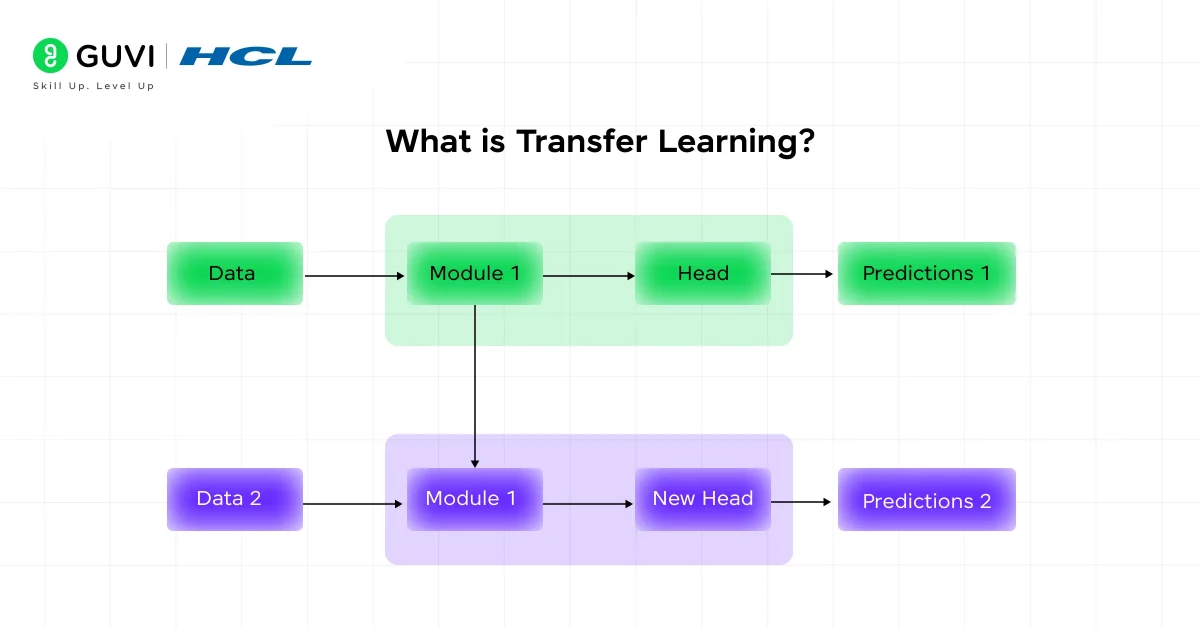

Transfer Learning is a technique where a model trained on a large dataset (source task/domain) is reused or adapted to perform a different but related task (target domain/task). Instead of training from scratch, we build upon the knowledge the model has already learned, often improving performance with less data.

As the picture above shows, transfer learning reuses knowledge learned on a previous task or domain for a new one. Formally, given a source domain and a corresponding source task, together with a target domain and a target task, the objective of transfer learning is to learn the target conditional probability distribution in the target domain using the information gained from the source domain, where source domain ≠ target domain or source task ≠ target task.
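The formal definition can also be written compactly. The notation below follows a common convention in the transfer learning literature; the symbols themselves are assumptions for illustration and do not appear in the text above. A domain is taken to be a feature space with a marginal distribution, and a task is a label space with a conditional distribution.

```latex
\[
\mathcal{D} = \{\mathcal{X},\, P(X)\}, \qquad
\mathcal{T} = \{\mathcal{Y},\, P(Y \mid X)\}
\]
\[
\text{Given } (\mathcal{D}_S, \mathcal{T}_S) \text{ and } (\mathcal{D}_T, \mathcal{T}_T),
\text{ learn } P(Y_T \mid X_T) \text{ in } \mathcal{D}_T
\text{ using knowledge from } \mathcal{D}_S \text{ and } \mathcal{T}_S,
\]
\[
\text{where } \mathcal{D}_S \neq \mathcal{D}_T \ \text{ or } \ \mathcal{T}_S \neq \mathcal{T}_T .
\]
```

For example, a model pre-trained on general web text and then adapted to classify medical notes has a different source and target domain, while a language model adapted to sentiment classification has a different source and target task.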

Why Use Transfer Learning?

- Reduces training time

- Works well with limited labeled data

- Leverages general knowledge from large corpora

- Achieves state-of-the-art results across multiple tasks

Especially in NLP, transfer learning forms the basis of most applications you see in production today.

Key Pretrained Transformer Models

Many transformer-based models are available for different NLP tasks, but the most important ones are:

1. BERT – Bidirectional Encoder Representations from Transformers

Developed by Google, BERT learns deep bidirectional representations by conditioning on both left and right context in all layers. It laid the foundation for modern NLP architectures.

BERT Base:

- Layers (L): 12

- Hidden Size (H): 768

- Attention Heads (A): 12

- Parameters: 110M

BERT Large:

- Layers (L): 24

- Hidden Size (H): 1024

- Attention Heads (A): 16

- Parameters: 340M
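The parameter counts above can be roughly reproduced from L and H. The sketch below is a back-of-the-envelope calculation, not an official formula. It assumes a WordPiece vocabulary of 30,522 tokens, 512 position embeddings, 2 segment types, a feed-forward size of 4 × H, and a final pooling layer, none of which are stated in this article. The function name `bert_param_count` is hypothetical.

```python
def bert_param_count(num_layers, hidden, vocab_size=30522,
                     max_positions=512, type_vocab=2):
    """Approximate encoder parameter count (assumed defaults, see text)."""
    ffn = 4 * hidden            # assumed feed-forward (intermediate) size
    layer_norm = 2 * hidden     # scale and bias of one LayerNorm

    # Token, position, and segment embeddings plus one LayerNorm.
    embeddings = (vocab_size + max_positions + type_vocab) * hidden + layer_norm

    # Query, key, value, and output projections (weights + biases) plus LayerNorm.
    attention = 4 * (hidden * hidden + hidden) + layer_norm

    # Two dense layers (hidden -> ffn -> hidden) plus LayerNorm.
    feed_forward = (hidden * ffn + ffn) + (ffn * hidden + hidden) + layer_norm

    # Dense pooling layer applied to the first token's representation.
    pooler = hidden * hidden + hidden

    return embeddings + num_layers * (attention + feed_forward) + pooler


print(bert_param_count(12, 768))    # 109482240, about 110M
print(bert_param_count(24, 1024))   # 335141888, close to the quoted 340M
```

The number of attention heads (A) does not appear in the calculation because the heads split the hidden size between them rather than adding new weights.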

Latest Update (2025): BERT is still widely used, often fine-tuned for task-specific applications or as a baseline for comparison.

2. RoBERTa – Robustly Optimized BERT Approach

Developed by Facebook AI (now Meta AI), RoBERTa improves on BERT by:

- Training on 160GB of text (about 10x the data used for BERT)

- Using dynamic masking

- Removing next-sentence prediction

- Increasing batch size and training time
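Dynamic masking is easiest to see in a small example. The sketch below is a simplified illustration, not RoBERTa's actual preprocessing code: it always substitutes a `[MASK]` token, whereas the real recipe sometimes keeps the original token or swaps in a random one. The function name `dynamic_mask` and the sample sentence are hypothetical.

```python
import random

MASK = "[MASK]"


def dynamic_mask(tokens, mask_prob=0.15, rng=random):
    """Return a copy of tokens with a freshly sampled set of positions masked."""
    if not tokens:
        return []
    n_to_mask = max(1, round(len(tokens) * mask_prob))
    positions = set(rng.sample(range(len(tokens)), n_to_mask))
    return [MASK if i in positions else tok for i, tok in enumerate(tokens)]


tokens = "transfer learning lets a model reuse what it already knows".split()
rng = random.Random(0)
for epoch in range(3):
    # A new mask is drawn every time the sentence is seen, instead of
    # reusing one mask that was fixed during preprocessing.
    print(epoch, " ".join(dynamic_mask(tokens, rng=rng)))
```

With static masking, the masked positions are chosen once during preprocessing, so the model sees the same masked version of a sentence repeatedly. Drawing the mask on the fly gives it a different prediction problem on each pass.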

Latest Update (2025): RoBERTa continues to be a preferred choice for text classification and QA, often outperforming vanilla BERT in benchmarks.

3. GPT-2 and Beyond

OpenAI’s GPT-2, released in 2019, set the tone for generative NLP by showing how a transformer-based model could:

- Translate

- Summarize

- Generate human-like content

GPT-2 (1.5B parameters) was followed by:

- GPT-3 (175B) – API only

- GPT-4 (2023) – Multi-modal with text and image input

- GPT-4o (2024) – “Omnimodal” with text, vision, and audio integration

Latest Update (2025): GPT-4o powers voice assistants, reasoning engines, and customer support agents. Although GPT-4o itself is closed-source, open-source alternatives like Mistral, LLaMA, and Falcon can be adapted with the same transfer learning approaches and offer comparable capabilities on many tasks.

Fine-Tuning Methods in Transfer Learning

There are three main ways to adapt a pre-trained model:

- Train the Entire Architecture: Retrain all layers on new data. Best for domain-specific NLP tasks.

- Freeze Base, Train Top Layers: Retain original representations, train a few final layers. Saves time.

- Freeze All Layers, Add Classifier: Useful for fast experimentation with small datasets.
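The third option can be sketched without any deep learning library. In the toy example below, a small table of fixed word vectors stands in for the frozen pre-trained base, and a logistic regression head is the only part that gets trained. The vectors, sentences, and function names are all hypothetical and chosen purely for illustration; a real setup would freeze the weights of a model such as BERT instead.

```python
import math

# Stand-in for a pre-trained encoder: fixed vectors that are never updated.
FROZEN_EMBEDDINGS = {
    "great": [1.0, 0.2],
    "good": [0.8, 0.1],
    "bad": [-0.9, 0.3],
    "awful": [-1.0, 0.1],
    "movie": [0.0, 1.0],
}


def encode(text):
    """Frozen base: average the fixed vectors of the known words."""
    vecs = [FROZEN_EMBEDDINGS[w] for w in text.lower().split()
            if w in FROZEN_EMBEDDINGS]
    if not vecs:
        return [0.0, 0.0]
    return [sum(col) / len(vecs) for col in zip(*vecs)]


def predict(head, features):
    """Trainable head: logistic regression on top of the frozen features."""
    z = sum(w * x for w, x in zip(head["w"], features)) + head["b"]
    return 1.0 / (1.0 + math.exp(-z))


def train_head(examples, epochs=200, lr=0.5):
    """Update only the head's weights; the embeddings are left untouched."""
    head = {"w": [0.0, 0.0], "b": 0.0}
    for _ in range(epochs):
        for text, label in examples:
            x = encode(text)
            error = predict(head, x) - label  # log-loss gradient w.r.t. z
            head["w"] = [w - lr * error * xi for w, xi in zip(head["w"], x)]
            head["b"] -= lr * error
    return head


examples = [("great movie", 1), ("good movie", 1),
            ("bad movie", 0), ("awful movie", 0)]
head = train_head(examples)
print(round(predict(head, encode("great movie")), 2))
print(round(predict(head, encode("awful movie")), 2))
```

The second option differs only in that a few of the top layers of the base would also receive gradient updates, and the first option updates everything.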

Real-World NLP Tasks Using Transfer Learning

1. Sentiment Analysis

Classifies text as positive, negative, or neutral. Used in social media monitoring, customer feedback, and product reviews.

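Fine-tuned BERT-based sentiment models are reported to reach around 95% accuracy on well-studied benchmarks such as IMDB Reviews, though results vary with the dataset (for example, Amazon Product Data or Twitter sentiment corpora) and with the number of sentiment classes.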

2. Named Entity Recognition (NER)

Identifies entities like:

- Persons

- Locations

- Organizations

- Time/date, numerical measures, and more

BERT and RoBERTa models fine-tuned with a BIO tagging scheme (B for the beginning of an entity, I for inside, O for outside) remain widely used, strong baselines for NER in 2025.
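A BIO-tagged model outputs one tag per token, so a small decoding step is needed to turn those tags into entities. The sketch below shows one straightforward way to do that. The function name `bio_to_spans`, the example sentence, and the tag names (`PER`, `ORG`, `LOC`) are illustrative assumptions, not the output of any particular model.

```python
def bio_to_spans(tokens, tags):
    """Group BIO-tagged tokens into (entity_type, text) spans."""
    spans = []
    current_type, current_tokens = None, []
    for token, tag in zip(tokens, tags):
        if tag.startswith("B-"):
            # A new entity begins; close any entity that was still open.
            if current_tokens:
                spans.append((current_type, " ".join(current_tokens)))
            current_type, current_tokens = tag[2:], [token]
        elif tag.startswith("I-") and current_type == tag[2:]:
            # Continue the entity that is currently open.
            current_tokens.append(token)
        else:
            # "O", or an I- tag that does not continue the open entity
            # (such stray I- tokens are dropped in this simple version).
            if current_tokens:
                spans.append((current_type, " ".join(current_tokens)))
            current_type, current_tokens = None, []
    if current_tokens:
        spans.append((current_type, " ".join(current_tokens)))
    return spans


tokens = ["Sundar", "Pichai", "works", "at", "Google", "in", "California"]
tags = ["B-PER", "I-PER", "O", "O", "B-ORG", "O", "B-LOC"]
print(bio_to_spans(tokens, tags))
# [('PER', 'Sundar Pichai'), ('ORG', 'Google'), ('LOC', 'California')]
```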

The Future of Transfer Learning in NLP

- Multi-modal learning: Models like GPT-4o integrate text, image, and speech data.

- Continual Learning: Adapting without forgetting previous tasks.

- Parameter-efficient fine-tuning: Using LoRA, adapters, and prefix-tuning to fine-tune massive models faster.

- Edge deployment: Smaller distilled models (e.g., DistilBERT) enable NLP on devices.
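To make the parameter-efficient fine-tuning point concrete, the arithmetic below compares fully fine-tuning one weight matrix with training a LoRA-style low-rank update for it. LoRA keeps the original matrix frozen and learns two small factors whose product has the same shape. The hidden size of 768 is BERT Base's, as listed earlier in this article; the rank of 8 is an illustrative choice, and the function name is hypothetical.

```python
def lora_trainable_params(d_out, d_in, rank):
    """Parameters in the two low-rank factors: (d_out x rank) and (rank x d_in)."""
    return rank * (d_out + d_in)


d = 768                      # hidden size of BERT Base
full = d * d                 # weights updated by full fine-tuning of one matrix
lora = lora_trainable_params(d, d, rank=8)

print(full, lora, f"{lora / full:.2%}")   # 589824 12288 2.08%
```

In this setting the low-rank update trains about 2% as many weights for that matrix, which is why such methods make it practical to adapt very large models on modest hardware.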


Conclusion

Transfer learning has completely transformed NLP—from time-consuming model building to plug-and-play intelligence. Whether it’s classifying a tweet, answering a customer query, or summarizing a legal document, the magic lies in leveraging powerful pre-trained models like BERT, RoBERTa, and GPT.

With the advancement of transformer architectures and fine-tuning techniques, NLP is only becoming more accurate, accessible, and impactful in 2025 and beyond.

If you’re looking to build an NLP application today, remember: you don’t need to start from scratch; start with transfer learning.
