What are Data Science Models? Types, Techniques, Process

Last updated: Sep 21, 2024 · 5 min read

In the fast-evolving world of technology, data science models are pivotal in transforming data into actionable insights, powering decisions across industries from healthcare to finance.

These models are the backbone of understanding complex data entities, employing various data modeling techniques and methodologies to organize data elements in a meaningful way.

The significance of these models lies in their ability to represent both physical data structures and abstract concepts, and in their capability to predict future trends and behaviors, which makes them indispensable in today’s data-driven environment.

This article explores data science models: the main types of data models, key techniques in data science modeling, and the steps involved in the data science modeling process.

Table of contents

- What are Data Science Models?

- A) Understanding the Basics

- B) Data Collection and Preparation

- C) Exploratory Data Analysis (EDA)

- D) Model Selection and Training

- E) Model Evaluation and Refinement

- F) Deployment

- What is Data Modeling?

- Types of Data Science Models

- 1) Conceptual Models

- 2) Logical Models

- 3) Physical Models

- Differentiation Table: Model Types

- Key Techniques in Data Science Modeling

- 1) Hierarchical Models

- 2) Network Models

- 3) Graph Models

- 4) ER Models

- 5) Dimensional Models

- Steps in the Data Science Modeling Process

- Step 1: Identification of Entities

- Step 2: Identification of Key Properties

- Step 3: Identification of Relationships

- Step 4: Mapping Attributes

- Step 5: Assign

- Concluding Thoughts...

- FAQs

- What are the different types of data science models?

- What are the different types of data modeling techniques?

- What are the 4 types of data in data science?

- What are modeling techniques?

What are Data Science Models?

Data science models are essential tools that transform raw data into insightful, actionable information. They play a critical role in various industries by predicting outcomes and optimizing solutions based on data.

Understanding these models involves grasping their fundamental steps and applications, which are designed to be accessible even to those new to the field of data science.

A) Understanding the Basics

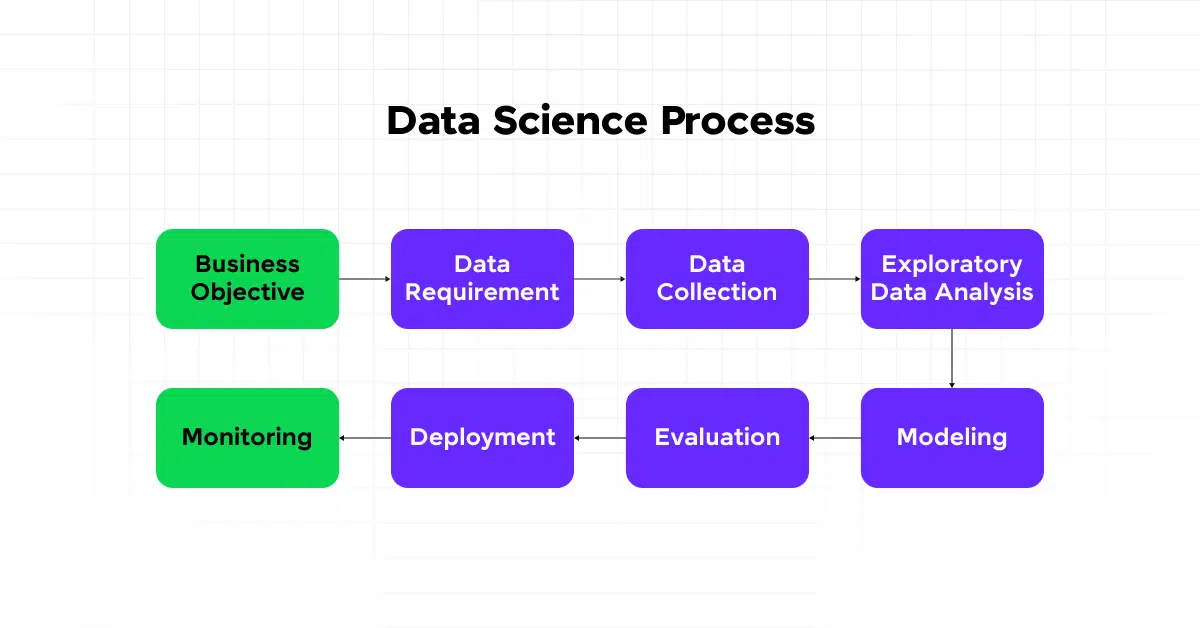

Data science modeling is a structured process that begins with a clear definition of the problem you aim to solve.

This could range from predicting customer churn to enhancing product recommendations or identifying patterns within data sets. Initially, you need to clarify your objectives, which will guide your choice of data, algorithms, and evaluation metrics.

B) Data Collection and Preparation

The next step involves collecting data relevant to your defined problem. This data might come from internal company databases, publicly available datasets, or external sources.

Ensuring the sufficiency and relevance of your data is crucial for effective model training. Following collection, data cleaning is performed to prepare your dataset for modeling.

This includes handling missing values, removing duplicates, and correcting errors to ensure the reliability of your model’s predictions.
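The cleaning tasks above can be sketched in plain Python. This is a minimal illustration, not a prescribed pipeline: the records, the field names, the 0 to 120 age range, and the choice of median imputation are all assumptions made for the example. In practice a library such as Pandas is typically used for this work.

```python
from statistics import median

# Hypothetical raw records; field names and values are illustrative assumptions.
raw_records = [
    {"customer_id": 1, "age": 34, "country": "IN"},
    {"customer_id": 2, "age": None, "country": "in"},  # missing age, inconsistent case
    {"customer_id": 2, "age": None, "country": "in"},  # exact duplicate of the row above
    {"customer_id": 3, "age": -5, "country": "US"},    # impossible age (data-entry error)
    {"customer_id": 4, "age": 52, "country": "US"},
]


def clean(records):
    """Remove duplicates, correct obvious errors, and fill missing ages."""
    # 1) Remove exact duplicates while preserving the original order.
    seen = set()
    unique = []
    for rec in records:
        key = tuple(sorted(rec.items()))
        if key not in seen:
            seen.add(key)
            unique.append(dict(rec))

    # 2) Correct errors: normalise country codes, treat impossible ages as missing.
    for rec in unique:
        rec["country"] = rec["country"].upper()
        if rec["age"] is not None and not (0 <= rec["age"] <= 120):
            rec["age"] = None

    # 3) Handle missing values: fill missing ages with the median of the known ages.
    known_ages = [rec["age"] for rec in unique if rec["age"] is not None]
    fill_value = median(known_ages)
    for rec in unique:
        if rec["age"] is None:
            rec["age"] = fill_value
    return unique


cleaned = clean(raw_records)
for row in cleaned:
    print(row)
```

Median imputation is only one option; dropping incomplete rows or using a model-based imputation may suit other datasets better.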

C) Exploratory Data Analysis (EDA)

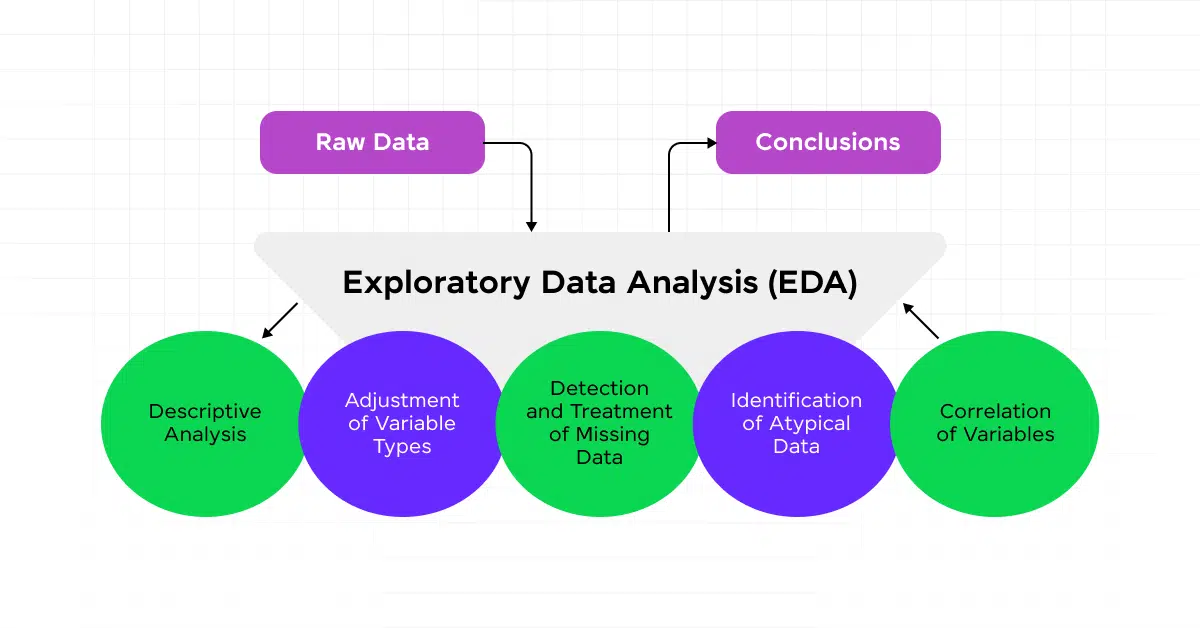

Exploratory data analysis (EDA) summarizes the main characteristics of your data. Visualizations and statistical methods help uncover patterns, anomalies, and relationships between variables.

This step is vital for understanding the underlying structure of your data and informing further modeling decisions.
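As a rough sketch of the statistical side of EDA, the snippet below computes summary statistics, a Pearson correlation between two variables, and a simple outlier check. The sample values, the variable names, and the 1.5 × IQR outlier rule are assumptions chosen for illustration.

```python
from statistics import mean, median, quantiles, stdev

# Hypothetical sample: monthly spend and support tickets for six customers.
monthly_spend = [20.0, 35.0, 50.0, 65.0, 80.0, 400.0]
support_tickets = [5, 4, 3, 2, 1, 0]


def summarize(values):
    """Return basic descriptive statistics for one numeric variable."""
    return {
        "min": min(values),
        "max": max(values),
        "mean": mean(values),
        "median": median(values),
        "stdev": stdev(values),
    }


def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def iqr_outliers(values):
    """Flag values lying more than 1.5 * IQR outside the quartiles."""
    q1, _, q3 = quantiles(values, n=4)
    spread = q3 - q1
    low, high = q1 - 1.5 * spread, q3 + 1.5 * spread
    return [v for v in values if v < low or v > high]


print(summarize(monthly_spend))
print("correlation:", pearson(monthly_spend, support_tickets))
print("outliers:", iqr_outliers(monthly_spend))
```

Here the large gap between mean and median, together with the flagged value, hints at a skewed distribution that may need attention before modeling.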

D) Model Selection and Training

Choosing the right model depends on the type of problem and the nature of your data. Beginners might start with simpler models like linear regression or decision trees, while more complex problems might require advanced models like neural networks.

Training involves feeding your model the training portion of the dataset, from which the model learns by adjusting its parameters to minimize errors.
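To make "adjusting parameters to minimize errors" concrete, here is a minimal sketch of training a one-variable linear regression with gradient descent. The synthetic data (generated from y = 2x + 1), the learning rate, and the number of epochs are assumptions picked for the example; real projects usually rely on a library implementation.

```python
# Synthetic training data generated from y = 2x + 1 (an assumption for this sketch).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]


def train_linear_model(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y = w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction error for every training example under the current parameters.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Step against the gradient to reduce the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


slope, intercept = train_linear_model(xs, ys)
print(f"learned model: y = {slope:.3f} * x + {intercept:.3f}")
```

Each pass nudges the slope and intercept in the direction that lowers the error, which is the same idea that more advanced models apply to many more parameters.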

E) Model Evaluation and Refinement

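After training, your model’s performance is assessed on a held-out testing set, a portion of the data that was kept aside and not used during training. For classification problems, common evaluation metrics include accuracy, precision, recall, and the F1 score.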

These metrics help determine how well your model will perform on unseen data. Based on the evaluation, you might need to refine your model by tuning hyperparameters or revisiting data preparation steps for improvement.
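The metrics named above can be computed directly from true and predicted labels. The sketch below assumes a binary classification task where 1 is the positive class; the label lists are made up for illustration.

```python
def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical test-set labels and model predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Precision and recall matter most when the classes are imbalanced, because accuracy alone can look high even when the model misses most positive cases.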

F) Deployment

Once satisfied with your model’s performance, the final step is to deploy it for real-world applications. This could involve integrating the model into business applications or using it for strategic decision-making within your organization.

By following these steps, data science models will enable you to make informed decisions and drive strategic actions based on data-driven insights. This process simplifies data science modeling and provides a clear, stepwise guide that anyone with a basic understanding of data science can follow.

Before moving on to the next section, make sure you have a good grip on data science essentials such as Python, MongoDB, Pandas, NumPy, Tableau, and Power BI. If you are looking for a detailed course on Data Science, you can join GUVI’s Data Science Course with Placement Assistance. You’ll also learn about the trending tools and technologies and work on some real-time projects.

Additionally, if you would like to explore Python through a Self-paced course, try GUVI’s Python certification course.

What is Data Modeling?

Data modeling is a crucial step in the data science process, providing a structured method to define and analyze data requirements needed to support business processes.

This process involves three distinct levels, conceptual, logical, and physical, each serving a unique purpose in organizing and manipulating data. Each level is discussed below.

Types of Data Science Models

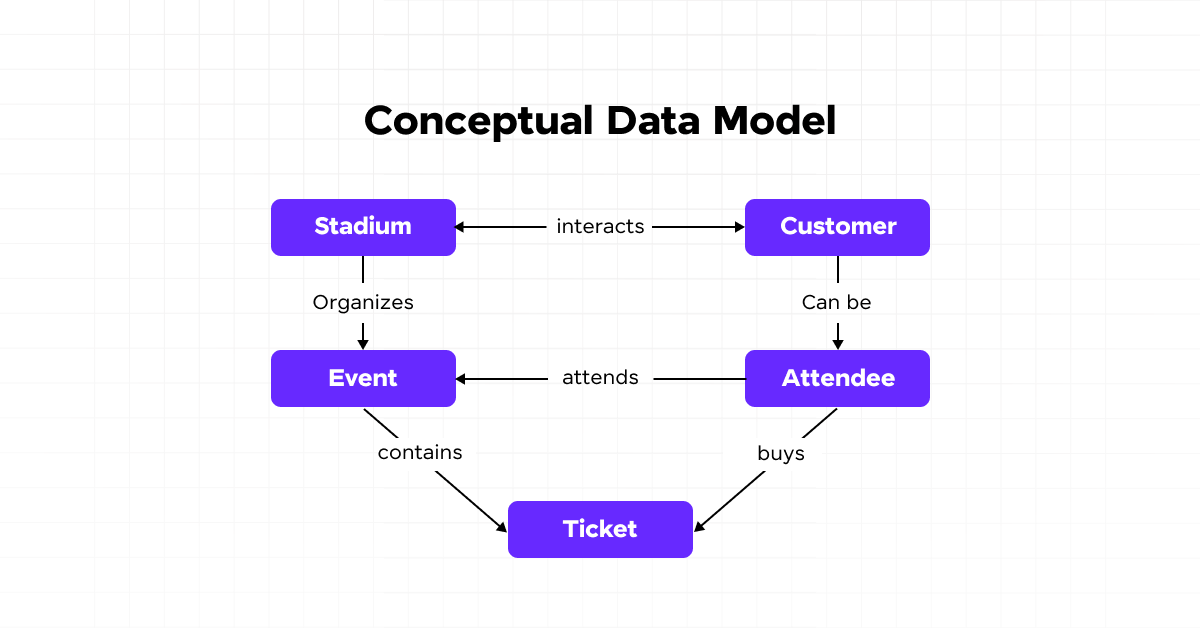

1) Conceptual Models

Conceptual data models are high-level, abstract representations that focus on what data is required and how it is related rather than how it is processed or stored. These models serve as a bridge between business stakeholders and technical implementers by capturing the core concepts and business rules.

For instance, in a conceptual model for a retail business, entities like ‘Customers’, ‘Orders’, and ‘Products’ are identified along with their relationships without delving into database specifics.

This model helps visualize the business requirements in a simplified manner, making it easier for everyone to understand the data needs of the organization.

2) Logical Models

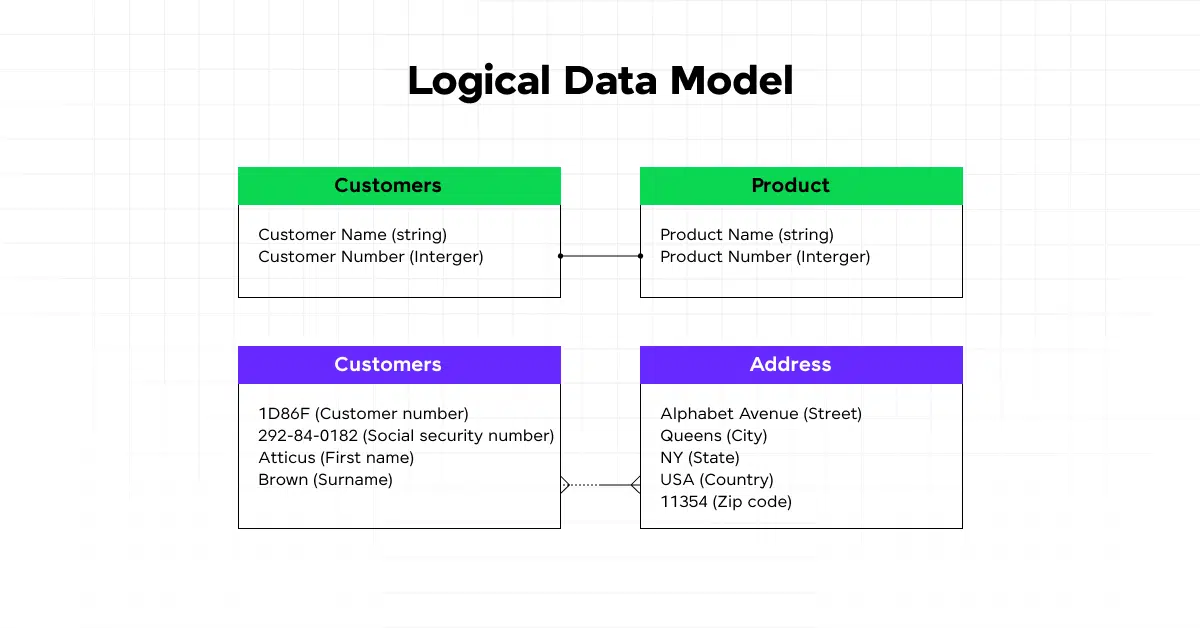

Logical data models provide a more detailed explanation, showing how data is structured within the system. These models define the entities, attributes, keys, and relationships but are independent of database technologies.

For example, a logical model might detail attributes of a ‘Customer’ entity like name, address, and order history, and describe the relationships to other entities such as ‘Orders’.

Logical models are crucial for aligning business processes with actual data structures and are often used as a foundation for developing physical data models.
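A logical model can be sketched in code without committing to any database technology. The example below uses Python dataclasses; the attribute names, the `place_order` helper, and the sample values are hypothetical and only illustrate entities, attributes, keys, and a one-to-many relationship.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Order:
    order_id: int     # key that uniquely identifies an order
    customer_id: int  # reference back to the owning Customer
    total: float


@dataclass
class Customer:
    customer_id: int  # key that uniquely identifies a customer
    name: str
    address: str
    # One customer can have many orders (one-to-many relationship).
    orders: List[Order] = field(default_factory=list)

    def place_order(self, order_id: int, total: float) -> Order:
        """Create an order linked to this customer and record it in the history."""
        order = Order(order_id=order_id, customer_id=self.customer_id, total=total)
        self.orders.append(order)
        return order


customer = Customer(customer_id=1, name="Asha", address="Chennai")
first_order = customer.place_order(order_id=100, total=25.0)
print(customer)
```

Nothing here says how the data is stored; that decision belongs to the physical model.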

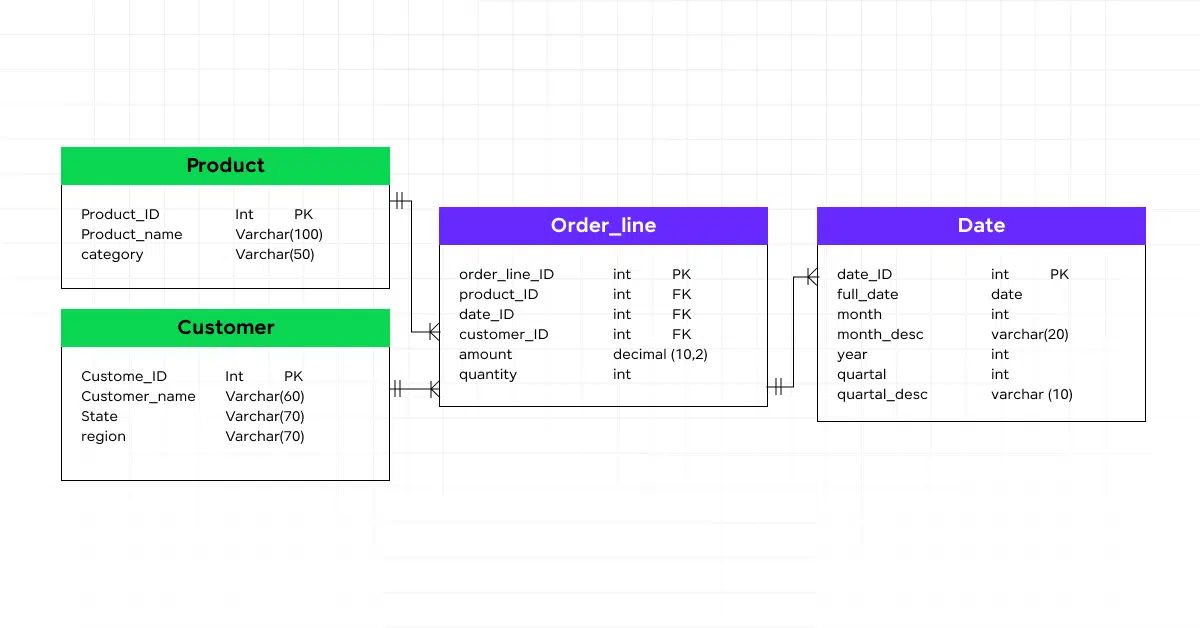

3) Physical Models

Physical data models specify how the logical model will be implemented in a specific database system. This includes detailed definitions of tables, columns, data types, keys, and relationships.

A physical model for a customer management system, for instance, would detail how customer data is stored in tables, how tables relate to one another, and how foreign keys are used to link ‘Customers’ to ‘Orders’.

This model is essential for database administrators and developers as it provides a blueprint for building the database structure.
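As a small sketch of a physical model, the snippet below creates ‘Customers’ and ‘Orders’ tables in an in-memory SQLite database using Python's built-in `sqlite3` module. The table and column names, the data types, and the helper functions are assumptions for illustration; a production schema would depend on the chosen database system.

```python
import sqlite3


def build_customer_db():
    """Create an in-memory database with customers and orders tables."""
    conn = sqlite3.connect(":memory:")
    # SQLite enforces foreign keys only when this pragma is switched on.
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript(
        """
        CREATE TABLE customers (
            customer_id INTEGER PRIMARY KEY,
            name        TEXT NOT NULL,
            address     TEXT
        );
        CREATE TABLE orders (
            order_id    INTEGER PRIMARY KEY,
            customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
            total       REAL NOT NULL
        );
        """
    )
    conn.execute("INSERT INTO customers VALUES (1, 'Asha', 'Chennai')")
    conn.execute("INSERT INTO orders VALUES (100, 1, 25.0)")
    return conn


def orphan_order_rejected(conn):
    """Return True if an order pointing at a missing customer is refused."""
    try:
        conn.execute("INSERT INTO orders VALUES (101, 999, 5.0)")
    except sqlite3.IntegrityError:
        return True
    return False


conn = build_customer_db()
print("orphan order rejected:", orphan_order_rejected(conn))
```

The foreign key on `orders.customer_id` is what links ‘Orders’ to ‘Customers’ and lets the database reject orders for customers that do not exist.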

Differentiation Table: Model Types

| Model Type | Focus | Usage | Example |
|---|---|---|---|
| Conceptual | High-level business concepts | Initial planning and communication | Entities like ‘Customers’ and ‘Products’ |
| Logical | Data structure and relationships | Foundation for physical model | Attributes and relationships of ‘Customer’ |
| Physical | Database implementation details | Actual database construction | SQL tables, columns, relationships |

By understanding these different types of data models, you can better appreciate how data science models are constructed from a high-level concept to a detailed, technical implementation.

Each type plays a critical role in the data management ecosystem, ensuring that data is accurately represented and efficiently handled across various stages of the data modeling process.

Key Techniques in Data Science Modeling

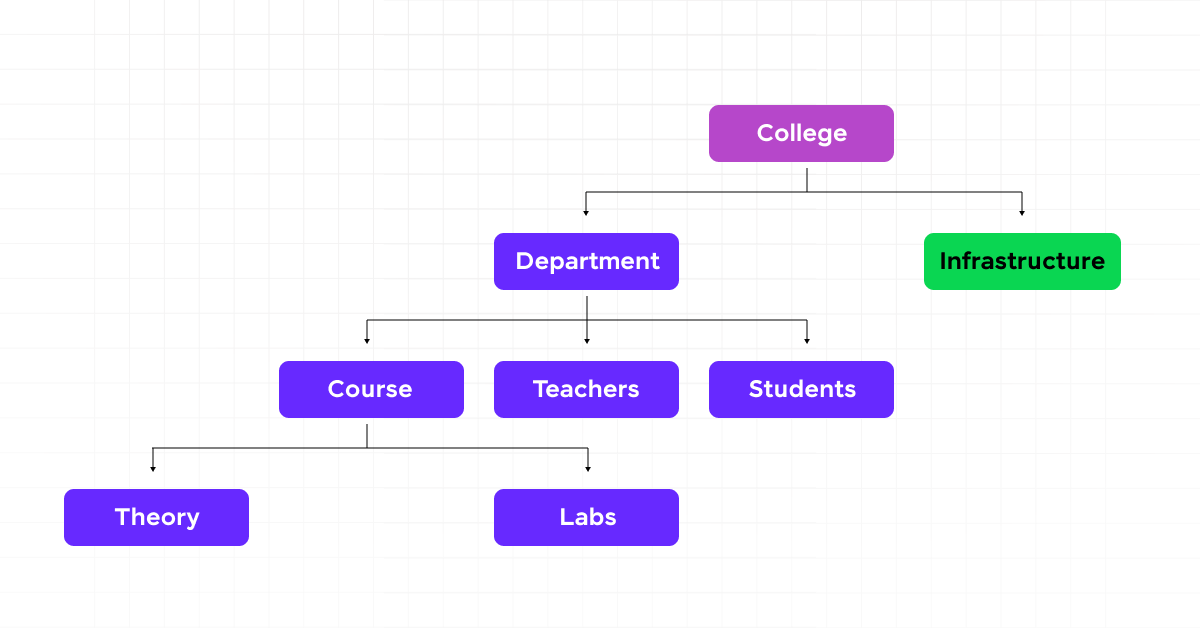

1) Hierarchical Models

In data science modeling, hierarchical models, also known as multilevel models, play a crucial role when dealing with clustered or grouped data. These models account for the fact that samples within the same group are not independent of one another, capturing both fixed effects (effects shared across all groups) and random effects (group-specific deviations).

For example, in educational data, students’ performance might be influenced by both their abilities and the teaching methods of their assigned classes.

Hierarchical modeling allows for partial pooling, which provides a balance between completely pooled models and individual unpooled models, enhancing the robustness and reliability of the model predictions.
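The idea of partial pooling can be illustrated with a simple shrinkage estimate, in which each group's mean is pulled toward the overall mean, and small groups are pulled more strongly. This is only a rough sketch: the class scores are invented, and the weight `k` is a hand-picked assumption, whereas a real multilevel model would estimate the amount of shrinkage from the data.

```python
from statistics import mean

# Hypothetical exam scores grouped by class.
scores_by_class = {
    "class_a": [72, 75, 78, 74, 76, 73, 77, 75],  # many students
    "class_b": [95],                               # a single student
}


def partial_pooling(groups, k=4.0):
    """Shrink each group mean toward the grand mean.

    k is an assumed pseudo-count: larger k means stronger pooling.
    """
    all_values = [v for values in groups.values() for v in values]
    grand_mean = mean(all_values)  # the completely pooled estimate
    estimates = {}
    for name, values in groups.items():
        n = len(values)
        group_mean = mean(values)  # the unpooled estimate
        estimates[name] = (n * group_mean + k * grand_mean) / (n + k)
    return grand_mean, estimates


grand_mean, estimates = partial_pooling(scores_by_class)
print("grand mean:", round(grand_mean, 2))
for name, value in estimates.items():
    print(name, round(value, 2))
```

The single-student class ends up much closer to the overall mean than its raw score, while the large class barely moves, which is the balance between pooled and unpooled estimates described above.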

2) Network Models

Network models represent structured data as records connected by explicit links. They extend the tree-like, parent-child structure of the hierarchical database model by allowing a child record to have more than one parent, so many-to-many relationships can be represented directly.

However, because these links are fixed in the schema, network models can be rigid and harder to change than more flexible designs. They are particularly effective in systems where data integrity and unambiguous relationships are critical.

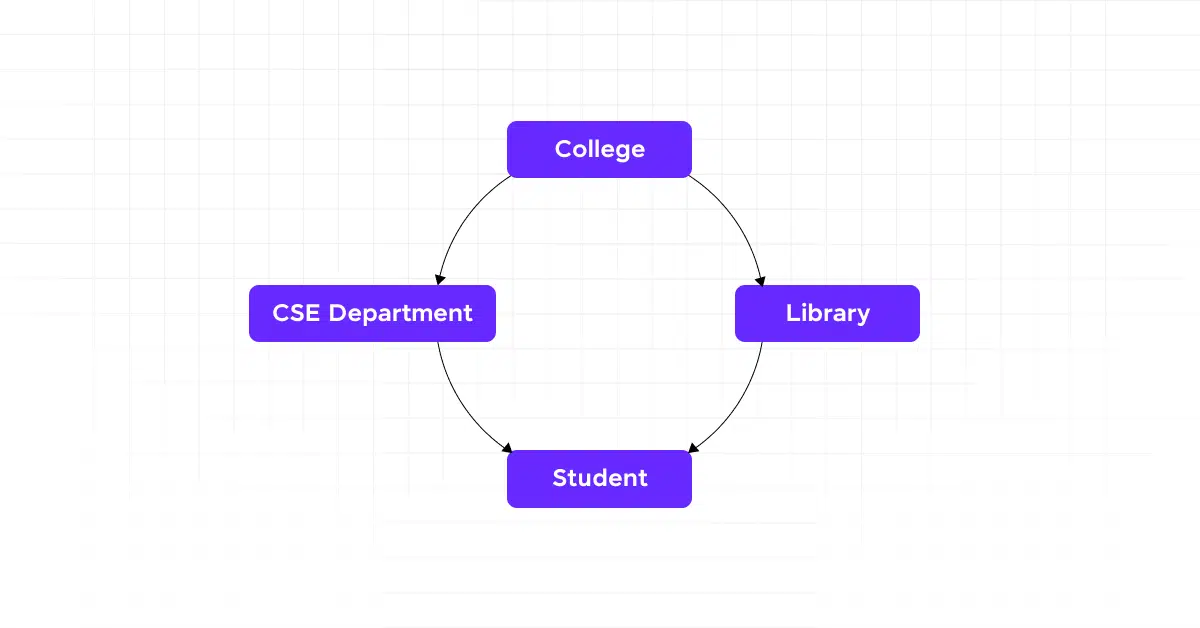

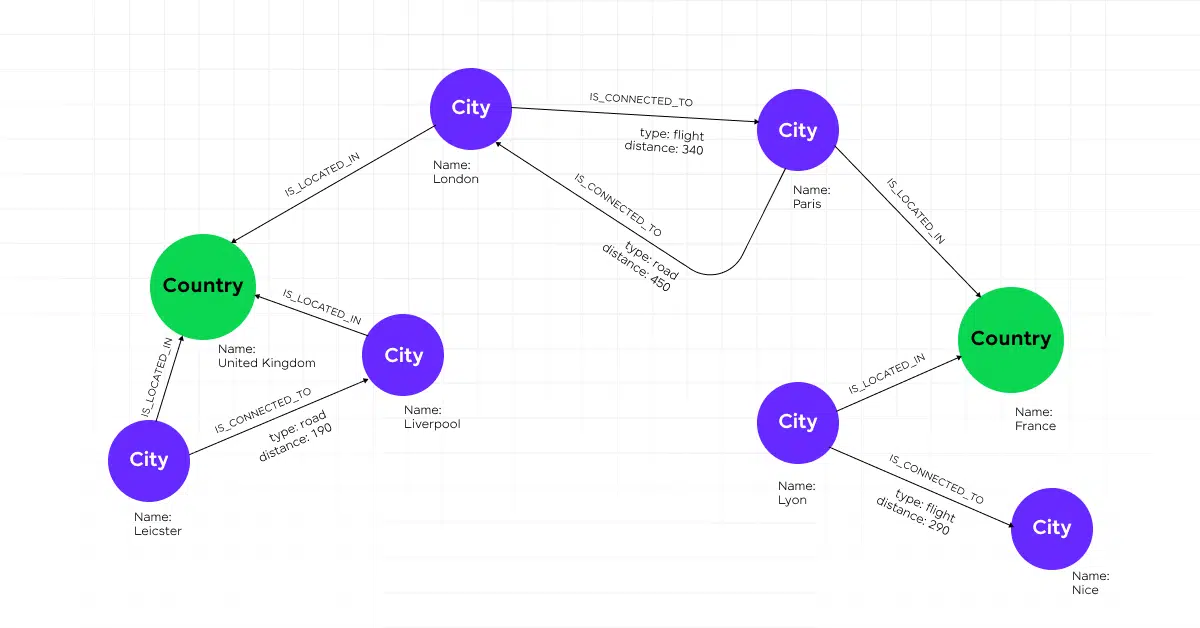

3) Graph Models

Graph models utilize nodes and edges to represent entities and their relationships. This approach is invaluable for analyzing complex relational datasets, such as social networks or biological pathways.

Graph models support various graph types, including directed, undirected, weighted, and unweighted graphs, each suitable for different analytical needs.

By applying graph theory, data scientists can employ algorithms like PageRank or community detection to analyze the data effectively, making graph models a powerful tool for uncovering insights in networked data structures.
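As an illustration of a graph model and one of the algorithms mentioned, the sketch below stores a small directed graph as an adjacency dictionary and ranks its nodes with a basic power-iteration version of PageRank. The "follows" graph, the damping factor of 0.85, and the fixed iteration count are assumptions for the example; graph libraries offer more complete implementations.

```python
def pagerank(graph, damping=0.85, iterations=50):
    """Basic PageRank by power iteration on a directed graph.

    graph maps each node to the list of nodes it links to; every target
    must also appear as a key.
    """
    nodes = list(graph)
    n = len(nodes)
    rank = {node: 1.0 / n for node in nodes}
    for _ in range(iterations):
        # Every node receives a small baseline share of rank.
        new_rank = {node: (1.0 - damping) / n for node in nodes}
        for node, targets in graph.items():
            if targets:
                # Split this node's rank evenly across its outgoing edges.
                share = damping * rank[node] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A node without outgoing edges spreads its rank over all nodes.
                share = damping * rank[node] / n
                for target in nodes:
                    new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical social network: an edge means "follows".
follows = {
    "alice": ["bob", "carol"],
    "bob": ["carol"],
    "carol": ["alice"],
    "dave": ["carol"],
}

ranks = pagerank(follows)
for name, score in sorted(ranks.items(), key=lambda item: -item[1]):
    print(name, round(score, 3))
```

Nodes that are followed by many others, or by well-ranked others, end up with higher scores, which is why this kind of analysis suits networked data.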

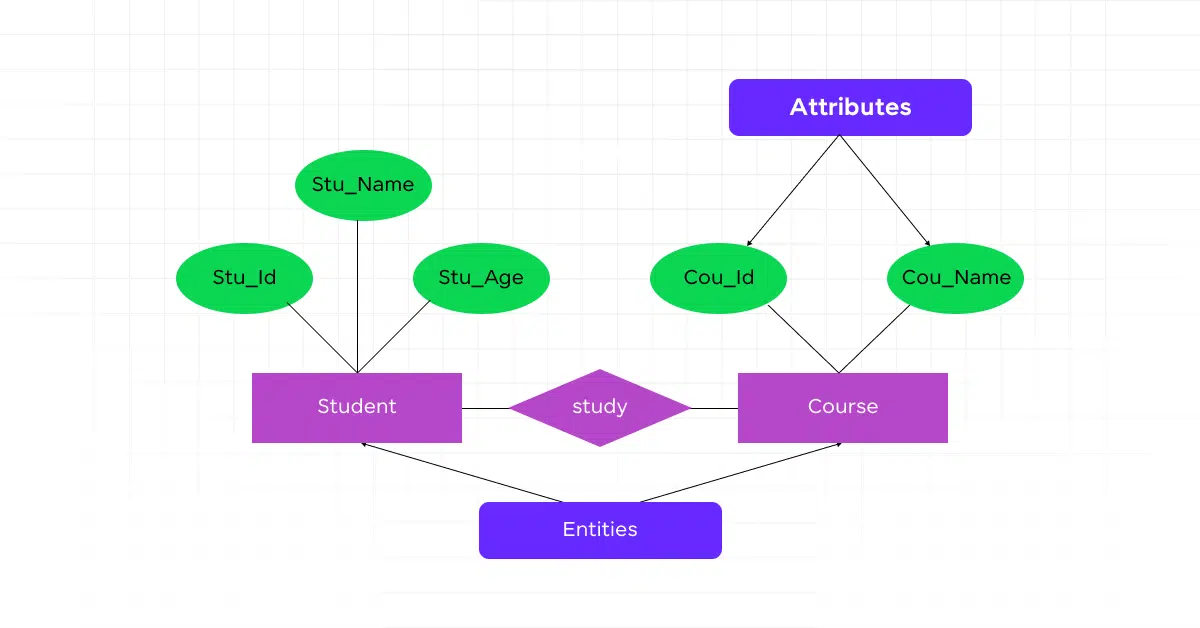

4) ER Models

Entity-relationship (ER) models are foundational in database design, providing a clear diagrammatic representation of entities and their relationships. These models help in visualizing the logical structure of databases, making them easier to understand and manage.

ER diagrams, used in these models, depict entities, attributes, and relationships, facilitating the conceptual design of database schemas. This modeling technique is particularly beneficial for designing databases that accurately reflect complex real-world relationships.

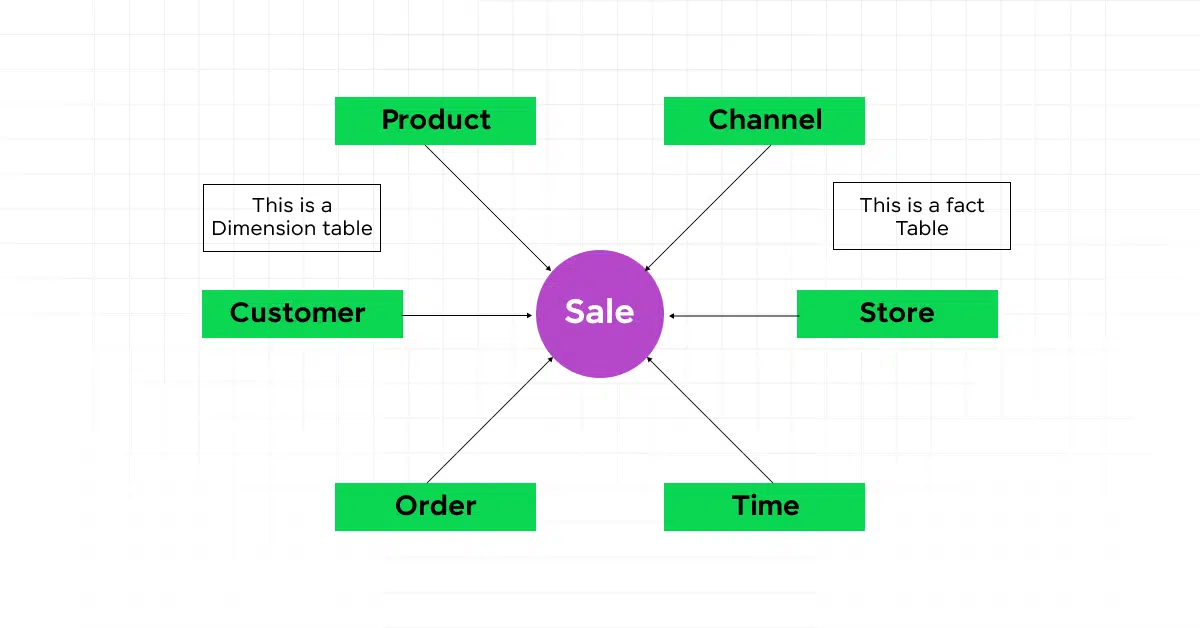

5) Dimensional Models

Dimensional modeling is a technique specifically designed for data warehousing and business intelligence. By organizing data into fact and dimension tables, these models enhance data retrieval and analysis.

Dimensional models typically use a star schema, in which a central fact table references the surrounding dimension tables, to optimize query performance and make data analysis both faster and more intuitive.

This approach is particularly advantageous for users needing to perform complex queries frequently, as it simplifies data structure and speeds up data access.
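A star schema can be sketched with Python's built-in `sqlite3` module. The fact table, the two dimension tables, their columns, and the sample rows below are all hypothetical; the point is only to show how an analytical query joins facts to dimensions and aggregates the result.

```python
import sqlite3


def build_star_schema():
    """Create a tiny in-memory star schema with one fact and two dimension tables."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE dim_product (
            product_key INTEGER PRIMARY KEY, name TEXT, category TEXT
        );
        CREATE TABLE dim_date (
            date_key INTEGER PRIMARY KEY, year INTEGER, month INTEGER
        );
        CREATE TABLE fact_sales (
            product_key INTEGER REFERENCES dim_product(product_key),
            date_key    INTEGER REFERENCES dim_date(date_key),
            quantity    INTEGER,
            revenue     REAL
        );
        """
    )
    conn.executemany(
        "INSERT INTO dim_product VALUES (?, ?, ?)",
        [(1, "Laptop", "Electronics"), (2, "Phone", "Electronics"), (3, "Desk", "Furniture")],
    )
    conn.executemany(
        "INSERT INTO dim_date VALUES (?, ?, ?)",
        [(20240101, 2024, 1), (20240201, 2024, 2)],
    )
    conn.executemany(
        "INSERT INTO fact_sales VALUES (?, ?, ?, ?)",
        [
            (1, 20240101, 2, 2000.0),
            (2, 20240101, 5, 2500.0),
            (3, 20240201, 1, 150.0),
            (1, 20240201, 1, 1000.0),
        ],
    )
    return conn


def revenue_by_category(conn, year):
    """Total revenue per product category for one year."""
    return conn.execute(
        """
        SELECT p.category, SUM(f.revenue)
        FROM fact_sales AS f
        JOIN dim_product AS p ON p.product_key = f.product_key
        JOIN dim_date AS d ON d.date_key = f.date_key
        WHERE d.year = ?
        GROUP BY p.category
        ORDER BY p.category
        """,
        (year,),
    ).fetchall()


star = build_star_schema()
print(revenue_by_category(star, 2024))
```

Measures such as quantity and revenue live in the fact table, while the descriptive attributes used for filtering and grouping live in the dimension tables.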

By incorporating these key techniques in data science modeling, you can address various data analysis challenges, ensuring that your models are both efficient and effective in extracting valuable insights from complex datasets.

Steps in the Data Science Modeling Process

Step 1: Identification of Entities

In the initial phase of data science modeling, you identify the core components of your dataset. These entities are the objects, events, or concepts you need to analyze.

Each entity should be distinct and cohesive, representing a singular aspect of your data. For example, if you’re modeling customer behavior, your entities might include “Customer,” “Transaction,” and “Product.”

Step 2: Identification of Key Properties

Once the entities are defined, the next step is to pinpoint their key properties or attributes. These attributes are unique characteristics that help differentiate one entity from another.

For instance, for the entity “Customer,” attributes could include “Customer ID,” “Name,” and “Contact Information.” This step ensures each entity is clearly defined and distinguishable.

Step 3: Identification of Relationships

Understanding how entities interact with each other is crucial. This step involves defining the relationships between your identified entities.

These relationships help in mapping out how data flows and connects within your system, which is vital for structuring your data model effectively.

For example, the relationship between “Customer” and “Transaction” might be defined by the purchases a customer makes.

Step 4: Mapping Attributes

After establishing entities and their relationships, the next step is to map attributes to these entities. This process ensures that all necessary data points are accounted for and correctly linked to the respective entities.

It’s about assigning data fields and determining how these fields relate across different entities, ensuring the model reflects the real-world use of the data.

Step 5: Assigning Keys and Normalization

The final step involves assigning keys and deciding the degree of normalization needed. Keys, such as primary and foreign keys, uniquely identify records and link related data elements efficiently and without redundancy.

Normalization organizes the data model to reduce redundancy and improve data integrity. Because heavily normalized data can require more joins at query time, balancing normalization against performance is crucial for keeping the data model manageable and scalable.
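The effect of keys and normalization can be shown with a small sketch that splits a flat, repetitive list of rows into two related collections. The row layout and field names are invented for the example, and the snippet assumes each customer ID always maps to the same name.

```python
# Hypothetical denormalized rows: the customer name repeats on every transaction.
flat_rows = [
    {"transaction_id": 101, "customer_id": 1, "customer_name": "Asha", "amount": 250.0},
    {"transaction_id": 102, "customer_id": 1, "customer_name": "Asha", "amount": 90.0},
    {"transaction_id": 103, "customer_id": 2, "customer_name": "Ravi", "amount": 40.0},
]


def normalize(rows):
    """Split flat rows into customers (keyed by ID) and transactions."""
    customers = {}     # customer_id acts as the primary key
    transactions = []  # each transaction keeps customer_id as a foreign key
    for row in rows:
        # Store each customer's details once, however many transactions exist.
        customers[row["customer_id"]] = {"name": row["customer_name"]}
        transactions.append(
            {
                "transaction_id": row["transaction_id"],
                "customer_id": row["customer_id"],
                "amount": row["amount"],
            }
        )
    return customers, transactions


customers, transactions = normalize(flat_rows)
print(customers)
print(transactions)
```

After the split, a customer's name is stored in one place only, so correcting it requires a single update rather than one per transaction.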

By following these steps, you ensure that your data science model is robust, scalable, and aligned with business objectives, paving the way for insightful analysis and decision-making.


Concluding Thoughts…

Throughout this article, we have covered the foundational methodologies, model types, and key techniques needed to understand and apply data science models in data-driven environments.

Models ranging from conceptual to physical, augmented by techniques like hierarchical and network models, are indispensable in distilling complex datasets into actionable insights.

The highlighted models, including the ER and dimensional models, underpin the critical role that data science plays in predicting trends, optimizing processes, and empowering decisions across sectors by transforming raw data into strategic assets.

FAQs

What are the different types of data science models?

The three main types of data science models are conceptual, logical, and physical.

What are the different types of data modeling techniques?

Common data modeling techniques include entity-relationship (ER) modeling, dimensional modeling, and object-oriented modeling.

What are the 4 types of data in data science?

The four types of data in data science are nominal, ordinal, interval, and ratio data.

What are modeling techniques?

Modeling techniques refer to the methods used to create representations of systems or processes, such as regression analysis, decision trees, clustering, and neural networks.
