Top 30 Big Data Interview Questions and Answers

Oct 10, 2025 (Last Updated)

Does working with extremely large datasets excite you? Then landing a big data role might be your dream come true. Want to crack your upcoming big data interview? We’ve got you covered!

This blog covers the top 30 big data interview questions and answers, along with the important big data concepts you need to know to feel confident in your interview. Use it as your go-to guide before the interview. Let’s get started!

Table of contents

- Important Big Data Concepts for Interviews

- Top 30 Big Data Interview Questions and Answers

- Conclusion

Important Big Data Concepts for Interviews

In this section, we will look at the important big data concepts for interviews. Since big data revolves around data, you should have a decent understanding of databases and their types. The important concepts include:

- 5 Vs of Big Data: They describe the characteristics of big data: Volume, Velocity, Variety, Veracity and Value.

- NoSQL Databases: They store data in non-relational models (documents, key-value pairs, wide columns or graphs) and are well suited to semi-structured and unstructured data.

- Hadoop Ecosystem: It is an open-source framework for distributed storage (HDFS) and processing (MapReduce on YARN), extended by tools like Hive and HBase.

- Apache Spark: It is an in-memory processing engine for batch and real-time analytics that is typically much faster than MapReduce.

- Batch and Stream Processing: Batch processing handles large volumes of accumulated data at scheduled intervals, while stream processing handles data in real time as events occur.

- Data Ingestion: It is the process of collecting data from various sources and moving it into storage systems using tools such as Kafka or Flume.

- Data Lake: It is a centralized repository used to store raw data in any format.

- Data Warehousing: It is a structured form of data storage optimized for analytics.

- ETL and ELT: They are two approaches to moving data into a warehouse: ETL transforms data before loading it, while ELT loads it first and transforms it inside the target system.

- Data Modeling: It is used for designing the structure and relationships of data entities.

- Workflow Orchestration: It is the scheduling and coordination of data pipelines and their job dependencies.

Interested in learning more about NoSQL databases? Enroll in HCL Guvi’s FREE E-book on MongoDB for Beginners: Your Guide to Modern Databases.

Top 30 Big Data Interview Questions and Answers

In this section, we will look at the top 30 big data interview questions and answers, from beginner to expert level. Let’s get into the details.

- What is Big Data?

Big Data refers to datasets so large and complex that they are difficult to store, manage and analyze using traditional methods. Big data systems store and process this data so that the necessary information can be extracted quickly and used to build data-driven solutions. It includes structured, semi-structured and unstructured data.

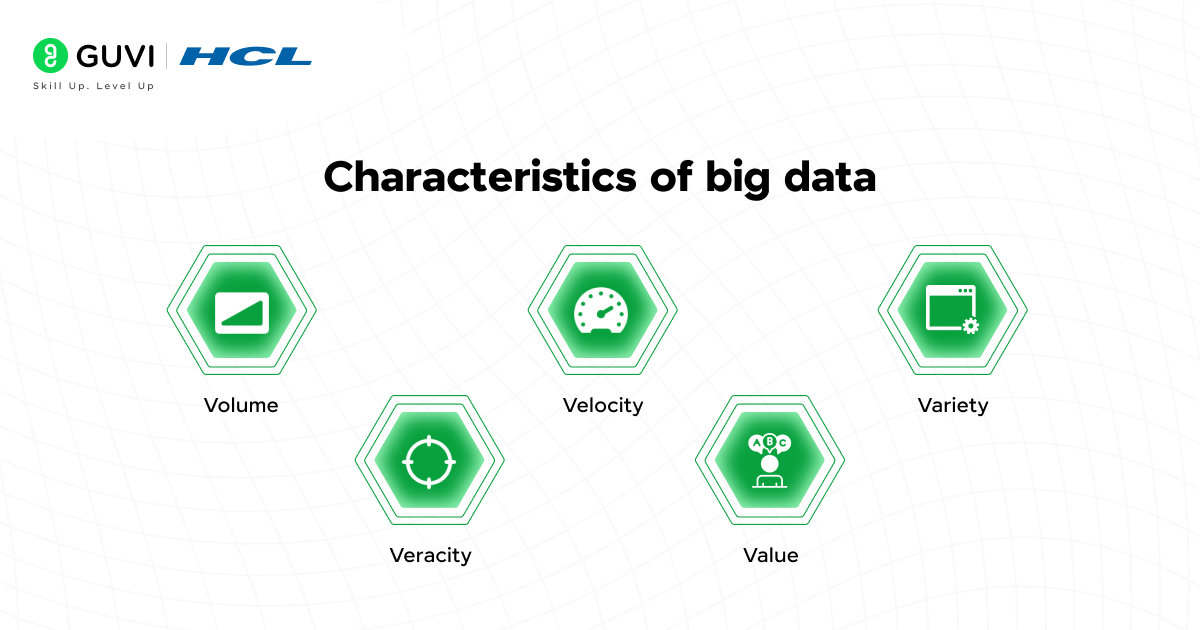

- What are 5Vs of big data?

The 5Vs are the key characteristics of big data. They are:

- Volume: It refers to the sheer amount of data involved, often ranging from terabytes to petabytes or more.

- Velocity: It refers to the speed at which data is generated, collected and processed, often in real time.

- Variety: It represents the different types and formats of data (structured, semi-structured and unstructured) that big data systems store and process.

- Veracity: It refers to the accuracy and reliability of the data.

- Value: It defines the insights and business value derived from the data.

- What are the common applications of big data?

Big Data focuses on solving complex problems in various industries that require data-driven solutions. Some of the common applications include:

- Sentiment analysis on social media to understand public opinion

- Recommendation systems and inventory management for personalized shopping experiences

- Forecasting and optimizing traffic in real time for better transportation of goods

- Fraud detection using transaction patterns in the finance sector

- Predictive analytics and data aggregation to improve diagnostic accuracy and treatment plans for patients

- Why is big data important in industry, or how does it solve industry challenges?

Big Data addresses critical business challenges that involve huge amounts of data, much of it unstructured, such as images, videos and text documents. It uses distributed computing frameworks such as Hadoop and Spark to store and process these massive datasets across clusters of machines, which a single traditional server cannot handle.

- What is the difference between structured, unstructured and semi-structured data?

Data can be classified into three categories:

- Structured data: The data is organized in tables with labeled columns. It is often stored in relational databases such as MySQL or PostgreSQL and queried with SQL.

- Semi-structured data: The data does not follow a rigid tabular schema, but it carries tags or keys that describe its elements, and different records may have different fields. It includes formats like XML, JSON and YAML.

- Unstructured data: The data does not follow any predefined structure. It includes audio, video, images and free text, and is typically stored in data lakes, distributed file systems such as HDFS, or NoSQL databases.
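To make the structured vs. semi-structured distinction concrete, here is a small Python sketch using only the standard library. The records, field names and chosen column list are hypothetical: two JSON records carry self-describing keys but not the same fields, and flattening them into a fixed table means filling the gaps.

```python
import json

# Hypothetical semi-structured input: each JSON record describes itself with keys,
# but the records do not all share the same fields.
raw_lines = [
    '{"id": 1, "name": "Asha", "email": "asha@example.com"}',
    '{"id": 2, "name": "Ravi", "phone": "555-0100", "tags": ["vip"]}',
]
records = [json.loads(line) for line in raw_lines]

# Structured form: a fixed set of columns decided up front. Missing fields become
# None, and fields outside the column list (like "tags") are dropped.
columns = ["id", "name", "email", "phone"]
rows = [tuple(record.get(col) for col in columns) for record in records]

for row in rows:
    print(row)
# (1, 'Asha', 'asha@example.com', None)
# (2, 'Ravi', None, '555-0100')
```

The trade-off is visible in the output: the tabular form is easy to query, but it loses the flexibility (and the extra `tags` field) that the semi-structured form kept.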

- What are the challenges that come with a big data project?

Working with huge amounts of data, and the enormous processing power it requires, brings several challenges. Teams need to weigh the trade-offs that best suit each specific problem:

- Many organizations lack the storage capacity to hold the data and deploy the results.

- Securing data against malicious attacks and protecting customers’ personal details become harder as the variety and volume of data grow.

- Ensuring data quality and integrity can be difficult to achieve when working with large quantities of heterogeneous data.

- The cost of infrastructure, storage and software is difficult to keep under control.

- What is HDFS?

Hadoop Distributed File System (HDFS) is the storage layer of Hadoop, built to store and manage huge amounts of data across multiple nodes. HDFS divides large files into smaller blocks (128 MB by default in Hadoop 2.x and 3.x) and distributes them across the nodes of a cluster. A master node, the NameNode, keeps the metadata about which blocks make up each file and where they are stored, while worker nodes, the DataNodes, store the blocks themselves. HDFS ensures availability and reliability by replicating each block on several nodes, so the data remains accessible even if a node’s hardware fails.
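The block-splitting and replication ideas can be illustrated with a toy Python model. It assumes the default 128 MB block size and replication factor of 3; the DataNode names and the round-robin placement are simplifications made up for illustration, since real HDFS places replicas using a rack-aware policy decided by the NameNode.

```python
MB = 1024 * 1024
BLOCK_SIZE = 128 * MB  # default HDFS block size in Hadoop 2.x/3.x (dfs.blocksize)
REPLICATION = 3        # default HDFS replication factor (dfs.replication)


def split_into_blocks(file_size: int, block_size: int = BLOCK_SIZE) -> list[int]:
    """Return the size of each block; the last block may be smaller than block_size."""
    full_blocks, remainder = divmod(file_size, block_size)
    return [block_size] * full_blocks + ([remainder] if remainder else [])


def place_replicas(num_blocks: int, nodes: list[str],
                   replication: int = REPLICATION) -> dict[int, list[str]]:
    """Toy round-robin placement: each block gets `replication` distinct nodes.
    Real HDFS uses a rack-aware placement policy instead."""
    if replication > len(nodes):
        raise ValueError("need at least as many DataNodes as replicas")
    return {
        block: [nodes[(block + r) % len(nodes)] for r in range(replication)]
        for block in range(num_blocks)
    }


blocks = split_into_blocks(300 * MB)
placement = place_replicas(len(blocks), ["dn1", "dn2", "dn3", "dn4"])
print([size // MB for size in blocks])  # [128, 128, 44]
for block, replicas in placement.items():
    print(f"block {block}: {replicas}")
```

A 300 MB file becomes two full blocks and one 44 MB block, and every block lands on three different nodes, so losing any single node never loses a block.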

- What are the differences between on-premises and cloud-based big data solutions?

On-premises: To implement an on-premises big data solution, you set up and maintain dedicated infrastructure yourself. This suits businesses that need complete control over their data and computations. If you are working with sensitive data, on-premises solutions offer the most control over data security, at the cost of higher upfront investment and maintenance effort.

Cloud-based: The infrastructure is provided by a cloud provider instead. Services like AWS, Azure and Google Cloud offer pay-as-you-go services that scale easily and integrate with big data tools like Spark and Hadoop. If you do not want to set up your own physical infrastructure, this is a good option for you.

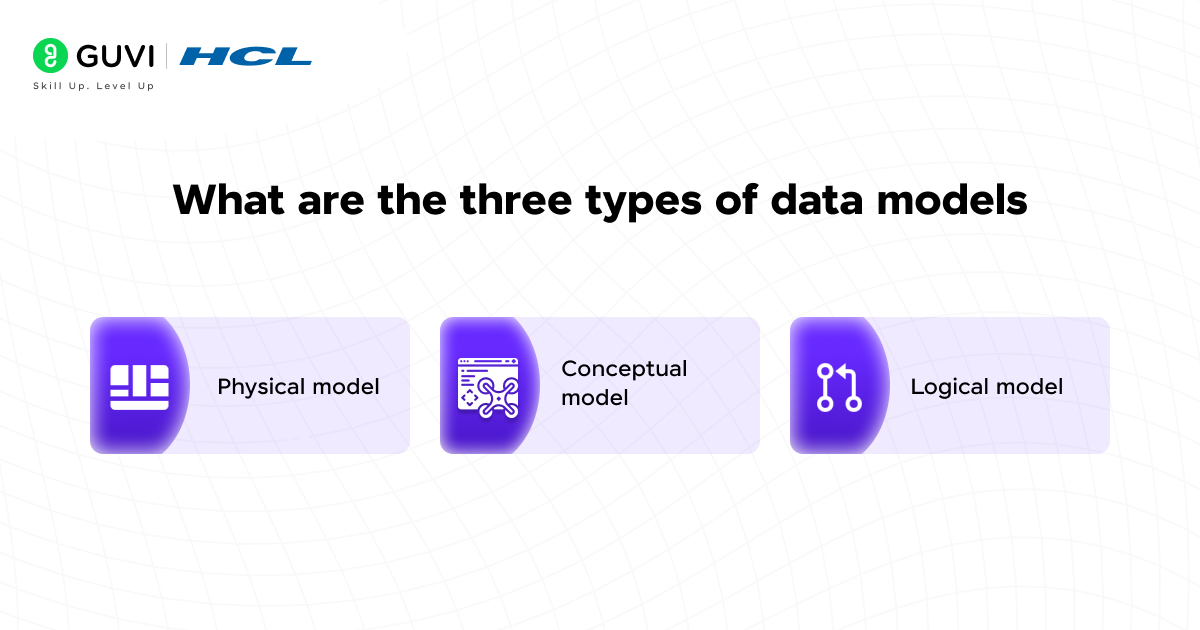

- What are the three types of data models?

Data modeling is the process of defining how data is structured, organized and related so that it can be stored, accessed and processed effectively in big data systems. There are three types of data models:

- Conceptual model: It provides a high-level business view of the data: the main entities and how they relate to one another.

- Logical model: It describes the data structure in detail, including attributes, keys and relationships, independent of any specific database technology.

- Physical model: It defines how the data is actually stored and accessed in a specific system, including tables, data types, file formats, partitions and indexes.

- Compare relational and non-relational databases

| Feature | Relational Databases (SQL) | NoSQL Databases |
|---|---|---|
| Definition | It stores data in structured tables of rows and columns. | It stores data in non-tabular formats such as JSON documents, key-value pairs, wide columns or graphs. |
| Schema | It uses a predefined schema. | It uses a flexible schema. |
| Scalability | It typically scales vertically (a bigger server). | It typically scales horizontally (more servers). |
| Query language | Structured Query Language (SQL) | No single standard; each database has its own query language or API. |
| Examples | MySQL, PostgreSQL, Oracle | MongoDB, Cassandra, Redis |

Start your journey in MongoDB with HCL Guvi’s Mastering MongoDB course, which takes you from the basics to advanced topics. You will earn industry-recognized certifications and build the skills to become a Big Data developer.

- What is the difference between partitioning and sharding?

Partitioning: It is a technique to divide a large dataset into smaller, more manageable pieces. Data can be split by rows (horizontal partitioning) or by columns (vertical partitioning), and rows are commonly assigned to partitions by range or by a hash of a key. It helps optimize query performance, since queries can skip irrelevant partitions, and simplifies data management. The partitions often live on the same database server.

Sharding: It is a type of horizontal partitioning where each subset of data, known as a shard, is stored on a separate server. It is used to scale storage and throughput beyond a single machine, and is needed for very large-scale applications that require a distributed architecture.

Note: All sharding is partitioning but not all partitioning is sharding.
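As a rough illustration of hash-based sharding, the Python sketch below routes each key to one of several servers. The server names and user keys are hypothetical, and SHA-256 is used only because it gives a hash that is stable across processes (Python’s built-in `hash()` is randomized for strings).

```python
import hashlib


def shard_for(key: str, num_shards: int) -> int:
    """Map a key to a shard index in [0, num_shards) using a stable hash."""
    digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
    return int(digest, 16) % num_shards


# Hypothetical shard servers; each one stores only the rows routed to it.
servers = ["shard-0.db.internal", "shard-1.db.internal", "shard-2.db.internal"]
users = ["alice", "bob", "carol", "dave"]

for user in users:
    print(user, "->", servers[shard_for(user, len(servers))])
```

One design caveat: with plain modulo hashing, changing the number of shards remaps most keys, which is why large systems often use consistent hashing or range-based sharding instead.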

- What are the key steps in deploying a big data platform?

There is no fixed set of steps for deploying a big data platform; each problem requires a different approach. However, most big data platforms involve three basic steps:

- Data ingestion: It is the process of collecting data from multiple sources such as public databases, social media platforms, log files and business documents. Data can be ingested in scheduled batches or continuously as a stream for real-time analytics.

- Data storage: Once the data is extracted, it needs to be stored for later use, for example in HDFS, Apache HBase or a NoSQL database.

- Data processing: The final step is to prepare and process the data for analysis, using frameworks such as Hadoop MapReduce, Apache Spark or Apache Flink to handle massive amounts of data.

- What is Hadoop, and what are its components?

Hadoop is an open-source framework for the distributed storage and processing of large datasets across clusters of computers. It can process different types of data and distributes workloads across multiple nodes, which makes it a good fit for big data.

The core components of Hadoop are:

- HDFS: It is the distributed file system that serves as Hadoop’s primary data storage layer.

- Hadoop YARN: It is a resource management framework that schedules jobs and allocates system resources across the cluster.

- Hadoop MapReduce: It is a YARN-based system for parallel processing of large datasets.

- Hadoop Common: It is a set of shared libraries and utilities that support the other modules.

- Why is Hadoop popular?

Hadoop can store and handle huge volumes of structured, semi-structured and unstructured data. Processing and analyzing unstructured data is not an easy task, but Hadoop’s distributed storage and processing make it feasible at scale. In addition, Hadoop is open source and runs on commodity hardware, so it is less costly than systems that rely on proprietary hardware and software. These are some of the reasons why Hadoop is popular for data analysis.

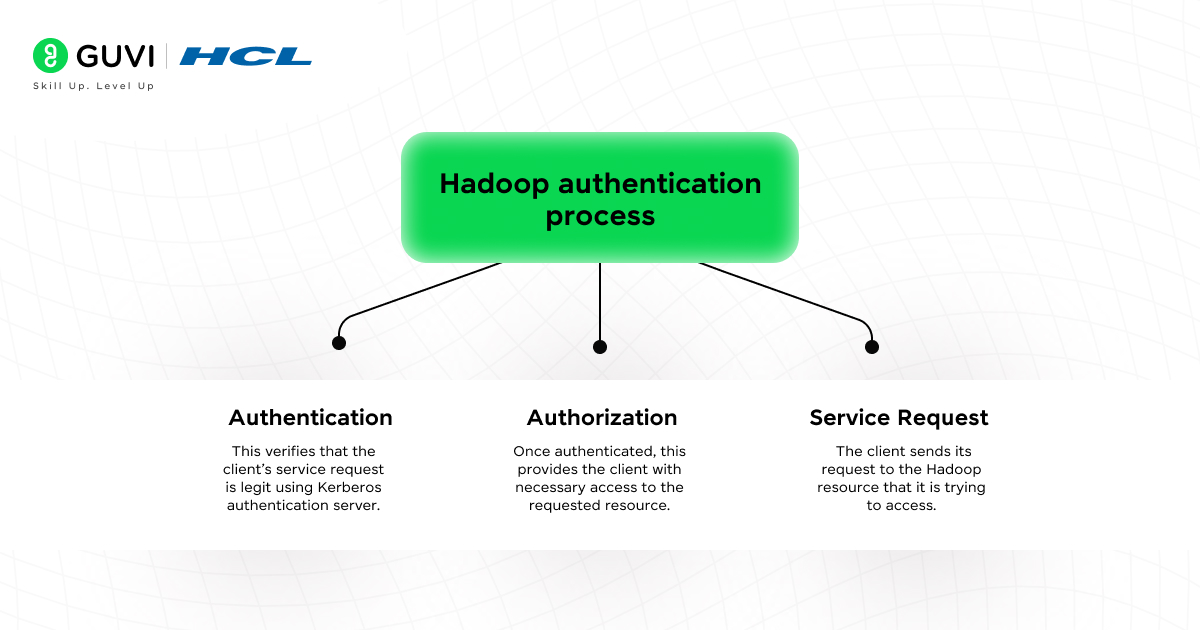

- How does Hadoop protect the data against unauthorized access?

Hadoop uses Kerberos, a network authentication protocol that uses secret-key cryptography to provide strong authentication for client/server applications. The Kerberos Key Distribution Center includes an authentication server and a ticket granting server (TGS), and a client goes through three basic steps to prove its identity and access a service:

- Authentication: The client proves its identity to the Kerberos authentication server and receives a time-stamped ticket-granting ticket (TGT).

- Authorization: The client presents the TGT to the TGS, which issues a service ticket for the specific Hadoop service the client wants to use.

- Service Request: The client presents the service ticket to the Hadoop service it is trying to access (for example, the NameNode), which verifies the ticket before serving the request.

- What are the two main phases of MapReduce operation?

There are two phases in MapReduce:

- Map phase: The input is divided into splits, and each mapper processes one split, turning its records into intermediate key-value pairs.

- Reduce phase: The intermediate pairs are shuffled and sorted so that all values for the same key reach the same reducer, which combines them according to the defined logic. The output is then written to HDFS.
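The classic word-count job shows both phases plus the shuffle step between them. This is a single-process Python simulation for illustration only, not the Hadoop MapReduce API; the input splits are made up.

```python
from collections import defaultdict
from itertools import chain


def map_phase(split: str) -> list[tuple[str, int]]:
    # Emit an intermediate (word, 1) pair for every word in this input split.
    return [(word.lower(), 1) for word in split.split()]


def shuffle(pairs) -> dict[str, list[int]]:
    # Group all values by key so that each key is handled by exactly one reducer.
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped: dict[str, list[int]]) -> dict[str, int]:
    # Aggregate the list of values for each key.
    return {key: sum(values) for key, values in grouped.items()}


splits = ["big data is big", "data is everywhere"]  # hypothetical input splits
mapped = chain.from_iterable(map_phase(s) for s in splits)
counts = reduce_phase(shuffle(mapped))
print(sorted(counts.items()))
# [('big', 2), ('data', 2), ('everywhere', 1), ('is', 2)]
```

In a real cluster, each `map_phase` call would run on a different node close to its data block, and the shuffle would move data over the network to the reducers.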

- How is Spark different from Hadoop MapReduce?

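| Aspect | Apache Spark | Hadoop MapReduce |
|---|---|---|
| Processing | In-memory computation; intermediate results can be kept in memory. | Disk-based computation; intermediate results are written to disk between stages. |
| Speed | Typically much faster, especially for iterative and interactive workloads. | Slower due to frequent disk I/O. |
| APIs | High-level APIs in Scala, Java, Python and R. | A lower-level, more verbose Java API (other languages via Hadoop Streaming). |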

- How does Hadoop handle data replication and fault tolerance?

Hadoop uses HDFS for data durability and availability. Each block is replicated across different DataNodes in the cluster. By default, HDFS keeps 3 replicas of every block, so if one node fails, the block can be read from another. DataNodes send regular heartbeats to the NameNode; when a node stops responding, the NameNode marks its blocks as under-replicated and instructs DataNodes that still hold replicas to copy them to other nodes until the replication factor is restored.
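The re-replication logic can be sketched as a toy Python simulation. The block map, node names and the choice of copy source and target are hypothetical simplifications; the real NameNode also considers rack placement and node load when choosing targets.

```python
REPLICATION = 3  # default HDFS replication factor

# Hypothetical cluster state kept by the NameNode: block ID -> DataNodes holding a replica.
block_map = {
    "blk_1": {"dn1", "dn2", "dn3"},
    "blk_2": {"dn2", "dn3", "dn4"},
}
live_nodes = {"dn1", "dn2", "dn3", "dn4"}


def handle_node_failure(dead_node: str) -> None:
    """Toy NameNode reaction to a DataNode that stopped sending heartbeats."""
    live_nodes.discard(dead_node)
    for block, holders in block_map.items():
        holders.discard(dead_node)
        if not holders:
            print(f"{block}: all replicas lost")
            continue
        source = min(holders)  # any surviving replica can serve as the copy source
        candidates = sorted(live_nodes - holders)
        # Copy to new nodes until the block is back at the target replication factor.
        while len(holders) < REPLICATION and candidates:
            target = candidates.pop(0)
            print(f"re-replicate {block}: {source} -> {target}")
            holders.add(target)


handle_node_failure("dn3")
print({block: sorted(holders) for block, holders in block_map.items()})
# {'blk_1': ['dn1', 'dn2', 'dn4'], 'blk_2': ['dn1', 'dn2', 'dn4']}
```

Because every block started with three replicas on different nodes, losing `dn3` leaves two good copies of each block, and the cluster heals itself without any data loss.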

- What are the key components of the Spark ecosystem?

Apache Spark consists of several key components:

- Spark Core: It is the underlying engine that provides in-memory computation, task scheduling, memory management and fault recovery.

- Spark Streaming: It supports real-time stream processing using micro-batches.

- GraphX: It is an API for graph processing and analytics.

- Spark SQL: It is a module for working with structured data using SQL queries.

- MLlib: It is Spark’s library of scalable machine learning algorithms.

- What is batch processing and in what scenarios is it best suited?

Batch processing is the process of handling and analyzing data in groups or batches. It runs the jobs on scheduled intervals and not in real time. This process is common in ETL jobs, data aggregation and log analysis. It is suited for historical data analysis, nightly data transformation, data warehousing and for workloads that don’t require immediate results.

- How does Spark Streaming process real-time data?

Spark Streaming divides the incoming data stream into small batches, a technique called micro-batching. Each micro-batch is processed much like a small batch job, but new batches are formed continuously, so results are available in near real time. Data is ingested from sources like Kafka and Flume and grouped into batches at a regular interval, for example every 5 seconds. Transformations and actions are applied batch by batch, and the processed data is stored in databases or file systems.
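The micro-batching idea can be sketched in plain Python. This is not the Spark Streaming API: the events are hypothetical, and for simplicity the sketch groups events by a timestamp attached to each one, whereas Spark Streaming groups records by when they arrive.

```python
from collections import defaultdict

BATCH_INTERVAL = 5  # seconds, matching the 5-second example in the text

# Hypothetical (timestamp_in_seconds, event_type) pairs, e.g. read from a Kafka topic.
events = [(0.4, "click"), (3.9, "view"), (5.0, "click"), (7.2, "click"), (11.8, "view")]


def micro_batches(stream, interval=BATCH_INTERVAL) -> dict[int, list[str]]:
    """Group events into fixed windows [k*interval, (k+1)*interval)."""
    batches = defaultdict(list)
    for ts, event in stream:
        batches[int(ts // interval)].append(event)
    return dict(sorted(batches.items()))


for batch_id, batch in micro_batches(events).items():
    # Apply the same transformation to every batch: count events by type.
    counts = {e: batch.count(e) for e in sorted(set(batch))}
    start, end = batch_id * BATCH_INTERVAL, (batch_id + 1) * BATCH_INTERVAL
    print(f"batch {batch_id} ({start}-{end}s): {counts}")
```

The same counting logic runs on every batch, which is why micro-batching lets batch-style code serve near-real-time workloads.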

- What is the difference between Kafka and Flume?

| Aspect | Kafka | Flume |
|---|---|---|
| Type | A distributed, publish-subscribe messaging and event streaming platform. | A specialized data ingestion tool for collecting and moving log data into stores such as HDFS or HBase. |
| Scalability | It is highly scalable and supports fault tolerance. | It is scalable but less flexible. |
| Typical sources | Applications, IoT devices, services, etc. | Mainly web server logs and syslogs. |

- What is a Data Lake and how is it different from a Data Warehouse?

| Data Lake | Data Warehouse |
|---|---|
| It is a centralized repository which stores raw data in any format. | It is a centralized database which stores structured and processed data. |
| It uses schema-on-read: structure is applied when the data is queried. | It uses schema-on-write: data must match a schema before it is stored. |
| It suits exploratory analysis, data science, machine learning and real-time use cases. | It is used for reporting and dashboards. |
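The schema-on-write vs. schema-on-read difference can be shown with a minimal Python sketch. The schema, records and in-memory "warehouse" and "lake" lists are hypothetical stand-ins for real storage systems.

```python
import json

# Schema-on-write (warehouse style): every record is checked against a fixed schema
# before it is stored, and non-conforming records are rejected.
SCHEMA = {"order_id": int, "amount": float}
warehouse: list[dict] = []


def write_with_schema(record: dict) -> None:
    for field, field_type in SCHEMA.items():
        if not isinstance(record.get(field), field_type):
            raise ValueError(f"rejected: {field!r} must be {field_type.__name__}")
    warehouse.append({field: record[field] for field in SCHEMA})


# Schema-on-read (lake style): raw text is stored as-is, and a schema
# (here, just a list of fields) is applied only when the data is read.
lake: list[str] = []


def read_with_schema(fields: list[str]) -> list[dict]:
    rows = []
    for line in lake:
        record = json.loads(line)
        rows.append({field: record.get(field) for field in fields})
    return rows


write_with_schema({"order_id": 1, "amount": 9.5})
try:
    write_with_schema({"order_id": "A-2", "amount": 3.0})
except ValueError as err:
    print(err)  # rejected: 'order_id' must be int

lake.append('{"order_id": 2, "amount": 3.0, "coupon": "SAVE10"}')
lake.append('{"order_id": 3}')
print(read_with_schema(["order_id", "amount"]))
# [{'order_id': 2, 'amount': 3.0}, {'order_id': 3, 'amount': None}]
```

The warehouse stays clean because bad records never get in, while the lake accepts everything and leaves it to each reader to decide which fields matter.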

- How do you handle schema evolution in a Data Lake?

The schema evolution in a data lake is handled by the following methods:

- Using file formats that support schema evolution, such as Avro, ORC or Parquet.

- By tracking schema changes using tools like the Apache Hive Metastore or Confluent Schema Registry.

- By maintaining different versions of data with versioned folders.

- Using a data validation framework to catch incompatible changes.
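A minimal Python sketch of the idea behind backward-compatible schema evolution: it mirrors how Avro resolves a writer’s data against a newer reader schema (fields missing from old data get the reader’s default, fields the reader does not know are ignored). The schema, field names and sentinel are hypothetical; this is not the Avro library API.

```python
REQUIRED = object()  # sentinel: the field has no default and must be present

# Hypothetical reader schema (v2): "country" was added later WITH a default,
# which is what keeps the change backward compatible with v1 data.
READER_SCHEMA_V2 = {"id": REQUIRED, "name": REQUIRED, "country": "unknown"}


def resolve(record: dict, reader_schema: dict) -> dict:
    """Project a record written with any schema version onto the reader schema."""
    out = {}
    for field, default in reader_schema.items():
        if field in record:
            out[field] = record[field]
        elif default is REQUIRED:
            # An incompatible change: a validation step should catch this early.
            raise ValueError(f"missing required field {field!r}")
        else:
            out[field] = default
    return out  # fields unknown to the reader are silently ignored


old_record = {"id": 1, "name": "Asha"}                   # written with v1
new_record = {"id": 2, "name": "Ravi", "country": "IN"}  # written with v2
print(resolve(old_record, READER_SCHEMA_V2))
# {'id': 1, 'name': 'Asha', 'country': 'unknown'}
print(resolve(new_record, READER_SCHEMA_V2))
# {'id': 2, 'name': 'Ravi', 'country': 'IN'}
```

The rule of thumb it illustrates: adding a field with a default is safe, while adding a required field without a default breaks readers of old data.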

- What are the main differences between ETL and ELT processes?

| ETL | ELT |
|---|---|
| Extract, Transform and Load | Extract, Load and Transform |
| Transformation occurs before loading into the data store. | Transformation happens after loading. |
| Transformation is performed on an intermediate (staging) server. | Transformation is performed within the target system, such as a cloud data warehouse. |
| Tools: Talend, Informatica, Apache NiFi | Tools: dbt, Spark SQL, cloud-native tools |
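The difference is where the transformation runs, which the following Python sketch shows using an in-memory SQLite database as a stand-in for the target warehouse. The messy source rows and table names are hypothetical.

```python
import sqlite3

raw_rows = [("  Asha ", "1200"), ("ravi", "850")]  # hypothetical messy source data

conn = sqlite3.connect(":memory:")  # stand-in for the target data store

# ETL: transform in the pipeline (here, Python) BEFORE loading.
conn.execute("CREATE TABLE customers_etl (name TEXT, spend INTEGER)")
cleaned = [(name.strip().title(), int(spend)) for name, spend in raw_rows]
conn.executemany("INSERT INTO customers_etl VALUES (?, ?)", cleaned)

# ELT: load the raw data first, THEN transform inside the target system with SQL.
conn.execute("CREATE TABLE customers_raw (name TEXT, spend TEXT)")
conn.executemany("INSERT INTO customers_raw VALUES (?, ?)", raw_rows)
conn.execute("""
    CREATE TABLE customers_elt AS
    SELECT upper(substr(trim(name), 1, 1)) || lower(substr(trim(name), 2)) AS name,
           CAST(spend AS INTEGER) AS spend
    FROM customers_raw
""")

print(conn.execute("SELECT * FROM customers_etl ORDER BY name").fetchall())
print(conn.execute("SELECT * FROM customers_elt ORDER BY name").fetchall())
# both print [('Asha', 1200), ('Ravi', 850)]
```

Both paths produce the same clean table; ELT additionally keeps the untouched `customers_raw` table in the target, so transformations can be rerun or changed later.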

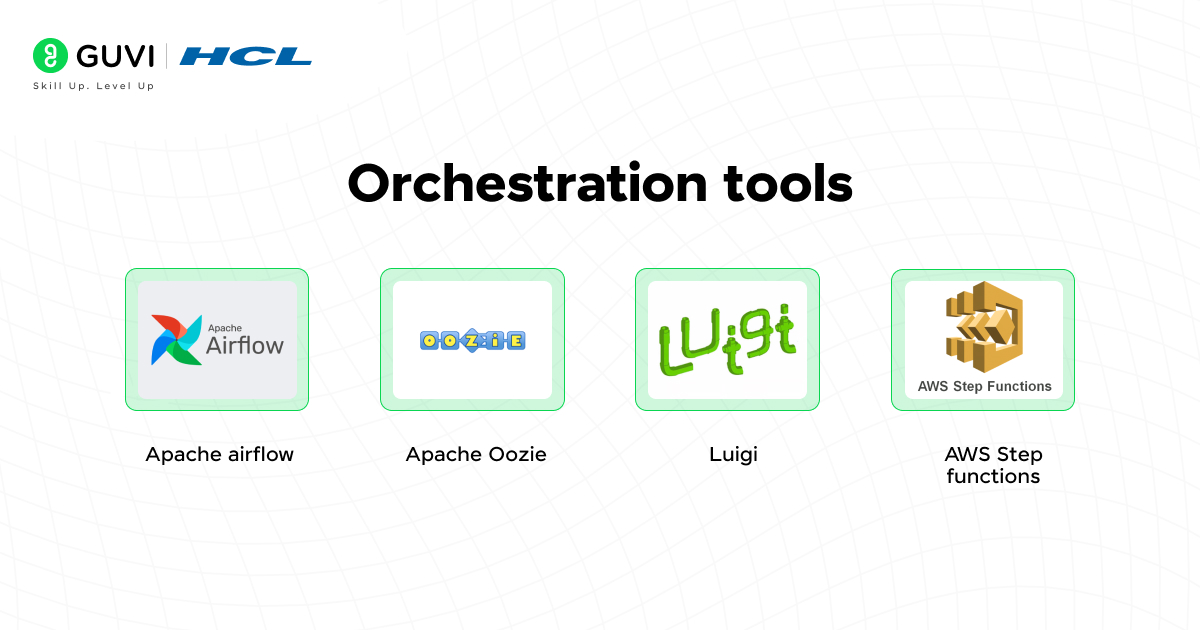

- What tools have you used for workflow orchestration and why?

I have used several common tools for workflow orchestration. Some of them are:

- Apache Airflow: Workflows are defined as DAGs in Python, with built-in scheduling, retries and alerts.

- Apache Oozie: Workflows are defined in XML, and it is designed for scheduling Hadoop jobs.

- Luigi: It is a Python library that is good at dependency resolution and useful in pipelines with heavy logic.

- AWS Step Functions: It is used to orchestrate cloud-native workflows on AWS.
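At their core, these tools run tasks in dependency order. The sketch below uses Python’s standard-library `graphlib` (Python 3.9+) to show that idea; it is not Airflow’s or Luigi’s API, and the task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline DAG: each task maps to the set of tasks it depends on.
pipeline = {
    "extract_orders": set(),
    "extract_users": set(),
    "transform": {"extract_orders", "extract_users"},
    "load_warehouse": {"transform"},
    "refresh_dashboard": {"load_warehouse"},
}
executed: list[str] = []


def run(task: str) -> None:
    # Placeholder for real work (a Spark job, a SQL script, an API call, ...).
    executed.append(task)
    print(f"running {task}")


sorter = TopologicalSorter(pipeline)
sorter.prepare()  # raises graphlib.CycleError if the dependencies contain a cycle
while sorter.is_active():
    # Every task returned here has all of its dependencies finished, so an
    # orchestrator could run them in parallel; this sketch runs them one by one.
    for task in sorted(sorter.get_ready()):
        run(task)
        sorter.done(task)
```

Real orchestrators add what this sketch leaves out: schedules, retries, alerting and distributing ready tasks across workers.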

- What are the key differences between normalized and denormalized models in Big Data?

| Normalized Model | Denormalized Model |
|---|---|
| The data in this model is split into multiple related tables. | The data in this model is combined into fewer, larger tables. |
| It minimizes redundancy. | It allows redundancy for performance. |
| Queries need more joins, which can be slower, but data integrity is easier to maintain. | Queries need fewer joins and run faster. |
| Example: OLTP systems. | Example: OLAP systems. |

- How do you ensure compliance with data privacy regulations like GDPR?

To ensure compliance with data protection regulations like GDPR, a few key practices should be followed:

- Identify personal and sensitive data.

- Collect only the necessary information, and only for lawful purposes.

- Implement role-based access control, audit trails and encryption.

- Store and enforce user consent preferences.

- Provide mechanisms to delete user data on request.

- Use compliance tools such as Apache Ranger, AWS Lake Formation and GDPR-compliant data catalogs.
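Three of these practices (limiting exposure of identifiers, enforcing consent and deleting on request) can be sketched in Python with the standard library. The key, emails and in-memory stores are hypothetical. Note that pseudonymized data still counts as personal data under GDPR, so this reduces risk rather than removing obligations.

```python
import hashlib
import hmac

# Hypothetical key; in practice load it from a secrets manager, never hard-code it.
SECRET_KEY = b"example-key-do-not-use"


def pseudonymize(identifier: str) -> str:
    """Keyed hash (HMAC-SHA256): the same input always yields the same token,
    but the token is hard to link back to the person without the key."""
    return hmac.new(SECRET_KEY, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


asha, ravi = pseudonymize("asha@example.com"), pseudonymize("ravi@example.com")

# Analytics events store only the pseudonymous token, never the raw email.
events = [{"user": asha, "action": "purchase"}, {"user": ravi, "action": "view"}]
consent = {asha: True, ravi: False}  # recorded consent, keyed by the same token


def events_with_consent() -> list[dict]:
    """Enforce consent: only process events for users who opted in."""
    return [e for e in events if consent.get(e["user"], False)]


def erase_user(email: str) -> int:
    """Handle a deletion request: remove the user's events and consent record."""
    token = pseudonymize(email)
    before = len(events)
    events[:] = [e for e in events if e["user"] != token]
    consent.pop(token, None)
    return before - len(events)


print(len(events_with_consent()))      # 1 (only Asha opted in)
print(erase_user("asha@example.com"))  # 1 event removed
print(len(events_with_consent()))      # 0
```

In a real data lake, deletion also has to reach backups, derived tables and caches, which is why tracking where personal data flows comes first in the list above.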

- Explain star schema vs snowflake schema. When would you use each?

| Star Schema | Snowflake Schema |
|---|---|
| It is based on a denormalized model: dimension tables are kept flat. | It is based on a normalized model: dimension tables are split into sub-dimension tables. |
| Queries are faster because they need fewer joins. | Queries are slower because they need more joins. |
| It uses more storage space. | It uses less storage space. |
| Use it for simpler, faster reporting. | Use it for complex dimension hierarchies and when storage optimization matters. |
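A small star schema can be built and queried in an in-memory SQLite database from Python. The tables, columns and sales figures below are hypothetical; the point is that every dimension is exactly one join away from the fact table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Dimension tables (denormalized: category is stored directly on the product row).
    CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, name TEXT, category TEXT);
    CREATE TABLE dim_date (date_id INTEGER PRIMARY KEY, full_date TEXT, month TEXT);
    -- Fact table: one row per sale, with foreign keys to each dimension plus measures.
    CREATE TABLE fact_sales (product_id INTEGER, date_id INTEGER,
                             quantity INTEGER, revenue REAL);

    INSERT INTO dim_product VALUES (1, 'Laptop', 'Electronics'), (2, 'Desk', 'Furniture');
    INSERT INTO dim_date VALUES (1, '2025-01-01', '2025-01'), (2, '2025-01-02', '2025-01');
    INSERT INTO fact_sales VALUES (1, 1, 2, 1800.0), (2, 1, 1, 250.0), (1, 2, 1, 900.0);
""")

# Revenue by category and month: one join per dimension.
query = """
    SELECT p.category, d.month, SUM(f.revenue) AS revenue
    FROM fact_sales f
    JOIN dim_product p ON f.product_id = p.product_id
    JOIN dim_date d ON f.date_id = d.date_id
    GROUP BY p.category, d.month
    ORDER BY p.category
"""
print(conn.execute(query).fetchall())
# [('Electronics', '2025-01', 2700.0), ('Furniture', '2025-01', 250.0)]
```

In a snowflake version of the same model, `category` would move into its own `dim_category` table referenced from `dim_product`, saving repeated text at the cost of one more join in this query.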

- Describe a time when you had to redesign a data model for performance improvement.

Explain your experience from your previous role. If you don’t have relevant experience, describe how you would approach the problem instead. If you are explaining your previous experience, make sure to quantify your results.

Example Response:

In a previous role, we had a denormalized table in our data warehouse that combined product, user, and order information. As data grew, query times increased significantly due to high cardinality and redundant joins in BI dashboards.

I redesigned the model into a star schema, separating dimensions (user, product) and keeping a slim fact table with order events. I also implemented partitioning by date and materialized views for heavy aggregation queries.

The result was a 70% reduction in average query time, improved scalability, and easier onboarding for analysts working with the schema.

Conclusion

In conclusion, this blog is the perfect last-minute guide to help you ace your big data technical interview. It covers key topics including types of data, databases, big data characteristics, Apache Spark, the Hadoop ecosystem, MapReduce, ETL, ELT, data modeling, data lakes, data warehouses and data ingestion. Mastering these concepts will help you not only in interviews for data science roles but also in other data-related roles that require big data knowledge. Happy Learning!
