Top 30 Deep Learning Interview Questions and Answers

Last updated: Dec 29, 2025 · 9 min read

Do you have an upcoming interview that requires deep learning knowledge? Confused about what to expect in the interview? We have got you covered!

In this blog, we will see a detailed introduction to deep learning, important concepts for interviews, and the top 30 deep learning interview questions and answers. This blog will be the best last-minute guide for your deep learning interviews. Let’s get started!

Table of contents

- Quick Answer

- What is Deep Learning?

- Important Deep Learning Concepts for Interviews

- Top 30 Deep Learning Interview Questions and Answers

- Scenario-Based Interview Questions

- 💡 Did You Know?

- Conclusion

Quick Answer

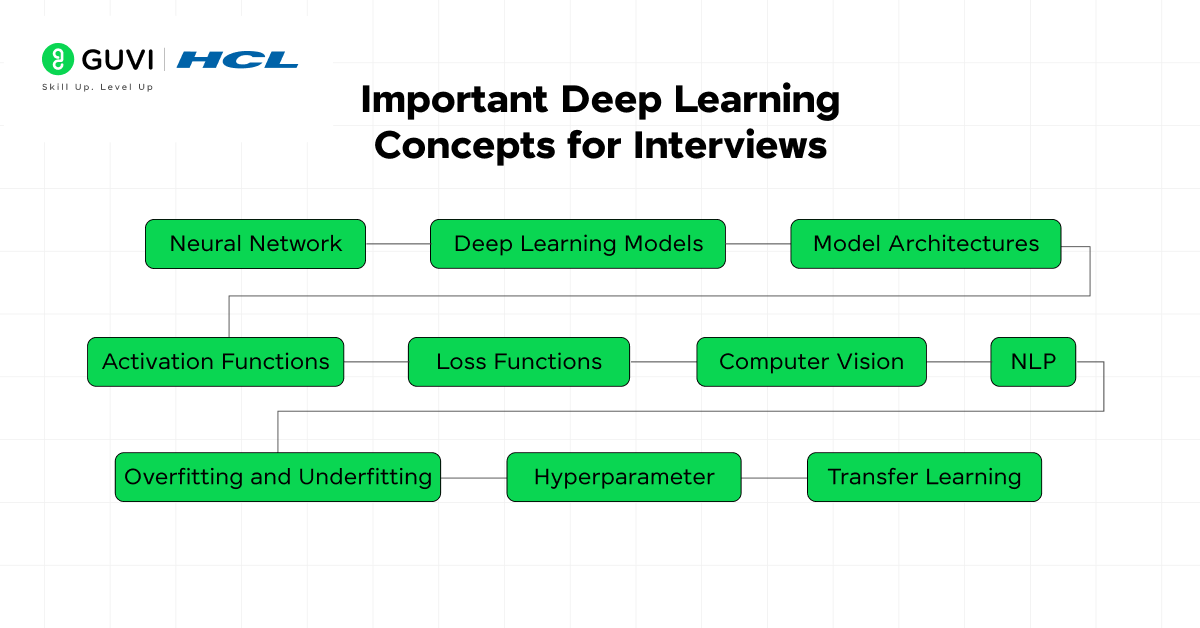

Deep learning interviews focus on neural networks, deep learning models, model architecture, activation functions, loss functions, computer vision, NLP, overfitting, underfitting, hyperparameters and transfer learning. You should also be prepared to answer application-based and scenario-based questions.

What is Deep Learning?

Deep learning is a specialized type of machine learning that uses artificial neural networks with multiple layers to learn from large amounts of data and produce accurate results. Deep learning models are loosely inspired by the human learning process. In general, a deep learning model has three kinds of layers: an input layer, one or more hidden layers, and an output layer. Every connection between two neurons carries a weight, and these weights are adjusted as the network learns.

Important Deep Learning Concepts for Interviews

This section covers the important deep learning concepts that are essential for interviews. Since deep learning is a subset of machine learning, which is itself a subset of artificial intelligence, these concepts build on general machine learning fundamentals.

- Neural Network: It is a model inspired by the working of the human brain and consists of multiple layers of interconnected nodes.

- Deep Learning Models: Learning about different models, their use cases, advantages, and disadvantages helps in the efficient use of models.

- Model Architectures: Understanding the different model architectures, including the number and types of layers used, helps in choosing the best model for the problem statement.

- Activation Functions: They determine whether, and how strongly, a node is activated, which helps the network capture nonlinear relationships between input and output.

- Loss Functions: They measure the model’s performance by comparing its predictions with the target values. The resulting error is the feedback that is later used to update the model’s weights through optimization.

- Computer Vision: It is a field that enables machines to understand information from visual data such as images and videos.

- NLP: Natural language processing involves teaching machines to understand and generate human language.

- Overfitting and Underfitting: Overfitting means the model has memorized the training data and generalizes poorly; underfitting means the model is too simple to capture the underlying patterns.

- Hyperparameters: These are external configurations, such as the learning rate and batch size, that are set before training a model to control its performance and training efficiency.

- Transfer Learning: It is a training approach that takes a model pre-trained on a large dataset and fine-tunes it for a specific task.

Can’t wait to start your journey in deep learning? Start with HCL Guvi’s free E-book on Mastering Data Science that provides a detailed roadmap towards machine learning, data science, AI, and deep learning.

Top 30 Deep Learning Interview Questions and Answers

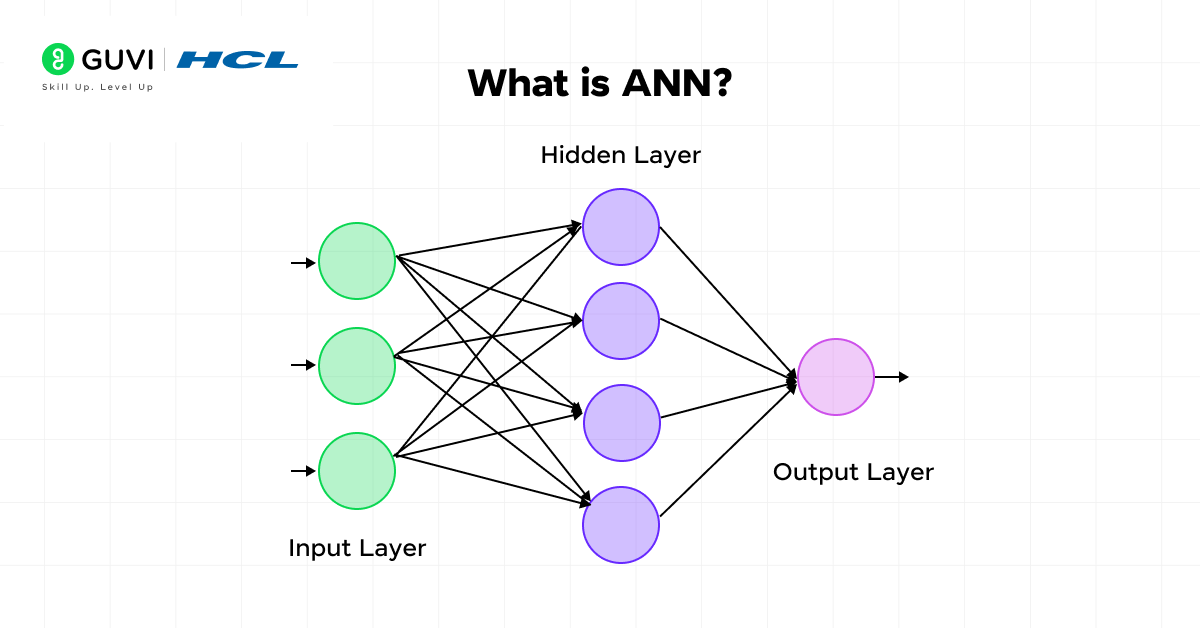

- What is ANN?

ANN stands for Artificial Neural Network. It is a machine learning model inspired by the structure and function of the human brain. The nodes in its layers are known as neurons, and the connections between them carry weights that define how strongly one neuron influences another. These neurons process information, learn from data, and make predictions.

- How would you choose the right deep learning model for your problem and data?

There are certain factors, such as dataset types, problem structure, complexity, available resources, and performance goals, that you need to consider before choosing a deep learning model. For example:

- CNNs are best for image-based tasks, RNNs for time series or sequential data, and multi-layer perceptrons (MLPs) for tabular data. Consider transfer learning with a pre-trained model when data is limited.

- Perform lots of experiments and comparisons between the models using cross-validation and evaluation metrics to find the model that best fits the use case.

- How would you evaluate the performance of deep learning models?

The performance of a deep learning model is evaluated using standard machine learning evaluation metrics. For classification models, metrics like accuracy, precision, recall, and F1 score are used, and a confusion matrix gives a detailed breakdown of predicted versus actual classes. For regression models, metrics such as MSE (Mean Squared Error) are used. Cross-validation helps check that these scores hold up on data the model has not seen.
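As a rough illustration of the metrics named above, here is a minimal sketch in plain Python. The function names `classification_metrics` and `mean_squared_error` are made up for this example and do not come from any particular library; in practice you would usually rely on a tested metrics library.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 for binary labels (illustrative helper)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn

    accuracy = (tp + tn) / len(pairs)
    # Guard against division by zero when a class is never predicted or never present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


def mean_squared_error(y_true, y_pred):
    """Average squared difference between targets and predictions (regression)."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
```

Precision and recall are reported separately because accuracy alone can look good on an imbalanced dataset even when the positive class is mostly missed.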

- What are the differences between single-layer and multilayer perceptrons?

A single-layer perceptron has only one layer of weights between the input and output layers. It can only solve linearly separable problems.

A multilayer perceptron (MLP) has one or more hidden layers, which allows it to capture nonlinear patterns. It serves as a backbone for complex model architectures.

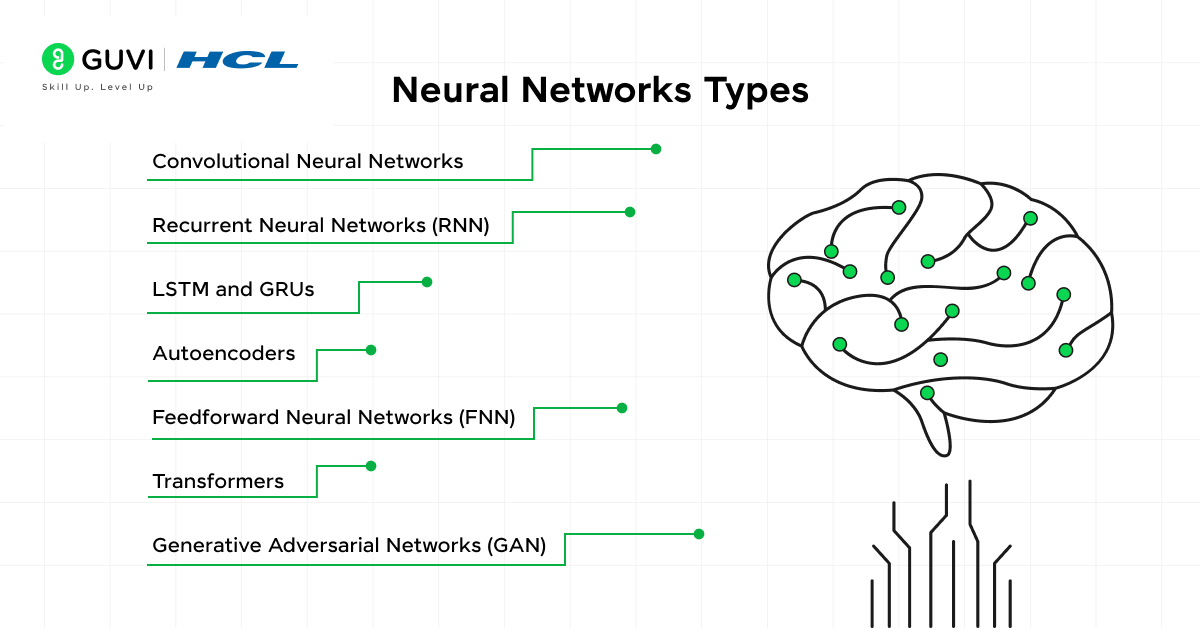
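A classic way to see the difference is the XOR problem. The sketch below is only an illustration: the weights in `xor_mlp` are hand-picked rather than learned, and `single_layer_fits_xor` simply brute-forces a grid of candidate weights that you pass in (both names are hypothetical).

```python
from itertools import product

# Truth table for XOR: the output is 1 only when the two inputs differ.
XOR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}


def step(x):
    """Threshold activation used by the classic perceptron."""
    return 1 if x > 0 else 0


def single_layer(a, b, w1, w2, bias):
    """One layer of weights directly between the inputs and the output."""
    return step(w1 * a + w2 * b + bias)


def xor_mlp(a, b):
    """Two hidden neurons (OR and AND) with hand-picked weights, then one output."""
    h_or = step(a + b - 0.5)    # fires if at least one input is 1
    h_and = step(a + b - 1.5)   # fires only if both inputs are 1
    return step(h_or - h_and - 0.5)   # OR but not AND, which is XOR


def single_layer_fits_xor(grid):
    """Check every (w1, w2, bias) combination from the grid against XOR."""
    return any(
        all(single_layer(a, b, w1, w2, bias) == y for (a, b), y in XOR.items())
        for w1, w2, bias in product(grid, repeat=3)
    )
```

No setting of the single-layer weights reproduces XOR because the two classes cannot be separated by one straight line, while a single hidden layer is enough to do it.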

- What are Neural Networks? Give me its types.

Neural networks are computing systems loosely modeled on the human nervous system that learn from large datasets to solve complex problems. Many types of neural networks exist, and new ones are still being developed. Some of the common types are:

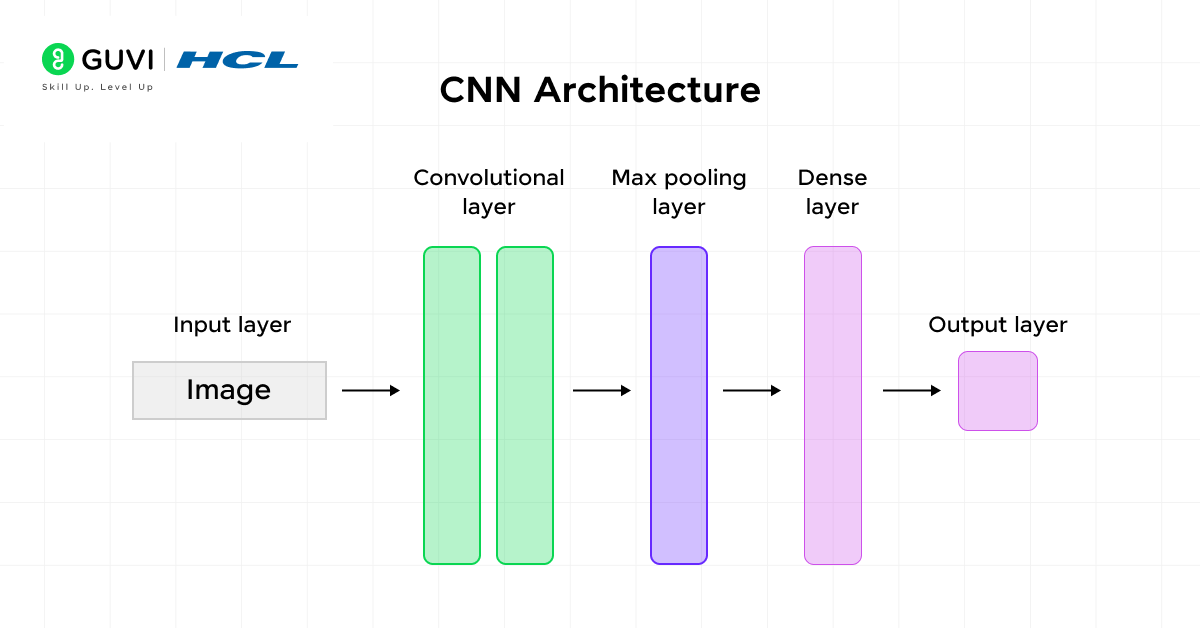

- Convolutional Neural Networks (CNN): It is used for tasks like object detection and image classification.

- Recurrent Neural Networks (RNN): Tasks include time series forecasting and text generation.

- LSTM and GRUs: These are used in speech synthesis, chatbots, and sentiment analysis.

- Autoencoders: Most of the tasks include dimensionality reduction, anomaly detection, and unsupervised learning.

- Feedforward Neural Networks (FNN): This is used for regression and classification tasks that have structured datasets.

- Generative Adversarial Networks (GAN): They are popular for generating images, transferring styles, producing synthetic data, and super-resolution.

- Transformers: They are suitable for natural language processing (NLP) tasks.

- What are the real-world applications of deep learning?

A wide range of industries use deep learning in the real world. Some of them are:

- Object detection for autonomous vehicles

- Customized chatbots for customer support

- Medical image diagnosis

- Predictive solutions in the retail industry for trend prediction

- You are using a deep neural network for a prediction task. After training your model, you notice that it is strongly overfitting the training set and that the performance on the test set isn’t good. What can you do to reduce overfitting?

To reduce overfitting in a deep neural network, changes can be made in three places/stages: The input data to the network, the network architecture, and the training process.

- The input data to the network:

- Check if all the features are available and reliable

- Check if the training sample distribution is the same as the validation and test set distributions. If the distributions differ, the model will struggle to predict well because it has never seen the patterns in the validation data.

- Check for train / valid data contamination (or leakage)

- Check that the dataset is large enough; if not, try data augmentation to increase its size

- Check that the dataset is balanced

- Network architecture:

- Overfitting could be due to model complexity. Question each component:

- Can fully connected layers be replaced with convolutional + pooling layers?

- What is the justification for the number of layers and the number of neurons chosen? Given how hard it is to tune these, can a pre-trained model be used?

- Add regularization – lasso (l1), ridge (l2), elastic net (both)

- Add dropouts

- Add batch normalization

- How would you handle the overfitting in a deep learning model?

Overfitting occurs when the model fits the training dataset too closely, noise included, and then struggles to perform correct inference on unseen data. A common way to handle overfitting is to apply regularization techniques such as L1 or L2 regularization, which add a penalty term on the weights to the loss function. These strategies can be combined with early stopping, which ends training once the validation loss stops improving.
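The two ideas can be sketched in a few lines of plain Python. This is a simplified illustration, not a full training loop: `l2_penalty` and `early_stopping_epoch` are hypothetical helper names, and the `patience` parameter (how many non-improving epochs to tolerate) is an assumption that the text above does not specify.

```python
def l2_penalty(weights, lam):
    """L2 (ridge) penalty: lam times the sum of squared weights.

    In training, this value is added to the data loss before backpropagation.
    """
    return lam * sum(w * w for w in weights)


def early_stopping_epoch(val_losses, patience=2):
    """Return the epoch index at which training would stop.

    Training stops once the validation loss has failed to improve on its
    best value for `patience` consecutive epochs.
    """
    best = float("inf")
    bad_epochs = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best = loss
            bad_epochs = 0          # improvement: reset the counter
        else:
            bad_epochs += 1
            if bad_epochs >= patience:
                return epoch        # no improvement for `patience` epochs
    return len(val_losses) - 1      # never triggered: ran all epochs
```

In practice the weights from the best validation epoch are usually restored after stopping, since the last epochs have already started to overfit.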

- When should you use deep learning over machine learning solutions?

Deep learning models are a good choice when the dataset is large and unstructured and meaningful information has to be extracted from irregular data such as images, audio, or text. Understanding and experimenting with each deep learning model will help you choose the best model for specific use cases. Here are some of the problems that can be solved more efficiently using deep learning solutions:

- Image classification of animals and plants.

- Voice recognition, Translation, and other language-based tasks.

- Smart locks using face recognition in real-time images.

- Stock market predictions for the long term.

- What is CNN?

CNN stands for Convolutional Neural Network, a type of neural network mostly used for computer vision tasks such as real-time object detection, image classification, image processing, and segmentation. Using convolution operations with learnable filters, it detects textures, structures, patterns, and edges in images and uses them to solve specific problems.

Interested in learning more about artificial intelligence and machine learning? Then, be sure to enroll in HCL Guvi’s IIT-M Pravartak certified Artificial Intelligence & Machine Learning Course.

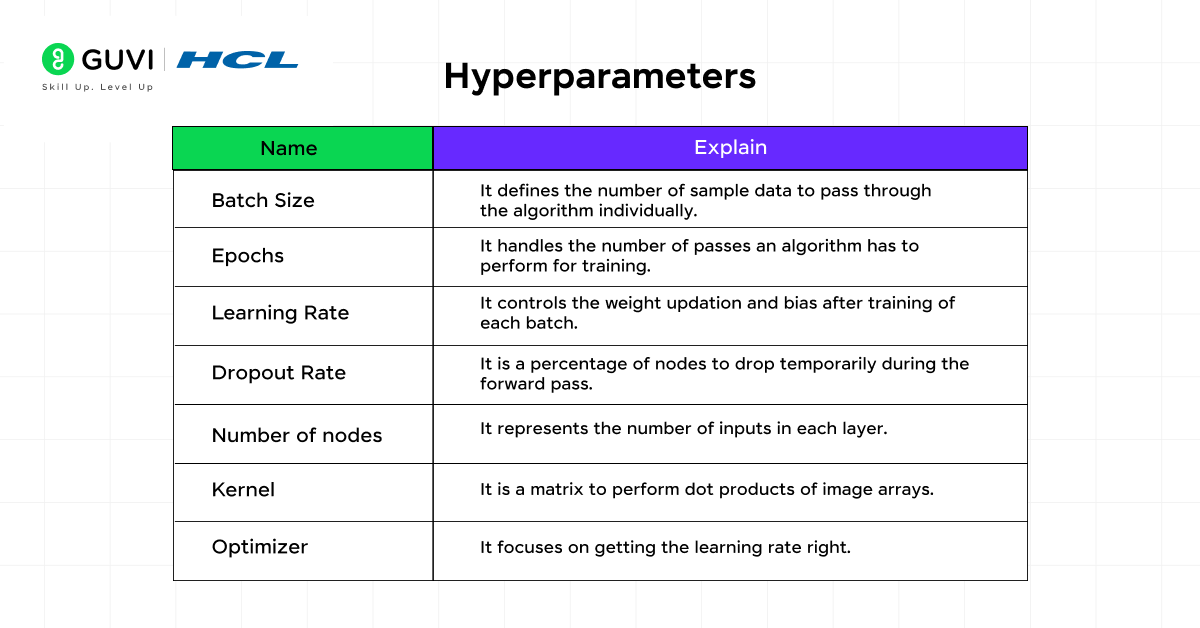

- Can you name and explain a few hyperparameters used for training a neural network?

Hyperparameters are settings that affect the performance of the model but are not learned from the data; they are set manually before training.

- Batch Size: It defines the number of training samples processed together before the model’s weights are updated.

- Epochs: It is the number of complete passes the algorithm makes over the training dataset.

- Learning Rate: It controls how large a step the weights and biases take at each update.

- Dropout Rate: It is the percentage of nodes to drop temporarily during the forward pass.

- Number of nodes: It represents the number of neurons (units) in each layer.

- Kernel: It is a small matrix that slides over the image and computes dot products with each image patch; its size is the hyperparameter.

- Optimizer: It is the algorithm, such as SGD or Adam, that uses the gradients to update the weights.

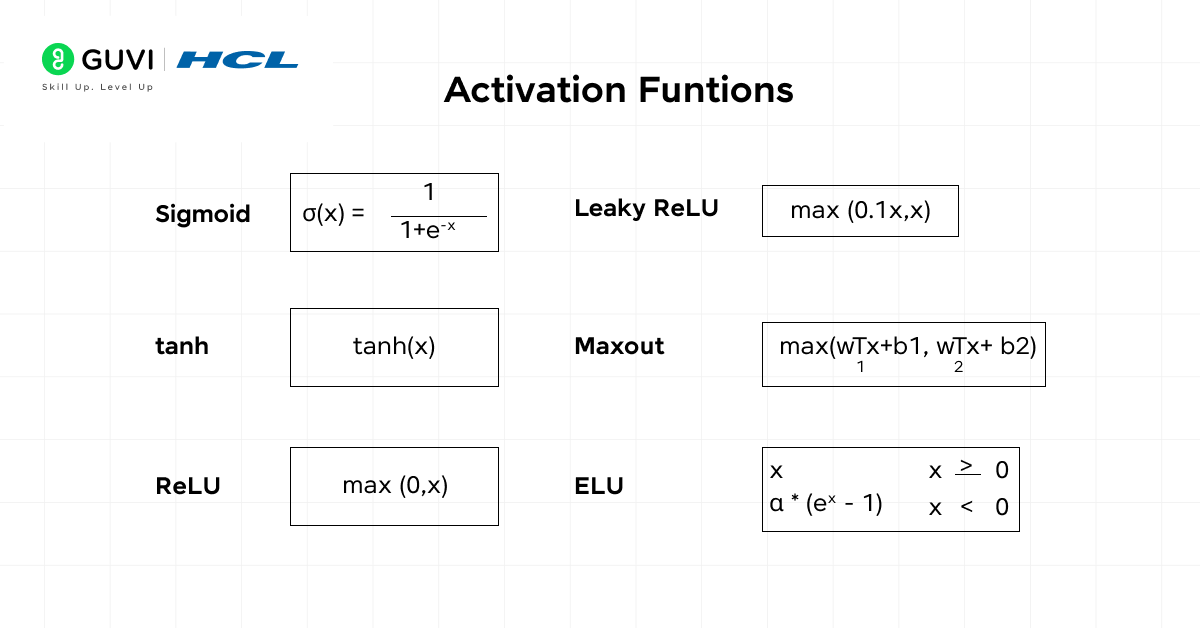

- What is the use of activation functions? Give me its types.

Activation functions play a crucial role in neural networks by introducing non-linearity so the network can find and learn complex patterns. They are applied at the neuron level, mapping each neuron’s weighted input to its output value. Without activation functions, a neural network would behave like a linear model, no matter how many layers it has. Some of the types of activation functions are:

- ReLU: It outputs the input if it is positive and 0 otherwise; it is the simplest and most common function.

- Sigmoid: It is used in binary classification because its output values range between 0 and 1.

- Tanh: It is similar to sigmoid, but the output values range between -1 and 1.

- Leaky ReLU: It is a variant of ReLU that allows small negative values as outputs.

- Softmax: It converts all the logits into probabilities and is used in multi-class classification problems.

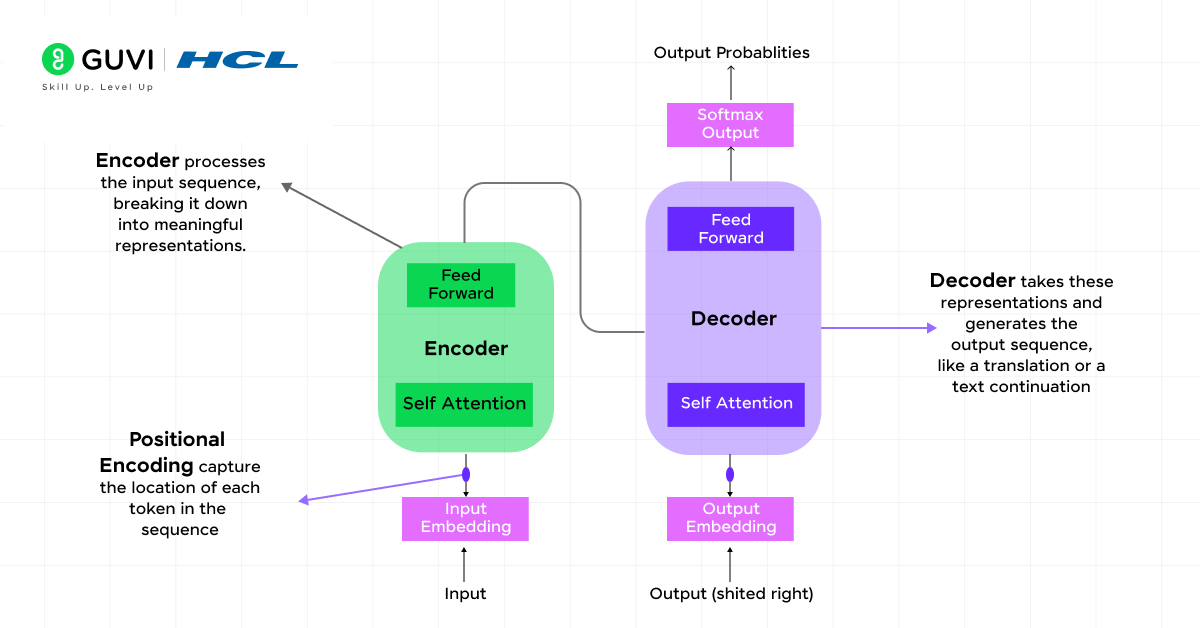
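The activation functions listed above are short enough to write out directly. The following is a minimal sketch using only Python's `math` module; the `alpha=0.01` slope for Leaky ReLU is a commonly used default and an assumption here, not a value given in this blog.

```python
import math


def relu(x):
    """Pass positive values through, clamp negatives to 0."""
    return max(0.0, x)


def leaky_relu(x, alpha=0.01):
    """Like ReLU, but negative inputs keep a small slope `alpha`."""
    return x if x > 0 else alpha * x


def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x):
    """Squash any real number into the range (-1, 1)."""
    return math.tanh(x)


def softmax(logits):
    """Turn a list of logits into probabilities that sum to 1."""
    m = max(logits)                        # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]
```

Note that softmax works on a whole vector of logits at once, while the other four functions are applied to each value independently.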

- Describe the process of generating text using a transformer-based language model.

Transformer models like GPT follow certain steps to generate relevant text as output. The process includes the following steps:

- Encoding: The user’s prompt is tokenized and converted into embeddings, the numeric vectors the model works with.

- Self-Attention Mechanism: The model processes the embedded input using attention to weigh the previous tokens against each other and determine which of them are relevant for the next token.

- Decoding: Using the learned patterns, it predicts the next token, one at a time.

- Autoregression: The new token is appended to the input, and the process repeats until a stopping condition is met, such as an end-of-sequence token or the maximum length of the response.
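The decode-and-append loop can be sketched with a toy stand-in for the model. Everything in `TOY_MODEL` is invented for illustration: it is a hypothetical lookup table of next-token scores, and unlike a real transformer it only looks at the last token rather than attending to the whole sequence.

```python
# Hypothetical next-token scores standing in for a trained transformer.
# A real model would compute these from the full context using attention.
TOY_MODEL = {
    "<s>": {"deep": 0.9, "learning": 0.1},
    "deep": {"learning": 0.8, "</s>": 0.2},
    "learning": {"works": 0.6, "</s>": 0.4},
    "works": {"</s>": 1.0},
}


def generate(prompt_tokens, max_new_tokens=10):
    """Greedy autoregressive decoding: pick the best token, append, repeat."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        # Unknown tokens fall back to ending the sequence.
        scores = TOY_MODEL.get(tokens[-1], {"</s>": 1.0})
        next_token = max(scores, key=scores.get)   # greedy choice
        if next_token == "</s>":                   # end-of-sequence token
            break
        tokens.append(next_token)                  # feed the output back in
    return tokens
```

Greedy decoding always takes the highest-scoring token; production systems often sample from the distribution instead (for example with a temperature setting) to get more varied text.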

- What is computer vision, and why is it important?

Computer vision is a field in artificial intelligence that acts as an eye for machines to see through cameras, interpret, analyse, and understand visual data such as images and videos. It is used in many real-world applications such as autonomous vehicle driving, facial recognition, medical image analysis, industrial automation, and surveillance.

- Can you explain the concept of feature extraction in computer vision?

When an image is given to the system, it is analyzed to extract features by identifying and isolating important patterns, such as edges, textures, shapes, or regions of interest. In deep learning, convolutional layers learn to extract these features automatically, which removes the need for hand-crafted features.

- Why should we use batch normalization?

Batch normalization normalizes the inputs to a layer for each mini-batch, which keeps the mean and variance of those inputs stable. The benefits of using batch normalization include:

- It allows faster training with higher learning rates

- It improves training stability by reducing internal covariate shift

- It has a mild regularizing effect, which helps to reduce overfitting
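To make the normalization step concrete, here is a minimal sketch for a single feature across one mini-batch. The names `gamma`, `beta`, and `eps` follow common convention (learnable scale, learnable shift, and a small constant for numerical safety); they are assumptions for this example, and the running statistics that are used at inference time are left out.

```python
import math


def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature over a mini-batch, then scale and shift it."""
    mean = sum(batch) / len(batch)
    var = sum((x - mean) ** 2 for x in batch) / len(batch)
    # Subtract the batch mean and divide by the batch standard deviation.
    normalized = [(x - mean) / math.sqrt(var + eps) for x in batch]
    # gamma and beta let the network undo the normalization if that helps.
    return [gamma * n + beta for n in normalized]
```

After this step the feature has roughly zero mean and unit variance within the batch, regardless of the scale of the incoming activations.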

- What is a GPU and why is it required?

GPU (Graphics Processing Unit) is a specialized processor for handling parallel computations quickly and efficiently. Deep learning involves massive matrix operations to train neural networks. A GPU can perform thousands of these operations in parallel, which speeds up the training process, especially with large datasets.

- What are the different layers in ANN?

In general, there are three main types of layers present in neural networks: input layer, hidden layers, and output layer. The input layer receives the input data, the hidden layers perform complex computations, and the output layer produces the final prediction. There are different types of hidden layers for ANN, and each can be used for various purposes. Some of the hidden layers are:

- Dense Layer: It computes a weighted sum of its inputs and applies an activation function

- Convolutional Layer: It applies a convolution using filters

- Recurrent Layer: It maintains state across time steps

- Dropout Layer: It randomly drops neurons during training

- Batch Normalization: It stabilizes and speeds up the training

- What is forward and backward propagation?

| Forward propagation | Backward propagation |
| --- | --- |
| It starts with the input data and passes it through the network layer by layer, applying weights and activation functions, until the output layer produces a prediction. | It starts with the output of a neural network and moves backward, adjusting weights to minimize the error between actual and predicted output. |
| It is used to compute predictions and the loss | It is used to train the model based on the error rate |
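In a neural network, forward propagation computes a prediction from the input, and backward propagation uses the chain rule to work out how each weight should change. The sketch below shows both for the smallest possible case, a single linear neuron with a squared-error loss. The function name `train_step` and the learning rate `lr=0.1` are assumptions made for this illustration.

```python
def train_step(w, b, x, y, lr=0.1):
    """One forward pass, one backward pass, and one gradient descent update."""
    # Forward propagation: compute the prediction and the loss.
    y_hat = w * x + b
    loss = (y_hat - y) ** 2

    # Backward propagation: chain rule from the loss back to each parameter.
    dloss_dyhat = 2 * (y_hat - y)
    dw = dloss_dyhat * x      # d(loss)/dw
    db = dloss_dyhat          # d(loss)/db

    # Gradient descent: move each parameter against its gradient.
    return w - lr * dw, b - lr * db, loss
```

Calling `train_step` repeatedly on the same example drives the loss toward zero; real networks do the same thing across many layers and many examples, with a framework computing the gradients automatically.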

- How are the number of hidden layers and the number of neurons per hidden layer selected?

The number of hidden layers and the number of neurons per hidden layer differ for every problem statement. There is no exact rule for finding the right number of hidden layers. We need to experiment with different numbers of hidden layers for each problem until we find one that performs well on validation data. Simple problems often need only one hidden layer, whereas complex problems need more. With practice, you will get a feel for the tradeoff between problem complexity and the number of hidden layers.

- Define epoch, iteration, and batches.

Epoch: It is one complete pass of the entire training dataset through the model.

Iteration: It is one weight update, performed after processing a single batch.

Batches: It is a subset of the training data that is processed in one go.

The relationship between epoch, iteration, and batch size is given as:

Samples processed in one epoch = Number of iterations * Batch Size

Number of iterations per epoch = (Total number of sample data) / Batch Size
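As a quick check on the arithmetic, the sketch below counts iterations for a given dataset and batch size. It assumes the last, smaller batch is still processed when the dataset size is not an exact multiple of the batch size, which is why it rounds up; the function names are hypothetical.

```python
import math


def iterations_per_epoch(num_samples, batch_size):
    """Number of batches (weight updates) needed to see every sample once."""
    # Round up so that a final partial batch still counts as one iteration.
    return math.ceil(num_samples / batch_size)


def total_iterations(num_samples, batch_size, epochs):
    """Total weight updates over the whole training run."""
    return iterations_per_epoch(num_samples, batch_size) * epochs
```

For example, 1,000 samples with a batch size of 100 give 10 iterations per epoch, so training for 5 epochs performs 50 iterations in total.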

- What is fine-tuning in Deep learning?

Fine-tuning is a transfer learning technique where an existing pre-trained model (that is already trained on a large dataset) is further trained on a smaller, task-specific dataset. Instead of training the model entirely from scratch, fine-tuning updates some or all of the model’s weights for the required task, typically with a smaller learning rate.

- What is LSTM, and how does it work?

LSTM (Long Short-Term Memory) is a type of RNN that is designed to learn long-term dependencies in sequential data. An LSTM cell contains three gates: the forget gate, the input gate, and the output gate. The forget gate decides what information is kept in the cell state and what is discarded. The input gate selects which new information is added, and the output gate determines what the current cell outputs.
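The gate logic can be sketched for a single time step with scalar values. This is a simplified illustration under stated assumptions: real LSTM layers use weight matrices and vectors, and the parameter dictionary `p` with keys such as `wf`, `uf`, and `bf` is a naming scheme invented for this example.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, p):
    """One LSTM time step with scalar input, hidden state and cell state.

    `p` holds an input weight (w*), a recurrent weight (u*) and a bias (b*)
    for each of the forget (f), input (i), output (o) and candidate (g) parts.
    """
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate values

    c = f * c_prev + i * g     # keep part of the old state, add new information
    h = o * math.tanh(c)       # expose part of the cell state as the output
    return h, c
```

The additive update of the cell state `c` is what lets information, and gradients, survive across many time steps.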

- What is GAN?

GAN (Generative Adversarial Network) is a type of deep learning model that pairs two neural networks, a generator and a discriminator, which compete against each other to generate new data. The generator creates new data, while the discriminator tries to distinguish between real and generated data, which pushes the generator to create increasingly realistic data. It is used to generate images, music, and text.

- What are the key components of a transformer model?

The key components of a transformer model include:

- Self-attention mechanism: It helps the model focus on relevant parts of the input query.

- Encoder-decoder network: The encoder analyzes the input sequence and produces contextual representations, which the decoder uses to construct the output sequence.

- Multi-head attention: It allows the model to attend to different parts of a sequence from multiple perspectives.

- Positional encoding: It adds information about the order of tokens, since attention by itself does not know where each token sits in the sequence.
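The core of the attention mechanism is scaled dot-product attention, which can be sketched in plain Python with lists of vectors. This is a minimal single-head illustration: it leaves out the learned projection matrices, masking, and multiple heads of a real transformer, and the function names are made up for this example.

```python
import math


def softmax(scores):
    m = max(scores)                               # for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)                 # attention weights sum to 1
        # Output is the weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs
```

Multi-head attention runs several of these in parallel on different learned projections of the same input and concatenates the results.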

- What are some challenges or ethical considerations associated with LLMs?

- Bias and Fairness: LLMs may inherit bias against gender or racial groups present in the training data.

- Misinformation: They can generate false or misleading content that sounds plausible.

- Misuse: They may be misused for malicious purposes such as generating spam, deepfakes, or misinformation.

- Privacy: They may unintentionally memorize and output private information from training data.

- What do you know about dropouts?

Dropout is a regularization technique used to avoid overfitting of the model during training. At each training step, a random set of neurons is temporarily dropped. This forces the network to learn redundant representations, which improves robustness.
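A minimal sketch of the idea, using the "inverted dropout" variant: surviving activations are scaled up during training so that nothing needs to change at inference time. The variant, the function name `dropout`, and the optional `rng` argument are assumptions for this illustration rather than details from the text above.

```python
import random


def dropout(activations, rate=0.5, training=True, rng=random):
    """Randomly zero a fraction `rate` of activations during training."""
    if not training or rate == 0.0:
        return list(activations)      # dropout is switched off at inference
    keep = 1.0 - rate                 # probability that a neuron survives
    # Scale survivors by 1/keep so the expected activation stays the same.
    return [a / keep if rng.random() < keep else 0.0 for a in activations]
```

Because a different random subset is dropped at every step, no single neuron can be relied on, which is what pushes the network toward redundant representations.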

- What is bias and variance in deep learning models? How can you achieve a balance between the two?

Bias is the error that comes from overly simplistic assumptions in the model (underfitting).

Variance refers to the error from sensitivity to small fluctuations in training data (overfitting).

To achieve a balance between bias and variance, we can use cross-validation to detect the problem, apply regularization techniques to prevent overfitting, and use more complex models to reduce bias if underfitting occurs.

- The input image has been transformed into a 28 x 28 matrix and is convolved with a 7 x 7 kernel with a stride of 1. What will the size of the convolved matrix be?

The output size formula (with no padding) is given as:

$$O = \frac{N - F}{S} + 1$$

Where, N => input size, F => filter/kernel size, S => Stride

Given data:

N = 28

F = 7

S = 1

Apply the above formula,

$$O = \frac{28 - 7}{1} + 1 = 22$$

Since the convolution is applied in both the horizontal and vertical directions, the output matrix size is 22 x 22.
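The same calculation as a small helper, in case you want to check other sizes quickly. The `padding` parameter is an addition for this sketch (the question above uses no padding), and `conv_output_size` is a hypothetical name.

```python
def conv_output_size(n, f, stride=1, padding=0):
    """Output width (or height) of a convolution along one dimension.

    n: input size, f: kernel size, stride: step between kernel positions,
    padding: number of zeros added on each side (an extra assumption here).
    """
    return (n + 2 * padding - f) // stride + 1


size = conv_output_size(28, 7, stride=1)
print(f"{size} x {size}")   # prints "22 x 22"
```

With a padding of 3 on each side, the same 7 x 7 kernel would keep the output at 28 x 28, which is how "same" padding works for odd kernel sizes.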

- What is transfer learning?

Training a model from scratch for a specific task would require a huge amount of data, and it is also a tedious process. Transfer learning lets us take the knowledge of a model pre-trained on a large dataset and fine-tune it on a new, smaller dataset for a specific task, in a short period and at low cost.

Scenario-Based Interview Questions

1. You trained a neural network, and it performs well on training data but poorly on test data. What is happening and how do you fix it?

This is overfitting. The model has memorized the training patterns instead of learning general features. You can fix this by adding dropout, L2 regularization, data augmentation, simplifying the architecture, or collecting more data. Early stopping and cross‑validation also help maintain generalization performance.

2. A CNN struggles to recognize images captured under different lighting conditions. What would you do?

The model isn’t robust to real‑world variations. Use data augmentation such as brightness shifts, rotations, cropping, and color jitter to expose the network to more variations. Batch normalization can stabilize learning, and training with more diverse data improves generalization across lighting environments.

3. An NLP model gives different outputs for sentences that mean the same thing. Why, and how can you improve it?

The model may be underfitting or using weak embeddings that fail to capture semantic meaning. Switching to contextual embeddings like BERT, fine‑tuning the model, increasing capacity, and adding more training examples with paraphrased sentences can significantly improve consistency and understanding.

4. Your LSTM struggles to capture dependencies in very long sequences. What should you try?

Traditional RNNs and LSTMs can lose information over long ranges. Adding attention mechanisms helps the model focus on important tokens. Alternatively, switching to Transformers improves long-range dependency handling, often with better accuracy and faster training; GRUs are a lighter recurrent option when efficiency matters more.

5. You only have a small labeled dataset for image classification. How do you build an effective model?

Transfer learning is ideal. Start with a pre-trained CNN, freeze the early layers, and fine-tune the later layers on your dataset. This reuses powerful feature detectors learned from large datasets, which reduces overfitting and training time and improves accuracy with limited data.

6. Training loss keeps decreasing, but validation loss starts increasing after some epochs. What is happening?

The model is beginning to overfit. It is learning noise and specific patterns from training data. Apply early stopping, dropout, stronger regularization, and more augmentation. Monitoring validation curves and tuning capacity helps maintain a balanced generalization performance.

7. Training is very slow and doesn’t converge well. What changes would you make?

Possible causes include a poor learning rate, unsuitable optimizer, or unnormalized inputs. Try Adam or RMSProp, apply learning‑rate scheduling, normalize or standardize inputs, and ensure proper initialization. These adjustments typically stabilize gradients and accelerate convergence.
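Two of these fixes are simple enough to sketch directly: standardizing inputs and decaying the learning rate on a schedule. The step-decay schedule below is just one common choice, and the `drop=0.5` and `every=10` defaults are illustrative assumptions, not recommended values.

```python
import math


def standardize(values):
    """Rescale a feature to zero mean and unit standard deviation."""
    mean = sum(values) / len(values)
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    if std == 0:
        return [0.0] * len(values)    # constant feature: nothing to scale
    return [(v - mean) / std for v in values]


def step_decay(initial_lr, epoch, drop=0.5, every=10):
    """Multiply the learning rate by `drop` once every `every` epochs."""
    return initial_lr * drop ** (epoch // every)
```

Standardized inputs keep all features on a similar scale so that one learning rate suits every weight, while the schedule allows large steps early on and smaller, more careful steps later.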

8. You need your model to work on data from a slightly different domain than the training set. What approach fits best?

This is a domain shift problem. Fine‑tuning on a small sample from the new domain, using domain adaptation techniques, or leveraging robust pre‑trained models helps the network adjust without retraining from scratch. Regularization ensures the model doesn’t forget the original domain completely.

9. A CNN detects large objects well but misses very small ones. How do you improve detection?

Small objects often vanish after repeated downsampling. Increase input resolution, use feature pyramid networks, add skip connections, or adjust anchor scales. These techniques preserve fine‑grained details and improve detection accuracy for smaller targets.

10. A Transformer model is powerful but training takes too long. How do you make it faster without losing too much accuracy?

Use mixed-precision training and efficient batching to reduce computation, and gradient accumulation to fit larger effective batch sizes in memory. Distilled Transformer variants retain most of the accuracy with fewer parameters. Limiting sequence length and pruning unnecessary layers can significantly speed up training while preserving most of the performance.

💡 Did You Know?

- Some interviewers test your knowledge by giving a pre-trained model and asking how you would fine-tune it for a completely different task.

- Certain activation functions like Swish and GELU are coming up more often in interviews as alternatives to traditional ReLU in high-performance models.

- Transfer learning can drastically reduce training time, letting models achieve state-of-the-art results with only a fraction of the data.

Conclusion

In conclusion, this blog is a last-minute guide to help you ace your deep learning technical interview. It covers deep learning topics ranging from neural networks and their types to activation functions, natural language processing, computer vision, transformer models, autoencoders, transfer learning, hyperparameters, and loss functions. Mastering these concepts will help you not only in data science interviews but also in other AI-related roles that require deep learning knowledge. Happy Learning!
