What Is Transfer Learning in AI and Why It Matters

Dec 15, 2025

Picture a scenario in which every time you taught yourself something new, you had to forget everything you already knew. You learned how to drive a car, but when you switched to a bike, you started at square one. This would be crazy, right? Yet for a very long time, this is how artificial intelligence worked: every new model was trained as if it had never seen the world before, innocently blank and dreadfully slow to learn.

Transfer Learning in AI changes all of that. It allows machines to take knowledge learned in one task and apply it to learn a new task much faster. A model trained to recognize cats, for example, can learn to recognize dogs with drastically less data, saving both time and resources.

This improvement does more than make AI smarter. It also makes the way AI learns more human-like. In this blog, we will discuss what Transfer Learning is in AI, how it works, why it is important, and how it is changing fields like healthcare and natural language processing.

Table of contents

- What Is Transfer Learning in AI?

- Key Definitions

- Why Use Transfer Learning?

- Mechanisms & Types of Transfer Learning

- Feature Extraction vs Fine-Tuning

- Taxonomy & Transfer Types

- Transfer Learning in AI Workflow

- Applications of Transfer Learning in AI

- Computer Vision

- Natural Language Processing (NLP)

- Specialized Domains (Healthcare, Finance, etc.)

- Emerging Research Areas

- Benefits and Business Value

- Challenges and Limitations

- Wrapping it up:

- FAQs

- What is Transfer Learning in Artificial Intelligence?

- Why is Transfer Learning significant to AI?

- What are the examples of Transfer Learning used in the real world?

- What’s the difference between Transfer Learning and traditional machine learning?

What Is Transfer Learning in AI?

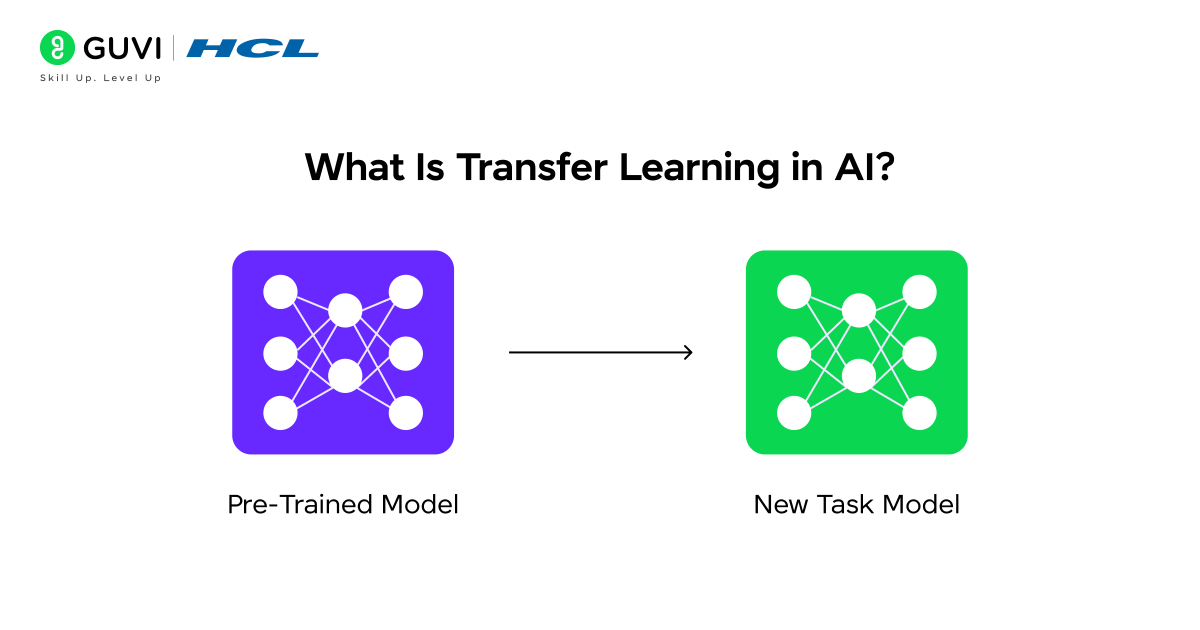

Transfer learning in AI is a machine learning methodology in which knowledge that was acquired while solving one problem (the “source task”) is applied to a different but related problem (the “target task”). In more formal terms, we have a source domain and source learning task, and a target domain and target learning task that are different (possibly in data distribution or label space). We want to improve the performance on the target task by transferring knowledge and/or representations learned in the source domain.

Why is this powerful? In traditional supervised learning, we build a model from scratch for each new task, assuming that the training and test data come from the same distribution. The model therefore has to discover every relevant feature on its own. But in real-world problems, related tasks often share useful features, and we don’t need to reinvent the wheel every time. Instead, we reuse the “wheels” already learned on earlier tasks.

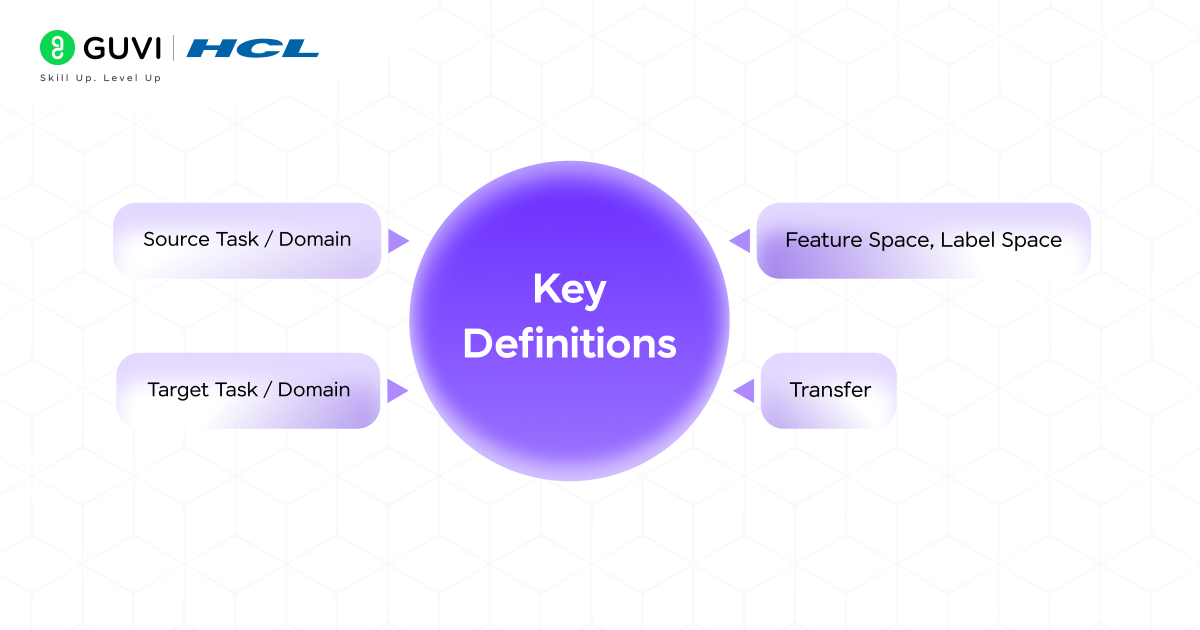

Key Definitions

- Source Task / Domain: The task or domain that the model was first trained on (with lots of data).

- Target Task / Domain: The new problem or dataset, typically smaller, to which we hope to transfer our previous learning.

- Feature Space, Label Space: In formal definitions, a domain is defined with a feature space and marginal distribution, and a task is defined as the label space plus a predictive function.

- Transfer: The act of taking what is learned (parameters, representations, embeddings), and applying it to the target task.
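The formal definitions in the list above are often written compactly as follows. The symbols are a common convention in the transfer learning literature rather than notation this article introduces: $\mathcal{X}$ is the feature space, $P(X)$ the marginal distribution over it, $\mathcal{Y}$ the label space, and $f(\cdot)$ the predictive function, with subscripts $S$ and $T$ marking source and target.

```latex
\[
\mathcal{D} = \{\mathcal{X},\; P(X)\}, \qquad
\mathcal{T} = \{\mathcal{Y},\; f(\cdot)\}
\]
\[
\text{Transfer learning applies when } \mathcal{D}_S \neq \mathcal{D}_T
\ \text{ or } \ \mathcal{T}_S \neq \mathcal{T}_T .
\]
```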

Why Use Transfer Learning?

There are many good reasons to do this.

- It decreases the amount of data we need, which helps in scenarios where little labeled data is available for the target task.

- It accelerates training, because the model starts from pre-trained weights instead of learning everything from scratch.

- It makes our computation cheaper, because we don’t have to train a massive model from the ground up.

- And it can improve generalization, because the model starts from representations learned on a large, varied source dataset rather than on the small target dataset alone, which can lessen overfitting.

Mechanisms & Types of Transfer Learning

Feature Extraction vs Fine-Tuning

Two typical approaches:

- Feature Extraction: In this approach, the pre-trained model (trained on the source task) is used as a fixed feature extractor. The pre-trained layers are frozen (their weights don’t change), and only a new classifier head (or a small number of added layers) is trained on the target dataset. This approach is useful when the target dataset is small.

- Fine-Tuning: Instead of freezing most of the model, we allow some or all of the layers to be updated during training on the target task. This allows further adaptation to the target domain, but it carries a higher risk of overfitting and requires more data and compute.
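The difference between the two approaches above comes down to which parameters receive gradient updates. The following is a minimal, framework-free sketch under toy assumptions: the "model" is just `head * (backbone * x)`, the pre-trained backbone weight of 2.0 and the target relationship `y = 3x` are invented for illustration, and `Param` and `train` are hypothetical names, not part of any library.

```python
from dataclasses import dataclass


@dataclass
class Param:
    """A single scalar weight with a flag saying whether it may be updated."""
    value: float
    trainable: bool = True


def train(backbone, head, data, lr=0.01, epochs=200):
    """Plain SGD on squared error for the toy model head * (backbone * x).

    Only parameters with trainable=True are updated, which is the whole
    difference between feature extraction and fine-tuning.
    """
    for _ in range(epochs):
        for x, y in data:
            feature = backbone.value * x          # output of the "pre-trained" part
            error = head.value * feature - y      # prediction minus target
            grad_head = 2 * error * feature
            grad_backbone = 2 * error * head.value * x
            if head.trainable:
                head.value -= lr * grad_head
            if backbone.trainable:
                backbone.value -= lr * grad_backbone
    return backbone, head


# Toy target task (assumed for illustration): y = 3 * x
DATA = [(0.5, 1.5), (1.0, 3.0), (1.5, 4.5), (2.0, 6.0)]

# Feature extraction: backbone frozen at its pre-trained value, new head trained.
fe_backbone, fe_head = train(Param(2.0, trainable=False), Param(0.0), DATA)

# Fine-tuning: both the backbone and the new head are updated.
ft_backbone, ft_head = train(Param(2.0), Param(0.0), DATA)

print("feature extraction:", fe_backbone.value, fe_head.value)
print("fine-tuning:       ", ft_backbone.value, ft_head.value)
```

In the feature-extraction run the backbone weight stays exactly where pre-training left it and only the head adapts. In the fine-tuning run both weights move. Real frameworks express the same idea by marking parameters as non-trainable rather than with a hand-written flag.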

Taxonomy & Transfer Types

Transfers can also be understood in terms of a taxonomy based on characteristics of the domain and/or task similarities:

- Same domain, same task but different data set: e.g., image classification on one data set to another data set of an almost identical type.

- Same domain, different task: e.g., a model trained for object classification reused for object detection or object segmentation.

- Different domain, different task: more challenging, e.g., a language model reused for an audio classification task.

In each case, you have to evaluate whether knowledge transfer is positive (helpful), negative (harmful), or neutral.
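One simple way to make that evaluation concrete is to compare the target-task score of a transferred model against a baseline trained from scratch on the same target data. The sketch below assumes a higher-is-better metric such as accuracy; the function name `transfer_effect` and the `tolerance` threshold are hypothetical choices for illustration.

```python
def transfer_effect(scratch_score, transfer_score, tolerance=0.01):
    """Label a transfer as positive, negative, or neutral.

    Both scores are measured on the same target validation set and are
    assumed to be higher-is-better (e.g. accuracy). Differences smaller
    than `tolerance` are treated as noise.
    """
    gain = transfer_score - scratch_score
    if gain > tolerance:
        return "positive"
    if gain < -tolerance:
        return "negative"
    return "neutral"


print(transfer_effect(scratch_score=0.80, transfer_score=0.91))
print(transfer_effect(scratch_score=0.80, transfer_score=0.72))
```

In practice the tolerance would be chosen from the run-to-run variance of the metric, so that a small random fluctuation is not mistaken for a real gain or loss.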

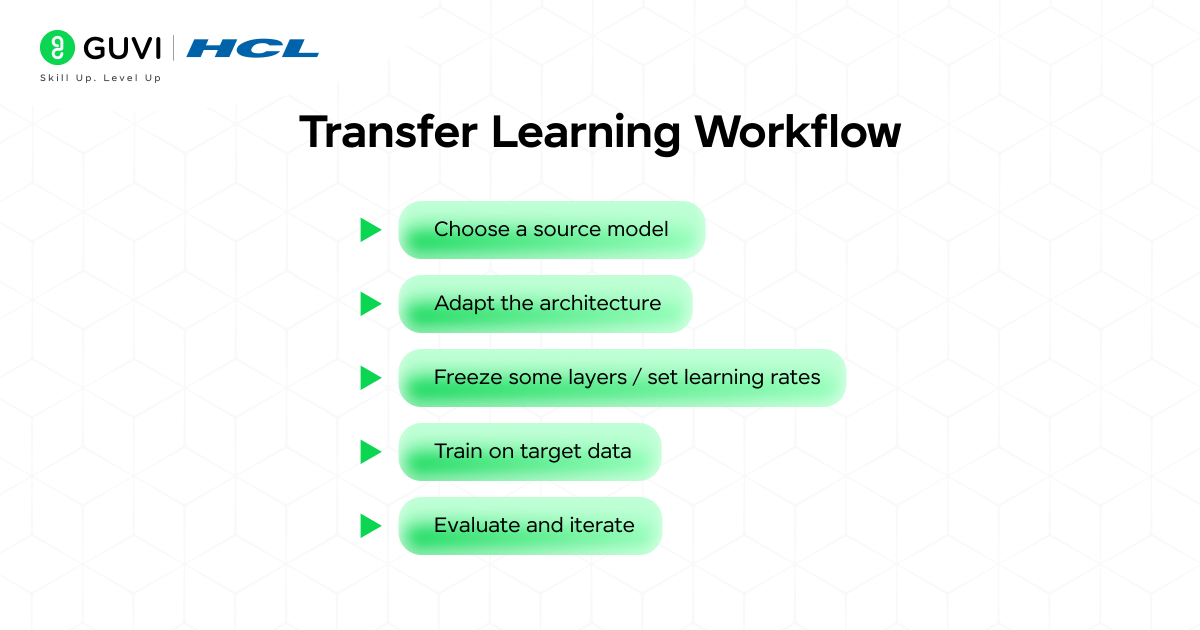

Transfer Learning in AI Workflow

- Choose a source model: Usually a model that was pre-trained on a large data source (e.g., ImageNet for vision, or large text corpora for language).

- Adapt the architecture: Replace or modify the classifier head, or add layers, so that the outputs match the classes of the target task.

- Freeze some layers / set learning rates: Decide which parts of the model will be trained, and consider setting lower learning rates for the pre-trained parts.

- Train on target data: Using the target dataset, either train only the new head (feature extraction) or fine-tune the model.

- Evaluate and iterate: Track the model's performance, unfreezing deeper layers and adjusting hyperparameters if necessary.
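The freezing and learning-rate steps of the workflow above can be sketched as a small planning helper. This is an illustrative sketch only: the layer names, the default learning rates, and the functions `build_param_groups` and `unfreeze_one_more` are all assumptions, and a real project would hand the equivalent information to its framework's optimizer.

```python
def build_param_groups(layer_names, n_frozen, backbone_lr=1e-4, head_lr=1e-3):
    """Return one {"layer", "lr"} entry per trainable layer.

    layer_names is ordered from input to output, and the last entry is the
    newly added head. The first n_frozen layers are frozen and omitted.
    Pre-trained layers get the smaller backbone_lr; the new head gets head_lr.
    """
    if not 0 <= n_frozen < len(layer_names):
        raise ValueError("n_frozen must leave at least the head trainable")
    last = len(layer_names) - 1
    groups = []
    for i, name in enumerate(layer_names):
        if i < n_frozen:
            continue  # frozen layer: no updates, so no entry
        lr = head_lr if i == last else backbone_lr
        groups.append({"layer": name, "lr": lr})
    return groups


def unfreeze_one_more(n_frozen):
    """Iteration step: release the deepest layer that is still frozen."""
    return max(0, n_frozen - 1)


layers = ["conv1", "conv2", "conv3", "head"]   # hypothetical layer names

n_frozen = 3                                   # start as pure feature extraction
print(build_param_groups(layers, n_frozen))

n_frozen = unfreeze_one_more(n_frozen)         # after evaluating, fine-tune conv3 too
print(build_param_groups(layers, n_frozen))
```

Starting with everything frozen except the head and releasing layers one at a time keeps the early, more general features intact while the later, more task-specific ones adapt.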

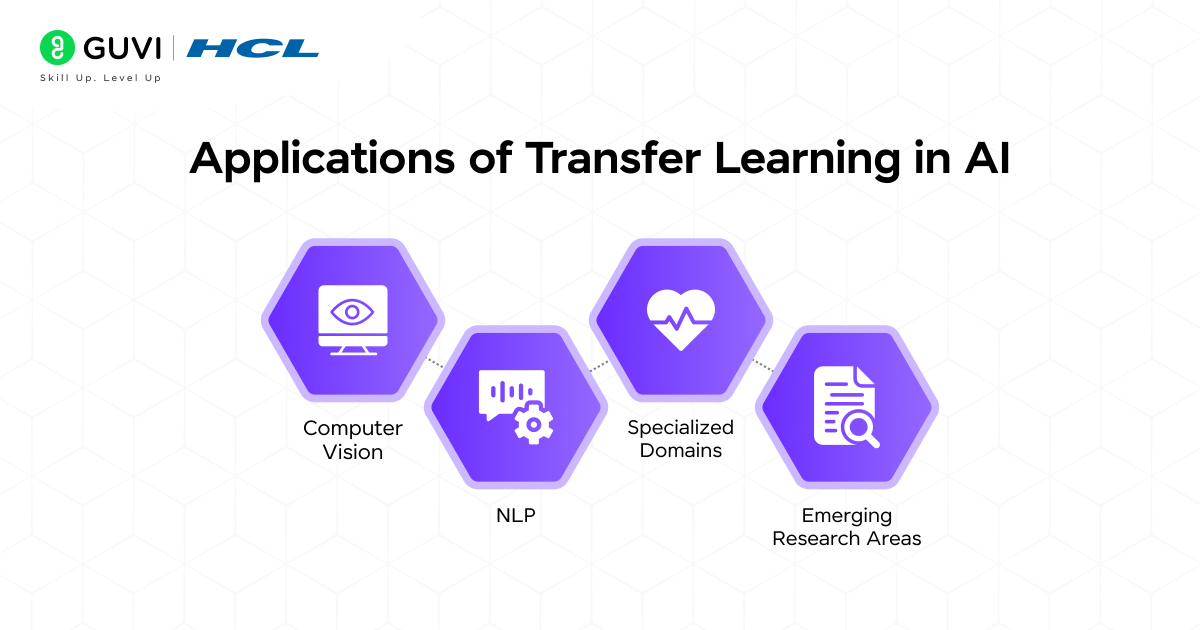

Applications of Transfer Learning in AI

Computer Vision

In image-based tasks, pre-trained convolutional neural networks (CNNs) like VGG, ResNet, and Inception (trained on datasets such as ImageNet) serve as the backbone for new tasks like medical image diagnosis, object detection, segmentation, or custom image classification.

For instance, a model trained to recognize general objects (cars, animals, chairs) may be fine-tuned to detect tumors in X-rays or abnormalities in satellite imagery.

Natural Language Processing (NLP)

In text and language tasks, large pre-trained language models (LLMs) are often transferred to domain-specific tasks. A model that has learned language structure from massive corpora can be fine-tuned for sentiment analysis, question-answering, translation, or summarization.

Because creating massive labeled text datasets is often expensive, transfer learning helps significantly.

Specialized Domains (Healthcare, Finance, etc.)

In domains with limited data (e.g., medical imaging, genomic data) or a high cost of annotation, transfer learning is especially valuable. For example, a general image recognition model can be adapted to MRI scans, or a financial fraud detection model can be repurposed across related fraud types.

Emerging Research Areas

Researchers are extending transfer learning into areas like tabular data classification using fine-tuned language models and cross-domain transfers such as cybersecurity intrusion detection.

This shows the concept is evolving and being stretched into domains beyond classical vision/NLP.

Benefits and Business Value

Adopting Transfer Learning in AI presents several advantages from a business or product standpoint:

- Faster time-to-market: Using pre-trained models shortens the development cycle considerably, so you can deploy a solution faster.

- Reduced cost: Fewer data annotations, less compute, and fewer training iterations all lower the cost.

- Better performance: Often, especially when data is scarce, transfer learning yields better performance than building a model from scratch.

- Scalability: Because the same backbone model can be fine-tuned for related tasks, one architecture can serve an assortment of products.

- Competitive edge: Companies able to repurpose and modify AI solutions quickly can be more agile than companies that have to start every project from a blank whiteboard.

- The concept of Transfer Learning in AI is inspired by human learning — we rarely start from scratch when learning new skills.

- Many state-of-the-art AI models like GPT and BERT rely heavily on Transfer Learning to adapt to multiple tasks efficiently.

- Transfer Learning can reduce the amount of data needed for training by up to 90% in some cases.

- In computer vision, a model trained to recognize everyday objects can be fine-tuned to detect medical abnormalities with minimal new data.

- Transfer Learning not only saves time but also reduces computational costs, making AI more accessible to smaller companies.

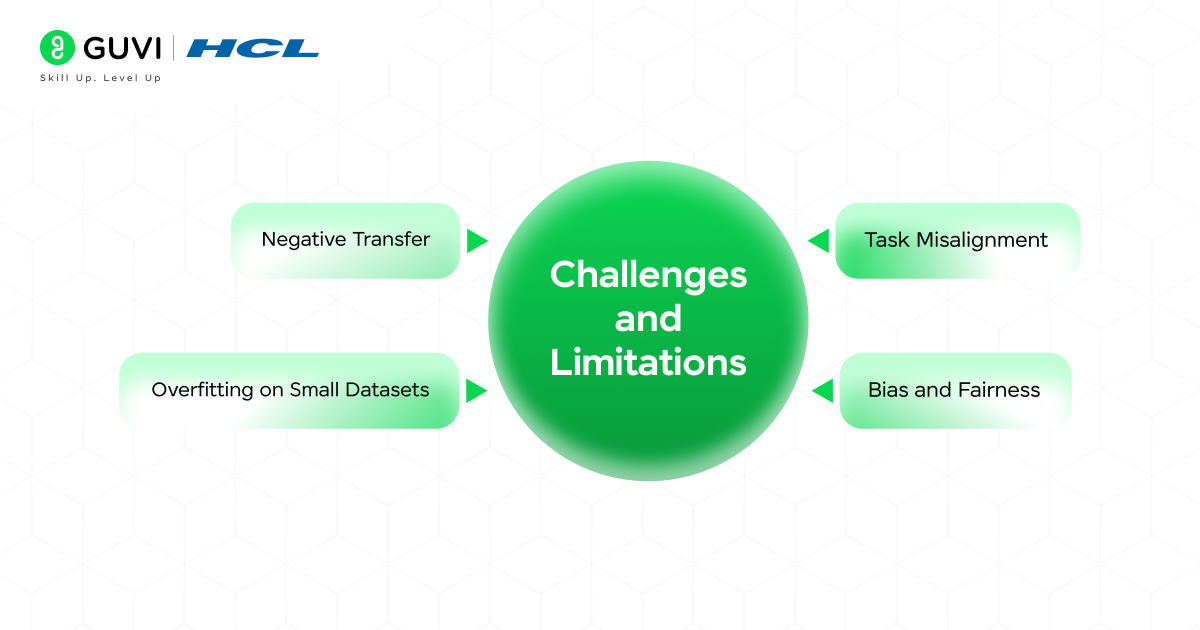

Challenges and Limitations

- Negative Transfer: This occurs when the knowledge from the source task is not just unhelpful but actually harmful to the target task. If the domains are too dissimilar (e.g., using a model trained on natural images for a text-based task), the transferred features might be misleading. Careful selection of the source model is crucial.

- Overfitting on Small Datasets: Even with a frozen base, if the new dataset is extremely small, the new head can still overfit to it. Techniques like data augmentation (artificially creating more training data by flipping, rotating, and scaling images) are essential.

- Task Misalignment: The architecture of the pre-trained model must be compatible with the new task. You cannot easily use an image classification model for a segmentation task without significant architectural changes.

- Bias and Fairness: A model inherits the biases present in its pre-training data. If a model is pre-trained on a dataset that underrepresents certain demographics, the fine-tuned model will also be biased. De-biasing is an active and critical area of research.
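The data augmentation mentioned under Overfitting on Small Datasets can be illustrated with a few label-preserving transforms. This is a minimal sketch that treats an image as a nested list of pixel values; the helper names are hypothetical, and a real pipeline would use an image library's transforms instead.

```python
def hflip(img):
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def vflip(img):
    """Mirror the image top to bottom."""
    return [row[:] for row in img[::-1]]


def rot90(img):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def augment(img):
    """Return the original image plus three transformed copies."""
    return [img, hflip(img), vflip(img), rot90(img)]


tiny_image = [[1, 2],
              [3, 4]]          # a 2x2 "image" of pixel intensities

for variant in augment(tiny_image):
    print(variant)
```

Each variant keeps the original label, so a dataset of N images yields several times as many training examples. Whether a given transform is safe depends on the task: flipping is harmless for many object photos but could change the meaning of, say, a scan where left and right matter.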

Wrapping it up:

Whether you are a startup creating a niche application, an enterprise scaling multiple AI solutions, or a developer trying out new tasks with limited data, don’t forget about transfer learning in AI. By standing on the shoulders of previously trained models, you take a smart, efficient, and practical route from past work to future work, and you change the way intelligent systems get built. When labeled data is scarce, computing is expensive, and iterations need to be fast, transfer learning leads to faster and smarter solutions.

FAQs

1. What is Transfer Learning in Artificial Intelligence?

Transfer Learning in AI is a technique in which a model that has been trained for one task can leverage what it has learned to perform better on another similar task.

2. Why is Transfer Learning significant to AI?

Transfer Learning reduces training time, data needs, and computing costs, and it often improves model performance and accuracy, especially when target data is limited.

3. What are the examples of Transfer Learning used in the real world?

Transfer Learning has been successfully utilized in image recognition, natural language processing, speech recognition, and healthcare analytics.

4. What’s the difference between Transfer Learning and traditional machine learning?

Traditional models learn from scratch for every new task, whereas Transfer Learning models take advantage of prior knowledge to speed up and improve the learning process.
