Data Cleaning in Data Science: A Comprehensive Guide

Last updated: Sep 21, 2024

In data science, the quality of your data can make or break your analysis. Raw data, fresh from its source, is often messy and riddled with inconsistencies, errors, and missing values. This is where data cleaning comes into play. Data cleaning, also known as data cleansing or scrubbing, is a critical first step in the data science process, ensuring that your dataset is accurate, consistent, and ready for analysis.

Without proper data cleaning, the insights drawn from your analysis may be flawed, leading to incorrect conclusions and potentially costly decisions. In this blog, we will explore the process of data cleaning, providing you with a clear understanding of its importance, common challenges, and effective techniques. Let’s begin!

Table of contents

- What is Data Cleaning?

- Step-by-Step Data Cleaning Process

- Step 1: Understand the Data

- Step 2: Make a Copy of the Raw Data

- Step 3: Perform Initial Data Exploration

- Step 4: Check Data Types and Structures

- Step 5: Handle Missing Data

- Step 6: Remove Duplicates

- Step 7: Handle Outliers

- Step 8: Standardize and Normalize

- Step 9: Correct Invalid Values

- Step 10: Handle Structural Errors

- Step 11: Validate and Cross-Check

- Step 12: Handle Special Cases

- Step 13: Document the Cleaning Process

- Step 14: Create Automated Cleaning Scripts

- Step 15: Perform a Final Review

- Data Cleaning in Data Science: Ensuring Quality for Meaningful Insights

- Common Data Issues

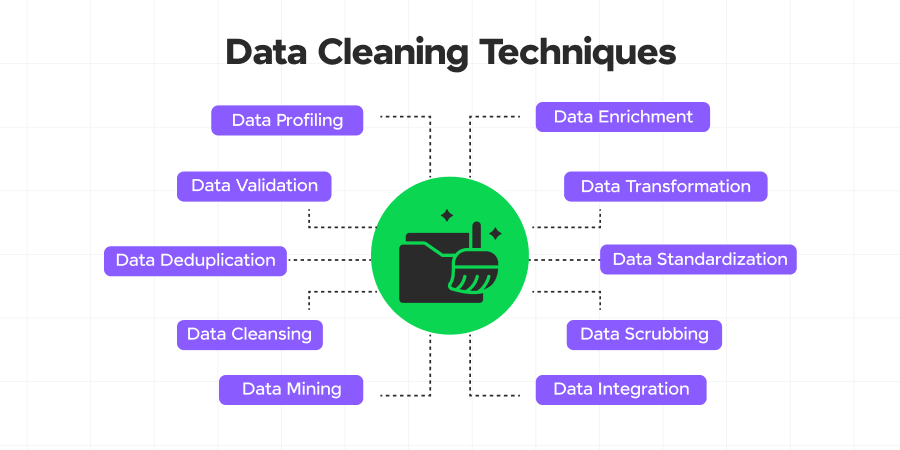

- Data Cleaning Techniques and Tools

- Popular Tools for Data Cleaning

- Best Practices in Data Cleaning

- Conclusion

- FAQs

- Why is data cleaning important in data science?

- What are the common techniques used in data cleaning?

- How do you handle missing data during the cleaning process?

What is Data Cleaning?

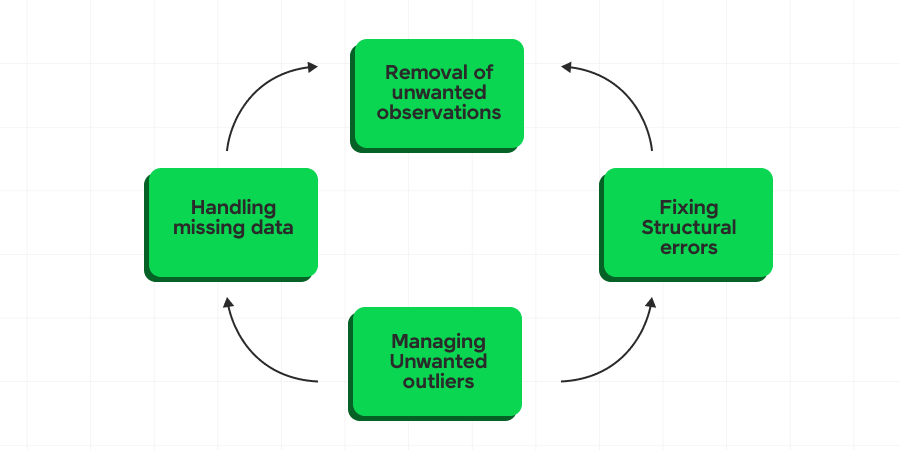

Data cleaning is the process of identifying and correcting or removing errors, inconsistencies, and inaccuracies in datasets. It involves:

- Removing duplicate or irrelevant observations

- Fixing structural errors

- Handling missing data

- Detecting and handling outliers

- Standardizing data formats

- Correcting typos or formatting issues

This process is important for ensuring data quality and reliability in analysis and decision-making.

In the next section, we’ll walk through a step-by-step process for data cleaning, providing a structured approach to tackling even the messiest datasets.

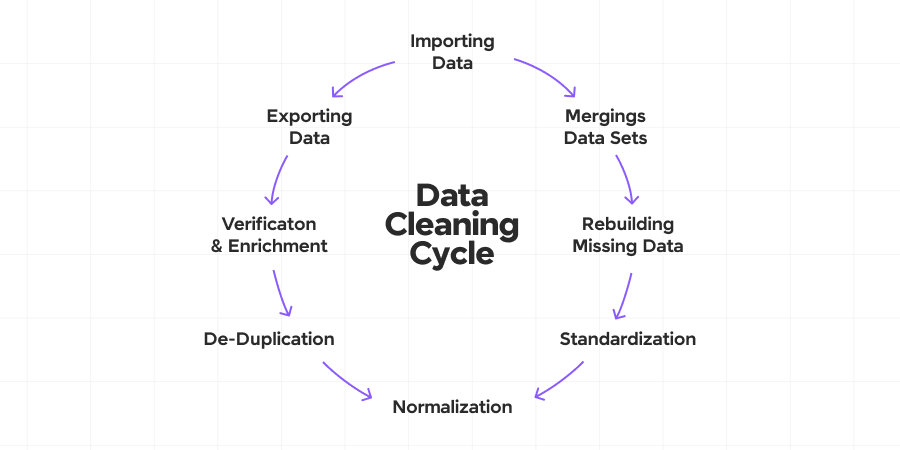

Step-by-Step Data Cleaning Process

While every dataset is unique and may require specific approaches, following a structured process can help ensure thorough and effective data cleaning. Here’s a step-by-step guide to data cleaning:

Step 1: Understand the Data

Before getting into cleaning, it’s important to understand your data:

- Review the data dictionary or metadata if available.

- Examine the structure of the dataset (rows, columns, data types).

- Understand the context and source of the data.

- Identify the key variables for your analysis.

Step 2: Make a Copy of the Raw Data

Always preserve the original dataset:

- Create a working copy of the raw data.

- This allows you to revert changes if needed and maintains data provenance.

Step 3: Perform Initial Data Exploration

Get a feel for the dataset:

- Use descriptive statistics (mean, median, standard deviation, etc.).

- Visualize distributions with histograms, box plots, or scatter plots.

- Check for obvious anomalies or patterns.
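As a rough illustration of this step, the sketch below computes basic descriptive statistics for one numeric column using only Python's standard library. The column is a plain list, None stands in for a missing value, and the ages are made-up sample data; in pandas, DataFrame.describe() produces a similar summary.

```python
import statistics


def describe(values):
    """Summarize a numeric column, skipping missing entries (None)."""
    present = [v for v in values if v is not None]
    return {
        "count": len(present),
        "missing": len(values) - len(present),
        "mean": statistics.mean(present),
        "median": statistics.median(present),
        "stdev": statistics.stdev(present),  # sample standard deviation
        "min": min(present),
        "max": max(present),
    }


# Hypothetical "age" column with one missing value
ages = [34, 29, None, 41, 38, 230, 27]
summary = describe(ages)
for name, value in summary.items():
    print(f"{name:>7}: {value}")
```

Here the mean (66.5) sits far above the median (36.0) because of the 230 entry, which is exactly the kind of anomaly worth investigating before going further.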

Step 4: Check Data Types and Structures

Ensure data is in the correct format:

- Verify that each column has the appropriate data type (numeric, categorical, datetime, etc.).

- Check if date formats are consistent.

- Identify any columns that may need to be split or combined.
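A common first check is whether text columns can actually be converted to the types you expect. The following is a minimal sketch, assuming each record is a dictionary of raw strings, prices may use commas as thousands separators, and dates should follow the YYYY-MM-DD format; the helper names and sample rows are hypothetical. Values that fail conversion are collected for review rather than silently dropped.

```python
from datetime import datetime


def to_float(raw):
    """Convert text such as '1,250.00' to a float; return None if it cannot be parsed."""
    try:
        return float(raw.replace(",", ""))  # assumes commas are thousands separators
    except (AttributeError, ValueError):
        return None


def to_date(raw, fmt="%Y-%m-%d"):
    """Parse a date string in the expected format; return None if it does not match."""
    try:
        return datetime.strptime(raw.strip(), fmt).date()
    except (AttributeError, ValueError):
        return None


rows = [
    {"price": "1,250.00", "signup": "2024-03-01"},
    {"price": "980", "signup": "03/15/2024"},  # date in a different format
    {"price": "n/a", "signup": "2024-02-30"},  # unparseable price, impossible date
]

problems = []
for i, row in enumerate(rows):
    price, signup = to_float(row["price"]), to_date(row["signup"])
    if price is None:
        problems.append((i, "price", row["price"]))
    if signup is None:
        problems.append((i, "signup", row["signup"]))
    row["price"], row["signup"] = price, signup

print(problems)
```

The second row shows why a strict format check matters: 03/15/2024 is a real date, but it is written in a different layout from the rest of the column.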

Step 5: Handle Missing Data

Address null or empty values:

- Quantify the amount of missing data for each variable.

- Determine if data is missing completely at random (MCAR), missing at random (MAR), or missing not at random (MNAR).

- Decide on an appropriate strategy (deletion, imputation, etc.) based on the nature of the missingness and its potential impact on your analysis.
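The sketch below shows two of these ideas in plain Python: measuring the share of missing values per column, then filling a numeric column with its median while recording which rows were imputed. The set of missing-value markers, the column names, and the sample rows are assumptions for illustration. Median imputation is only a reasonable default when missingness is close to random; under MNAR it can bias results.

```python
import statistics

# Hypothetical markers that mean "no value" in this dataset
MISSING = {None, "", "NA", "N/A", "null"}


def missing_share(rows):
    """Fraction of rows with a missing value, per column."""
    return {col: sum(row[col] in MISSING for row in rows) / len(rows) for col in rows[0]}


def impute_median(rows, col):
    """Fill missing values in a numeric column with its median and flag imputed rows."""
    observed = [row[col] for row in rows if row[col] not in MISSING]
    fill = statistics.median(observed)
    for row in rows:
        was_missing = row[col] in MISSING
        row[f"{col}_imputed"] = was_missing  # keep a record of which values were filled in
        if was_missing:
            row[col] = fill
    return fill


rows = [
    {"age": 34, "city": "Chennai"},
    {"age": None, "city": "Pune"},
    {"age": 41, "city": ""},
    {"age": "NA", "city": "Delhi"},
    {"age": 29, "city": "Delhi"},
]

print(missing_share(rows))         # {'age': 0.4, 'city': 0.2}
print(impute_median(rows, "age"))  # 34
```

Keeping the age_imputed flag lets later analysis check whether imputed rows behave differently from observed ones.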

Step 6: Remove Duplicates

Identify and handle duplicate entries:

- Determine what constitutes a duplicate in your dataset.

- Use appropriate methods to identify duplicates (exact or fuzzy matching).

- Decide whether to remove duplicates or flag them for further investigation.
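Deciding what counts as a duplicate is the key choice. One minimal sketch, assuming records are dictionaries and that email plus name (after trimming whitespace and lowercasing) identifies a customer, keeps the first occurrence of each key and sets later ones aside for review instead of deleting them outright. The column names and sample rows are hypothetical; in pandas, DataFrame.duplicated() and DataFrame.drop_duplicates(subset=...) cover the exact-match case.

```python
def split_duplicates(rows, key_columns):
    """Return (first occurrences, duplicates) based on a normalized composite key."""
    first_seen = {}
    unique, duplicates = [], []
    for row in rows:
        # Trim and lowercase so "ASHA@example.com " matches "asha@example.com"
        key = tuple(str(row[c]).strip().lower() for c in key_columns)
        if key in first_seen:
            duplicates.append((row, first_seen[key]))  # pair each duplicate with the row it repeats
        else:
            first_seen[key] = row
            unique.append(row)
    return unique, duplicates


customers = [
    {"email": "asha@example.com", "name": "Asha", "plan": "pro"},
    {"email": "ravi@example.com", "name": "Ravi", "plan": "free"},
    {"email": "ASHA@example.com ", "name": "Asha", "plan": "pro"},
]

unique, duplicates = split_duplicates(customers, ["email", "name"])
print(len(unique), len(duplicates))  # 2 1
```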

Step 7: Handle Outliers

Identify and address extreme values:

- Use statistical methods or visualization to detect outliers.

- Investigate the cause of outliers (data entry errors, genuine anomalies, etc.).

- Decide on an appropriate treatment (removal, capping, transformation, etc.) based on the nature of the outlier and its relevance to your analysis.
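A widely used statistical rule for detection is Tukey's fences: values more than 1.5 times the IQR below the first quartile or above the third quartile are flagged. The sketch below uses statistics.quantiles from the standard library (its default "exclusive" method, so quartiles may differ slightly from other tools). The response times are invented sample data, and the function only flags values so you can investigate them as described above.

```python
import statistics


def iqr_outliers(values, k=1.5):
    """Flag values outside Tukey's fences: [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # quartile cut points
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    flagged = [v for v in values if v < low or v > high]
    return (low, high), flagged


# Hypothetical response times in milliseconds
response_ms = [52, 48, 50, 51, 49, 47, 53, 50, 120]
fences, flagged = iqr_outliers(response_ms)
print(fences, flagged)  # (42.5, 58.5) [120]
```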

Step 8: Standardize and Normalize

Ensure consistency across the dataset:

- Standardize units of measurement.

- Normalize text data (e.g., consistent capitalization, handling of special characters).

- Encode categorical variables consistently.
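The sketch below illustrates two of these ideas with the standard library: converting weights recorded in mixed units to kilograms through an explicit conversion table, and normalizing free-text values. The table, unit spellings, and helper names are assumptions for the example (the pound factor is the exact international definition, 0.45359237 kg). Unknown units raise an error instead of being guessed.

```python
import unicodedata

# Hypothetical conversion table to a single target unit (kilograms)
TO_KG = {"kg": 1.0, "g": 0.001, "lb": 0.45359237}


def to_kg(value, unit):
    """Convert a weight to kilograms; raise ValueError for units not in the table."""
    factor = TO_KG.get(unit.strip().lower())
    if factor is None:
        raise ValueError(f"unknown unit: {unit!r}")
    return value * factor


def normalize_text(text):
    """Fold compatibility characters, collapse whitespace, and apply title case."""
    text = unicodedata.normalize("NFKC", text)  # e.g. full-width letters become ASCII
    return " ".join(text.split()).title()


print(to_kg(500, " G "))                 # 0.5
print(normalize_text("  new   DELHI "))  # New Delhi
```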

Step 9: Correct Invalid Values

Address values that don’t make logical sense:

- Check for values outside of possible ranges (e.g., negative ages, future dates).

- Correct obvious data entry errors.

- Flag or remove values that violate business rules or logical constraints.
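Range and business-rule checks are easy to express as small functions that return a list of problems, so records can be flagged rather than silently changed. The following sketch assumes records are dictionaries with age, signup_date, price, and discount fields; the 0 to 120 age range and the discount rule are hypothetical examples of constraints you would confirm with domain experts.

```python
from datetime import date


def rule_violations(record, today):
    """Return human-readable rule violations for one record (the rules are illustrative)."""
    problems = []
    age = record.get("age")
    if age is not None and not 0 <= age <= 120:
        problems.append(f"age out of range: {age}")
    signup = record.get("signup_date")
    if signup is not None and signup > today:
        problems.append(f"signup date in the future: {signup}")
    # Example business rule: a discount can never exceed the price
    if record.get("discount", 0) > record.get("price", 0):
        problems.append("discount exceeds price")
    return problems


records = [
    {"age": 34, "signup_date": date(2023, 5, 2), "price": 100, "discount": 10},
    {"age": -3, "signup_date": date(2024, 1, 5), "price": 100, "discount": 10},
    {"age": 30, "signup_date": date(2030, 1, 1), "price": 50, "discount": 60},
]

today = date(2024, 9, 21)  # fixed "today" so the example is reproducible
for i, rec in enumerate(records):
    print(i, rule_violations(rec, today))
```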

Step 10: Handle Structural Errors

Address issues with the dataset’s structure:

- Reshape data if necessary (e.g., from wide to long format or vice versa).

- Split or combine columns as needed.

- Ensure consistent naming conventions for variables.
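Both renaming and reshaping can be sketched in a few lines of standard-library Python. The snake_case convention, the helper names, and the sales figures below are assumptions for illustration; in pandas, DataFrame.melt (with id_vars, var_name, and value_name) performs the same wide-to-long reshape.

```python
import re


def snake_case(name):
    """Convert a column name such as 'Customer ID' or 'orderDate' to snake_case."""
    name = re.sub(r"[^0-9a-zA-Z]+", "_", name.strip())   # spaces/punctuation -> underscore
    name = re.sub(r"(?<=[a-z0-9])(?=[A-Z])", "_", name)  # split camelCase boundaries
    return name.strip("_").lower()


def wide_to_long(rows, id_col, var_name="variable", value_name="value"):
    """Reshape one row per entity into one row per (entity, measurement) pair."""
    long_rows = []
    for row in rows:
        for col, val in row.items():
            if col != id_col:
                long_rows.append({id_col: row[id_col], var_name: col, value_name: val})
    return long_rows


wide = [
    {"Store": "A", "2023": 120, "2024": 135},
    {"Store": "B", "2023": 98, "2024": 101},
]
# Rename columns consistently first, then reshape
wide = [{snake_case(k): v for k, v in row.items()} for row in wide]
long_rows = wide_to_long(wide, "store", var_name="year", value_name="sales")
print(long_rows[:2])
```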

Step 11: Validate and Cross-Check

Ensure the cleaning process hasn’t introduced new errors:

- Compare the cleaned data against the original dataset (for example, row counts, value ranges, and category levels).

- Check if summary statistics make sense after cleaning.

- Verify that relationships between variables are preserved or explainable.
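A few automated comparisons between the raw and cleaned versions of a column catch many accidental changes. This is a minimal sketch: the 5% row-loss threshold and the choice of the median as the statistic to compare are assumptions rather than fixed rules, and the data is invented.

```python
import statistics


def cross_check(raw, clean, max_row_loss=0.05):
    """Compare a cleaned numeric column with its raw version using a few sanity checks."""
    raw_observed = [v for v in raw if v is not None]
    clean_observed = [v for v in clean if v is not None]
    report = {
        "rows_before": len(raw),
        "rows_after": len(clean),
        "row_loss": 1 - len(clean) / len(raw),
        "median_before": statistics.median(raw_observed),
        "median_after": statistics.median(clean_observed),
        "missing_after": len(clean) - len(clean_observed),
    }
    # Hypothetical acceptance rule: little row loss and no remaining gaps
    report["ok"] = report["row_loss"] <= max_row_loss and report["missing_after"] == 0
    return report


raw = [52, 48, None, 51, 49, 47, 53, 50, 120]
imputed = [52, 48, 50.5, 51, 49, 47, 53, 50, 120]  # missing value filled with the median
dropped = [52, 48, 51, 49, 47, 53, 50]             # missing value and outlier removed

print(cross_check(raw, imputed)["ok"])  # True
print(cross_check(raw, dropped)["ok"])  # False: more than 5% of rows lost
```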

Step 12: Handle Special Cases

Address any domain-specific issues:

- Apply any specific business rules or constraints.

- Handle industry-specific data quirks (e.g., financial data rounding, scientific notation).

Step 13: Document the Cleaning Process

Maintain a clear record of all cleaning steps:

- Document each transformation applied to the data.

- Note any assumptions made during the cleaning process.

- Record the rationale behind significant cleaning decisions.

Step 14: Create Automated Cleaning Scripts

If possible, automate the cleaning process:

- Write scripts or use workflow tools to automate repetitive cleaning tasks.

- This ensures reproducibility and makes it easier to apply the same cleaning process to future datasets.
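One lightweight way to make cleaning repeatable is to write each step as a function that takes and returns a list of records, then run the steps in a fixed order while logging their effect. The step functions and sample data below are hypothetical; the log messages also double as a simple record of the process for Step 13.

```python
def strip_whitespace(rows):
    """Trim leading/trailing spaces from every string value."""
    return [{k: v.strip() if isinstance(v, str) else v for k, v in row.items()} for row in rows]


def drop_exact_duplicates(rows):
    """Keep the first copy of each identical record (values must be hashable)."""
    seen, kept = set(), []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            kept.append(row)
    return kept


def drop_missing_names(rows):
    """Remove records whose name is empty after trimming."""
    return [row for row in rows if row.get("name")]


PIPELINE = [strip_whitespace, drop_exact_duplicates, drop_missing_names]


def run_pipeline(rows, steps=PIPELINE, log=print):
    """Apply each step in order and log how many rows it kept."""
    for step in steps:
        before = len(rows)
        rows = step(rows)
        log(f"{step.__name__}: {before} -> {len(rows)} rows")
    return rows


raw = [
    {"name": " Asha ", "city": "Pune"},
    {"name": "Asha", "city": "Pune"},
    {"name": "  ", "city": "Delhi"},
]
cleaned = run_pipeline(raw)
print(cleaned)  # [{'name': 'Asha', 'city': 'Pune'}]
```

Because each step returns new records, the raw list is left untouched, which fits the advice in Step 2 about preserving the original data.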

Step 15: Perform a Final Review

Take a step back and review the entire cleaned dataset:

- Check if the cleaned data meets the requirements for your intended analysis.

- Verify that all identified issues have been addressed.

- Ensure the cleaning process hasn’t introduced bias or significantly altered the fundamental characteristics of the dataset.

By following these steps, you can approach data cleaning in a systematic and thorough manner. However, it’s important to remember that data cleaning is often an iterative process. As you proceed with your analysis, you may uncover new issues that require you to revisit and refine your cleaning process.

Moreover, the specific order and emphasis of these steps may vary depending on the nature of your dataset and the requirements of your analysis. The key is to be thorough, document your process, and always keep in mind the potential impact of your cleaning decisions on the final analysis.

Data Cleaning in Data Science: Ensuring Quality for Meaningful Insights

Let’s take a closer look at data cleaning: the common issues it addresses, the techniques and tools used to fix them, and the best practices that keep the process reliable.

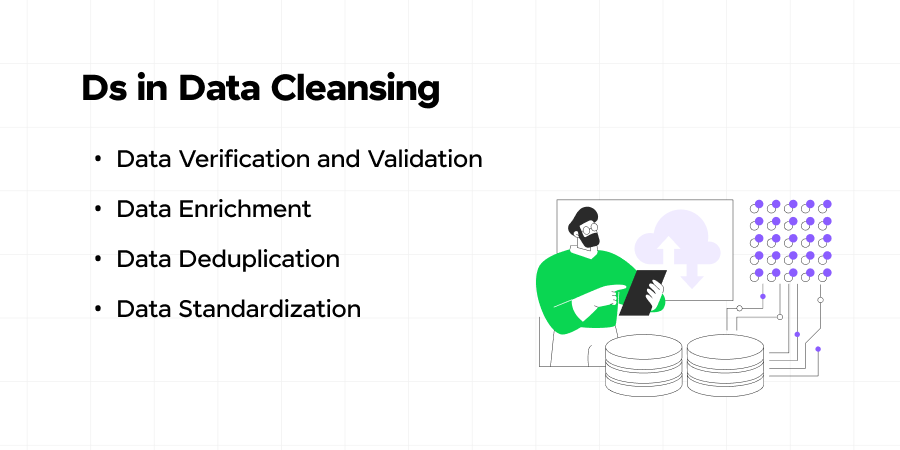

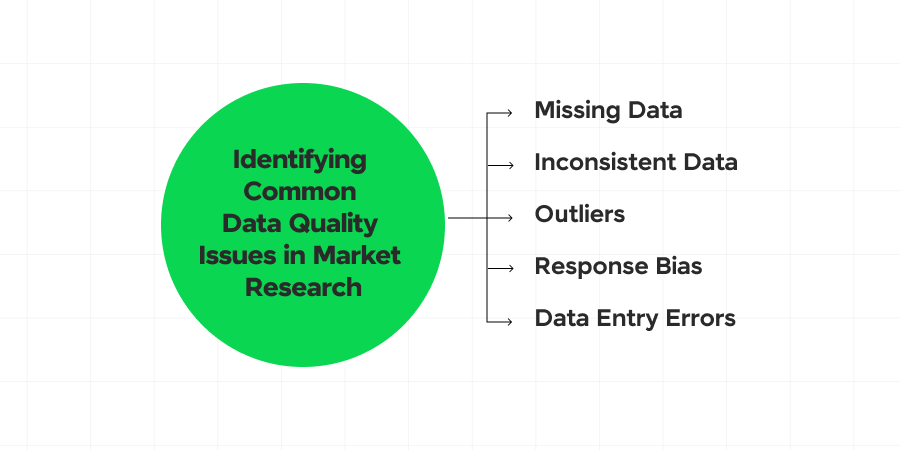

Common Data Issues

Before we explore the solutions, it’s important to understand the problems. Here are some of the most common data issues that necessitate cleaning:

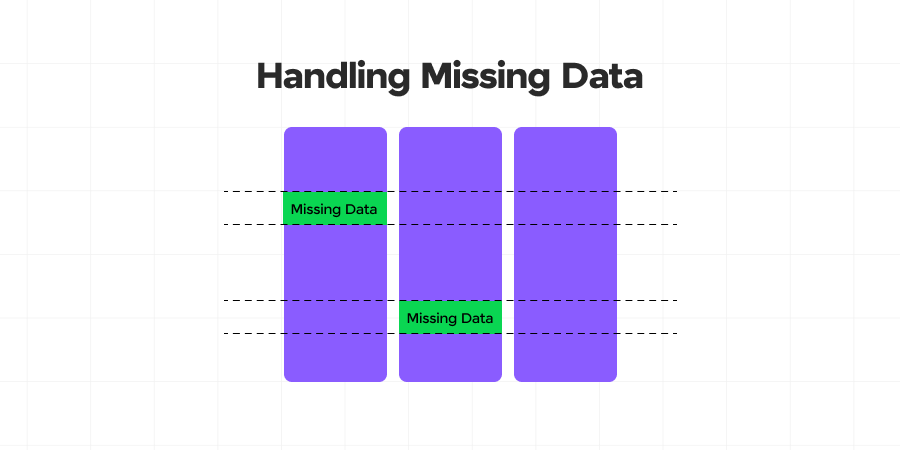

a) Missing Data: One of the most prevalent issues in datasets is missing values. This can occur due to data entry errors, system malfunctions, or simply because the information wasn’t available at the time of collection. Missing data can significantly skew analyses and lead to incorrect conclusions if not handled properly.

b) Duplicate Data: Duplicate records can inflate dataset size and lead to biased analysis results. They often occur due to manual data entry errors, system glitches, or when merging data from multiple sources.

c) Inconsistent Formatting: Inconsistencies in data format can make analysis challenging. For example, dates might be recorded in different formats (MM/DD/YYYY vs. DD/MM/YYYY), or names might be entered with varying capitalization or order (John Doe vs. Doe, John).

d) Typos and Spelling Errors: Human error in data entry can lead to typos and misspellings. While seemingly minor, these errors can cause significant problems, especially when dealing with categorical data or when using the data for text analysis.

e) Outliers: Outliers are data points that significantly differ from other observations. While sometimes outliers represent genuine anomalies that require investigation, they can also be the result of measurement or data entry errors.

f) Inconsistent Units: When working with numerical data, inconsistent units can lead to severe misinterpretations. For instance, a dataset might mix metric and imperial measurements, or record amounts in different currencies without conversion.

g) Structural Errors: These occur when there are issues with data labeling, categorization, or the overall structure of the dataset, such as inconsistent category names or mislabeled variables.

h) Encoded or Garbled Data: Sometimes data can become corrupted during transfer or storage, resulting in unreadable or nonsensical values. This is particularly common with special characters or when dealing with different character encodings.

i) Inconsistent Naming Conventions: Variables or categories might be named inconsistently across different parts of a dataset or across multiple datasets that need to be merged.

j) Data Type Mismatches: This occurs when data is stored in an inappropriate format, such as numbers stored as text, which prevents mathematical operations on them.

k) Truncated Data: Sometimes data can be cut off or truncated due to system limitations or data transfer issues. This is particularly problematic with long text fields or large numerical values.

l) Unnecessary Metadata: Datasets might include extraneous information that isn’t relevant to the analysis at hand, such as automatically generated timestamps or system-specific identifiers.

m) Inconsistent Aggregation: When working with data that has been aggregated or summarized, there might be inconsistencies in how the aggregation was performed across different subsets of the data.

Understanding these common data issues is the first step in effective data cleaning. By being aware of what to look for, data scientists can develop targeted strategies to address these problems and ensure the integrity of their datasets.

However, identifying these issues is just the beginning. The real challenge lies in effectively addressing them without introducing new errors or biases into the data. This requires a combination of technical skills, domain knowledge, and careful consideration of the potential impact of each cleaning action.

In the next section, we’ll explore various techniques and tools that data scientists use to tackle these common data issues, transforming messy, raw data into clean, analysis-ready datasets.

Data Cleaning Techniques and Tools

Once you’ve identified the issues in your dataset, the next step is to apply appropriate cleaning techniques. Here’s an overview of common data cleaning techniques and some popular tools used in the process:

a) Handling Missing Data

- Deletion: Remove rows or columns with missing data. This is simple but can lead to significant data loss.

- Imputation: Fill in missing values using statistical methods. Common approaches include:

  - Mean/median/mode imputation

  - Regression imputation

  - Multiple imputation

- Using a dedicated category: For categorical data, treat “missing” as its own category.

- Advanced techniques: Use machine learning algorithms such as k-nearest neighbors or random forests for prediction-based imputation.

b) Dealing with Duplicates

- Exact matching: Identify and remove identical rows.

- Fuzzy matching: Use algorithms to identify near-duplicate entries, accounting for minor differences in spelling or formatting.

- Composite key matching: Create a unique identifier using multiple columns to identify duplicates.
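For fuzzy matching, Python's standard library includes difflib.SequenceMatcher, whose ratio() method returns a similarity score between 0 and 1. It is a different measure from the Levenshtein distance used by some fuzzy-matching libraries, so treat this as a stand-in sketch; the 0.9 threshold and the company names are assumptions. Comparing every pair is O(n²), which is fine for small lists but slow for large ones.

```python
from difflib import SequenceMatcher
from itertools import combinations


def normalize(text):
    """Lowercase and collapse whitespace before comparing."""
    return " ".join(text.lower().split())


def near_duplicates(values, threshold=0.9):
    """Return (a, b, score) for every pair whose similarity is at least the threshold."""
    pairs = []
    for a, b in combinations(values, 2):  # O(n^2) pairwise comparisons
        score = SequenceMatcher(None, normalize(a), normalize(b)).ratio()
        if score >= threshold:
            pairs.append((a, b, round(score, 2)))
    return pairs


companies = ["Acme Corporation", "ACME  Corporation ", "Acme Corp.", "Globex Inc"]
print(near_duplicates(companies))
# "Acme Corp." scores well below 0.9 here, so abbreviations need a lower
# threshold or a lookup table rather than a single cut-off.
```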

c) Standardizing Formats

- Regular expressions: Use regex patterns to identify and correct inconsistent formatting.

- Parsing libraries: Utilize specialized libraries for parsing dates, addresses, or other structured data.

- Lookup tables: Create standardized mappings for common variations of the same data.
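Regular expressions and lookup tables combine naturally. The sketch below assumes phone numbers should be stored as 10-digit national numbers in a hypothetical XXX-XXX-XXXX format, and that a small hand-built table maps known country spellings to one canonical name. Anything that does not fit is left for review rather than guessed.

```python
import re

# Hypothetical lookup table: observed variants -> canonical label
COUNTRY_LOOKUP = {
    "us": "United States",
    "usa": "United States",
    "u.s.": "United States",
    "united states": "United States",
    "uk": "United Kingdom",
    "u.k.": "United Kingdom",
    "united kingdom": "United Kingdom",
}


def standardize_country(raw):
    """Map a known variant to its canonical name; return unknown values unchanged."""
    return COUNTRY_LOOKUP.get(raw.strip().lower(), raw)


def standardize_phone(raw):
    """Strip everything except digits; format 10-digit numbers, otherwise return None."""
    digits = re.sub(r"\D", "", raw)  # \D matches any non-digit character
    if len(digits) != 10:
        return None
    return f"{digits[:3]}-{digits[3:6]}-{digits[6:]}"


print(standardize_country(" USA "))         # United States
print(standardize_phone("(555) 123-4567"))  # 555-123-4567
print(standardize_phone("555.123.45"))      # None (too few digits)
```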

d) Correcting Typos and Misspellings

- Spell-checking algorithms: Use built-in or custom dictionaries to identify and correct misspellings.

- Fuzzy string matching: Employ algorithms like Levenshtein distance or Soundex to match similar strings.

- Manual correction: For critical or high-impact data, manual review and correction may be necessary.
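Levenshtein distance counts the minimum number of single-character insertions, deletions, and substitutions needed to turn one string into another. The sketch below computes it with the standard dynamic-programming approach and uses it to snap a misspelled value to the closest entry in a controlled vocabulary. The vocabulary (stored in lowercase) and the two-edit limit are assumptions; short words need a tighter limit to avoid false corrections.

```python
def levenshtein(a, b):
    """Minimum number of single-character edits needed to turn a into b."""
    if len(a) < len(b):
        a, b = b, a  # distance is symmetric; keep the shorter string in the inner loop
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,               # deletion
                current[j - 1] + 1,            # insertion
                previous[j - 1] + (ca != cb),  # substitution (free if characters match)
            ))
        previous = current
    return previous[-1]


def correct(word, vocabulary, max_distance=2):
    """Return the closest vocabulary entry if it is within max_distance edits, else the word."""
    best = min(vocabulary, key=lambda v: levenshtein(word.lower(), v))
    return best if levenshtein(word.lower(), best) <= max_distance else word


CITIES = ["mumbai", "delhi", "chennai", "bengaluru"]  # hypothetical controlled vocabulary
print(correct("Mumbaii", CITIES))  # mumbai
print(correct("Kolkata", CITIES))  # Kolkata (no close match, left unchanged)
```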

e) Handling Outliers

- Statistical methods: Use techniques like z-score or Interquartile Range (IQR) to identify outliers.

- Visualization: Employ scatter plots, box plots, or histograms to visually identify outliers.

- Domain expertise: Consult subject matter experts to determine if outliers are genuine anomalies or errors.

- Winsorization: Cap extreme values at a specified percentile of the data.
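Winsorization can be sketched with statistics.quantiles from the standard library. This version assumes integer percentile bounds between 1 and 99 and uses the "inclusive" method so the cut points stay within the observed range; the 5th/95th bounds and the data are illustrative. SciPy users may prefer scipy.stats.mstats.winsorize, and pandas offers Series.clip for capping at chosen values.

```python
import statistics


def winsorize(values, lower_pct=5, upper_pct=95):
    """Cap values below the lower percentile and above the upper percentile."""
    # 99 cut points: cuts[0] is the 1st percentile, cuts[98] the 99th
    cuts = statistics.quantiles(values, n=100, method="inclusive")
    low, high = cuts[lower_pct - 1], cuts[upper_pct - 1]
    return [min(max(v, low), high) for v in values]


response_ms = [52, 48, 50, 51, 49, 47, 53, 50, 120]
print(winsorize(response_ms))
```

With only nine observations, the 95th percentile is still pulled up by the extreme value (120 is capped at about 93.2), a reminder that percentile-based capping works best on larger samples.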

Popular Tools for Data Cleaning

- Python libraries

  - Pandas: Offers a wide range of data manipulation and cleaning functions.

  - NumPy: Useful for numerical operations and handling missing data.

  - Scikit-learn: Provides imputation methods and other preprocessing techniques.

  - FuzzyWuzzy: Specializes in fuzzy string matching.

- R packages

  - tidyr: Part of the tidyverse, focused on tidying data.

  - dplyr: Offers a grammar for data manipulation.

  - stringr: Provides tools for string manipulation.

  - mice: Specializes in multiple imputation for missing data.

- SQL

  - While primarily a query language, SQL can handle many data cleaning tasks, especially when large datasets already live in a database.

- OpenRefine

  - A powerful tool for working with messy data, offering features like clustering for cleaning text data.

- Trifacta Wrangler

  - Provides a user-friendly interface for data cleaning and transformation tasks.

- Talend Data Preparation

  - Offers both open-source and enterprise versions for data cleaning and preparation.

- Excel

  - While not suitable for large datasets, Excel’s built-in functions and Power Query can be useful for smaller-scale data cleaning tasks.

- Databricks

  - A unified analytics platform that integrates with various big data tools, useful for cleaning large-scale datasets.

- Google Cloud Dataprep

  - A cloud-based service for visually exploring, cleaning, and preparing data for analysis.

- Alteryx

  - Provides a workflow-based approach to data cleaning and preparation, including tools for spatial data.

The choice of technique and tool often depends on the specific nature of the data, the scale of the cleaning task, and the analyst’s familiarity with different platforms. In many cases, a combination of tools and techniques is necessary to effectively clean a dataset.

It’s important to note that while these tools can greatly facilitate the data cleaning process, they are not a substitute for critical thinking and domain knowledge. The decisions made during data cleaning can significantly impact subsequent analyses, so it’s important to approach each step thoughtfully and document the rationale behind each cleaning action.

In the next section, we’ll discuss best practices in data cleaning that can help you navigate this complex process more effectively.

Best Practices in Data Cleaning

While the specific techniques used in data cleaning may vary depending on the dataset and project requirements, there are several best practices that can help ensure a more effective and reliable cleaning process:

- Understand the Data Context

  - Familiarize yourself with the data’s origin, collection methods, and intended use.

  - Consult domain experts to understand what constitutes “normal” or “abnormal” in the data.

  - Be aware of any regulatory or compliance requirements that may affect data handling.

- Preserve Raw Data

  - Always keep an untouched copy of the original dataset.

  - Implement version control for your datasets and cleaning scripts.

  - This allows you to backtrack if needed and maintains data provenance.

- Document Everything

  - Keep a detailed log of all cleaning steps, including the rationale for decisions made.

  - Document any assumptions or limitations in your cleaning process.

  - This aids in reproducibility and helps others understand your process.

- Start with a Sample

  - If working with large datasets, start by cleaning a representative sample.

  - This allows you to develop and refine your cleaning strategy more efficiently.

  - Once the strategy works well on the sample, apply it to the full dataset.

- Automate Where Possible

  - Develop scripts or use tools to automate repetitive cleaning tasks.

  - This reduces human error and makes the process more efficient and reproducible.

  - However, be cautious of over-automation; some cleaning tasks require human judgment.

- Validate Continuously

  - Regularly check the impact of your cleaning steps on the data.

  - Use statistical tests and visualizations to ensure cleaning hasn’t introduced new biases.

  - Compare the cleaned data against the original dataset.

By adhering to these best practices, you can develop a more robust and reliable data cleaning process. Remember, the goal of data cleaning is not just to fix errors, but to enhance the overall quality and usability of your data for meaningful analysis.

Conclusion

While data cleaning presents numerous challenges, it remains a critical step in the data science workflow. By understanding where messy data comes from and implementing robust strategies to address it, data scientists can significantly enhance the quality of their analyses and the reliability of their insights, ultimately driving more informed decision-making and value creation for their organizations.

By understanding common data issues, mastering various cleaning techniques and tools, following a structured cleaning process, adhering to best practices, and being prepared to tackle common challenges, you’ll be well-equipped to handle even the messiest datasets and extract meaningful insights from your data.

FAQs

Why is data cleaning important in data science?

Data cleaning is essential in data science to ensure accuracy and reliability. It eliminates errors, inconsistencies, and missing values from raw data, improving the quality of analysis and decision-making. Clean data forms a solid foundation for reliable insights and predictions.

What are the common techniques used in data cleaning?

Common techniques include handling missing values through imputation or removal, removing duplicates, standardizing data formats, managing outliers, and correcting data entry errors. Tools like Excel, Python (with libraries like pandas and NumPy), and specialized software facilitate these processes.

How do you handle missing data during the cleaning process?

Handling missing data involves strategies such as imputing missing values with the mean, the median, or more advanced algorithms, removing rows with missing values when appropriate, or using algorithms that can handle missing values natively. The approach depends on data characteristics and analysis requirements.
