Agents in Artificial Intelligence: Working, Architecture & Classification

Last updated: Sep 09, 2025 | 9 Min Read

Analysts expect the AI agents market to rise from $7.84 billion in 2025 to $52.62 billion in 2030, a compound annual growth rate of about 46 percent. Adoption is rising because teams struggle with repetitive handoffs and slow response loops. Agents in artificial intelligence help by stitching tools into repeatable workflows and handling decisions that follow clear rules. They watch signals and keep a short memory of recent events. They act through approved APIs, so responses arrive faster and with fewer slips.

Read the full guide to see how AI agents are structured and how each module fits together.

AI agents can now handle 95% of customer service inquiries—without a single human in the loop.

Companies using AI agents report 55% higher efficiency and cut operational costs by 35%.

By 2027, AI agents are projected to automate 15–50% of all business tasks—radically transforming how work gets done.

Table of contents

- What are AI Agents?

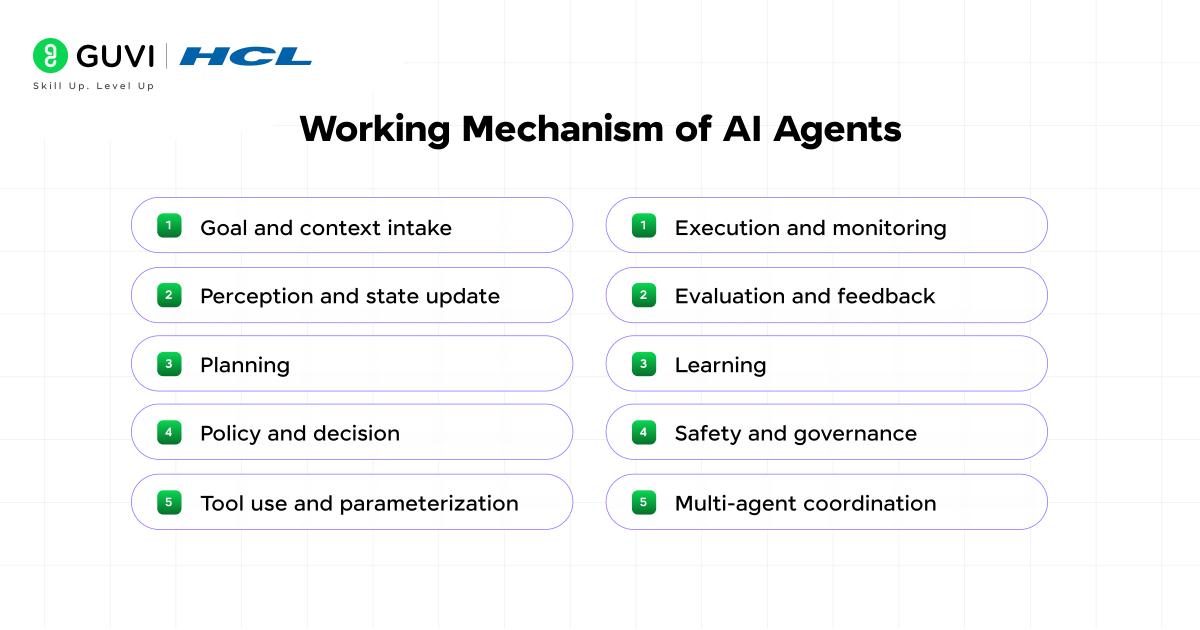

- How do Agents in Artificial Intelligence Work?

- Step 1: Goal and context intake

- Step 2: Perception and state update

- Step 3: Planning

- Step 4: Policy and decision

- Step 5: Tool use and parameterization

- Step 6: Execution and monitoring

- Step 7: Evaluation and feedback

- Step 8: Learning

- Step 9: Safety and governance

- Step 10: Multi-agent coordination

- Architecture of AI Agents

- BDI Architecture

- Core Elements

- Control Loop

- Top Features

- Best Use Cases

- Classification of AI Agents

- Based on Decision-Making Approach

- Based on Perception & Autonomy

- Based on Cognitive Capabilities

- Based on Agency Scope

- Top Applications of AI Agents

- Limitations of AI Agents

- The Bottom Line: Future of AI Agents

- FAQs

What are AI Agents?

AI agents are software systems designed to pursue goals with a degree of autonomy. They sense their environment through data inputs, then use rules or learned models to decide what action to take. These actions can be carried out through APIs or physical actuators, and the results feed back into the system’s internal state to guide later decisions.

Some AI and generative AI agents follow fixed policies, whereas others adapt by learning from experience, which allows them to refine their performance over time. Such systems are applied in areas like customer support bots and scheduling assistants, where consistent decision-making is essential.

How do Agents in Artificial Intelligence Work?

The following steps outline how a typical AI agent works:

Step 1: Goal and context intake

The system receives a goal with supporting context and normalizes natural language into structured intents and entities. That representation links user aims to data sources and prepares signals for later stages.

Step 2: Perception and state update

New observations merge with working memory and a belief state so uncertainty is tracked. Vector retrieval brings related facts and past traces, and that evidence constrains planning to reduce drift.

Step 3: Planning

A planner expands the goal into steps using search or learned heuristics, then scores partial paths. Many systems cast control as an MDP or a POMDP so the agent reasons about hidden variables and observation noise.
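For readers who want the MDP framing in symbols, the standard Bellman optimality equation is shown here as a reference point. The notation is the usual textbook one and is an assumption, not something the article defines: $V^*(s)$ is the best achievable value of state $s$, $P(s' \mid s, a)$ is the transition probability, $R(s, a, s')$ is the reward, and $\gamma \in [0, 1)$ is the discount factor.

$$
V^*(s) = \max_{a} \sum_{s'} P(s' \mid s, a)\,\bigl[ R(s, a, s') + \gamma\, V^*(s') \bigr]
$$

In a POMDP the agent cannot observe $s$ directly, so the same recursion is applied to a belief (a probability distribution over states) instead of a single state.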

Step 4: Policy and decision

The controller selects the next action with a policy that may be rule based or reinforcement learned. Selection balances utility and cost so time and budget stay under control.
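A minimal sketch of a rule-based selection step that balances utility against cost is shown below. The `Candidate` fields, the `cost_weight` knob, and the action names are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    utility: float  # estimated benefit of taking the action
    cost: float     # estimated spend (time, money, or tokens)


def select_action(candidates, budget_left, cost_weight=1.0):
    """Return the affordable candidate with the best utility-minus-cost score."""
    affordable = [c for c in candidates if c.cost <= budget_left]
    if not affordable:
        return None  # nothing fits the budget; the caller should stop or escalate
    return max(affordable, key=lambda c: c.utility - cost_weight * c.cost)


# Hypothetical candidates for one decision step.
CANDIDATES = [
    Candidate("deep_search", utility=9.0, cost=6.0),
    Candidate("cached_lookup", utility=5.0, cost=1.0),
    Candidate("ask_user", utility=4.0, cost=0.5),
]
```

With a budget of 10, `select_action(CANDIDATES, 10.0)` picks `cached_lookup` because its net score (4.0) beats the others. A learned policy would replace the hand-written score with a value estimate.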

Step 5: Tool use and parameterization

Action candidates map to tools through function schemas or adapters, and parameters are validated against contracts. Rate limits and quotas are checked so calls succeed under load.
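The contract check described above can be sketched in a few lines. This is an illustrative hand-rolled validator, not the format of any particular function-calling API; the `REFUND_SCHEMA` layout and its field names are assumptions.

```python
def validate_args(schema, args):
    """Return a list of contract violations; an empty list means the call is valid."""
    errors = []
    for name, spec in schema.items():
        if name not in args:
            if spec.get("required", False):
                errors.append(f"missing required parameter: {name}")
            continue
        value = args[name]
        if not isinstance(value, spec["type"]):
            errors.append(f"{name}: expected {spec['type'].__name__}")
            continue
        if "max" in spec and value > spec["max"]:
            errors.append(f"{name}: exceeds max {spec['max']}")
    for name in args:
        if name not in schema:
            errors.append(f"unexpected parameter: {name}")
    return errors


# Hypothetical schema for a refund tool.
REFUND_SCHEMA = {
    "order_id": {"type": str, "required": True},
    "amount": {"type": float, "required": True, "max": 500.0},
    "reason": {"type": str},
}
```

Rejecting a call before it reaches the tool keeps malformed arguments from consuming quota. Production systems often use a schema standard such as JSON Schema instead of a custom dictionary.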

Step 6: Execution and monitoring

Calls run through a sandbox or a gateway that records traces. A monitor tracks latency and error codes, and retries with backoff handle congestion and partial faults.
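Retries with backoff can be sketched as follows. The `TransientError` class and the default delays are assumptions for illustration; the jitter strategy (a random wait up to an exponentially growing cap) is one common choice among several.

```python
import random
import time


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying, such as timeouts."""


def call_with_backoff(fn, max_attempts=4, base_delay=0.5, max_delay=8.0, sleep=time.sleep):
    """Call fn, retrying on TransientError with exponential backoff and jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts; let the caller replan or stop
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, cap))  # random wait spreads out retry bursts
```

The `sleep` parameter is injectable so tests can record delays instead of waiting. Errors that are not transient propagate immediately, which keeps permanent failures from being retried.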

Step 7: Evaluation and feedback

Outputs pass through tests and assertions aligned to the goal, and a scorer estimates progress against targets. Failing checks trigger replanning or a safe stop so quality remains consistent.

Step 8: Learning

Traces feed a replay buffer or a log so policies can improve between runs. Updates use supervised signals or rewards, and memory indices adjust so retrieval becomes sharper over time.

Step 9: Safety and governance

Filters inspect prompts and tool results for policy risk, and high risk paths route to a human review. Audit trails cover inputs and actions so later analysis has clear evidence.

Step 10: Multi-agent coordination

Complex work splits across specialists that share messages and artifacts under a coordinator. Shared context keeps roles clear and avoids loops, and merged results roll up to the original goal.

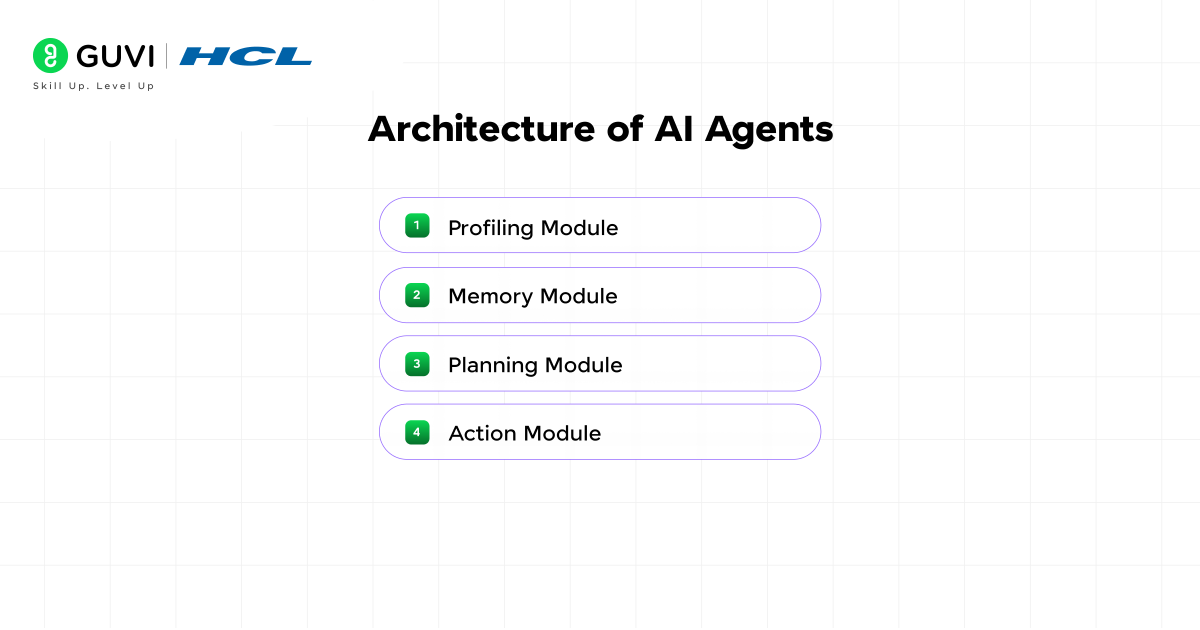

Architecture of AI Agents

Here are four main components in an AI agent’s architecture:

• Profiling Module: The profiling module builds a structured view of the user and the task. It captures objectives and constraints. Risk limits are recorded and tied to permissions. Signals come from explicit settings and interaction traces. The profile guides tool selection and plan preferences. Privacy guards restrict collection and support redaction.

• Memory Module: The memory module maintains working memory and long term store. Entries hold facts and events. Retrieval uses embeddings or keyed indexes. Policies apply TTL and LRU to control size. Safety filters remove secrets and personal data. Writes are atomic and versioned.

• Planning Module: The planning module converts goals into ordered steps. Search may use A* or policy rollouts. Cost functions score options and pick a frontier. Constraints and budgets cap branch growth. Failures trigger plan repair and state updates. The planner exposes rationales and estimated risk.

• Action Module: The action module binds a step to a tool. Arguments pass schema checks and type checks. Execution uses retries with backoff and idempotent tokens. Side effects run inside a sandbox and a least privilege scope. Telemetry records latency and error codes and outputs flow to evaluators and memory.
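The TTL and LRU policies mentioned for the memory module can be combined in one small store. The sketch below is an assumption about how such a store might look, not a description of any specific product; the class name `BoundedMemory` and its defaults are hypothetical.

```python
import time
from collections import OrderedDict


class BoundedMemory:
    """Key-value memory that expires entries by TTL and evicts by least recent use."""

    def __init__(self, max_items=100, ttl_seconds=300.0, clock=time.monotonic):
        self._items = OrderedDict()  # key -> (expires_at, value), least recent first
        self._max_items = max_items
        self._ttl = ttl_seconds
        self._clock = clock

    def put(self, key, value):
        self._items[key] = (self._clock() + self._ttl, value)
        self._items.move_to_end(key)  # mark as most recently used
        while len(self._items) > self._max_items:
            self._items.popitem(last=False)  # evict the least recently used entry

    def get(self, key, default=None):
        entry = self._items.get(key)
        if entry is None:
            return default
        expires_at, value = entry
        if self._clock() >= expires_at:
            del self._items[key]  # entry outlived its TTL
            return default
        self._items.move_to_end(key)  # a read also counts as a use
        return value
```

The injectable `clock` makes expiry testable without real waiting. Atomic, versioned writes and secret filtering, which the module description also calls for, are out of scope for this sketch.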

BDI Architecture

BDI (belief-desire-intention) is a model for goal-directed agents that separates beliefs and goals, then tracks a live intention that carries the current plan. The split lets the agent absorb new facts and keep useful progress without throwing away work. Beliefs ground choices so options stay tied to reality. The active intention steers execution, which keeps actions aligned with policy and resource limits.

Core Elements

- Beliefs: A structured world model built from perception and memory.

- Desires: Candidate goals with priorities and constraints.

- Intentions: The chosen goal and the plan the agent is currently pursuing to reach it.

- Plan Library: Reusable procedures with preconditions and effects.

- Commitment Rules: Policies that decide whether to keep or drop the current intention.

- Event Queue: New percepts and internal signals that trigger updates.

Control Loop

- Step 1: Perceive and update beliefs.

- Step 2: Generate options from events and policy.

- Step 3: Filter options to pick an intention using priority and feasibility.

- Step 4: Build or fetch a plan that satisfies the intention.

- Step 5: Execute the next step through a tool or actuator with guard checks.

- Step 6: Monitor results and revise beliefs, plans, or intentions.
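The six steps of the control loop can be sketched as a single method. This is a simplified illustration under stated assumptions: the `Desire` and `BDIAgent` classes, the "switch only when the top option changes" commitment rule, and the example goals are all hypothetical, and real BDI frameworks are considerably richer.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Desire:
    goal: str
    priority: int
    feasible: Callable[[dict], bool]  # beliefs -> can this goal be pursued now?


@dataclass
class BDIAgent:
    beliefs: dict
    desires: list
    plan_library: dict  # goal -> ordered list of step names
    intention: Optional[str] = None
    plan: list = field(default_factory=list)

    def step(self, percept, execute):
        self.beliefs.update(percept)                                      # 1. perceive
        options = [d for d in self.desires if d.feasible(self.beliefs)]   # 2. options
        best = max(options, key=lambda d: d.priority, default=None)       # 3. filter
        new_goal = best.goal if best else None
        if new_goal != self.intention:  # commitment rule: keep the plan unless the top goal changes
            self.intention = new_goal
            self.plan = list(self.plan_library.get(new_goal, []))         # 4. fetch plan
        if not self.plan:
            return None
        action = self.plan.pop(0)                                         # 5. execute next step
        self.beliefs.update(execute(action))                              # 6. revise beliefs
        return action
```

Because the plan is only replaced when the selected goal changes, an agent halfway through a plan keeps its remaining steps when unrelated percepts arrive, which is the "preserves progress" property listed below.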

Top Features

- Clear separation of state and goals.

- Stable behavior under changing inputs.

- Incremental replanning that preserves progress.

- Traceable decisions with interpretable plans.

Best Use Cases

- Long running workflows under strict policy rules.

- Robotics that need path planning and safety checks.

- Operations centers that react to alerts and protect work in progress.

- Enterprise automations that span several tools and require approvals.

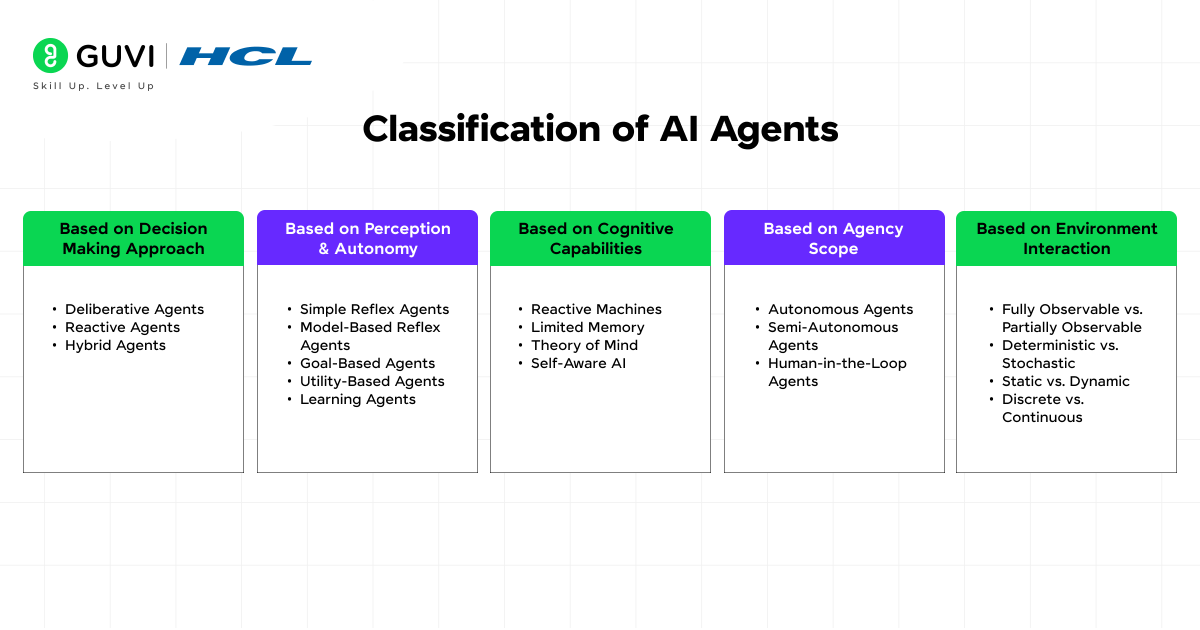

Classification of AI Agents

1. Based on Decision-Making Approach

AI agents can be grouped by how decisions are formed and applied. This framing clarifies trade-offs between planning depth and speed. It covers three types:

- Deliberative Agents

Definition: These agents maintain a world model and compute action sequences with search or logic so each step follows a coherent plan.

Top Features

- Model-based planning using A* or Monte Carlo Tree Search.

- Goal reasoning with constraints and preferences.

- Belief state tracking under partial observability.

- Explicit plans that improve traceability.

- Strong results on long tasks that need foresight.

Best Use Cases

- Multi-step data integration and validation.

- Robotics tasks that need safety checks and path planning.

- Workflow automation with approvals and audits.

- Simulation control with parameter sweeps.
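As an illustration of the model-based planning that deliberative agents rely on, here is a compact A* search on a grid. The grid encoding (0 for free, 1 for blocked), the four-way movement, and the Manhattan-distance heuristic are assumptions made for this sketch.

```python
import heapq


def a_star(grid, start, goal):
    """Return a shortest 4-connected path of (row, col) cells, or None if unreachable."""
    def heuristic(cell):
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(heuristic(start), 0, start)]  # (estimated total, cost so far, cell)
    came_from = {start: None}
    best_cost = {start: 0}
    while frontier:
        _, cost, current = heapq.heappop(frontier)
        if current == goal:
            path = []
            while current is not None:  # walk parent links back to the start
                path.append(current)
                current = came_from[current]
            return path[::-1]
        if cost > best_cost[current]:
            continue  # stale queue entry; a cheaper route was found later
        row, col = current
        for nxt in ((row + 1, col), (row - 1, col), (row, col + 1), (row, col - 1)):
            r, c = nxt
            if not (0 <= r < len(grid) and 0 <= c < len(grid[0])) or grid[r][c] == 1:
                continue
            new_cost = cost + 1
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                came_from[nxt] = current
                heapq.heappush(frontier, (new_cost + heuristic(nxt), new_cost, nxt))
    return None
```

The returned path is the explicit plan: every step can be logged and inspected before execution, which is where the traceability of deliberative agents comes from.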

- Reactive Agents

Definition: These agents map the current observation to an action through rules or learned policies so decisions arrive with very low latency.

Top Features

- Millisecond-level response using simple policies.

- Operation with minimal state to reduce overhead.

- Tolerance to noise in streaming inputs.

- Solid fit for event-driven loops.

Best Use Cases

- High-throughput content filtering and triage.

- Real-time game bots that respond to player moves.

- Execution of trading signals under strict deadlines.

- User interface assistants that act on screen cues.

- Hybrid Agents

Definition: These agents combine a planner for global guidance with a fast control layer for step-level choices so the system stays coherent and responsive.

Top Features

- Hierarchical control where a planner sets subgoals and skills handle steps.

- Retrieval-augmented reasoning with tool calls.

- Fallback policies for tight loops.

- Memory that supports replanning after errors.

Best Use Cases

- Customer journeys across multiple tools and approvals.

- Research assistants that call APIs and write reports.

- Robotics with path planning and reflex layers.

- Incident response that triages alerts and escalates.

2. Based on Perception & Autonomy

Classification based on perception and autonomy explains how an agent gathers signals and builds a workable view of its environment so actions follow context. This framing spans reflex control through planning and learning approaches that raise reliability under noise.

- Simple Reflex Agents

Definition: Simple reflex agents select an action from the current percept through if-then rules. The design ignores history and internal state, which yields short reaction time and low compute cost. This approach fits fully observable settings with stable mappings between signals and actions. Performance drops under partial observability and delayed effects.

Top Features

- Stateless control with fixed rules.

- Very low latency under load.

- Easy to verify and test.

- Predictable behavior in fully observable tasks.

Best Use Cases

- Protocol compliance checks in pipelines.

- Simple UI macros for repetitive clicks.

- Hardware interrupts and low level control loops.

- Basic content triage on clear signals.
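A simple reflex agent reduces to an ordered rule table. The percept fields, thresholds, and action names below are hypothetical and only illustrate the pattern.

```python
# Ordered (condition, action) pairs; the first matching rule wins.
RULES = [
    (lambda p: p["temperature_c"] > 90, "shut_down"),
    (lambda p: p["temperature_c"] > 75, "throttle"),
    (lambda p: p["queue_depth"] > 100, "scale_out"),
]


def reflex_action(percept, rules=RULES, default="no_op"):
    """Map the current percept to an action using only if-then rules (no history)."""
    for condition, action in rules:
        if condition(percept):
            return action
    return default
```

Because the function has no state, the same percept always yields the same action, which is what makes this class of agent easy to verify and test.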

- Model-Based Reflex Agents

Definition: Model-based reflex agents maintain an internal state that summarizes the world. The state updates using a transition model and the latest percept. Action selection still uses rules, yet those rules apply to the internal state rather than only raw input. This extension supports partial observability and noise.

Top Features

- Internal state that tracks recent percepts.

- Transition model that predicts state updates.

- Rules that reference state variables instead of raw input.

- Better robustness under partial observability.

Best Use Cases

- Robots in cluttered rooms with occlusion.

- IoT sites with intermittent sensors.

- Web automation with paginated flows.

- Network monitoring with delayed alerts.
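The difference from a simple reflex agent is that rules read an internal state rather than the raw percept. The sketch below assumes a hypothetical door sensor that sometimes returns no reading (`None`); the state fields and the staleness threshold are illustrative.

```python
from dataclasses import dataclass


@dataclass
class DoorState:
    is_open: bool = False
    steps_since_seen: int = 0  # how stale the last real reading is


def update_state(state, percept):
    """Transition model: keep the last known status when the sensor drops out."""
    if percept is None:  # missing reading
        return DoorState(state.is_open, state.steps_since_seen + 1)
    return DoorState(percept, 0)


def choose_action(state, max_staleness=3):
    """Rules that reference state variables instead of raw input."""
    if state.steps_since_seen > max_staleness:
        return "request_sensor_check"
    return "sound_alarm" if state.is_open else "stay_idle"
```

A simple reflex agent would have no sensible action for a missing reading. Here the agent keeps acting on its last belief and flags the sensor once that belief becomes too stale.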

- Goal-Based Agents

Definition: Goal-based agents choose actions by reasoning over outcomes and desired states. The system uses search or planning to reach the goal from the current state. Heuristics guide the search so time stays bounded. Feedback from the environment triggers plan repair and re-evaluation.

Top Features

- Explicit goals with success tests.

- Forward search or planning over actions.

- Heuristic guidance for speed.

- Plan repair after failures.

Best Use Cases

- Multi-step API workflows with dependencies.

- Route planning with road closures.

- Form filling that spans several pages.

- Data curation pipelines with approvals.

- Utility-Based Agents

Definition: Utility-based agents score states with a utility function that reflects preferences and risk. The policy selects actions that raise expected utility under the current belief. Trade-offs between speed and quality become explicit in the function. Calibration matters because poor scaling skews choices.

Top Features

- Utility function that encodes preferences.

- Expected utility for action ranking.

- Risk-sensitive choices through concave or convex utilities.

- Graceful trade-offs between speed and quality.

Best Use Cases

- Recommendation selection under budget limits.

- Trading systems with risk targets.

- Energy management that balances cost and comfort.

- Autonomous lab scheduling with throughput goals.
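The effect of a concave utility on risk can be shown with a two-action example. The payoffs and probabilities are made up for illustration, and the square root is just one possible concave function.

```python
import math


def expected_utility(outcomes, utility):
    """outcomes: list of (probability, payoff) pairs whose probabilities sum to 1."""
    return sum(p * utility(payoff) for p, payoff in outcomes)


def best_action(actions, utility):
    """Pick the action name with the highest expected utility."""
    return max(actions, key=lambda name: expected_utility(actions[name], utility))


# Hypothetical choice: a sure payoff of 40 versus a coin flip between 100 and 0.
ACTIONS = {
    "safe": [(1.0, 40.0)],
    "risky": [(0.5, 100.0), (0.5, 0.0)],
}


def risk_neutral(payoff):
    return payoff  # linear utility: only the average payoff matters


risk_averse = math.sqrt  # concave utility: large payoffs are discounted
```

Under the linear utility the risky action wins (expected payoff 50 versus 40). Under the square-root utility the safe action wins (about 6.32 versus 5.0), which is the risk-sensitive behavior the feature list describes.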

- Learning Agents

Definition: Learning agents improve behavior through experience and feedback. The design often separates a performance element and a learning element. A critic estimates progress with rewards or losses. A problem generator proposes informative actions that gather data for the next update.

Top Features

- Experience replay or logs for training.

- Online or batch updates to models.

- A critic that supplies rewards or losses.

- Exploration strategies that balance curiosity and safety.

Best Use Cases

- Personal assistants that adapt to a user.

- Robotics skills that improve with practice.

- Fraud models that update with new patterns.

- Text agents that refine retrieval over time.
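One of the smallest learning agents is an epsilon-greedy bandit. In this sketch, which is an illustration rather than a reference design, `choose` plays the role of the performance element and problem generator, the caller supplies the critic's reward, and `learn` is the learning element. The class name and defaults are assumptions.

```python
import random


class BanditLearner:
    """Epsilon-greedy learner that tracks the average reward of each option."""

    def __init__(self, arms, epsilon=0.1, rng=None):
        self.values = {arm: 0.0 for arm in arms}  # running mean reward per arm
        self.counts = {arm: 0 for arm in arms}
        self.epsilon = epsilon
        self.rng = rng or random.Random()

    def choose(self):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.values))   # explore: gather new data
        return max(self.values, key=self.values.get)    # exploit: best known arm

    def learn(self, arm, reward):
        self.counts[arm] += 1
        # Incremental mean: move the estimate toward the observed reward.
        self.values[arm] += (reward - self.values[arm]) / self.counts[arm]
```

A typical loop calls `arm = learner.choose()`, observes a reward from the environment, and then calls `learner.learn(arm, reward)`. Setting `epsilon` to 0 disables exploration entirely.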

3. Based on Cognitive Capabilities

This classification focuses on which cognitive skills an agent simulates and how that shapes memory and interaction. Higher tiers add modeling power over earlier ones, and later tiers remain research topics rather than deployed systems.

- Reactive Machines

Definition: Reactive machines respond only to the current input and carry no memory of prior steps, which yields fast decisions and simple control. Classic chess systems such as IBM Deep Blue reflect this style, and their strength comes from evaluation of the present position rather than stored history.

Top Features

- No persistent memory or belief state.

- Deterministic policies under fixed rules.

- Low latency in fully observable settings.

- High reliability under stable signal mappings.

Best Use Cases

- Hardware control loops.

- Protocol compliance checks.

- Simple classification routers.

- Arcade game agents with short horizons.

- Limited Memory

Definition: Limited memory agents store recent observations and maintain a short history that guides the next action. State updates come from the latest percepts, and that state supports better choices under noise or delay.

Top Features

- Sliding window memory over recent steps.

- State estimation that fuses new signals with prior context.

- Short-term experience replay for updates.

- Online adjustment within a bounded compute budget.

Best Use Cases

- Lane keeping in driver assistance.

- Conversational tools that reference recent turns.

- Predictive maintenance with rolling sensor windows.

- Web automation across multi-page flows.

- Theory of Mind

Definition: Theory of Mind agents attempt to model the beliefs and goals of other actors so interaction accounts for hidden motives. Full capability does not exist today, and current work approximates these skills with statistical inference.

Top Features

- User modeling that infers intent.

- Belief tracking for multi-agent dialog.

- Perspective taking during plan selection.

- Explanations that reflect inferred mental state.

Best Use Cases

- Social robotics research.

- Tutoring systems that adapt to learner misconceptions.

- Negotiation simulators.

- Training tools that model customer behavior.

- Self-Aware AI

Definition: Self-aware AI refers to hypothetical systems that maintain a persistent self model and report internal experience. No such system exists in production, and discussion remains theoretical with strong focus on ethics.

Top Features

- Self model that tracks capabilities and limits.

- Introspective monitoring of goals and resources.

- Calibrated reports of uncertainty.

- Strong alignment and oversight mechanisms.

Best Use Cases

- None in production.

- Research on safety and governance.

- Thought experiments that probe agency limits.

- Policy design and risk assessment.

4. Based on Agency Scope

This view classifies agents by how much independence they hold during execution and how often they defer to people. Greater autonomy shifts control to the system and reduces touch points, and lower autonomy keeps a human in charge with the agent acting as a helper.

- Autonomous Agents

Definition: These agents plan and act within predefined bounds without human intervention for most steps. They set subgoals, select tools, and iterate until success or a guardrail stops progress.

Top Features

- End-to-end planning and execution loops with stateful memory.

- Tool use through APIs with schema validation.

- Self-checks with tests and assertions before and after calls.

- Retry logic with backoff and circuit breakers.

- Telemetry and audit logs for review.

Best Use Cases

- Data pipeline repair and routine maintenance.

- Long-running workflows that coordinate several services.

- Robotic process automation in stable environments.

- Batch research that aggregates results and writes reports.

- Semi-Autonomous Agents

Definition: These agents operate largely on their own yet pause at defined checkpoints for review. Control flows alternate between the system and a designated reviewer so risks stay bounded.

Top Features

- Configurable approval gates at key steps.

- Confidence thresholds that trigger handoff.

- Editable plans with side-by-side previews.

- Safe tool sets with scoped permissions.

- Clear fallbacks that return control to a person.

Best Use Cases

- Procurement tasks that require manager confirmation.

- Code changes that need review before merging.

- Customer support actions, such as refunds above a limit.

- Sales outreach where drafts need human edits.
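Approval gates and confidence thresholds can be expressed as a small routing function. The thresholds, the action names, and the three return labels are hypothetical values picked for this sketch.

```python
def route_step(action, confidence, amount=0.0, *, min_confidence=0.8,
               approval_limit=100.0, always_review=frozenset({"merge_pr"})):
    """Decide whether a step runs automatically or pauses for a person."""
    if confidence < min_confidence:
        return "handoff"          # the agent is unsure, so a person takes over
    if action in always_review or amount > approval_limit:
        return "needs_approval"   # checkpoint: pause until a reviewer confirms
    return "auto_execute"
```

For example, `route_step("refund", 0.95, amount=250.0)` pauses for approval, while the same refund for 20.0 runs on its own. Keeping the gate in one function makes the policy easy to audit and adjust.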

- Human-in-the-Loop Agents

Definition: These agents rely on a person for major decisions and focus on retrieval, drafting, and execution after approval. The design maximizes oversight and keeps final authority with the human operator.

Top Features

- Interactive prompts that collect preferences.

- Explanations of options and expected impact.

- Editable outputs with version history and rollback.

- Strict permissioning with least privilege access.

- Real-time previews before any action runs.

Best Use Cases

- Clinical documentation drafting with clinician review.

- Legal document preparation with attorney edits.

- Financial analysis memos that require analyst approval.

- Content creation workflows that pass through editors.

5. Based on Environment Interaction

- Fully Observable vs. Partially Observable

| Environment Type | Definition | Top Features | Best Use Cases |
| --- | --- | --- | --- |
| Fully Observable | The complete state is available at each step so the policy acts on ground truth. | Direct state policies with simple controllers. Minimal need for memory. Straightforward verification and reproducibility. | Board games with perfect information. Grid control in simulation studies. Quality checks on well-instrumented lines. |
| Partially Observable | Parts of the state are hidden and inputs are noisy so the agent maintains a belief over possible states. | Belief tracking with Bayesian or Kalman filters. Memory that summarizes recent percepts. Sensor fusion that blends predictions with measurements. | Mobile robots with occlusion. Speech interfaces in noisy rooms. Network operations with delayed alerts. |
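Belief tracking under partial observability comes down to a Bayes update. The sketch below handles a discrete set of states; the door example and the sensor accuracy of 0.8 are assumptions for illustration.

```python
def bayes_update(belief, likelihood):
    """Return the posterior belief after one observation.

    belief: state -> prior probability
    likelihood: state -> P(observation | state)
    """
    unnormalized = {s: belief[s] * likelihood[s] for s in belief}
    total = sum(unnormalized.values())
    if total == 0:
        raise ValueError("observation is impossible under the current belief")
    return {s: p / total for s, p in unnormalized.items()}


# Hypothetical noisy sensor: it reports "open" 80% of the time when the door is open
# and 20% of the time when it is closed.
PRIOR = {"open": 0.5, "closed": 0.5}
SAYS_OPEN = {"open": 0.8, "closed": 0.2}
```

One "open" reading moves the belief in `open` from 0.5 to 0.8, and a second identical reading moves it to about 0.94. A Kalman filter applies the same idea to continuous, Gaussian-distributed states.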

- Deterministic vs. Stochastic

| Environment Type | Definition | Top Features | Best Use Cases |
| --- | --- | --- | --- |
| Deterministic | A state and action map to a single next state. | Exact rollouts and reproducible traces. Precise planning with bounded search. Easier debugging and formal proofs. | Compiler optimization. Assembly and pick-and-place cells with tight tolerances. Sorting and routing on closed courses. |
| Stochastic | A state and action map to a distribution over outcomes. | Expected value estimates for planning. Risk-aware policies with variance control. Monte Carlo evaluation and policy gradients. | Portfolio execution under market noise. Inventory and demand planning. Ad ranking with click uncertainty. |

- Static vs. Dynamic

| Environment Type | Definition | Top Features | Best Use Cases |
| --- | --- | --- | --- |
| Static | The world stays unchanged unless the agent acts. | Offline planning on stable snapshots. Batch scheduling with fixed inputs. Simple monitoring for completion. | Batch data cleanup. Map routing on a closed course. Nightly ETL jobs with frozen sources. |
| Dynamic | The world evolves over time even without agent input. | Receding horizon control with frequent replans. Schedulers that adapt to fresh signals. Continuous monitoring with fast triggers. | Fleet control in live traffic. Power balancing on variable loads. Real time incident response. |

- Discrete vs. Continuous

| Environment Type | Definition | Top Features |
| --- | --- | --- |
| Discrete | States or actions take countable values and time often advances in ticks. | Graph search with A* or MCTS. Tabular value iteration with Bellman updates. Exact combinatorial solvers where scale allows. |
| Continuous | Variables are real valued and often evolve through smooth dynamics. | Optimal control with trajectory optimization. Policy learning with continuous action spaces. State estimation with continuous filters. |
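Tabular value iteration with Bellman updates, listed for discrete environments above, fits in a short function. The three-state chain used as the example, its rewards, and the discount of 0.9 are assumptions made for this sketch.

```python
def value_iteration(states, actions, transition, reward, gamma=0.9, tol=1e-8):
    """Compute optimal state values for a small, fully specified MDP.

    actions(s) -> list of actions (empty for terminal states)
    transition(s, a) -> list of (probability, next_state)
    reward(s, a, s2) -> float
    """
    values = {s: 0.0 for s in states}
    while True:
        delta = 0.0
        for s in states:
            choices = actions(s)
            if not choices:
                continue  # terminal state keeps value 0
            best = max(
                sum(p * (reward(s, a, s2) + gamma * values[s2]) for p, s2 in transition(s, a))
                for a in choices
            )
            delta = max(delta, abs(best - values[s]))
            values[s] = best  # Bellman update, applied in place
        if delta < tol:
            return values


# Hypothetical chain 0 -> 1 -> 2, where state 2 is terminal and entering it pays 1.
CHAIN_STATES = [0, 1, 2]


def chain_actions(s):
    return [] if s == 2 else ["right", "stay"]


def chain_transition(s, a):
    return [(1.0, s + 1 if a == "right" else s)]


def chain_reward(s, a, s2):
    return 1.0 if s2 == 2 and s != 2 else 0.0
```

On this chain the values converge to 1.0 for state 1 and 0.9 for state 0, reflecting one step of discounting. The same loop handles stochastic transitions because each action's outcomes are weighted by probability.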


Top Applications of AI Agents

AI agents shine when a goal can be expressed clearly and the tools to act are reliable. Practical value grows as the agent keeps context across steps and feeds results back into memory so later choices improve. The following areas illustrate where that loop turns intent into consistent outcomes.

- Customer Support. Agents classify intent, fetch answers from approved sources, and draft replies that respect policy. Escalation rules route unclear cases to a person so service quality stays steady.

- IT Operations. Agents watch metrics, match patterns to runbooks, and execute gated fixes. Logs and traces anchor each action so audits remain straightforward.

- Data Engineering. Agents orchestrate pipelines, run quality checks, and apply targeted repairs. Context about schemas and lineage guides each fix so downstream jobs keep running.

- Software Delivery. Agents triage issues, propose small pull requests, and keep dependencies current. Safeguards require review before merge so risk stays contained.

- Personal Productivity. Agents schedule meetings, summarize threads, and draft emails with project context. Preferences in a profile keep tone and timing consistent.

Limitations of AI Agents

Real systems face limits that arise from data quality, tool fragility, and oversight. Understanding these limits helps teams set the right scope and design guardrails that keep results dependable.

- Generalization and Reliability: Performance drops outside the training or prompt context. Small shifts in inputs can cause brittle choices.

- Partial Observability: Hidden state and noisy signals confuse planning. Belief tracking helps, yet mistakes still slip in.

- Tool and API Fragility: Schema changes and rate limits interrupt action. Retries and backoff reduce pain but do not remove it.

- Cost and Latency: Deep reasoning raises token use and time. Caching and on-device models help with routine steps.

- Privacy and Security: Broad permissions create exposure. Least privilege scopes and redaction policies reduce that risk.

- Evaluation Gaps: Ground truth is scarce in open tasks. Proxy metrics drift and require regular review.

The Bottom Line: Future of AI Agents

Progress in AI agents points to tighter links between reasoning, verified tools, and traceable memory. Agents will record claims with sources so explanations read like accountable reports. Smaller models on devices will handle routine calls, and larger models in the cloud will take rare and hard cases. Planning will fold in uncertainty more cleanly so choices reflect risk and budget, and governance will ship as reusable templates that slot into existing approval flows.

AI agents work best as patient and policy aware teammates that act within clear bounds. Strong results come from crisp goals and review points that catch drift early. Teams that treat AI agents as systems with memory, tests, and audits gain steady productivity without surprises.

FAQs

1) How are AI agents different from chatbots?

Chatbots follow scripted flows and handle turn-by-turn replies. AI agents hold goals and call tools to act on the world, so they can plan steps and check results before replying.

2) What data do AI agents need?

They need task context and tool schemas. Access rules tell the agent what it may read and change.

3) How do AI agents connect to tools and APIs?

Agents use function calling or adapters to format a request. A gateway checks permissions and rate limits so calls stay within policy.

4) How do AI agents handle errors?

The control loop retries with backoff and uses idempotent tokens to avoid duplicate side effects. Failing steps route to a safe stop or a human review so the system does not drift.
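To show why idempotent tokens prevent duplicate side effects, here is a sketch with an in-memory stand-in for a remote service. `PaymentGateway` and its `charge` method are hypothetical and do not mirror any real payment API.

```python
import uuid


class PaymentGateway:
    """Hypothetical in-memory service that honors idempotency keys."""

    def __init__(self):
        self._results = {}  # idempotency key -> stored result
        self.charges = 0    # number of real side effects performed

    def charge(self, idempotency_key, amount):
        if idempotency_key in self._results:
            return self._results[idempotency_key]  # replay: no second charge
        self.charges += 1
        result = {"charge_id": self.charges, "amount": amount}
        self._results[idempotency_key] = result
        return result


def new_idempotency_key():
    """Generate one key per logical operation and reuse it on every retry."""
    return str(uuid.uuid4())
```

If a retry resends the same key after a timeout, the service returns the stored result instead of charging again. A new logical operation gets a new key.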

5) How do teams measure an AI agent’s success?

Teams track goal completion and cost per outcome. They also review traces and acceptance tests to catch regressions early.
