Types of Machine Learning Algorithms: Top Features & Applications

Sep 09, 2025 (Last Updated)

Every second, machine learning algorithms make millions of predictions worldwide. They influence what we watch, buy, drive, and even how we receive healthcare. But not all algorithms are created equal. Understanding the different types of machine learning algorithms and their real-world applications is vital for turning data into measurable impact.

If you’ve ever wondered which machine learning algorithm could transform your business, keep reading; the answer is in the details.

Table of contents

- What is a Machine Learning Algorithm?

- Broad Categories of a Machine Learning Algorithm

- Supervised Learning

- Unsupervised Learning

- Reinforcement Learning

- Final Words

- FAQs

What is a Machine Learning Algorithm?

A machine learning algorithm is a set of computational rules and mathematical models designed to enable computers to identify patterns and make predictions or decisions without being explicitly programmed for each specific task. Instead of following fixed instructions, machine learning algorithms learn from data by analyzing relationships between input variables and desired outcomes.

Broad Categories of a Machine Learning Algorithm

- Supervised Learning

- Unsupervised Learning

- Reinforcement Learning

We will explore each of these types in detail in the following sections:

1. Supervised Learning

Supervised learning is a type of machine learning in which the model is trained on labeled data, meaning that each input is paired with the correct output. The algorithm learns by mapping inputs to their corresponding outputs. This allows it to make predictions or classifications when exposed to unseen data.

In supervised learning, the training process involves feeding the model datasets that contain both features (inputs) and target labels (outputs). The system adjusts its internal parameters to minimize the difference between predicted and actual results. That difference is usually measured through a loss function.

This approach is widely used in:

- Predictive analytics

- Fraud detection

- Spam filtering

- Customer behavior forecasting.

Supervised learning is ideal for applications where labeled historical data exists and precise, outcome-based predictions are required.

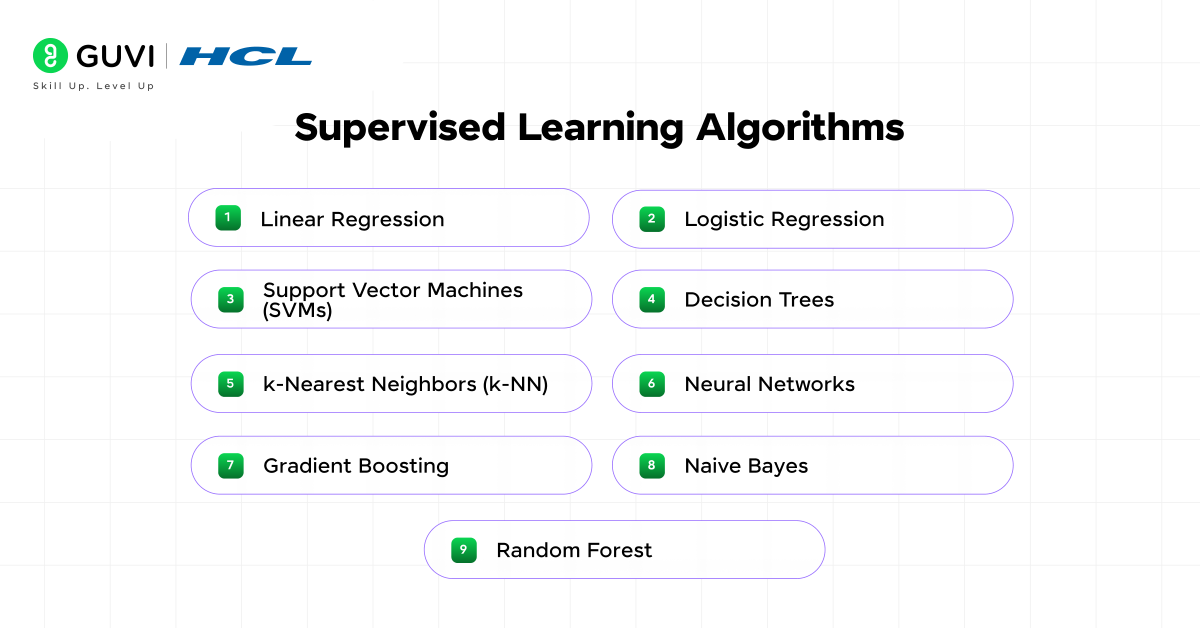

Common supervised learning algorithms include:

- Linear Regression

Definition: Linear regression is a supervised machine learning algorithm. It predicts a continuous numerical outcome based on the relationship between one or more input variables (features) and an output variable. It models this relationship as a straight line or, with several predictors, as a plane or hyperplane.

Key Points:

- Suitable for predicting prices and trend analysis.

- Works best when there is a linear correlation between dependent and independent variables.

- Can be simple (one predictor) or multiple (several predictors).

- Highly interpretable but not suitable for complex and non-linear relationships.

Real-World Applications:

- Real Estate: Predicting house prices based on square footage and amenities.

- Finance: Forecasting stock prices or company revenues based on historical trends.

- Marketing: Estimating the effect of advertising spend on sales performance.
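As a rough illustration of the straight-line idea, the sketch below fits a simple (one-predictor) linear regression with the closed-form least-squares formulas. The function name and the square-footage figures are made up for this example and are not real market data.

```python
def fit_simple_linear_regression(xs, ys):
    """Return (slope, intercept) of the least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical toy data: square footage vs. price (in thousands)
square_feet = [1000, 1500, 2000, 2500]
prices = [200, 300, 400, 500]

slope, intercept = fit_simple_linear_regression(square_feet, prices)
predicted_price = slope * 1800 + intercept  # estimate for an 1,800 sq ft house
print(f"slope={slope:.3f}, intercept={intercept:.3f}, prediction={predicted_price:.1f}")
```

Because the toy data lies exactly on a line, the fitted line reproduces it. Real data would leave residual error, which is what the loss function measures.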

- Logistic Regression

Definition: Logistic regression is a classification algorithm used to predict the probability of a categorical outcome. The outcome is most often binary (yes/no, spam/not spam). The model uses the logistic (sigmoid) function to map its output to a value between 0 and 1.

Key Points:

- Common in healthcare risk prediction and churn analysis.

- Produces probability scores, which can be used to set custom decision thresholds.

- Handles both binary and multi-class classification (the latter via extensions like multinomial logistic regression).

- Works well with linearly separable data.

Real-World Applications:

- Healthcare: Predicting the probability of a patient having a disease based on symptoms and medical history.

- Banking: Classifying loan applicants as high or low credit risk.

- E-commerce: Determining the probability of a customer churning based on engagement metrics.
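To make the sigmoid mapping and the decision threshold concrete, here is a minimal sketch of one-feature logistic regression trained with batch gradient descent on log loss. The function names, hyperparameters, and the login/churn data are assumptions for illustration only.

```python
import math


def sigmoid(z):
    """Map any real number to the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_regression(xs, labels, learning_rate=0.1, epochs=2000):
    """Fit weight w and bias b for one feature with batch gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, labels):
            error = sigmoid(w * x + b) - y  # gradient of log loss w.r.t. the logit
            grad_w += error * x
            grad_b += error
        w -= learning_rate * grad_w / n
        b -= learning_rate * grad_b / n
    return w, b


# Hypothetical toy data: weekly logins -> churned (1) or stayed (0)
logins = [0, 1, 2, 3, 6, 7, 8, 9]
churned = [1, 1, 1, 1, 0, 0, 0, 0]

w, b = train_logistic_regression(logins, churned)
p_low_engagement = sigmoid(w * 1 + b)   # probability of churn at 1 login/week
p_high_engagement = sigmoid(w * 8 + b)  # probability of churn at 8 logins/week
will_churn = p_low_engagement >= 0.5    # 0.5 is a default; the threshold is adjustable
```

The output is a probability rather than a hard label, so the 0.5 cut-off can be raised or lowered depending on the cost of false positives and false negatives.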

- Support Vector Machines (SVMs)

Definition: Support Vector Machines are powerful supervised learning models that classify data by finding the optimal separating boundary (hyperplane) with the maximum margin between classes.

Key Points:

- Performs well in high-dimensional spaces, such as text classification and bioinformatics.

- Effective for non-linear classification through kernel functions (e.g., RBF, polynomial).

- Relatively robust against overfitting in high-dimensional datasets, provided the regularization strength is chosen sensibly.

- Works for both classification and regression tasks (SVR).

Real-World Applications:

- Text Classification: Categorizing emails as spam or not spam.

- Bioinformatics: Classifying proteins or cancer cells based on gene expression data.

- Face Recognition: Identifying individuals from images using facial feature vectors.

- Decision Trees

Definition: Decision trees are rule-based models that split datasets into smaller subsets based on feature conditions. The resulting splits form a tree-like structure for decision-making.

Key Points:

- Easy to interpret and explain to non-technical stakeholders.

- Handles both categorical and numerical data without feature scaling.

- Common in credit scoring and medical diagnosis.

- Prone to overfitting unless pruned or combined with ensemble methods.

Real-World Applications:

- Retail: Segmenting customers for personalized offers.

- Healthcare: Assisting doctors in diagnosing diseases based on patient data.

- Finance: Determining credit card approval based on income and spending history.
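The core step of tree building is choosing a feature condition that separates the classes well. The sketch below finds the best single threshold on one numeric feature using Gini impurity, which is one common splitting criterion (others exist). The helper names and the income data are hypothetical.

```python
def gini(labels):
    """Gini impurity of a list of class labels (0.0 means perfectly pure)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))


def best_split(values, labels):
    """Return (threshold, weighted_gini) for the best 'value <= threshold' split."""
    best = (None, float("inf"))
    # Skip the maximum value: splitting there would leave the right side empty
    for threshold in sorted(set(values))[:-1]:
        left = [y for v, y in zip(values, labels) if v <= threshold]
        right = [y for v, y in zip(values, labels) if v > threshold]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best[1]:
            best = (threshold, score)
    return best


# Hypothetical toy data: annual income (in thousands) -> card approved?
incomes = [20, 25, 30, 45, 60, 80]
approved = ["no", "no", "no", "yes", "yes", "yes"]

threshold, impurity = best_split(incomes, approved)
print(f"split at income <= {threshold}, weighted Gini = {impurity}")
```

A full decision tree applies this search recursively to each resulting subset, which is also why unpruned trees can overfit.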

- k-Nearest Neighbors (k-NN)

Definition: k-NN is a non-parametric algorithm that classifies data points based on the majority class among their k closest neighbors in the feature space.

Key Points:

- Well suited to recommendation systems and pattern recognition.

- No explicit training phase, as predictions are made directly from stored data.

- Performance depends on the choice of k and the distance metric (for example, Euclidean or Manhattan).

- Sensitive to irrelevant or high-dimensional features.

Real-World Applications:

- E-commerce: Recommending products based on customer purchase history.

- Image Recognition: Classifying handwritten digits like in the MNIST dataset.

- Sports Analytics: Grouping players with similar performance metrics for strategy planning.
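The majority-vote idea fits in a few lines. This is a minimal sketch that uses Euclidean distance and k = 3. The player statistics and role labels are invented for illustration.

```python
from collections import Counter
import math


def knn_predict(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Euclidean distance from the query to every stored training point
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    distances.sort(key=lambda pair: pair[0])
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


# Hypothetical toy data: (points per game, assists per game) -> player role
player_stats = [(25, 4), (27, 5), (30, 3), (8, 10), (10, 12), (7, 9)]
player_roles = ["scorer", "scorer", "scorer", "playmaker", "playmaker", "playmaker"]

predicted_role = knn_predict(player_stats, player_roles, query=(26, 4), k=3)
print(predicted_role)
```

Note that all the work happens at prediction time, which is why k-NN has no real training phase but can be slow on large datasets.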

- Neural Networks

Definition: Neural networks are multi-layered computational models inspired by the human brain. They are capable of learning complex patterns in large datasets through interconnected nodes or neurons.

Key Points:

- Backbone of deep learning in image recognition and speech processing.

- Can model highly non-linear and abstract relationships in data.

- Require large datasets and computational resources.

- Types include feedforward, convolutional (CNNs), and recurrent neural networks (RNNs).

Real-World Applications:

- Computer Vision: Detecting objects in autonomous vehicles.

- Natural Language Processing: Powering virtual assistants like Siri or Alexa.

- Healthcare: Identifying tumors in medical imaging scans.

- Gradient Boosting

Definition: Gradient boosting is an ensemble method that builds models sequentially, with each new model correcting the errors of the previous one. Popular frameworks include XGBoost and CatBoost.

Key Points:

- Consistently among the top performers in Kaggle competitions and industry ML solutions.

- Many implementations handle missing values natively and provide feature importance rankings.

- Excellent for tabular datasets in finance and risk modeling.

- Requires careful hyperparameter tuning to avoid overfitting.

Real-World Applications:

- Credit Scoring: Predicting loan defaults using financial history.

- Fraud Detection: Flagging unusual transactions in banking systems.

- E-commerce: Ranking search results and product recommendations.
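The "each new model corrects the previous one" idea can be sketched with one-split regression stumps and squared error, where the quantity each stump fits is simply the current residual. This is a simplified illustration, not how XGBoost or CatBoost are implemented. The function names, hyperparameters, and data are assumptions.

```python
def fit_stump(xs, residuals):
    """Find the single-threshold regression stump that best fits the residuals."""
    best = None
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = sum((r - left_mean) ** 2 for r in left)
        sse += sum((r - right_mean) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    return best[1:]


def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value


def gradient_boost(xs, ys, n_rounds=50, learning_rate=0.3):
    """Boost stumps on squared error: each stump fits the current residuals."""
    base = sum(ys) / len(ys)  # start from the mean prediction
    predictions = [base] * len(xs)
    for _ in range(n_rounds):
        # For squared error, the negative gradient is the residual itself
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_stump(xs, residuals)
        predictions = [
            p + learning_rate * predict_stump(stump, x)
            for p, x in zip(predictions, xs)
        ]
    return base, predictions


def mean_squared_error(ys, predictions):
    return sum((y - p) ** 2 for y, p in zip(ys, predictions)) / len(ys)


# Hypothetical toy data with a step-like trend
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.2, 0.9, 3.1, 3.0, 2.9, 5.2, 5.0]

base, boosted = gradient_boost(xs, ys)
baseline_error = mean_squared_error(ys, [base] * len(ys))
boosted_error = mean_squared_error(ys, boosted)
```

The learning rate shrinks each stump's contribution. Lower values usually need more rounds but tend to overfit less, which is part of the tuning burden mentioned above.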

- Naive Bayes

Definition: Naive Bayes is a probabilistic classifier based on Bayes’ theorem. It assumes that features are conditionally independent given the class label.

Key Points:

- Highly efficient for text classification and spam filtering.

- Works surprisingly well even when independence assumptions are not strictly true.

- Very fast to train and predict, making it suitable for large datasets.

- Variants include Gaussian and Bernoulli Naive Bayes.

Real-World Applications:

- Email Filtering: Detecting spam emails.

- Sentiment Analysis: Classifying customer reviews as positive or negative.

- News Categorization: Assigning articles to topics like sports or technology.
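For text, the independence assumption means a document's score is just the class prior multiplied by per-word probabilities. The sketch below is a small word-count (multinomial-style) classifier with add-one smoothing. The helper names and the four training messages are made up for illustration.

```python
from collections import Counter, defaultdict
import math


def train_naive_bayes(documents, labels):
    """Count documents per class and words per class."""
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    vocabulary = set()
    for text, label in zip(documents, labels):
        words = text.lower().split()
        word_counts[label].update(words)
        vocabulary.update(words)
    return class_counts, word_counts, vocabulary


def predict_naive_bayes(model, text):
    """Return the class with the highest log posterior."""
    class_counts, word_counts, vocabulary = model
    total_docs = sum(class_counts.values())
    scores = {}
    for label in class_counts:
        # log P(class) + sum of log P(word | class), treating words as independent
        score = math.log(class_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            count = word_counts[label][word] + 1  # add-one (Laplace) smoothing
            score += math.log(count / (total_words + len(vocabulary)))
        scores[label] = score
    return max(scores, key=scores.get)


# Hypothetical toy training set
emails = [
    "win free prize now",
    "free money offer",
    "meeting agenda for monday",
    "project status meeting",
]
tags = ["spam", "spam", "ham", "ham"]

model = train_naive_bayes(emails, tags)
spam_guess = predict_naive_bayes(model, "free prize offer")
ham_guess = predict_naive_bayes(model, "monday project meeting")
```

Working in log space avoids numerical underflow when many small probabilities are multiplied, and smoothing keeps unseen words from zeroing out a class.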

- Random Forest

Definition: Random Forest is an ensemble learning method that combines multiple decision trees. Each decision tree is trained on a random subset of the data and features. Averaging or voting across these varied trees helps to improve predictive accuracy.

Key Points:

- Reduces overfitting compared to a single decision tree.

- Works well for classification, regression, and feature selection.

- Is relatively robust to outliers, and some implementations can handle missing values.

- Provides feature importance for better model interpretability.

Real-World Applications:

- Finance: Predicting stock market movements using historical indicators.

- Healthcare: Identifying risk factors for chronic diseases.

- Manufacturing: Detecting defective products in quality control processes.

2. Unsupervised Learning

Unsupervised learning is a machine learning approach in which algorithms work with unlabeled data, meaning that there are no predefined outputs. The goal is to discover hidden patterns or structures within the data without prior guidance.

Instead of being told what the correct answer is, the algorithm analyzes the data to find clusters or lower-dimensional representations that reveal underlying trends. This makes unsupervised learning powerful for exploratory data analysis and customer segmentation. It is also common in anomaly detection and recommendation systems. Because it can work without human-labeled datasets, unsupervised learning is valuable in big data environments and in scenarios where manual labeling is impractical or costly.

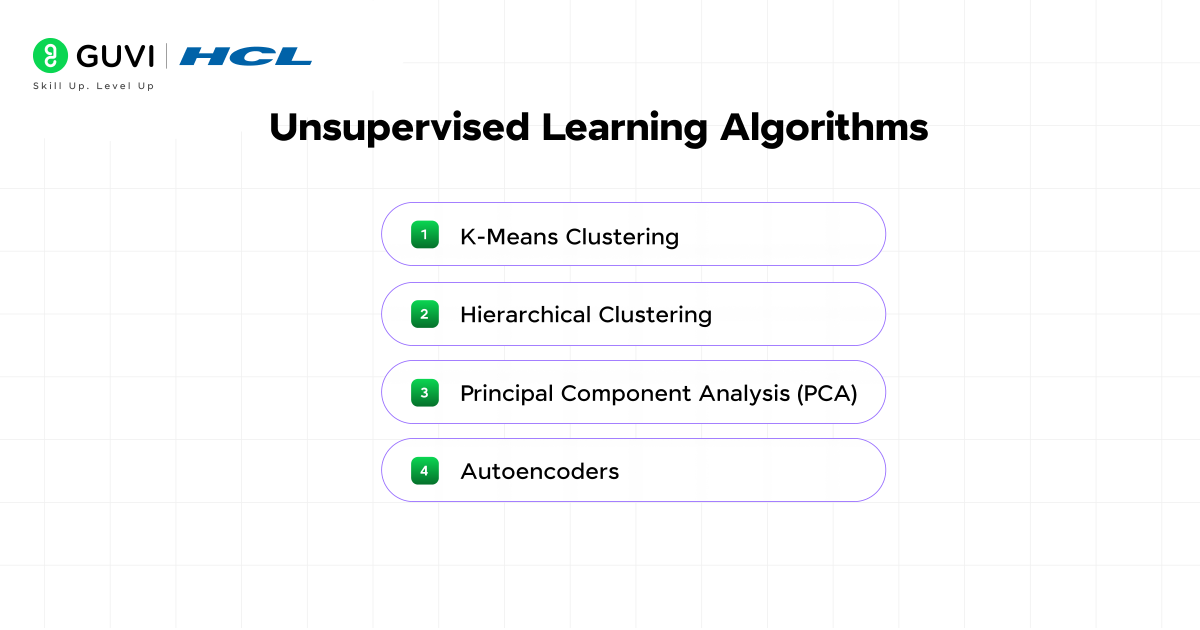

Popular unsupervised learning techniques include:

- K-Means Clustering

Definition: K-Means clustering is an unsupervised machine learning algorithm that groups similar data points into a predefined number of clusters (k). It works by assigning each point to the nearest cluster center (centroid) and recalculating those centers until the groupings are stable.

Key Points

- Works best with numerical data where similarities can be measured by distance.

- Requires the number of clusters (k) to be set before running the algorithm.

- Uses an iterative approach to minimize the distance between points and their cluster centers.

- Efficient for large datasets and widely used in segmentation tasks.

Real-World Applications

- Customer Segmentation: Retailers use it to group buyers with similar purchasing patterns for targeted marketing.

- Image Compression: Groups pixels of similar colors to reduce image file sizes.

- Fraud Detection: Clusters normal transaction behavior to help spot unusual activities.
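The assign-then-recalculate loop described above is often called Lloyd's algorithm. Here is a minimal sketch with the starting centroids passed in explicitly so the result is reproducible (practical implementations usually pick them randomly or with a scheme such as k-means++). The points and names are hypothetical.

```python
import math


def k_means(points, centroids, max_iterations=100):
    """Alternate assignment and centroid update until the centroids stop moving."""
    for _ in range(max_iterations):
        # Assignment step: attach each point to its nearest centroid
        clusters = [[] for _ in centroids]
        for point in points:
            nearest = min(
                range(len(centroids)),
                key=lambda i: math.dist(point, centroids[i]),
            )
            clusters[nearest].append(point)
        # Update step: move each centroid to the mean of its cluster
        new_centroids = []
        for cluster, old in zip(clusters, centroids):
            if cluster:
                new_centroids.append(
                    tuple(sum(axis) / len(cluster) for axis in zip(*cluster))
                )
            else:
                new_centroids.append(old)  # keep an empty cluster's centroid in place
        if new_centroids == centroids:
            break  # groupings are stable
        centroids = new_centroids
    return centroids, clusters


# Hypothetical toy data: two visibly separate groups of 2-D points
points = [(1, 2), (2, 1), (1, 1), (9, 9), (10, 8), (9, 10)]
initial_centroids = [(1, 2), (9, 9)]  # k = 2, chosen by hand for this sketch

final_centroids, clusters = k_means(points, initial_centroids)
```

Different starting centroids can lead to different final clusters, so it is common to run the algorithm several times and keep the best result.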

- Hierarchical Clustering

Definition: Hierarchical clustering is an unsupervised learning method that creates a hierarchy of clusters in the form of a tree structure called a dendrogram. It does not require a fixed number of clusters in advance and allows you to choose the cluster cut-off after analysis.

Key Points

- Two main types: Agglomerative (bottom-up merging) and Divisive (top-down splitting).

- Useful when the number of clusters is unknown.

- Produces a dendrogram that visually shows the similarity between points.

- Works well with smaller datasets where relationships between data points are important.

Real-World Applications

- Bioinformatics: Groups genes or proteins with similar patterns for genetic research.

- Document Categorization: Clusters articles by topic for easier organization.

- Market Research: Segments customers without prior knowledge of the ideal number of groups.

- Principal Component Analysis (PCA)

Definition: Principal Component Analysis is a dimensionality reduction technique that transforms a large set of variables into a smaller set called principal components, while retaining the most important patterns in the data.

Key Points

- Helps reduce the complexity of datasets without losing much valuable information.

- Removes redundant and correlated features.

- Often used before training machine learning models to improve efficiency.

- The first principal component captures the most variance, the second captures the next highest, and so on.

Real-World Applications

- Facial Recognition: Compresses image data while retaining key facial features.

- Finance: Combines multiple market indicators into fewer components for better analysis.

- Sensor Data Analysis: Simplifies data from IoT devices for faster processing.
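To show what "the first principal component captures the most variance" means in practice, the sketch below centers a small two-feature dataset, builds its covariance matrix, and finds the dominant direction with power iteration. Power iteration is one of several ways to compute it and is chosen here only because it needs no external libraries. The data and names are assumptions.

```python
import math


def first_principal_component(data, iterations=100):
    """Return (unit direction of maximum variance, feature means)."""
    n, dims = len(data), len(data[0])
    # Center each feature at zero mean
    means = [sum(row[j] for row in data) / n for j in range(dims)]
    centered = [[row[j] - means[j] for j in range(dims)] for row in data]
    # Sample covariance matrix
    cov = [
        [sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(dims)]
        for i in range(dims)
    ]
    # Power iteration converges to the eigenvector with the largest eigenvalue
    vector = [1.0] * dims
    for _ in range(iterations):
        product = [sum(cov[i][j] * vector[j] for j in range(dims)) for i in range(dims)]
        norm = math.sqrt(sum(v * v for v in product))
        vector = [v / norm for v in product]
    return vector, means


# Hypothetical toy data: the second feature is roughly twice the first
data = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9), (4.0, 8.0), (5.0, 10.1)]

component, means = first_principal_component(data)
# Project each 2-D point onto the component to get a 1-D representation
projected = [
    sum((x - m) * c for x, m, c in zip(row, means, component)) for row in data
]
```

Because the two features here are strongly correlated, a single component keeps almost all of the variation, which is the redundancy-removal effect described above.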

- Autoencoders

Definition: Autoencoders are a type of artificial neural network used for learning efficient data representations in an unsupervised way. They compress data into a smaller form (encoding) and then reconstruct it back to its original form (decoding).

Key Points

- Consist of two main parts: Encoder (compresses data) and Decoder (reconstructs data).

- Learn automatically from input data without needing labeled examples.

- Useful for anomaly detection because unusual inputs produce high reconstruction errors.

- Can handle complex and high-dimensional data such as images and audio.

Real-World Applications

- Fraud Detection: Identifies suspicious transactions by spotting patterns that cannot be accurately reconstructed.

- Image Denoising: Removes visual noise while keeping the core image intact.

- Recommendation Systems: Creates compact representations of user preferences for faster and more accurate suggestions.

3. Reinforcement Learning

Reinforcement learning (RL) is a machine learning paradigm where an agent learns how to make decisions by interacting with an environment and receiving feedback in the form of rewards or penalties. The goal of the agent is to maximize cumulative rewards over time through trial-and-error learning.

RL focuses on sequential decision-making and is particularly effective in situations where an optimal strategy is not known in advance but can be learned through experience. The agent observes the current state and chooses an action. It then transitions to a new state while receiving feedback that guides future choices.

Reinforcement learning powers advanced applications such as:

- Autonomous vehicles

- Robotics control

- Game AI (e.g., AlphaGo)

- Personalized recommendations

- Dynamic pricing strategies

Reinforcement learning’s strength is in solving complex and high-dimensional problems, typically those where long-term rewards outweigh immediate gains. This strength makes it a cornerstone of artificial intelligence innovation.

Popular RL algorithms include:

- Q-Learning

Definition: Q-Learning is a model-free reinforcement learning algorithm that helps an agent learn the best action to take in a given state to maximize long-term rewards. It uses a value-based approach where a Q-table stores the expected rewards for state-action pairs.

Key Points

- Works without needing a model of the environment.

- Uses the Bellman equation to update Q-values based on actions and observed rewards.

- Balances exploration (trying new actions) and exploitation (choosing the best-known action).

- Works well in environments with discrete states and actions.

Real-World Applications

- Robotics: Enables robots to learn movement strategies without prior programming.

- Game AI: Used to train agents to play games like Tic-Tac-Toe or simple maze navigation.

- Network Routing: Optimizes data packet delivery in communication networks.
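A tiny tabular example helps show the Q-table, the Bellman-style update, and epsilon-greedy exploration working together. The environment below, a five-cell corridor with a reward only at the far end, is a hypothetical toy, and the hyperparameters are arbitrary choices for this sketch.

```python
import random

N_STATES = 5        # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)  # index 0 = move left, index 1 = move right


def step(state, action):
    """Hypothetical environment: reward 1.0 only when the goal cell is reached."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def greedy_action(q_values, rng):
    """Pick the best-known action, breaking ties at random."""
    best = max(q_values)
    return rng.choice([a for a, q in enumerate(q_values) if q == best])


def train_q_table(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q_table = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: explore with probability epsilon, otherwise exploit
            if rng.random() < epsilon:
                action_index = rng.randrange(len(ACTIONS))
            else:
                action_index = greedy_action(q_table[state], rng)
            next_state, reward, done = step(state, ACTIONS[action_index])
            # Update toward reward + discounted best future value
            best_next = 0.0 if done else max(q_table[next_state])
            target = reward + gamma * best_next
            q_table[state][action_index] += alpha * (target - q_table[state][action_index])
            state = next_state
    return q_table


q_table = train_q_table()
# Greedy policy for the non-terminal cells: 1 means "move right"
greedy_policy = [
    max(range(len(ACTIONS)), key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)
]
```

After training, the learned values decay with distance from the goal by the discount factor, so the greedy policy heads right from every cell.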

- Deep Q-Networks (DQNs)

Definition: Deep Q-Networks combine Q-Learning with deep neural networks to deal with large or continuous state spaces. Instead of storing Q-values in a table, a DQN uses a neural network to approximate them.

Key Points

- Allows reinforcement learning to scale to complex and high-dimensional environments.

- Introduces experience replay (storing and reusing past experiences) to improve stability.

- Uses a target network to prevent unstable learning updates.

- Often applied in environments where traditional Q-tables would be too large.

Real-World Applications

- Atari Games: DQNs famously learned to play classic games directly from raw pixels.

- Self-Driving Cars: Helps vehicles learn decision-making for navigation and obstacle avoidance.

- Financial Trading: Assists in building automated trading strategies that adapt over time.

- Policy Gradient Methods

Definition: Policy Gradient methods are reinforcement learning algorithms that directly learn a policy (a mapping from states to actions) rather than estimating Q-values. They adjust policy parameters in the direction that increases expected rewards.

Key Points

- Works well for environments with continuous action spaces.

- Avoids some limitations of value-based methods like Q-Learning.

- Common variants include REINFORCE and Actor-Critic methods.

- Can represent stochastic policies for better exploration.

Real-World Applications

- Robotics Control: Learns smooth and precise control strategies for robotic arms.

- Autonomous Drones: Helps drones plan smooth flight paths in real-world conditions.

- Natural Language Processing: Used in training conversational AI to optimize dialogue strategies.
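The "adjust policy parameters in the direction that increases expected rewards" step can be sketched with REINFORCE on a one-step, two-action task. The policy is a softmax over one preference per action. The reward values, learning rate, and function names are assumptions chosen for illustration.

```python
import math
import random


def softmax(preferences):
    """Turn raw action preferences into a probability distribution."""
    peak = max(preferences)
    exps = [math.exp(p - peak) for p in preferences]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_bandit(rewards, steps=2000, learning_rate=0.1, seed=0):
    """REINFORCE on a one-step task: theta += lr * reward * grad log pi(action)."""
    rng = random.Random(seed)
    theta = [0.0] * len(rewards)
    for _ in range(steps):
        probs = softmax(theta)
        # Sample an action from the current stochastic policy
        action = rng.choices(range(len(rewards)), weights=probs)[0]
        reward = rewards[action]
        for a in range(len(theta)):
            # Gradient of log softmax: 1 - pi(a) for the chosen action, -pi(a) otherwise
            grad_log_pi = (1.0 if a == action else 0.0) - probs[a]
            theta[a] += learning_rate * reward * grad_log_pi
    return softmax(theta)


# Hypothetical two-action task: action 1 pays more than action 0
action_probabilities = reinforce_bandit(rewards=[0.2, 1.0])
```

No Q-values are estimated anywhere. The policy itself shifts probability toward the better-paying action, and it stays stochastic throughout, which is what allows continued exploration.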


Final Words

Machine learning algorithms are the engines behind modern artificial intelligence, and understanding them is essential for anyone looking to innovate or optimize in today’s data-driven world. In short:

- Supervised learning excels when labeled historical data is available.

- Unsupervised learning reveals hidden patterns without prior labeling.

- Reinforcement learning facilitates adaptive decision-making in dynamic environments.

The key takeaway? Choosing the right algorithm is a strategic move. The more you understand their capabilities and limitations, the better positioned you are to use them for impactful results.

FAQs

1. How do machine learning algorithms improve over time?

Machine learning algorithms improve through iterative training on data. They adjust internal parameters to reduce errors and refine predictions with each cycle. Advanced techniques like gradient boosting and reinforcement learning help models adapt dynamically, even in unpredictable environments.

2. Can one machine learning algorithm handle multiple types of problems?

Yes, some algorithms are versatile. For example, neural networks can handle image recognition, pattern recognition, and speech processing by using different architectures. However, in most cases, choosing an algorithm suited to each problem type yields better accuracy and efficiency.

3. What factors influence the choice of a machine learning algorithm?

The best algorithm depends on factors like:

- Data size

- Feature types

- Available labels

- Desired accuracy

- Interpretability

- Computational resources.

For example, decision trees are easy to interpret, whereas gradient boosting often wins on accuracy for complex datasets.

4. Are unsupervised learning algorithms suitable for big data?

Yes. Unsupervised learning techniques like k-means clustering and PCA are well suited to big data environments because they find hidden patterns without labeled datasets. They also help reduce dimensionality and group data points efficiently. These features make them a strong fit for large-scale analytics.

5. How is reinforcement learning different from supervised learning?

Supervised learning relies on labeled datasets to learn correct outputs. In contrast, reinforcement learning learns through interaction with an environment, using rewards and penalties as feedback. This makes RL well suited to decision-making tasks like robotics control and adaptive game strategies.
