Reproducibility in Machine Learning: A Beginner’s Guide 2025

Last Updated: Jan 09, 2026 · 7 Min Read

Reproducibility in machine learning remains a significant challenge despite the field’s rapid advancement. Research is currently facing a reproducibility crisis, where many studies produce results that are difficult or impossible to reproduce. When you work with machine learning models, reproducibility means you can repeatedly run your algorithm on specific datasets and obtain the same (or similar) results.

Although machine learning platforms and similar tools exist to address this issue, the level of reproducibility in ML-driven research has not increased substantially. This matters because reproducibility helps in debugging, comparing models, sharing work with others, and deploying reliable systems in the real world. Despite its importance, reproducibility doesn’t come easily: complex challenges can make replicating ML results from papers seem almost impossible.

In this beginner-friendly guide, you’ll learn what reproducibility means in machine learning, why it’s crucial for your projects, and practical steps to overcome common challenges. We’ll explore essential tools, best practices, and straightforward techniques that can help you create more reliable and trustworthy machine learning models. Let’s begin!

Table of contents

- What is reproducibility in machine learning?

- Why reproducibility matters in ML research

- Difference between reproducibility and replicability

- Core components of reproducible machine learning

- Code versioning and tracking

- Dataset consistency and versioning

- Environment and dependency management

- Common Challenges in Achieving Reproducibility

- 1) Lack of experiment tracking

- 2) Randomness in training processes

- 3) Hyperparameter inconsistencies

- 4) Framework and library updates

- 5) Hardware and floating-point variations

- Top Tools and Platforms for Reproducibility in Machine Learning

- DVC (Data Version Control)

- MLflow

- neptune.ai

- WandB (Weights & Biases)

- Comet.ml

- TensorFlow Extended (TFX)

- Kubeflow

- Amazon SageMaker

- Best Practices to Improve Reproducibility in ML Projects

- 1) Set random seeds and control randomness

- 2) Use model and data versioning tools

- 3) Track metadata and artifacts

- 4) Collaborate using shared platforms

- 5) Avoid non-deterministic algorithms

- Concluding Thoughts…

- FAQs

- Q1. What is reproducibility in machine learning?

- Q2. Why is reproducibility important in machine learning research?

- Q3. What are the core components of reproducible machine learning?

- Q4. What are some common challenges in achieving reproducibility in ML projects?

- Q5. What are some best practices to improve reproducibility in ML projects?

What is reproducibility in machine learning?

Reproducibility in machine learning represents the ability to consistently achieve the same (or similar) results when repeatedly running algorithms on specific datasets. This concept extends beyond mere result duplication to encompass the entire ML pipeline—from data processing to model design, evaluation, and successful deployment.

Why reproducibility matters in ML research

The machine learning community faces a significant reproducibility crisis, part of a broader problem across science. A 2016 Nature survey of more than 1,500 researchers from many disciplines found that more than 70% had tried and failed to reproduce another scientist’s experiments, and more than half had failed to reproduce their own. This crisis threatens the credibility of the field and hampers scientific progress.

Reproducibility serves several critical functions:

- Verification of claims: It allows the scientific community to validate findings and build upon established knowledge rather than potentially flawed research

- Error detection: Reproducible research makes it easier to identify mistakes or biases in experimental design

- Resource efficiency: Without reproducibility, researchers waste valuable time and computing resources attempting to build on irreproducible work

- Scientific integrity: As Ali Rahimi noted in his influential 2017 NeurIPS talk, the field has become overly reliant on intuition and luck, lacking rigorous scientific scaffolding

Reproducibility also guards against selective reporting: ML researchers often report only their best results, which can obscure how fragile a model really is.

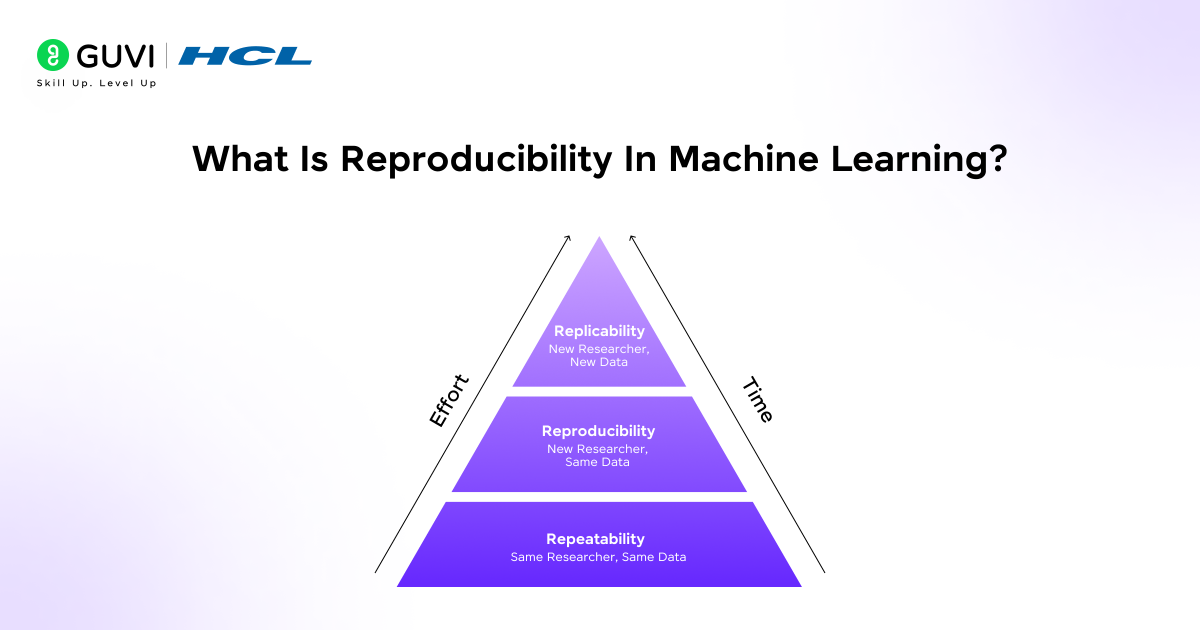

Difference between reproducibility and replicability

While often used interchangeably, these terms represent distinct concepts. According to the National Academies of Sciences, Engineering, and Medicine, reproducibility refers to obtaining the same results using the same data, code, and methods as the original study.

Conversely, replicability involves finding similar results in a new study with new data but similar methods. Beyond this distinction, researchers have identified different levels of reproducibility:

- Outcome reproducibility requires experiments to yield the same or adequately similar results, leading to the same analysis and interpretation.

- Analysis reproducibility doesn’t demand identical outcomes but requires that the same analysis can be performed, leading to similar interpretations.

- Interpretation reproducibility only requires that the same conclusions can be drawn, even if outcomes and analyses differ.

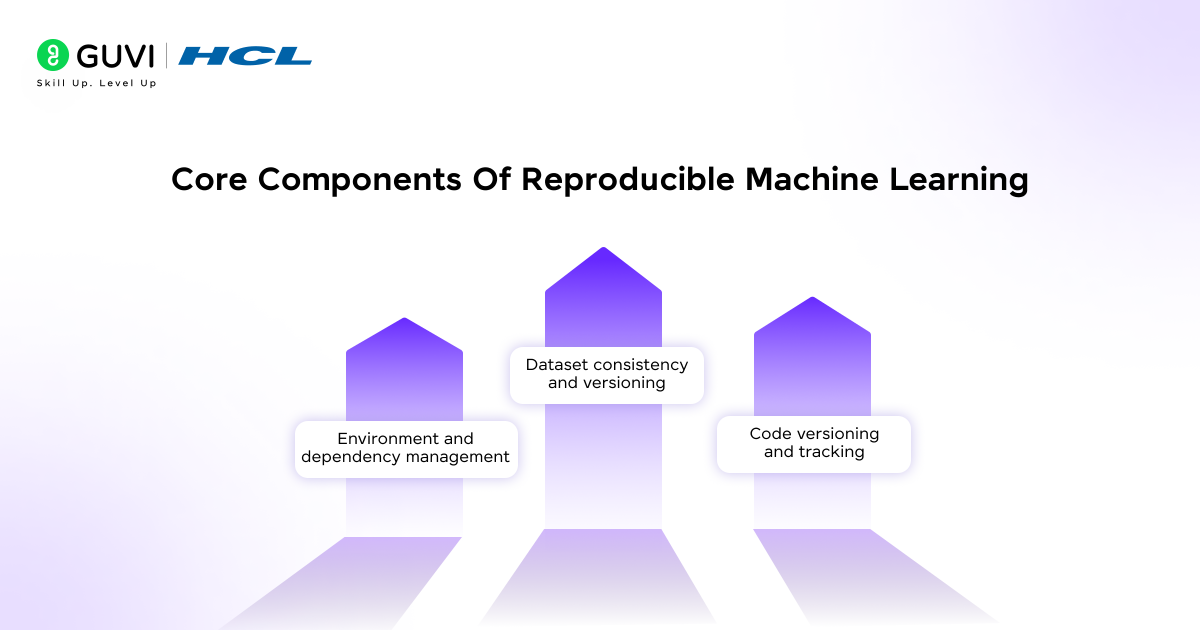

Core components of reproducible machine learning

To build truly reproducible machine learning systems, you need a solid foundation built on three essential pillars. These components, sometimes called the “Holy Trinity of ML Reproducibility”, work together to ensure your models can be reliably recreated regardless of who runs them or where they’re deployed.

1. Code versioning and tracking

Code versioning serves as the backbone of reproducible machine learning projects. It involves systematically tracking every change made to your code, so you keep a complete history of your model’s evolution. Code that is never shared is a major obstacle: in a study presented at AAAI, Odd Erik Gundersen reported that only 6% of the papers he surveyed from top AI conferences shared their code.

For ML projects, code versioning offers several unique advantages:

- Traceability: Every modification to model architecture, hyperparameters, and preprocessing steps becomes visible and documented

- Collaboration: Team members can work in parallel and merge their changes in a controlled way, which is especially important in distributed environments

- Rollback capability: You can easily revert to previous working versions when needed

Unlike traditional software, machine learning code goes through rapid, high-variance iterations during experimentation, which makes proper version control through tools like Git even more crucial. Version control also keeps training logic out of personal notebooks and isolated virtual machines, where it stays hidden from everyone else; that kind of setup has been described as “the literal inverse of reproducible training”.
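As a minimal sketch of tying results to an exact code version, the Python helpers below record the Git commit a run was launched from and whether the working tree had uncommitted changes. They assume the git command-line tool is installed; the names current_git_commit and has_uncommitted_changes are hypothetical, chosen for illustration.

```python
import subprocess
from typing import Optional


def _git(args: list, repo_dir: str) -> Optional[str]:
    """Run a git command in repo_dir and return its stdout, or None on failure."""
    try:
        result = subprocess.run(
            ["git", *args],
            cwd=repo_dir,
            capture_output=True,
            text=True,
            check=True,
        )
    except (OSError, subprocess.CalledProcessError):
        # git is missing, repo_dir does not exist, or it is not a Git repository
        return None
    return result.stdout.strip()


def current_git_commit(repo_dir: str = ".") -> Optional[str]:
    """Return the full hash of HEAD, or None if it cannot be determined."""
    return _git(["rev-parse", "HEAD"], repo_dir)


def has_uncommitted_changes(repo_dir: str = ".") -> Optional[bool]:
    """True if tracked or untracked files differ from HEAD; None if unknown."""
    status = _git(["status", "--porcelain"], repo_dir)
    return None if status is None else status != ""


if __name__ == "__main__":
    print("commit:", current_git_commit())
    print("dirty:", has_uncommitted_changes())
```

Storing the commit with every logged result (and warning when the tree is dirty) means each result points at a code state you can check out again.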

2. Dataset consistency and versioning

Data versioning tackles perhaps the most challenging aspect of ML reproducibility. Unlike code, data is “highly mutable by nature” and can change due to:

- Collaboration when team members update records

- External dependencies when third-party sources update

- Data management policies when information gets rewritten or deleted

Many data scientists attempt to solve this by creating duplicate copies of datasets, but this approach quickly becomes impractical due to storage costs and governance concerns. Instead, proper dataset versioning tools like Data Version Control (DVC) allow you to create “immutable snapshots” of data at specific points in time without duplicating entire datasets.
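DVC’s own cache and metafile formats are more involved, but the core idea of a content-based snapshot can be sketched in a few lines of Python: hash every file in a dataset directory, then hash the sorted list of (path, hash) pairs into a single fingerprint. This is an illustrative stand-in, not DVC’s implementation; dataset_manifest and dataset_fingerprint are hypothetical names.

```python
from __future__ import annotations

import hashlib
from pathlib import Path


def file_sha256(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for block in iter(lambda: f.read(chunk_size), b""):
            digest.update(block)
    return digest.hexdigest()


def dataset_manifest(root: str | Path) -> dict[str, str]:
    """Map each file's path (relative to root) to its SHA-256 digest."""
    root = Path(root)
    return {
        p.relative_to(root).as_posix(): file_sha256(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def dataset_fingerprint(manifest: dict[str, str]) -> str:
    """Combine per-file digests into one ID that changes if any file changes."""
    digest = hashlib.sha256()
    for rel_path, file_digest in sorted(manifest.items()):
        digest.update(f"{rel_path}\t{file_digest}\n".encode("utf-8"))
    return digest.hexdigest()
```

Logging the fingerprint with each run shows at a glance whether two results came from the same data, and keeping the manifest tells you which files changed in between.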

Dataset version control moves ML work closer to what Ali Rahimi called for in his NeurIPS talk: a more rigorous scientific scaffolding for machine learning. Because you track exactly which data produced which results, there is no ambiguity about dataset versions, and, as one account puts it, debugging that once “took weeks now takes hours”.

3. Environment and dependency management

The final pillar focuses on ensuring consistency in the software environment where your code runs. Environment management involves tracking all external software required for your model and prediction code to function correctly.

This component includes documenting:

- Library dependencies (like scikit-learn, numpy, pandas)

- Specific version numbers

- System-level dependencies (operating systems, runtime libraries)

Without proper environment management, even identical code and data can produce different results. Tools like virtual environments (venv, conda) and containerization (Docker) help address these challenges by creating isolated, reproducible environments.

Virtual environments allow you to install packages for specific projects without affecting your global Python installation. Moreover, tools like Conda can manage not only Python packages but also system-level dependencies, ensuring a more complete and portable environment.
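Alongside pip freeze or conda env export, it can help to store a small snapshot of the runtime with every experiment. The sketch below uses only the Python standard library (importlib.metadata and platform); the function name environment_snapshot and its optional package filter are illustrative assumptions.

```python
import json
import platform
from importlib import metadata
from typing import Iterable, Optional


def environment_snapshot(packages: Optional[Iterable[str]] = None) -> dict:
    """Collect the Python version, OS details, and installed package versions.

    If `packages` is given, only those distributions (case-insensitive) are kept.
    """
    wanted = {p.lower() for p in packages} if packages is not None else None
    installed = {}
    for dist in metadata.distributions():
        name = dist.metadata.get("Name")
        if not name:
            continue  # skip broken or nameless distributions
        if wanted is None or name.lower() in wanted:
            installed[name] = dist.version
    return {
        "python": platform.python_version(),
        "implementation": platform.python_implementation(),
        "platform": platform.platform(),
        "machine": platform.machine(),
        "packages": dict(sorted(installed.items())),
    }


if __name__ == "__main__":
    print(json.dumps(environment_snapshot(["numpy", "pandas", "scikit-learn"]), indent=2))
```

Saving this JSON next to each run’s results records the software side of the environment; system libraries such as CUDA still need to be captured by Conda or a container image.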

Together, these three pillars form a comprehensive foundation for reproducible machine learning. When properly implemented, they ensure that you—or anyone else—can recreate your exact experimental conditions, leading to consistent and trustworthy results.

Common Challenges in Achieving Reproducibility

Despite best efforts, achieving true reproducibility in machine learning remains difficult, and numerous obstacles stand in the way of consistently reproducible ML experiments. Let’s examine the five major challenges you’re likely to encounter.

1) Lack of experiment tracking

This obstacle represents perhaps the most significant barrier to reproducible ML experiments. When inputs, parameters, and decisions aren’t systematically recorded during experimentation, replicating results becomes nearly impossible. Many researchers fail to log critical changes in:

- Hyperparameter values

- Batch sizes

- Data preprocessing steps

- Model architecture decisions

Without proper documentation of these details, understanding and reproducing models later becomes extremely difficult. The problem compounds at publication time: as noted earlier, Gundersen found that only 6% of the papers he surveyed from top AI conferences shared their code.
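Dedicated trackers (covered below) handle this for you, but the underlying idea is simple enough to sketch: append one structured record per run, containing every parameter and the resulting metrics, to a log file. The JSON Lines layout and the function names log_run and load_runs are assumptions made for illustration.

```python
import json
import time
import uuid
from pathlib import Path
from typing import Any, Dict, List


def log_run(log_path: str, params: Dict[str, Any], metrics: Dict[str, float],
            notes: str = "") -> str:
    """Append one run (parameters, metrics, notes) as a JSON line; return its ID."""
    record = {
        "run_id": uuid.uuid4().hex,
        "timestamp": time.time(),
        "params": params,    # e.g. learning rate, batch size, preprocessing flags
        "metrics": metrics,  # e.g. validation accuracy, loss
        "notes": notes,      # e.g. why the architecture changed
    }
    with Path(log_path).open("a", encoding="utf-8") as f:
        f.write(json.dumps(record, sort_keys=True) + "\n")
    return record["run_id"]


def load_runs(log_path: str) -> List[Dict[str, Any]]:
    """Read all logged runs back, oldest first."""
    with Path(log_path).open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]
```

Because every run is appended rather than overwritten, the history of what was tried, including failed attempts, survives.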

2) Randomness in training processes

Machine learning systems rely heavily on randomization techniques that introduce variability. These sources of randomness include:

- Random weight initialization

- Random noise introduction

- Data shuffling and augmentation

- Dropout layer behavior

- Random batch selection

All these elements can significantly affect model performance. One striking study reported that 16 identical training processes with the same architecture produced accuracies ranging from 8.6% to 99.0%, a spread of 90.4 percentage points.
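A toy example makes the point concrete. The sketch below fits a single weight w in y = 2x with stochastic gradient descent, where both the initial weight and the per-epoch shuffle order come from a random generator. With a fixed seed, repeated runs return exactly the same weight; the function name train_toy_model and all values are illustrative.

```python
import random
from typing import Optional


def train_toy_model(seed: Optional[int], epochs: int = 20, lr: float = 0.05) -> float:
    """Fit y = w * x by SGD; randomness enters through init and shuffling."""
    rng = random.Random(seed)              # seed=None -> seeded from OS entropy
    data = [(x / 5, 2 * x / 5) for x in range(1, 6)]
    w = rng.uniform(-1.0, 1.0)             # random weight initialization
    for _ in range(epochs):
        rng.shuffle(data)                  # random data order each epoch
        for x, y in data:
            grad = 2 * (w * x - y) * x     # derivative of (w*x - y)**2 w.r.t. w
            w -= lr * grad
    return w


if __name__ == "__main__":
    print(train_toy_model(seed=0) == train_toy_model(seed=0))       # True
    print(train_toy_model(seed=None), train_toy_model(seed=None))   # usually differ slightly
```

Real networks have many more random draws (dropout masks, augmentation, batch sampling), which is why small differences in any one of them can compound into the large spreads described above.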

3) Hyperparameter inconsistencies

Even slight changes to hyperparameters during experimentation can yield dramatically different results. Default hyperparameter values can also change between library versions, silently altering any run that relied on them without recording them. This creates a situation where:

- The original hyperparameter combination becomes lost

- New runs produce inconsistent outcomes

- Reproducing the exact model becomes virtually impossible

In ML systems, the number of possible hyperparameter combinations grows combinatorially large, making systematic tracking essential.
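One way to keep the original hyperparameter combination from getting lost is to spell out every value, including defaults, in a single immutable configuration object that is saved with each run. The Python sketch below shows one such pattern; the TrainConfig fields and their default values are hypothetical.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Every hyperparameter is explicit, so no library default is relied on silently."""
    learning_rate: float = 1e-3
    batch_size: int = 32
    epochs: int = 10
    dropout: float = 0.1
    optimizer: str = "adam"
    seed: int = 42

    def to_json(self) -> str:
        # sort_keys gives a stable serialization for hashing and diffing
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, text: str) -> "TrainConfig":
        return cls(**json.loads(text))

    def fingerprint(self) -> str:
        """Short ID that changes whenever any hyperparameter changes."""
        return hashlib.sha256(self.to_json().encode("utf-8")).hexdigest()[:12]


if __name__ == "__main__":
    base = TrainConfig()
    tuned = TrainConfig(learning_rate=3e-4)
    print(base.fingerprint() != tuned.fingerprint())  # True
```

Freezing the dataclass prevents a value from being changed mid-run, and the fingerprint gives each combination a short ID to attach to results.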

4) Framework and library updates

ML frameworks and libraries continuously evolve, often creating compatibility issues. For instance:

- A particular library version used to achieve specific results might no longer be available

- Updates can cause significant changes in output

- Different frameworks process operations differently

One notable example: PyTorch 1.6 and later support mixed-precision training natively (torch.cuda.amp), whereas earlier versions relied on NVIDIA’s separate apex library. Similarly, running identical algorithms with fixed random seeds in PyTorch and in TensorFlow produces different results.
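Pinning exact versions (for example in a requirements file) only helps if a run actually uses them. A small guard like the one below, a sketch built on the standard library’s importlib.metadata, compares installed versions against your pins before training starts. The pins shown are hypothetical placeholders, not recommended versions.

```python
from importlib import metadata
from typing import Dict, Optional, Tuple


def check_pins(pins: Dict[str, str]) -> Dict[str, Tuple[str, Optional[str]]]:
    """Return {package: (pinned, installed)} for every pin that is not satisfied.

    `installed` is None when the package is missing entirely.
    """
    mismatches = {}
    for name, pinned in pins.items():
        try:
            installed: Optional[str] = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None
        if installed != pinned:
            mismatches[name] = (pinned, installed)
    return mismatches


if __name__ == "__main__":
    # Hypothetical pins; in practice, read them from your lock file.
    problems = check_pins({"numpy": "1.26.4", "torch": "2.3.1"})
    for pkg, (want, have) in problems.items():
        print(f"{pkg}: pinned {want}, found {have}")
```

Exact string comparison is deliberately strict: a local build tag such as 2.3.1+cu121 counts as a mismatch, which may or may not be what you want.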

5) Hardware and floating-point variations

Hardware differences represent a final major challenge for reproducibility. Various studies demonstrate that:

- Different GPUs or CPUs can produce different computational outcomes

- Floating-point variations occur due to hardware settings, software settings, or compilers

- Changes in GPU architectures make exact reproduction extremely difficult

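For instance, deep learning frameworks rely on CUDA and cuDNN for GPU execution, and these libraries often trade determinism for speed: they may select different algorithms (primitives) from run to run, adjust floating-point precision, and accumulate results with parallel operations, such as atomic additions, whose order can vary between runs and change the rounding of matrix operations.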

To mitigate these issues, some researchers resort to CPU-only computations, which reduces uncertainty but sacrifices computational efficiency.
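The root cause is that floating-point addition is not associative, so any change in the order of a reduction (more threads, a different kernel, a different library version) can change the result. This standard-library Python snippet illustrates the effect on the CPU. math.fsum, shown as one possible mitigation, returns a correctly rounded sum that does not depend on order, though it is far slower than a parallel GPU reduction.

```python
import math


def sequential_sum(values) -> float:
    """Add values strictly left to right, like a single-threaded loop.

    A plain loop is used instead of sum(), which compensates for rounding
    error on floats in Python 3.12+.
    """
    total = 0.0
    for v in values:
        total += v
    return total


values = [0.1, 0.2, 0.3]
forward = sequential_sum(values)             # 0.6000000000000001
backward = sequential_sum(reversed(values))  # 0.6

print(forward == backward)                               # False: order changed the result
print(math.fsum(values) == math.fsum(reversed(values)))  # True: order-independent
```

On a GPU the "order" is decided by how work is split across threads, which is why the same code can give slightly different numbers on different hardware.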

To keep things light, here are a few bite-sized nuggets about making ML results repeatable:

Origin of the “Crisis” Term in ML: The phrase “reproducibility crisis” in ML gained momentum after surveys showed most researchers struggled to reproduce published results, pushing conferences to demand more transparency.

Reproducibility ≠ Replicability: Reproducibility means same data + same code + same setup → same results; replicability means new data + similar methods → similar conclusions.

Seeds Aren’t Magic: Fixing random seeds helps, but non-deterministic GPU ops, parallelism, and library changes can still shift results.

Checklists Changed the Game: Leading conferences began adopting reproducibility checklists (e.g., code/data availability, hyperparameters, environment details), making papers easier to verify.

Containers Made It Practical: Tools like Docker and Conda let teams “freeze” environments so the same code runs on the same software stack across different machines.

Top Tools and Platforms for Reproducibility in Machine Learning

As the machine learning field matures, a robust ecosystem of tools has emerged to tackle reproducibility challenges. These platforms offer specialized features to help researchers and practitioners create truly repeatable ML experiments. Let’s explore the most effective tools available in 2025.

1. DVC (Data Version Control)

DVC functions like Git but specifically for machine learning datasets and experiments. It enables version control for large files without storing them directly in Git repositories. Key capabilities include:

- Version tracking for datasets, making it possible to roll back to previous data states

- Git-like commands (such as dvc add, dvc push, and dvc checkout) that work alongside Git commits and branches for managing ML experiments

- Integration with various cloud storage solutions for efficient data sharing

DVC is particularly valuable for teams with strong software engineering backgrounds who need robust data versioning.

2. MLflow

MLflow stands out as an open-source platform addressing the entire machine learning lifecycle. It provides comprehensive tracking capabilities through four key components:

- Tracking: Records parameters, code versions, metrics, and artifacts during ML processes

- Model Registry: Manages different model versions and their deployment states

- Projects: Standardizes ML code packaging for reproducibility

- Models: Packages models in a standard format that can be deployed to different serving platforms

MLflow’s unified platform approach makes it ideal for organizations seeking end-to-end reproducibility solutions.

3. neptune.ai

Neptune.ai focuses strongly on collaboration and scalability for experiment tracking. Its forking feature allows researchers to resume experiments from saved checkpoints without waiting for one experiment to finish before starting another. Neptune excels at:

- Logging diverse metadata including source code, Jupyter notebook snapshots, and Git information

- Tracking large-scale experiments (over 100,000 runs with millions of data points)

- Creating customizable dashboards for visualizing results

This platform offers superior visualization capabilities while remaining accessible to non-technical collaborators.

4. WandB (Weights & Biases)

WandB requires minimal setup—just five lines of code to begin tracking experiments. It provides:

- Automatic logging of gradients and model parameters

- Built-in visualization tools for comparing experiments

- Integration with major frameworks including PyTorch, TensorFlow, and scikit-learn

WandB’s lightweight integration makes it particularly appealing for those seeking quick implementation without sacrificing functionality.

5. Comet.ml

Comet.ml offers comprehensive experiment management with particular attention to model optimization. It excels at:

- Hyperparameter tuning and optimization tracking

- Automatic dataset versioning and lineage tracking

- Custom visualization tools for domain-specific analysis

Comet’s automatic logging captures hyperparameters, metrics, code, and system performance without additional configuration.

6. TensorFlow Extended (TFX)

TFX provides an end-to-end platform specifically designed for production ML pipelines. Its components include:

- TensorFlow Data Validation for analyzing and validating data

- TensorFlow Transform for consistent preprocessing

- ML Metadata for tracking provenance of artifacts

TFX ensures consistency across model lifecycle stages, from development to deployment.

7. Kubeflow

Kubeflow enables machine learning workflows on Kubernetes, making deployments portable and scalable. It offers:

- Notebook-based environments for development

- Pipeline tools for creating repeatable workflows

- Multi-user isolation and access management

These features make Kubeflow excellent for organizations needing enterprise-grade reproducibility on cloud infrastructure.

8. Amazon SageMaker

SageMaker provides managed MLOps tools with strong reproducibility features:

- Integration with MLflow for experiment tracking

- Random seed control for reproducible model tuning

- Model Registry for versioning and metadata management

SageMaker automates standardized processes across the ML lifecycle while maintaining consistent model performance in production.

Best Practices to Improve Reproducibility in ML Projects

Implementing reproducible machine learning practices requires a systematic approach that addresses both the technical and the collaborative aspects of your workflow.

1) Set random seeds and control randomness

Machine learning models contain multiple sources of randomness that can significantly affect results. To control this:

- Fix random seeds across all frameworks (Python, NumPy, PyTorch, TensorFlow) before training

- Evaluate models with multiple random seeds to understand performance variance

- When using PyTorch with CUDA, set torch.backends.cudnn.deterministic = True and torch.backends.cudnn.benchmark = False so that cuDNN uses the same algorithms on every run
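The seeding steps above are often wrapped in one helper called at the start of every run. The version below is a sketch that assumes NumPy and PyTorch may or may not be installed and skips whichever is missing; the name seed_everything is illustrative (PyTorch Lightning ships a similar helper), and TensorFlow users would add tf.random.set_seed in the same way.

```python
import os
import random


def seed_everything(seed: int, deterministic: bool = True) -> None:
    """Seed Python, NumPy, and PyTorch RNGs; optionally force deterministic kernels."""
    random.seed(seed)
    # Only affects Python processes started after this call; the hash seed of the
    # current interpreter is fixed at startup.
    os.environ["PYTHONHASHSEED"] = str(seed)

    try:
        import numpy as np
        np.random.seed(seed)  # legacy global RNG; prefer np.random.default_rng(seed) in new code
    except ImportError:
        pass

    try:
        import torch
    except ImportError:
        return
    torch.manual_seed(seed)  # seeds the CPU and all CUDA devices
    if deterministic:
        # Needed by cuBLAS for deterministic results on CUDA 10.2+;
        # must be set before the first cuBLAS call to take effect.
        os.environ.setdefault("CUBLAS_WORKSPACE_CONFIG", ":4096:8")
        torch.backends.cudnn.deterministic = True
        torch.backends.cudnn.benchmark = False
        # Raises an error if an op without a deterministic implementation is used.
        torch.use_deterministic_algorithms(True)
```

Calling seed_everything(42) once, before building the model and data loaders, covers the main generators; data-loading worker processes may still need their own seeding.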

2) Use model and data versioning tools

Version control extends beyond code to include datasets and models:

DVC offers Git-like functionality specifically for datasets, enabling snapshot creation without duplicating entire files. This practice transforms debugging that “took weeks” into something that “now takes hours”.

3) Track metadata and artifacts

Consistently document experiment details by:

- Recording parameters, metrics, and environment configurations with each run

- Storing artifacts (model weights, preprocessors) alongside experiment data

- Logging input data characteristics, including dimensions and sample counts
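A minimal sketch of the last two points, assuming a plain directory per run: the model artifact is written next to a metadata file that records its SHA-256 checksum and basic characteristics of the training data. The names save_artifact and describe_data, and the metadata layout, are hypothetical; tracking tools store equivalent information in their own formats.

```python
import hashlib
import json
from pathlib import Path
from typing import Any, Dict, Sequence


def describe_data(rows: Sequence[Sequence[Any]]) -> Dict[str, Any]:
    """Record sample count, feature count, and whether rows have uneven lengths."""
    n_features = len(rows[0]) if rows else 0
    return {
        "n_samples": len(rows),
        "n_features": n_features,
        "ragged": any(len(r) != n_features for r in rows),
    }


def save_artifact(run_dir: str, name: str, payload: bytes,
                  data_rows: Sequence[Sequence[Any]]) -> Dict[str, Any]:
    """Write an artifact plus metadata (checksum, data summary) into run_dir."""
    out = Path(run_dir)
    out.mkdir(parents=True, exist_ok=True)
    (out / name).write_bytes(payload)
    meta = {
        "artifact": name,
        "sha256": hashlib.sha256(payload).hexdigest(),  # detects silent changes later
        "data": describe_data(data_rows),
    }
    (out / "metadata.json").write_text(json.dumps(meta, indent=2, sort_keys=True))
    return meta
```

Re-hashing the artifact later and comparing it with the stored checksum confirms you are evaluating the exact weights that produced the logged metrics.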

4) Collaborate using shared platforms

MLflow and similar tools enable efficient teamwork by:

- Creating shared workspaces where multiple contributors access the same experiments

- Establishing consistent user access management across projects

5) Avoid non-deterministic algorithms

Certain operations introduce unpredictability:

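- Batch-size variations during inference are a common source of nondeterminism, because some kernels change their reduction order depending on batch size; making every kernel “batch-invariant” removes this effect

- In critical applications requiring consistency, choose simpler deterministic systems over complex non-deterministic ones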
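The batch-size effect can be reproduced in miniature. In the Python sketch below, a hypothetical row-sum “kernel” splits each row into more chunks when the batch is small (a typical way to keep more parallel workers busy), so the same row can produce a different result depending on batch size. A batch-invariant variant fixes the chunk size regardless of batch size. The heuristic and the values are illustrative, not taken from any real library.

```python
from typing import List


def reduce_row(row: List[float], chunk: int) -> float:
    """Sum each chunk left to right, then sum the partial results."""
    partials = []
    for start in range(0, len(row), chunk):
        partial = 0.0
        for v in row[start:start + chunk]:
            partial += v
        partials.append(partial)
    total = 0.0
    for p in partials:
        total += p
    return total


def adaptive_kernel(batch: List[List[float]]) -> List[float]:
    # Hypothetical heuristic: split rows more finely when the batch is small.
    chunk = 2 if len(batch) < 4 else len(batch[0])
    return [reduce_row(row, chunk) for row in batch]


def batch_invariant_kernel(batch: List[List[float]], chunk: int = 2) -> List[float]:
    # Same reduction order for every row, whatever the batch size.
    return [reduce_row(row, chunk) for row in batch]


row = [1.0, 1.0, 1e17, -1e17]
print(adaptive_kernel([row])[0], adaptive_kernel([row] * 4)[0])                # 2.0 0.0
print(batch_invariant_kernel([row])[0], batch_invariant_kernel([row] * 4)[0])  # 2.0 2.0
```

Real batch-invariant kernels are harder to write because they must keep a fixed reduction order while staying fast, but the principle is the same.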

Get hands-on with reproducible machine learning workflows through HCL GUVI’s Artificial Intelligence & Machine Learning Course—co-designed with Intel and IITM Pravartak—where you’ll learn modern ML pipelines, version control, containerisation (Docker), and deployment best-practices in an industry-ready, language-inclusive format.

Concluding Thoughts…

Reproducibility stands as a cornerstone of trustworthy machine learning development. Throughout this guide, you’ve seen how achieving consistent results requires attention to multiple factors, from code versioning to environment management. Additionally, understanding challenges like randomness and framework inconsistencies helps you anticipate potential pitfalls before they occur.

As you embark on your machine learning journey, consider reproducibility not as an afterthought but as a fundamental aspect of your workflow from day one. Start with simple practices like version control and gradually incorporate more sophisticated tools as your projects grow. Ultimately, the time invested in reproducibility pays dividends through reliable models and credible results – essential qualities for any successful machine learning project.

FAQs

Q1. What is reproducibility in machine learning?

Reproducibility in machine learning refers to the ability to consistently achieve the same or similar results when repeatedly running algorithms on specific datasets. It encompasses the entire ML pipeline, from data processing to model design, evaluation, and deployment.

Q2. Why is reproducibility important in machine learning research?

Reproducibility is crucial in ML research as it allows for verification of claims, error detection, resource efficiency, and maintaining scientific integrity. It helps validate findings, identify mistakes, and build upon established knowledge rather than potentially flawed research.

Q3. What are the core components of reproducible machine learning?

The core components of reproducible machine learning include code versioning and tracking, dataset consistency and versioning, and environment and dependency management. These elements work together to ensure that experiments can be reliably recreated regardless of who runs them or where they’re deployed.

Q4. What are some common challenges in achieving reproducibility in ML projects?

Common challenges in achieving reproducibility include lack of experiment tracking, randomness in training processes, hyperparameter inconsistencies, framework and library updates, and hardware and floating-point variations. These factors can significantly impact model performance and make exact reproduction difficult.

Q5. What are some best practices to improve reproducibility in ML projects?

Some best practices to improve reproducibility include setting random seeds and controlling randomness, using model and data versioning tools, tracking metadata and artifacts, collaborating using shared platforms, and avoiding non-deterministic algorithms when possible. Implementing these practices can lead to more consistent and trustworthy results.
