What is an Artificial Neural Network? The Simple Guide You Need (2025)

Last Updated: Oct 24, 2025 · 7 Min Read

Artificial neural networks (ANNs) are machine learning models loosely inspired by how the human brain processes information. You’ve likely encountered their capabilities without realizing it, whether through face recognition on your smartphone, product recommendations, or virtual assistants responding to your voice commands.

ANNs serve as the foundation for applications across many industries. They perform particularly well in image and character recognition because they can combine many inputs and model complex, non-linear relationships. Deep neural networks, which stack many hidden layers, form the core of what we now call “deep learning,” driving transformative advances in computer vision, speech recognition, and natural language processing.

In this thorough guide, you’ll discover what an artificial neural network is, how it functions, the different types available, and practical examples of ANN applications that impact your daily life. Let’s begin!

Table of contents

- What is an Artificial Neural Network (ANN)?

- How ANN mimics the human brain

- Why ANN is important in AI and ML

- How Artificial Neural Networks Work

- 1) Input, hidden, and output layers

- 2) Role of weights and biases

- 3) Activation functions explained simply

- 4) Backpropagation and learning process

- Types of Artificial Neural Networks

- 1) Feedforward Neural Network (FNN)

- 2) Convolutional Neural Network (CNN)

- 3) Recurrent Neural Network (RNN)

- 4) Long Short-Term Memory (LSTM)

- 5) Generative Adversarial Network (GAN)

- Applications of Neural Networks in Real Life

- 1) Image and speech recognition

- 2) Medical diagnosis and healthcare

- 3) Financial forecasting and fraud detection

- 4) Customer service and chatbots

- 5) Self-driving cars and robotics

- Pros and Cons of Using ANN

- Advantages of ANN

- Limitations and challenges

- Concluding Thoughts…

- FAQs

- Q1. What exactly is an Artificial Neural Network?

- Q2. How does an Artificial Neural Network function?

- Q3. What are some real-world applications of Artificial Neural Networks?

- Q4. What are the main advantages of using Artificial Neural Networks?

- Q5. Are there any limitations to Artificial Neural Networks?

What is an Artificial Neural Network (ANN)?

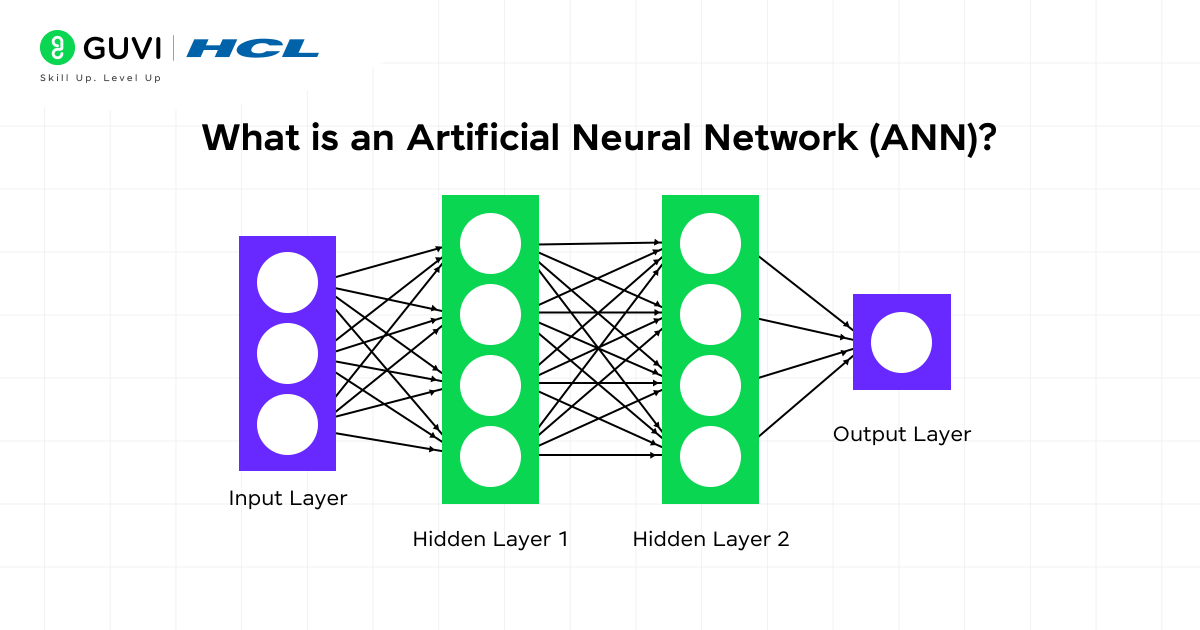

An artificial neural network (ANN) is a computational model that processes information in a way inspired by the human brain’s neural structure. At its core, an ANN consists of interconnected nodes or “neurons” arranged in layers that work together to solve complex problems by learning from data.

Each neural network has three main components:

- Input layer – Receives raw data (like pixels from an image)

- Hidden layers – Where the actual processing happens through mathematical transformations

- Output layer – Produces the final result or prediction

In essence, a neural network is a single model whose many connected neurons jointly learn relationships in a dataset. Such networks excel at tasks that are difficult to solve with conventional programming approaches, such as image recognition, language translation, and decision-making in complex environments.

How ANN mimics the human brain

The human brain contains roughly 86 billion neurons that receive, process, and transmit signals. Similarly, an artificial neural network contains artificial neurons that pass information along weighted connections.

Here’s how the parallel works:

- In your brain, dendrites receive input signals from other neurons

- The cell body processes these signals

- The processed signal travels along the axon to the output terminals

Artificial neural networks follow a loosely analogous pattern. Each artificial neuron:

- Receives weighted inputs (like dendrites)

- Processes them using mathematical functions

- Passes outputs to connected neurons

Furthermore, both systems learn through experience. While your brain strengthens neural connections when you practice skills, ANNs adjust the “weights” between nodes during training to improve accuracy. Though this mimicry isn’t perfect—the brain contains vastly more neurons than most ANNs—this bio-inspired approach has proven remarkably effective.

Why ANN is important in AI and ML

Artificial neural networks have become fundamental to modern artificial intelligence primarily because they can:

- Learn directly from data without requiring explicitly programmed rules

- Recognize complex patterns in non-linear, complicated information

- Adapt to new conditions when retrained or fine-tuned on fresh data

- Scale to massive datasets, especially when trained on parallel hardware such as GPUs

Their ability to identify patterns makes them invaluable for tasks like image classification, speech recognition, and natural language processing. Because you don’t need to specify the form of the underlying function in advance, ANNs can tackle problems where that function is unknown or too complex to define manually.

How Artificial Neural Networks Work

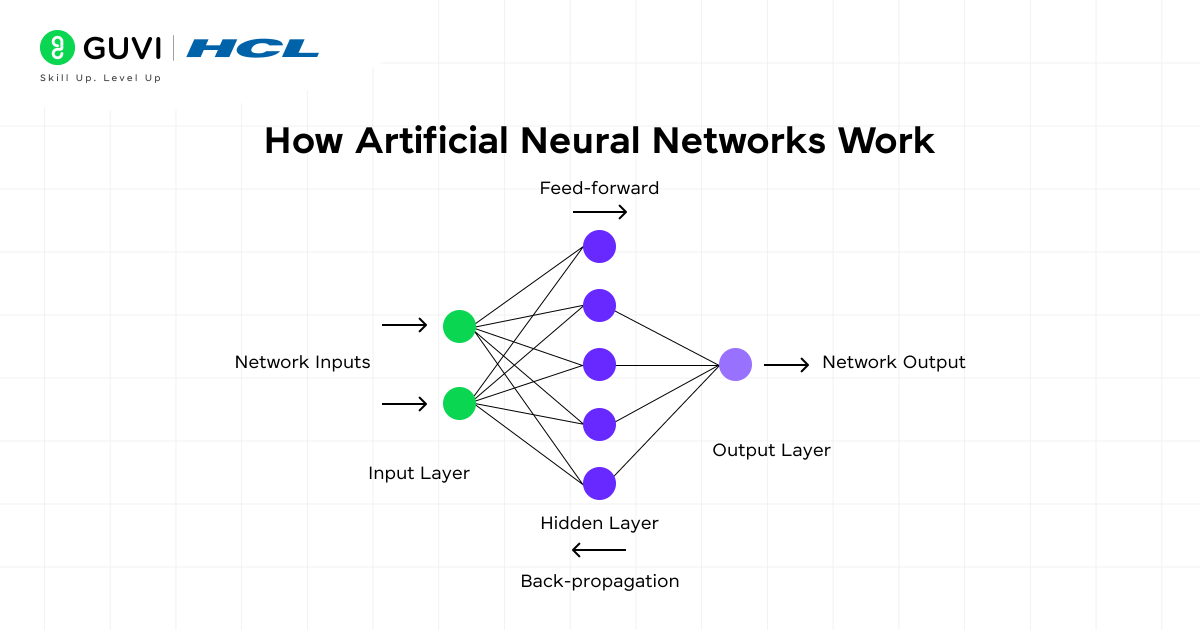

The architecture of an artificial neural network consists of interconnected layers that process information through a series of mathematical operations. Each layer plays a specific role in transforming input data into meaningful predictions, making ANNs remarkably flexible tools for complex problem-solving.

1) Input, hidden, and output layers

Every artificial neural network features three primary types of layers:

- Input layer – Receives raw data from external sources. Each input neuron represents a feature in your dataset (for example, pixel values in image recognition tasks or individual columns in tabular data).

- Hidden layers – Process and transform the data, extracting increasingly abstract features. These layers perform most of the computational work, with more hidden layers enabling the network to learn more complex patterns.

- Output layer – Produces the final prediction or classification result. The number of neurons here depends on your task: typically one for single-value regression or binary classification, and one per class for multi-class classification.

In the simplest networks, these layers are connected in a “feedforward” structure where information flows in one direction, from input through hidden layers to output.
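A quick way to see how layer sizes translate into model size is to count trainable parameters. The sketch below assumes a fully connected feedforward network, where each connection between adjacent layers carries one weight and each hidden or output neuron has one bias (both covered in the next subsection). The 784-128-10 layout is a hypothetical example, such as 28 × 28 pixel images classified into 10 categories.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected feedforward network.

    layer_sizes lists the number of neurons per layer, input layer first,
    e.g. [784, 128, 10]. Assumes every neuron connects to every neuron
    in the next layer and every non-input neuron has one bias.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        weights = n_in * n_out  # one weight per connection between the two layers
        biases = n_out          # one bias per neuron in the receiving layer
        total += weights + biases
    return total


# Hypothetical network: 784 inputs, one hidden layer of 128 neurons, 10 outputs.
print(count_parameters([784, 128, 10]))  # 101770
```

Most of those parameters sit between the input and hidden layer (784 × 128 weights), which is why wide inputs such as raw images quickly make fully connected networks large.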

2) Role of weights and biases

Weights and biases are the trainable parameters that enable neural networks to learn:

- Weights determine how strongly neurons influence each other. Each connection between neurons has its own weight, typically initialized to a small random value, that scales the signal passing along it. Think of weights as importance indicators: larger weights amplify a signal, weights near zero diminish it, and negative weights invert it.

- Biases shift the point at which a neuron activates. Each neuron has its own bias term added to the weighted sum of its inputs, so it can activate (or stay inactive) even when the weighted inputs alone would not push it there. Without a bias, a neuron’s weighted sum would always be zero whenever all of its inputs are zero.

The formula for a neuron’s output can be expressed as: Output = Activation Function(∑(Inputs × Weights) + Bias)
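Here is that formula as a minimal Python sketch. The weights, biases, and inputs are made-up numbers, and sigmoid is assumed as the activation function purely for illustration. A layer is simply several such neurons reading the same inputs, each with its own weights and bias.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias, activation=sigmoid):
    # Output = Activation(sum(inputs * weights) + bias)
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(weighted_sum)


def dense_layer(inputs, weight_rows, biases, activation=sigmoid):
    # One output per neuron; every neuron sees the same inputs.
    return [neuron(inputs, w, b, activation) for w, b in zip(weight_rows, biases)]


x = [1.0, 2.0]
# 1.0*0.5 + 2.0*(-0.25) + 0.0 = 0.0, and sigmoid(0.0) = 0.5
print(neuron(x, [0.5, -0.25], 0.0))                              # 0.5
# Second neuron: 1.0*1.0 + 2.0*1.0 - 3.0 = 0.0 -> 0.5
print(dense_layer(x, [[0.5, -0.25], [1.0, 1.0]], [0.0, -3.0]))  # [0.5, 0.5]
```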

3) Activation functions explained simply

Activation functions introduce non-linearity, enabling networks to learn complex patterns that simple linear operations cannot capture. Without them, any stack of layers collapses into a single linear transformation, so even a deep network could only model linear relationships (and, for classification, only linearly separable problems).
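The collapse is easy to check numerically. This sketch, with hypothetical weights, passes an input through two layers that have no activation function, then builds one equivalent layer with weights W = W2·W1 and bias b = W2·b1 + b2. Both give the same output, so the extra layer adds no expressive power.

```python
def matvec(W, x):
    # Matrix-vector product: one weighted sum per row of W.
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def matmul(A, B):
    # (A @ B)[i][j] = sum_k A[i][k] * B[k][j]
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def add(u, v):
    return [a + b for a, b in zip(u, v)]


# Hypothetical weights for two stacked layers with NO activation function.
W1 = [[1.0, 2.0], [0.0, -1.0], [3.0, 1.0]]  # 2 inputs -> 3 hidden neurons
b1 = [0.5, 0.0, -1.0]
W2 = [[1.0, -1.0, 2.0]]                     # 3 hidden neurons -> 1 output
b2 = [0.25]

x = [2.0, -1.0]
two_layers = add(matvec(W2, add(matvec(W1, x), b1)), b2)

# The same mapping as a single linear layer.
W = matmul(W2, W1)
b = add(matvec(W2, b1), b2)
one_layer = add(matvec(W, x), b)

print(two_layers, one_layer)  # [7.75] [7.75]
```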

Common activation functions include:

- ReLU (Rectified Linear Unit) – Returns the input if positive, zero otherwise. Popular for hidden layers because it is cheap to compute and helps mitigate the vanishing gradient problem (its gradient is 1 for positive inputs).

- Sigmoid – Outputs values between 0 and 1. Commonly used in the output layer for binary classification.

- Tanh – Outputs values between -1 and 1. Similar in shape to sigmoid but centered on zero.

- Softmax – Used in output layers for multi-class classification, converting raw scores into probabilities that sum to 1.
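For reference, here are minimal plain-Python versions of these four functions (a sketch; libraries such as NumPy or PyTorch provide vectorized equivalents). The softmax subtracts the largest score before exponentiating, a common trick that avoids overflow without changing the result.

```python
import math


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z):
    return math.tanh(z)


def softmax(scores):
    # Shifting every score by the max leaves the ratios (and thus the result) unchanged.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


print(relu(-2.0), relu(3.0))    # 0.0 3.0
print(sigmoid(0.0), tanh(0.0))  # 0.5 0.0
probs = softmax([2.0, 1.0, 0.1])
print(probs)                    # approximately [0.659, 0.242, 0.099]
```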

4) Backpropagation and learning process

Neural networks are typically trained with backpropagation, an algorithm that works out how much each weight contributed to the prediction error, combined with an optimizer that adjusts the weights to reduce that error:

- Forward pass – Data enters the input layer and moves through the network, with each neuron calculating its weighted sum and applying an activation function before passing results to the next layer.

- Error calculation – After producing an output, the network compares its prediction with the actual target value and measures the error with a loss function.

- Backward pass – The error is propagated backwards through the network. Using the chain rule from calculus, the algorithm calculates how much each weight contributed to the error.

- Weight adjustment – The weights and biases are updated to reduce the error, typically using optimization algorithms like gradient descent.

This process repeats with many examples, allowing the network to gradually improve its accuracy. The learning rate—a hyperparameter that defines the size of corrective steps—influences how quickly the network adapts to errors.

Through repeated training cycles, artificial neural networks gradually fine-tune their internal parameters, enabling them to recognize complex patterns and make increasingly accurate predictions.
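The four steps above can be sketched end to end on a toy problem. This is a minimal, illustrative implementation in plain Python, not how production frameworks work (they compute gradients automatically). It assumes a network with 2 inputs, one hidden layer of 4 sigmoid neurons, and 1 sigmoid output, trained with squared-error loss and full-batch gradient descent on the XOR problem. The layer size, learning rate, epoch count, and seed are arbitrary choices.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(data, hidden=4, lr=0.5, epochs=5000, seed=0):
    """Train a 2-input, one-hidden-layer, 1-output network with backpropagation.

    Returns the total loss recorded at each epoch (before that epoch's update).
    """
    rng = random.Random(seed)
    # Weights start as small random values; biases start at zero.
    W1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    W2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0
    losses = []
    for _ in range(epochs):
        gW1 = [[0.0, 0.0] for _ in range(hidden)]
        gb1 = [0.0] * hidden
        gW2 = [0.0] * hidden
        gb2 = 0.0
        loss = 0.0
        for x, target in data:
            # 1) Forward pass
            h = [sigmoid(W1[j][0] * x[0] + W1[j][1] * x[1] + b1[j]) for j in range(hidden)]
            y = sigmoid(sum(W2[j] * h[j] for j in range(hidden)) + b2)
            # 2) Error calculation: squared error
            loss += 0.5 * (y - target) ** 2
            # 3) Backward pass via the chain rule, output layer first:
            #    dL/dz_out = dL/dy * dy/dz_out = (y - target) * y * (1 - y)
            delta_out = (y - target) * y * (1 - y)
            gb2 += delta_out
            for j in range(hidden):
                gW2[j] += delta_out * h[j]
                # Error flows back through W2[j], then through the hidden sigmoid.
                delta_h = delta_out * W2[j] * h[j] * (1 - h[j])
                gb1[j] += delta_h
                gW1[j][0] += delta_h * x[0]
                gW1[j][1] += delta_h * x[1]
        losses.append(loss)
        # 4) Weight adjustment: one gradient descent step scaled by the learning rate
        for j in range(hidden):
            W2[j] -= lr * gW2[j]
            b1[j] -= lr * gb1[j]
            W1[j][0] -= lr * gW1[j][0]
            W1[j][1] -= lr * gW1[j][1]
        b2 -= lr * gb2
    return losses


xor_data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]
losses = train(xor_data)
print(f"loss at start: {losses[0]:.4f}, loss at end: {losses[-1]:.4f}")
```

The loss should fall over training. Whether a network this small fully solves XOR depends on its random starting weights, so trying a different `seed` or more hidden neurons can help.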

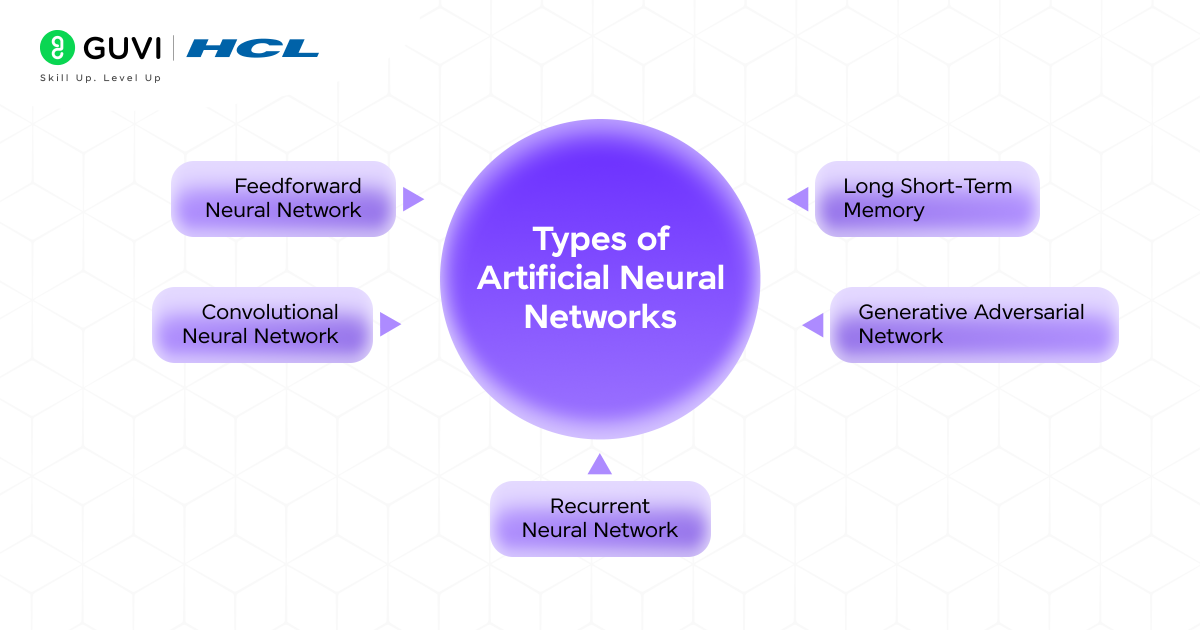

Types of Artificial Neural Networks

Neural networks come in various architectures, each designed to excel at specific tasks. Let’s explore the five main types of artificial neural networks that form the foundation of today’s AI applications.

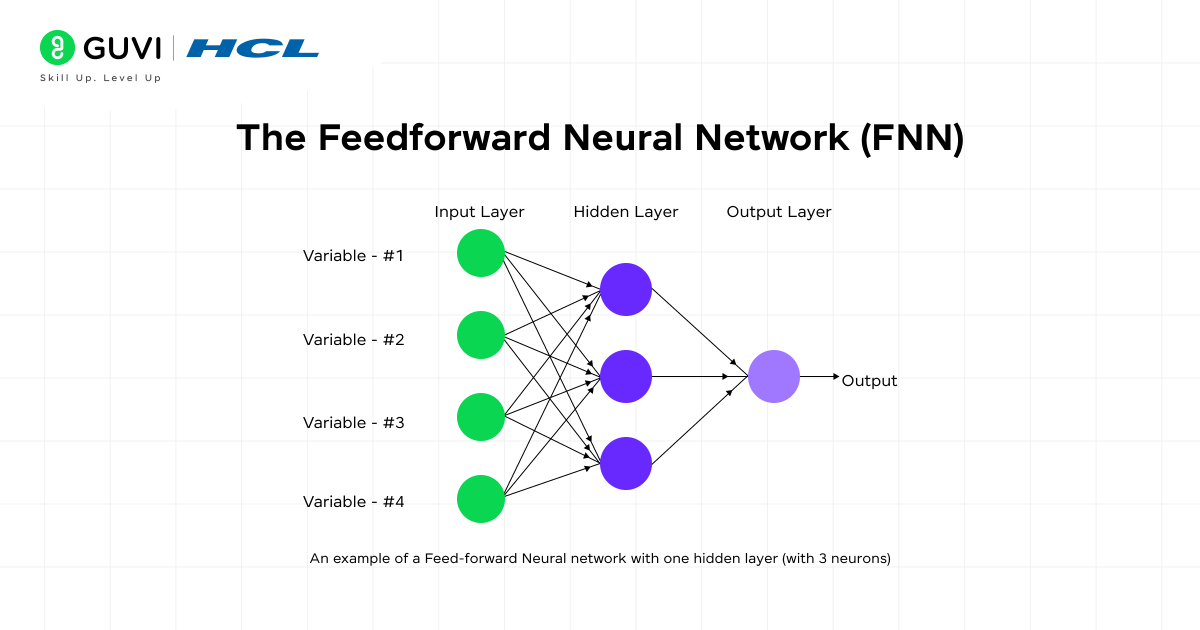

1) Feedforward Neural Network (FNN)

Feedforward neural networks represent the simplest type of ANN architecture where information flows in one direction only—from input through hidden layers to output, without any cycles or loops. As the first type of artificial neural network invented, FNNs serve as the building blocks for more complex designs.

Key characteristics:

- Information always moves forward, never backwards

- In the common fully connected form (often called a multilayer perceptron), each neuron in one layer connects to every neuron in the next layer

- Primarily used for pattern recognition and classification tasks

FNNs excel at general-purpose tasks like classification and regression, especially when working with static data that has no sequential dependencies. They typically use backpropagation during training to reduce prediction errors.

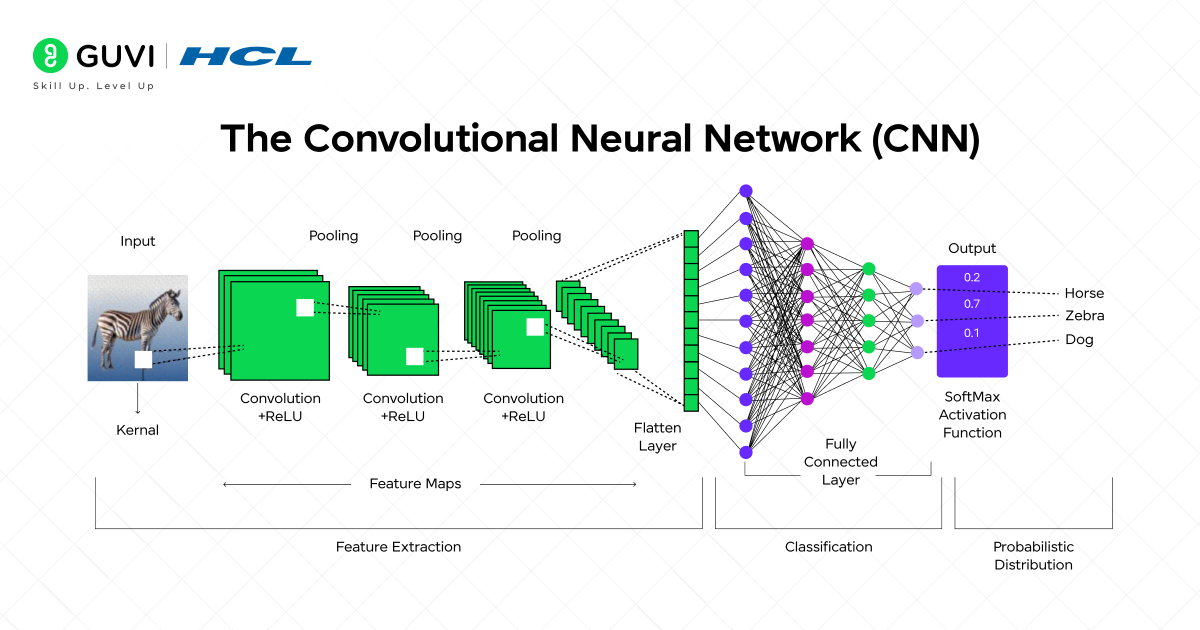

2) Convolutional Neural Network (CNN)

CNNs are specialized for processing grid-like data such as images. Inspired by the organization of the animal visual cortex, these networks use convolutional layers to filter inputs and extract meaningful features.

The architecture consists of:

- Convolutional layers: Apply filters to detect patterns and features

- Pooling layers: Reduce dimensions while maintaining important information

- Fully connected layers: Classify based on extracted features

As a result, CNNs have demonstrated strong performance in image classification, object detection, and medical imaging analysis. Because the same filters are applied across the whole image, they can recognize visual patterns largely regardless of position, which makes them ideal for computer vision tasks.
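The two core operations can be sketched in plain Python. This illustrative version assumes a single-channel image, stride 1, and no padding. The 2×2 kernel is hand-picked to respond to dark-to-bright vertical edges, whereas a real CNN learns its kernel values during training. Like most deep learning libraries, it computes the “convolution” as a cross-correlation (the kernel is not flipped).

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (stride 1, no padding), computed as cross-correlation.

    Slides the kernel over the image and sums element-wise products at each position.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
         for c in range(out_w)]
        for r in range(out_h)
    ]


def max_pool2d(fmap, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size window."""
    return [
        [max(fmap[r + i][c + j] for i in range(size) for j in range(size))
         for c in range(0, len(fmap[0]) - size + 1, size)]
        for r in range(0, len(fmap) - size + 1, size)
    ]


# A 5x5 "image" whose brightness jumps from 0 to 1 between columns 1 and 2.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-picked 2x2 filter that responds to dark-to-bright vertical edges.
kernel = [[-1, 1],
          [-1, 1]]

feature_map = conv2d(image, kernel)
print(feature_map)              # [[0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0]]
print(max_pool2d(feature_map))  # [[2, 0], [2, 0]]
```

The edge shows up as a column of 2s in the feature map, and pooling halves each dimension while keeping that strong response.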

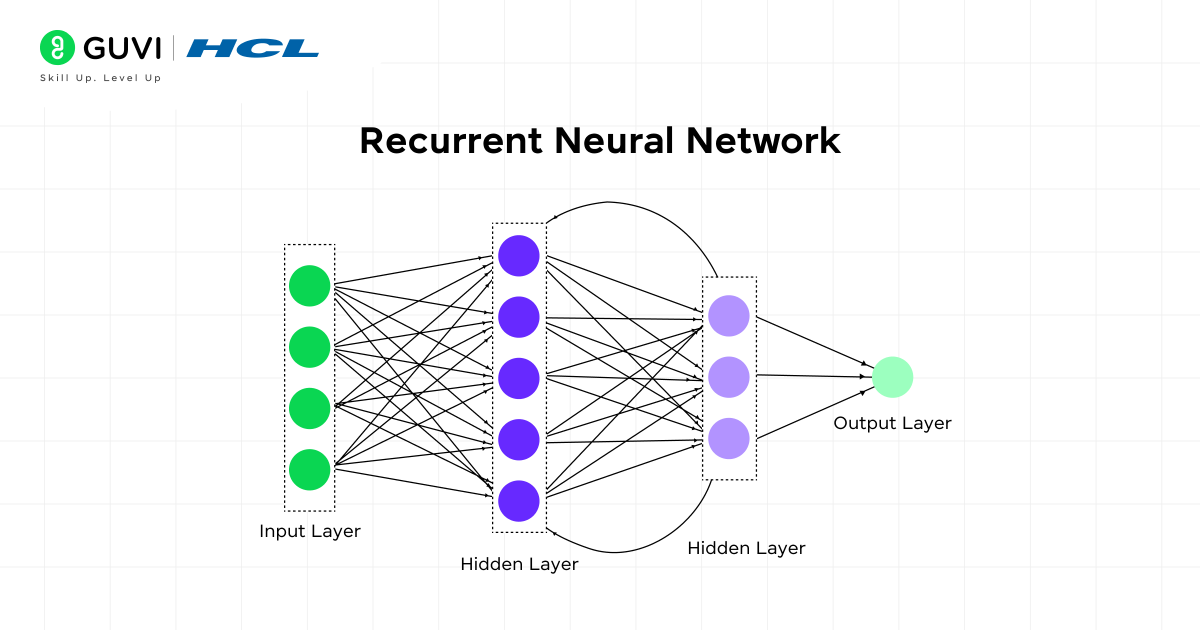

3) Recurrent Neural Network (RNN)

Unlike feedforward networks, RNNs incorporate feedback loops that allow information to persist. This creates an internal “memory” that helps process sequential data where order matters.

RNNs are characterized by:

- Recurrent connections enabling feedback loops

- A hidden state that acts as memory

- Connections that form a directed graph along a temporal sequence

These networks excel at tasks involving sequential data, such as text processing, speech recognition, and time series prediction. Nevertheless, traditional RNNs struggle with learning long-term dependencies due to the vanishing gradient problem.
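A minimal sketch of the recurrence, assuming a single-unit RNN with hand-picked scalar weights (`w_x`, `w_h`, and `b` are illustrative, not learned). The hidden state `h` is fed back in at every step, so the same inputs in a different order produce a different final state.

```python
import math


def rnn_forward(sequence, w_x=1.0, w_h=0.5, b=0.0, h0=0.0):
    """Run a one-unit (scalar) vanilla RNN over a sequence.

    Each new hidden state mixes the current input with the previous state:
    h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)
    """
    h = h0
    states = []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)  # the same weights are reused at every step
        states.append(h)
    return states


print(rnn_forward([1.0, 0.0, 0.0]))  # approximately [0.762, 0.363, 0.180]
print(rnn_forward([0.0, 0.0, 1.0]))  # approximately [0.0, 0.0, 0.762]
```

Notice how the effect of the first input shrinks at each step. That fading is a forward-pass view of why long-range dependencies are hard for plain RNNs; the vanishing gradient problem is the corresponding shrinkage of gradients as they are propagated back through many time steps during training.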

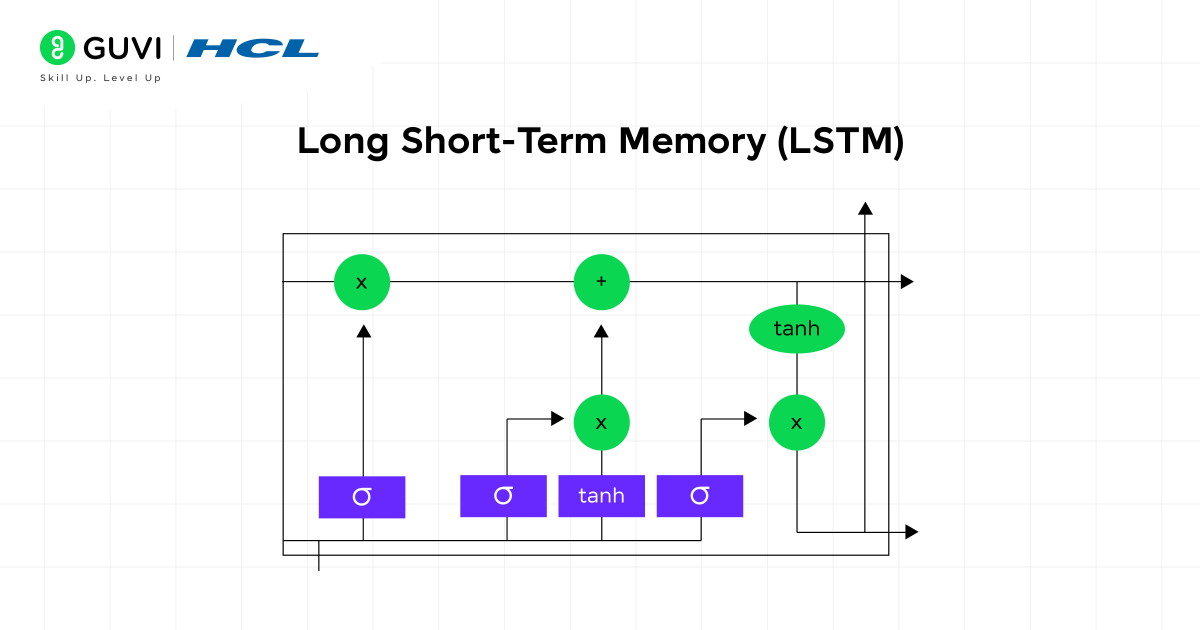

4) Long Short-Term Memory (LSTM)

LSTM networks are a specialized type of RNN designed to overcome the vanishing gradient problem. Created to provide short-term memory that can last thousands of timesteps, LSTMs feature a unique architecture with memory cells and gates.

Each LSTM unit contains:

- A cell that remembers values over arbitrary time intervals

- An input gate that controls new information flow

- A forget gate that decides what information to discard

- An output gate that determines what to output

Essentially, this structure allows LSTMs to selectively remember or forget information, making them particularly effective for tasks requiring long-term memory like language modeling and translation.
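The gating logic can be sketched for a single LSTM unit with scalar values. The update follows the standard LSTM cell (forget, input, and output gates plus a candidate value); the parameter names in `p` and the toy values are hypothetical. With a high forget-gate bias the stored value survives 90 steps almost intact, while a low forget-gate bias erases it almost immediately.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One time step of a single-unit (scalar) LSTM cell. p maps names to weights."""
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate: how much old memory to keep
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate: how much new info to admit
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate value to write into memory
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate: how much memory to expose
    c = f * c_prev + i * g   # cell state: gated mix of old memory and new candidate
    h = o * math.tanh(c)     # hidden state passed to the next time step
    return h, c


def make_params(forget_bias):
    # Hypothetical toy parameters: every weight and bias is zero except the
    # forget-gate bias. With g = tanh(0) = 0, nothing new is written, so the
    # forget gate alone decides what happens to the stored value.
    names = ["wf", "uf", "bf", "wi", "ui", "bi", "wg", "ug", "bg", "wo", "uo", "bo"]
    p = {name: 0.0 for name in names}
    p["bf"] = forget_bias
    return p


final_c = {}
for label, forget_bias in [("remember", 10.0), ("forget", -10.0)]:
    p = make_params(forget_bias)
    h, c = 0.0, 0.8                  # start with the value 0.8 stored in the cell
    for x in [0.3, -1.2, 0.5] * 30:  # 90 time steps of arbitrary input
        h, c = lstm_step(x, h, c, p)
    final_c[label] = c

print(final_c)  # "remember" stays near 0.8 (about 0.797); "forget" drops to almost 0
```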

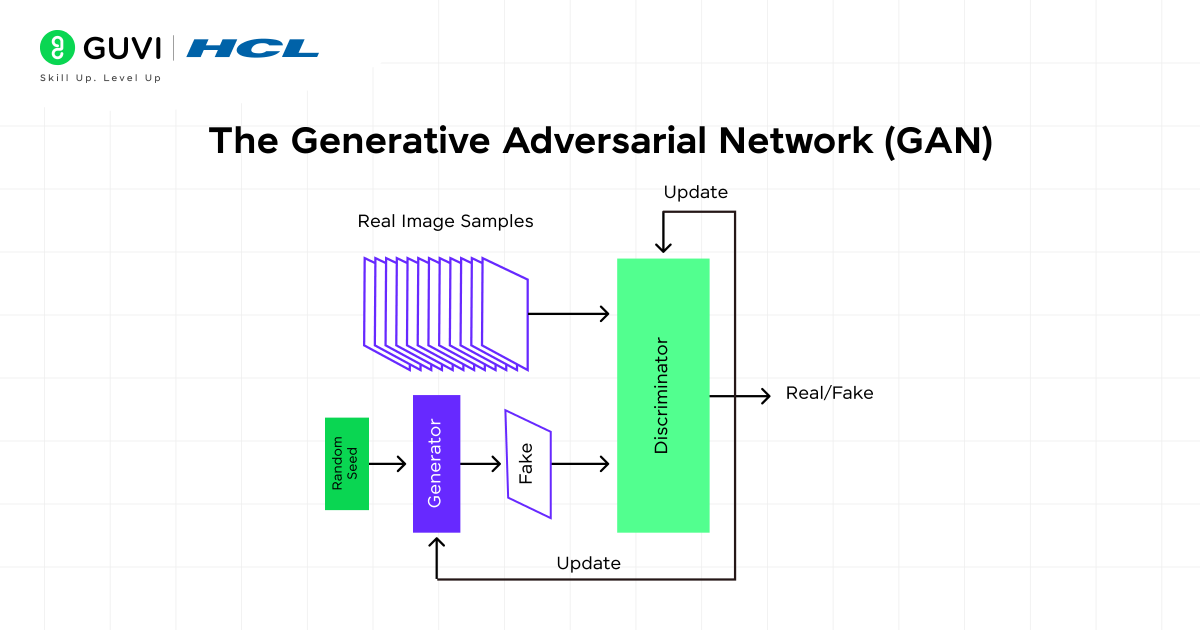

5) Generative Adversarial Network (GAN)

GANs represent an innovative approach where two neural networks—a generator and a discriminator—compete against each other in a game-like scenario. Introduced in 2014, these networks learn to generate new data with the same characteristics as the training set.

In this adversarial process:

- The generator creates fake data (like images)

- The discriminator evaluates authenticity

- Both networks improve through competition
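Formally, the original 2014 GAN paper frames this competition as a two-player minimax game over a value function $V(D, G)$. Here $D(x)$ is the discriminator’s estimated probability that $x$ is real, $G(z)$ is a sample the generator produces from random noise $z$, $p_{\text{data}}$ is the distribution of the training data, and $p_z$ is the noise distribution:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator pushes $V$ up by scoring real samples near 1 and generated ones near 0; the generator pushes it down by producing samples that $D$ scores as real.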

GANs have found applications in generating photorealistic images, style transfer, and enhancing low-resolution images. Notably, they can also be used for unsupervised video retargeting and facial feature alteration.

Applications of Neural Networks in Real Life

From shopping recommendations to voice assistants, neural networks have moved from research labs into our everyday lives. These powerful tools now shape multiple industries with their pattern recognition and prediction capabilities.

1) Image and speech recognition

- Neural networks excel at identifying patterns in visual and audio data. In image processing, convolutional neural networks (CNNs) automatically identify objects in photos and recognize handwriting. They work by analyzing local patterns in images to extract meaningful features, somewhat like how your visual cortex processes what you see.

- For speech and language tasks, recurrent neural networks (RNNs) and their variants have powered speech recognition and machine translation services such as Google Translate. These systems can distinguish subtle speech patterns across different languages, making cross-language communication far easier. Additionally, voice recognition systems in healthcare use natural language processing to capture and track patient information.

2) Medical diagnosis and healthcare

- The healthcare industry has embraced artificial neural networks for numerous critical applications. For cancer detection, applications like SkinVision use neural networks with a reported sensitivity of 94% and specificity of 80%, figures presented as higher than those of most dermatologists.

- Medical imaging particularly benefits from CNN analysis of MRIs, CT scans, X-rays, and ultrasounds. These networks detect abnormalities like tumors with high precision, reducing evaluation time while increasing diagnostic accuracy.

- IBM Watson for Oncology is an often-cited example of AI analyzing cancer patient data to suggest personalized treatment plans tailored to individual needs. Systems like this are meant to support doctors in making informed decisions about patient care.

3) Financial forecasting and fraud detection

- Financial institutions implement neural networks to analyze transaction patterns and predict market behavior. Companies like Mastercard and PayPal use these systems to detect and prevent fraudulent transactions in real time, protecting both businesses and customers.

- In financial forecasting, some studies have found that Gated Recurrent Unit (GRU) models give strong results for currency exchange rate prediction. Multi-layer perceptrons (MLPs) have also been used to generate stock predictions from inputs such as past stock performance and yearly returns.

4) Customer service and chatbots

Neural networks power modern customer service through natural language processing. These systems:

- Analyze customer queries and past conversations to understand context

- Provide relevant, accurate responses instantly

- Anticipate potential issues based on historical interactions

Businesses implementing AI chatbots report improved customer engagement. For instance, Camping World’s virtual assistant “Arvee” increased customer engagement by 40% while decreasing wait times to approximately 33 seconds.

5) Self-driving cars and robotics

- Autonomous vehicles use neural networks to perceive environments, make decisions, and control movement. Tesla, Waymo, Uber, and Volkswagen have all used these networks for advanced perception and autonomous decision-making.

- ALVINN (Autonomous Land Vehicle In a Neural Network), built in 1989, was an early self-driving system based on a neural network. It used an end-to-end approach: a camera image was fed directly into the network, which output steering angles. Today, autonomous vehicle fleets collect terabytes of driving data that companies use to keep improving their neural models, which some deliver through over-the-air updates.

Here are some quick, fascinating facts about artificial neural networks (ANNs) that you might not know:

The First Neural Network Model Was Created in 1943: Warren McCulloch and Walter Pitts developed the very first computational model of a neuron, laying the foundation for today’s deep learning systems.

Cats Inspired Neural Networks: In the late 1950s, neuroscientists David Hubel and Torsten Wiesel studied how cats’ visual cortex processes signals, and their findings influenced the development of convolutional neural networks (CNNs), now widely used in image recognition.

Backpropagation Became Popular Only in the 1980s: Although the idea existed earlier, backpropagation, essential for training modern ANNs, wasn’t widely adopted until David Rumelhart, Geoffrey Hinton, and Ronald Williams popularized it in 1986.

From cat experiments to cutting-edge AI, the evolution of neural networks is filled with surprising milestones!

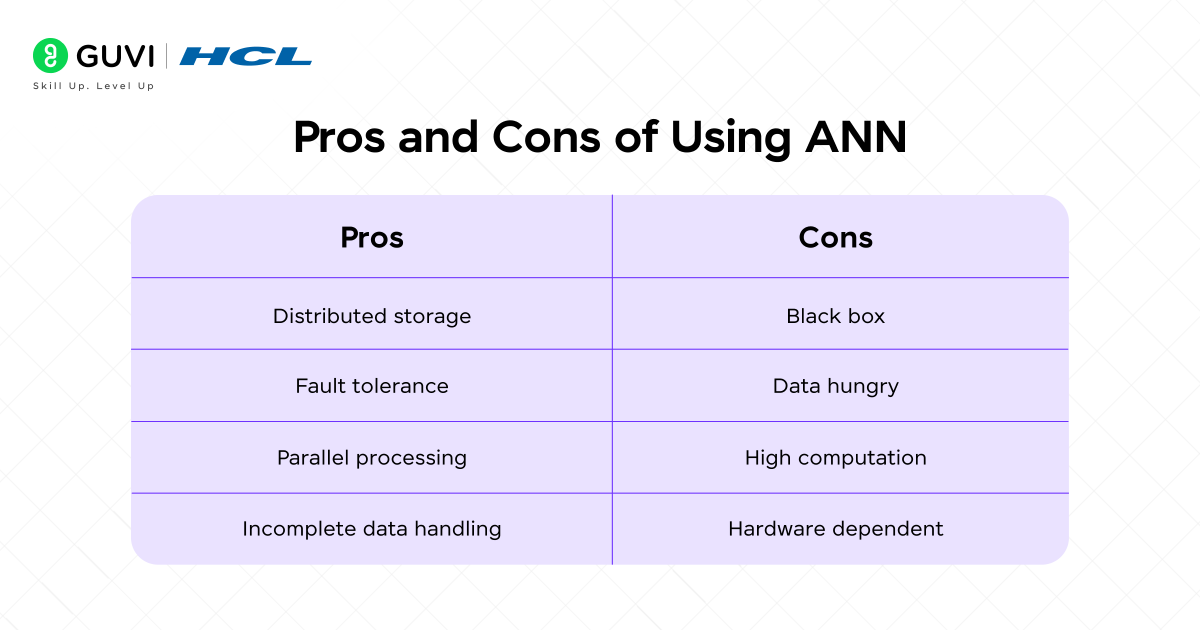

Pros and Cons of Using ANN

Understanding the strengths and weaknesses of artificial neural networks helps you choose the right tool for your specific challenges. Knowing when to use ANNs instead of traditional algorithms can make the difference between success and failure in your projects.

Advantages of ANN

Artificial neural networks offer several significant benefits that make them valuable for complex problems:

- Distributed information storage – what the network learns is spread across many weights, so losing a few neurons or connections doesn’t stop it from functioning

- Fault tolerance – ANNs can keep working even when some neurons or connections are corrupted

- Parallel processing – the neurons in a layer can be computed simultaneously, which maps well onto hardware such as GPUs

- Working with incomplete knowledge – ANNs can still produce outputs even when some input information is missing

- Graceful degradation – performance declines gradually as parts of the network fail, rather than collapsing all at once

Beyond these structural advantages, ANNs excel at processing large volumes of raw data and tend to improve as they are trained on more of it. Whereas many traditional algorithms plateau as the dataset grows, neural networks often keep getting better with additional data.

Limitations and challenges

Despite their power, artificial neural networks face important constraints:

- Black box nature – arguably their best-known disadvantage, ANNs don’t explain how or why they produced specific outputs

- Data requirements – neural networks typically need thousands or millions of labeled samples

- Computational expense – training large networks can take days or even weeks, making it resource-intensive

- Hardware dependency – practical training usually requires parallel hardware such as GPUs

- Network structure challenges – no specific rules exist for determining optimal structure

Training neural networks involves additional challenges, including overfitting, vanishing gradients, and hyperparameter tuning issues.


Concluding Thoughts…

Artificial neural networks represent a remarkable advancement in computing that continues to transform our digital landscape. Throughout this guide, you’ve learned how these brain-inspired systems process information through interconnected layers of artificial neurons, adjusting weights and biases to learn from data.

As AI technology advances, neural networks will likely become even more integrated into your daily life. The fundamental concepts you’ve explored here provide a solid foundation for understanding these powerful systems. Good luck on your ML journey!

FAQs

Q1. What exactly is an Artificial Neural Network?

An Artificial Neural Network (ANN) is a computational model inspired by the human brain’s neural structure. It consists of interconnected nodes or “neurons” arranged in layers that work together to process information, learn from data, and solve complex problems.

Q2. How does an Artificial Neural Network function?

ANNs function through layers of interconnected artificial neurons. They process information by receiving inputs, applying weights and biases, using activation functions, and producing outputs. The network learns by using backpropagation to work out how each weight and bias contributed to its errors, then adjusting them (typically with gradient descent) to gradually improve its accuracy on the task.

Q3. What are some real-world applications of Artificial Neural Networks?

ANNs are used in various fields, including image and speech recognition, medical diagnosis, financial forecasting, customer service chatbots, and self-driving cars. They excel at tasks involving pattern recognition, data analysis, and decision-making in complex environments.

Q4. What are the main advantages of using Artificial Neural Networks?

Key advantages of ANNs include their ability to process large volumes of data, learn from experience, handle incomplete information, and perform parallel processing. They also demonstrate fault tolerance and often improve as they are trained on more data.

Q5. Are there any limitations to Artificial Neural Networks?

Yes, ANNs have some limitations. They often require large amounts of data for training, can be computationally expensive, and their decision-making process is not always transparent (known as the “black box” problem). Additionally, determining the optimal network structure can be challenging, and they may not always be the best choice for simple, easily explainable tasks.
