Minerva: AI Companion for Math Challenges

Last Updated: Sep 10, 2025

Minerva is built on the same principles and architecture as other NLP models such as Google’s BERT and ChatGPT. The key difference is that it is trained on documents containing sophisticated mathematical expressions, which enables the model to preserve and work with the underlying mathematical notation.

To understand Minerva, we need to understand the basics of transformers and their components. In this blog, we will look into the architecture and implementation behind Minerva.

Table of contents

- What is Minerva?

- Working of Transformers: key processes and components

- Minerva's training dataset

- Inference-time techniques

- Few-shot prompting

- Chain-Prompting or Chain-of-thought Prompting

- Majority Voting

- Efficiency after applying different inference techniques.

- Examples of Minerva with 'right' predictions

- Examples of Minerva with 'wrong' predictions

- Conclusion

- Reference

What is Minerva?

Minerva is developed by Google. It is a transformer-based natural language processing model used to solve problems in quantitative reasoning, such as mathematics and science, given only the input statement without the underlying formulation. Minerva is currently capable of solving university-level problems without breaking down the problem into mathematical expressions to feed into other tools to get the result.

Besides solving problems, Minerva can also explain each step of its solution clearly. Under the hood, it is an NLP model that performs text prediction: it predicts the most probable next word given the text so far.

Ultimately, it is not just a tool but a toolbox capable of solving a plethora of problems. It is valuable for students who are learning and for researchers who want to delegate routine tasks.

Working of Transformers: key processes and components

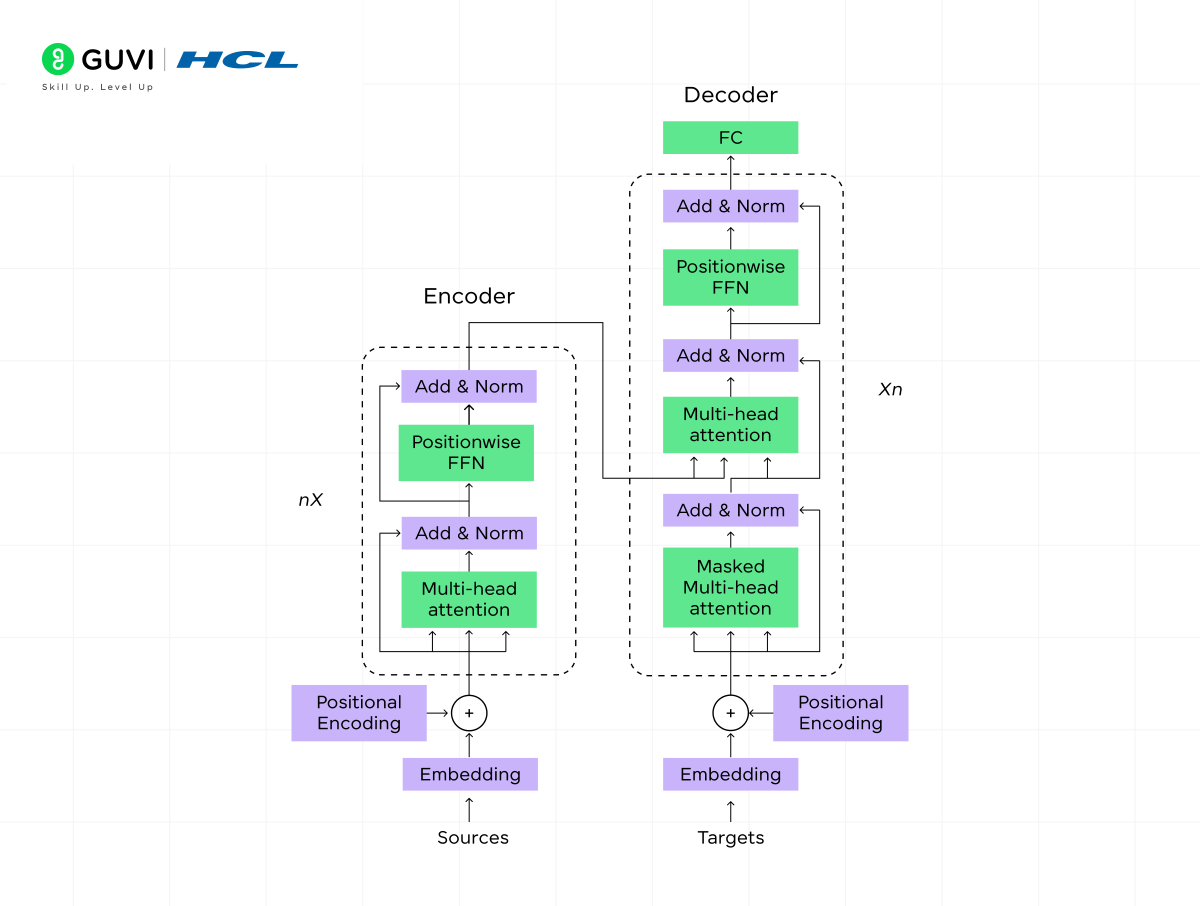

- A transformer contains a Transformer encoder and a Transformer decoder, both of which are fundamental building blocks of the network.

- The Transformer encoder and decoder each pass the input through a stack of layers (the forward pass) before handing the result to a final network that is specific to the task at hand.

- The forward pass contains embedding layers and self-attention layers. Self-attention captures long-range dependencies: each word can attend to words far earlier in the sequence, which is an advantage over recurrent neural networks. The embeddings capture the semantic meaning of words by mapping words used in similar contexts to similar vectors.

- The text encoder also adds a positional encoding to the input embedding matrix so that word order is not lost. Before that, the corpus of text goes through preprocessing, which may include removing punctuation, digits, and stop words, lowercasing, and other steps required by the task at hand.

- The text is then passed through a Word2Vec-style embedding layer. Each word is first mapped to a unique integer identifier; in this scheme, more frequent words receive smaller identifiers and less frequent words receive larger ones, with the aim of avoiding numerical instability and overly large gradients.

- The unique identifiers are mapped to embedding vectors through an embedding matrix, with one row per identifier. The embedding vectors are updated through backpropagation and gradient descent to reduce the cost function.

- The embedding vectors are also referred to as dense vectors because most of their entries are non-zero, in contrast to sparse one-hot vectors that are as long as the vocabulary.

- At the end of the embedding layer, the embedding matrix is passed to the other layers. One of the most essential of these is the self-attention layer.

- Self-attention layers use three matrices: Query, Value, and Key matrices to produce an attention output or weight matrix.

- The weight matrices for Query, Key, and Value are initialized randomly, and the Query (Q), Key (K), and Value (V) matrices are each produced by multiplying the embedding matrix by the corresponding weight matrix.

- To produce the attention scores, we take the dot product of Q and the transpose of K and divide it by the square root of the key dimension. This scaling keeps the scores from growing with the dimension, which would otherwise push the softmax into regions with very small gradients.

- Then, we apply the softmax function to each row of the score matrix so that its components add up to 1; in effect, we normalize the scores into a probability distribution over the input sequence. After that, the normalized score matrix is multiplied by the Value matrix, and the result is returned as the output of the self-attention layer.

- The output of the layer is passed to several other layers, like residual connections and feed-forward neural networks, which help in the process of predicting the output label.

- If the predicted label differs from the expected label, the weights of the self-attention layer and the additional layers are updated through backpropagation to move toward the optimal weights.
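The self-attention steps described in the list above can be sketched in a few lines of plain Python. This is a minimal, single-head illustration under stated assumptions, not Minerva's actual implementation: the helper names (`matmul`, `softmax`, `self_attention`, `random_matrix`), the tiny dimensions, and the random inputs are all hypothetical, and the sketch omits the masking, multiple heads, and residual connections that a real model uses.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Turn a list of scores into probabilities that sum to 1."""
    peak = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over the embeddings x."""
    # Project the embedding matrix into Query, Key, and Value matrices.
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])  # key dimension used for scaling
    k_t = [list(col) for col in zip(*k)]  # transpose of K
    # Scores: Q times K-transpose, divided by sqrt(d_k).
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    # Row-wise softmax gives one probability distribution per position.
    weights = [softmax(row) for row in scores]
    # The output is the attention-weighted sum of the Value rows.
    return matmul(weights, v), weights


def random_matrix(rows, cols, rng):
    """Random initialization, standing in for learned weights (assumption)."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
seq_len, d_model, d_k = 3, 4, 2  # illustrative sizes only
x = random_matrix(seq_len, d_model, rng)  # stand-in for the embedding matrix
w_q = random_matrix(d_model, d_k, rng)
w_k = random_matrix(d_model, d_k, rng)
w_v = random_matrix(d_model, d_k, rng)

output, weights = self_attention(x, w_q, w_k, w_v)
print(len(output), "positions,", len(output[0]), "values per position")
```

Each row of `weights` is a probability distribution over the input positions, which is why every row sums to 1.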


Recent research suggests that scaling up the model improves its predictive performance.

Minerva uses a different word-to-vector algorithm, FastText, which is similar to Word2Vec, but FastText breaks each word into substrings (character n-grams) rather than treating the whole word as a single unit. Why?

- It reduces the computational cost.

- In quantitative reasoning problems, most words are redundant. Words associated with numerical information are the valuable ones.
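To make the substring idea concrete, here is a minimal sketch of FastText-style character n-grams. It illustrates only the general technique of splitting a word into overlapping substrings; the function name `char_ngrams`, the default n-gram lengths, and the `<` and `>` boundary markers are assumptions made for this example, and nothing here should be read as Minerva's actual tokenizer.

```python
def char_ngrams(word, n_min=3, n_max=6):
    """Return the character n-grams of a word wrapped in boundary markers."""
    wrapped = f"<{word}>"  # markers distinguish prefixes and suffixes
    grams = []
    for n in range(n_min, n_max + 1):
        # Slide a window of length n across the wrapped word.
        for start in range(len(wrapped) - n + 1):
            grams.append(wrapped[start:start + n])
    return grams


print(char_ngrams("sum", n_min=3, n_max=3))  # ['<su', 'sum', 'um>']
```

Because related words share many of these substrings, a model built on them can relate a rare word to more common ones that contain the same pieces.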

Minerva’s training dataset

The Minerva team collected documents rich in mathematical content to train the model, processing them so that the mathematical notation is preserved. All of this was done without changing the internal architecture of the Transformer-based NLP model.

The model was trained on 118 GB of mathematical text. The documents were primarily collected from the following sources.

- ArXiv – arxiv.org is a free distribution service and an open-access archive for 2 million+ scholarly articles in the fields of physics, mathematics, and computer science.

- Webpages with mathematical texts.

Inference-time techniques

Inference is the process of using a trained model to make predictions on unseen data. Remember, the main purpose of an NLP model is to generalize to new, unseen data.

Few-shot prompting

Few-shot prompting is a technique where the model is given a few solved examples in the prompt before the problem it has to solve. The examples provided do not involve complex math, but they show the model the expected format and approach, such as a direct equation followed by its answer. This allows the model to generalize to new problems from just a few examples, without the extensive additional training on large datasets that traditional methods require.
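As a rough illustration, a few-shot prompt can be assembled by concatenating solved examples and ending with the new question. This is only a sketch: the `Question:`/`Answer:` layout, the function name `build_few_shot_prompt`, and the sample problems are assumptions chosen for the example, not the format Minerva was evaluated with.

```python
def build_few_shot_prompt(examples, question):
    """Join solved examples and the new question into one prompt string."""
    parts = [f"Question: {q}\nAnswer: {a}" for q, a in examples]
    # The final block is left open so the model completes the answer.
    parts.append(f"Question: {question}\nAnswer:")
    return "\n\n".join(parts)


# Hypothetical solved examples shown to the model.
examples = [
    ("What is 2 + 3?", "5"),
    ("What is 7 * 6?", "42"),
]
prompt = build_few_shot_prompt(examples, "What is 9 - 4?")
print(prompt)
```

The resulting string would be sent to the model as-is; no weights are updated, which is what distinguishes prompting from fine-tuning.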

Chain-Prompting or Chain-of-thought Prompting

Chain-of-thought prompting (or Chain-Prompting) is a technique in which the examples in the prompt include the intermediate reasoning steps, not just the final answer. Having seen these worked examples, the model writes out its own chain of steps for the unseen problem before giving the answer. This technique helps with complex problems that require multiple steps or involve multiple variables: by breaking the problem down into smaller steps or sub-problems, the model can solve the entire problem more reliably.
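The sketch below shows one way such a prompt might be laid out, together with a helper that pulls the final answer out of a step-by-step completion. The `Problem:`/`Solution:`/`Final answer:` markers, both function names, and the sample completion are hypothetical choices for illustration, assuming the model follows the same layout as the worked examples.

```python
import re


def build_cot_prompt(worked_examples, problem):
    """Build a prompt whose examples spell out the reasoning steps."""
    parts = [
        f"Problem: {p}\nSolution: {steps}\nFinal answer: {answer}"
        for p, steps, answer in worked_examples
    ]
    # End with an open "Solution:" so the model writes its own steps.
    parts.append(f"Problem: {problem}\nSolution:")
    return "\n\n".join(parts)


def extract_final_answer(completion):
    """Return the text after the last 'Final answer:' marker, or None."""
    matches = re.findall(r"Final answer:\s*(.+)", completion)
    return matches[-1].strip().rstrip(".") if matches else None


worked = [
    ("Tom has 3 bags with 4 apples each. How many apples does he have?",
     "Each bag holds 4 apples and there are 3 bags, so 3 * 4 = 12.",
     "12"),
]
cot_prompt = build_cot_prompt(worked, "A train travels 60 km/h for 2 hours. How far does it go?")

# A hypothetical completion, standing in for real model output.
completion = "Distance is speed times time, so 60 * 2 = 120.\nFinal answer: 120"
print(extract_final_answer(completion))  # 120
```

Separating the reasoning from a clearly marked final answer also makes it easy to compare answers across several sampled solutions.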

Majority Voting

Minerva assigns probabilities to the different possible outputs. When answering a question, it generates many candidate solutions by sampling stochastically from these probabilities. The solutions may take different steps, but many of them arrive at the same final answer. Majority voting picks the most common final answer as the result, so an occasional wrong solution is outvoted instead of being returned.
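A minimal sketch of the voting step is shown below. It assumes the final answers have already been extracted from each sampled solution; the function name `majority_vote` and the hard-coded list of answers are hypothetical and stand in for real model samples.

```python
from collections import Counter


def majority_vote(answers):
    """Return the most common answer and its share of the valid votes."""
    # Ignore samples where no final answer could be extracted.
    votes = Counter(a for a in answers if a is not None)
    if not votes:
        return None, 0.0
    answer, count = votes.most_common(1)[0]
    return answer, count / sum(votes.values())


# Hypothetical final answers from six sampled solutions to one question.
sampled = ["17", "17", "12", "17", None, "21"]
answer, share = majority_vote(sampled)
print(answer, share)  # 17 0.6
```

Here three of the five usable samples agree on 17, so it wins even though two samples went wrong.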

Efficiency after applying different inference techniques.

- Minerva 540B is the model evaluated without majority voting, using only the other inference techniques.

- Minerva 540B maj1@k is the same model evaluated with majority voting applied along with the other inference techniques.

- Performance improves by about 10% when majority voting is applied.

Examples of Minerva with ‘right’ predictions

Examples of Minerva with ‘wrong’ predictions

An analysis of the answers the model gets right reveals that about 8% of them are false positives: the final answer is correct, but the reasoning that leads to it is flawed.

Conclusion

Minerva is a model for solving quantitative reasoning problems. It builds on the pre-trained PaLM model, which has 540B parameters, and was further trained on 118 GB of mathematical text in which the mathematical notation is preserved unaltered. Given a few examples in the prompt, it can efficiently solve new problems step by step.

Reference

- https://ai.googleblog.com/2022/06/minerva-solving-quantitative-reasoning.html

- https://arxiv.org/pdf/2203.11171.pdf

- https://analyticsdrift.com/google-developed-minerva-an-ai-that-can-answer-math-questions/
