Mathematics for Machine Learning: A Zero-to-Hero Guide for Beginners (2025)

Last updated: Sep 22, 2025

Do you actually need to learn mathematics for machine learning, or can you get by without it? The answer depends on what you want to do in the field. While research-focused roles developing new algorithms require deep mathematical knowledge, many practitioners can be competent without mastering all the underlying details, especially when starting.

However, understanding the math required for machine learning offers significant advantages. Mathematics is truly the foundation of machine learning, helping you choose the best approaches based on factors like accuracy, complexity, and features. When you grasp mathematical concepts like statistics, probability distributions, and linear algebra, you gain the ability to interpret data more effectively and understand the bias-variance tradeoff that helps identify underfitting and overfitting issues.

This zero-to-hero guide breaks down the essential mathematics for machine learning into digestible sections. You’ll learn why these mathematical foundations matter and how they specifically power ML algorithms. From linear algebra fundamentals to statistics, probability, and calculus basics – I’ve organized everything you need to build your confidence in the mathematics required for machine learning.

Table of contents

- Why Math Matters in Machine Learning

- How math powers ML algorithms

- The role of math in model accuracy and tuning

- Mathematics for Machine Learning: Learn With Me Step-by-Step

- Step 1) Linear Algebra

- Step 2) Statistics You Need to Know

- Step 3) Probability and Its Role in ML

- Step 4) Calculus and Optimization Basics

- The Zero-to-Hero Path

- Stage 1: Build Intuition with the Basics

- Stage 2: Add Probability & Statistics to the Mix

- Stage 3: Explore Calculus & Optimization

- Stage 4: Practice with Real Applications

- Stage 5: Expand into Advanced Topics

- Concluding Thoughts…

- FAQs

- Q1. What mathematical foundations are essential for machine learning?

- Q2. Do I need to master all the math before starting machine learning?

- Q3. Which programming languages are most commonly used in machine learning?

- Q4. How does deep learning differ from traditional machine learning?

- Q5. What are some good resources for beginners to learn machine learning?

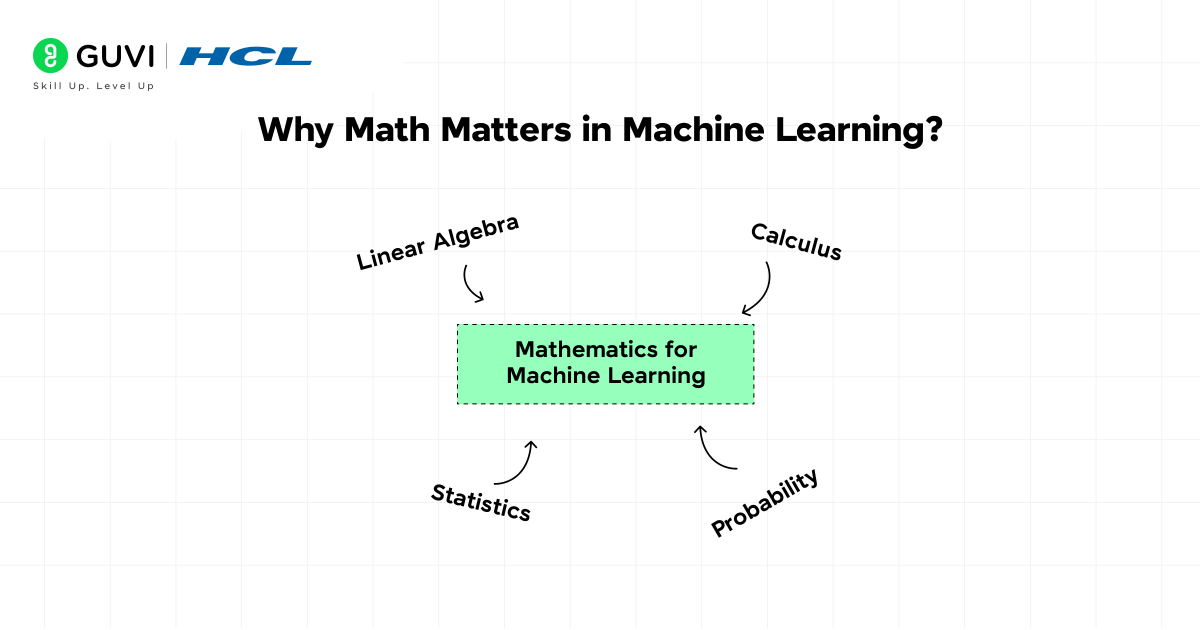

Why Math Matters in Machine Learning

Mathematics, not raw computing power alone, is what drives machine learning systems. More than a collection of formulas, it is the language that lets machines learn from data and improve.

How math powers ML algorithms

Mathematics acts as the backbone for machine learning algorithms, allowing them to process information and enhance accuracy over time. Here’s how math powers these systems:

- Data representation and manipulation: Linear algebra helps organize large datasets into vectors, matrices, and tensors, making data storage and processing more efficient.

- Pattern recognition: Mathematical concepts enable algorithms to identify trends, recognize outliers, and compare information from various sources.

- Decision making: Even with incomplete information, math allows systems to calculate confidence levels and make decisions based on probabilities.

Feature scaling, which applies simple statistical and linear-algebra operations to each feature, keeps features with large numeric ranges from dominating the model. Cost functions, mathematical expressions that quantify a model’s errors, give algorithms a measurable target to reduce during training.
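
To make the idea of a cost function concrete, here is a minimal sketch using mean squared error, one common choice. The function name `mean_squared_error` and the numbers are made up for illustration; they are not taken from any particular library.

```python
def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

# Hypothetical targets and two sets of predictions.
y_true = [3.0, 5.0, 7.0]
close_predictions = [2.5, 5.0, 7.5]   # small errors
poor_predictions = [0.0, 0.0, 0.0]    # large errors

mse_close = mean_squared_error(y_true, close_predictions)
mse_poor = mean_squared_error(y_true, poor_predictions)

# A lower cost means the predictions sit closer to the targets.
print(mse_close < mse_poor)  # True
```

Training a model amounts to searching for parameters that push a number like this as low as possible.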

The role of math in model accuracy and tuning

Understanding mathematics for machine learning significantly improves your ability to fine-tune models for better performance. Tuning can matter as much as model size: tuned GPT-3.5-turbo models have reportedly outperformed untuned GPT-4 models, with 90% vs. 70% accuracy on easier problems.

Mathematical optimization plays a crucial role in this process:

- Calculus enables continuous adjustments that minimize errors and improve accuracy.

- Gradient descent, a calculus-based concept, helps models learn by adjusting parameters in directions that reduce errors most effectively.

- Parameter tuning based on mathematical principles helps identify optimal configurations for specific tasks.

As Andrew Ng often says, “It’s only when studying machine learning—specifically gradient descent and other optimization algorithms—that I appreciated how useful calculus is”.

Mathematics for Machine Learning: Learn With Me Step-by-Step

Now that you have a sense of why math matters, let’s walk through what to learn, and how to learn it, step by step:

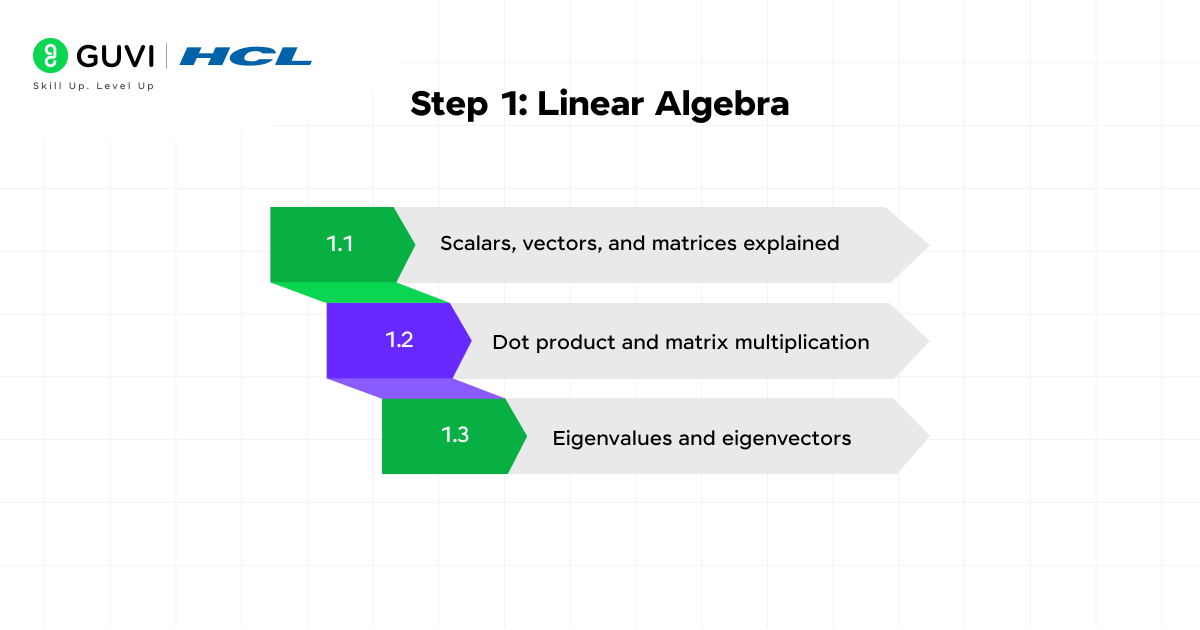

Step 1) Linear Algebra

Linear algebra forms the mathematical language that enables machines to process and learn from data. It provides the essential tools for representing data points, manipulating them through transformations, and extracting meaningful patterns—all crucial operations in machine learning systems.

1.1) Scalars, vectors, and matrices explained

The building blocks of linear algebra begin with three fundamental mathematical entities:

- Scalars are single numbers without direction, representing only magnitude. In machine learning, scalars often denote constants, error metrics, or model accuracy values. For instance, a scalar might represent the accuracy percentage of your classification model.

- Vectors are ordered arrays of numbers that possess both magnitude and direction. Unlike scalars, vectors can represent multiple features simultaneously. They serve as the backbone for feature representation, where each dimension corresponds to a distinct attribute in your dataset. For example, a three-dimensional vector [height, weight, age] might represent a patient in a healthcare dataset.

- Matrices expand on vectors by organizing numbers into rectangular grids with rows and columns. Essentially, they’re two-dimensional arrays that can store and process complex data efficiently. In practical terms, datasets in machine learning are typically represented as matrices, with rows representing observations and columns representing features or variables.
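
As a rough illustration, the three building blocks above can be written as plain Python values. The patient numbers are invented for the example, and `shape` is a hypothetical helper, not a library function.

```python
# Scalar: a single number, for example a model's accuracy.
accuracy = 0.92

# Vector: one patient described as [height_cm, weight_kg, age_years] (made-up values).
patient = [170.0, 65.0, 34.0]

# Matrix: each row is one observation (patient), each column is one feature.
dataset = [
    [170.0, 65.0, 34.0],
    [158.0, 52.0, 41.0],
    [181.0, 80.0, 29.0],
    [165.0, 70.0, 55.0],
]

def shape(matrix):
    """Return (rows, columns) for a rectangular list of lists."""
    return (len(matrix), len(matrix[0]))

n_rows, n_cols = shape(dataset)
print(n_rows, n_cols)  # 4 observations, 3 features
```

In practice you would usually hold this data in a NumPy array or a pandas DataFrame, but the row-per-observation, column-per-feature layout stays the same.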

1.2) Dot product and matrix multiplication

These operations are fundamental to understanding how machine learning algorithms process information:

- The dot product takes two equal-length vectors and outputs a single scalar value. It’s calculated by multiplying corresponding elements and summing the results. This operation reveals how similar two vectors are, making it essential for calculating correlations and measuring vector similarity.

The formula looks like: A⋅B = A₁B₁ + A₂B₂ + … + AₙBₙ

- Matrix multiplication builds upon dot product operations. When multiplying matrices, each element in the resulting matrix is the dot product of a row from the first matrix and a column from the second matrix. This operation requires that the number of columns in the first matrix equals the number of rows in the second.

This operation is foundational because:

- It enables data transformations

- It allows for feature scaling and normalization

- It’s used extensively in neural network computations
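
The two operations can be sketched in a few lines of plain Python. This is a teaching sketch with hypothetical helper names (`dot`, `matmul`); real projects would normally rely on an optimized library instead.

```python
def dot(a, b):
    """Dot product: multiply corresponding elements and sum the results."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum(x * y for x, y in zip(a, b))

def matmul(A, B):
    """Multiply A (m x n) by B (n x p); each entry is a row-by-column dot product."""
    if len(A[0]) != len(B):
        raise ValueError("columns of A must equal rows of B")
    columns_of_B = list(zip(*B))
    return [[dot(row, col) for col in columns_of_B] for row in A]

a = [1, 2, 3]
b = [4, 5, 6]
dot_ab = dot(a, b)   # 1*4 + 2*5 + 3*6 = 32

A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
AB = matmul(A, B)    # [[19, 22], [43, 50]]
```

Note how `matmul` checks the shape rule stated above before doing any arithmetic.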

1.3) Eigenvalues and eigenvectors

Among the most powerful concepts in linear algebra are eigenvalues and eigenvectors:

- Eigenvectors are special vectors that, when transformed by a matrix, only change in scale but not in direction (except possibly reversing). They represent the directions in which a linear transformation stretches or compresses space.

- Eigenvalues are the scaling factors associated with eigenvectors, telling us how much the eigenvector is stretched or compressed during the transformation. To find them, we solve the equation: det(A – λI) = 0, where A is our matrix, λ is an eigenvalue, and I is the identity matrix.

These concepts are particularly valuable in dimensionality reduction techniques like Principal Component Analysis (PCA), where they help identify the directions of maximum variance in data.
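
For a 2×2 matrix, det(A – λI) = 0 reduces to a quadratic in λ, so the eigenvalues can be computed by hand. The sketch below assumes a matrix with real eigenvalues; the helper names and the example matrix are chosen purely for illustration.

```python
import math

def eigenvalues_2x2(A):
    """Solve det(A - lambda*I) = 0 for a 2x2 matrix with real eigenvalues."""
    (a, b), (c, d) = A
    trace = a + d
    det = a * d - b * c
    # Characteristic polynomial: lambda^2 - trace*lambda + det = 0
    disc = trace * trace - 4 * det
    if disc < 0:
        raise ValueError("eigenvalues are complex for this matrix")
    root = math.sqrt(disc)
    return ((trace + root) / 2, (trace - root) / 2)

def matvec(A, v):
    """Multiply matrix A by vector v."""
    return [sum(a_ij * v_j for a_ij, v_j in zip(row, v)) for row in A]

A = [[2.0, 1.0], [1.0, 2.0]]
lam_big, lam_small = eigenvalues_2x2(A)   # 3.0 and 1.0

# [1, 1] is an eigenvector for lambda = 3: multiplying by A only rescales it.
v = [1.0, 1.0]
Av = matvec(A, v)                         # [3.0, 3.0], which is 3 * v
```

In PCA the same computation is applied to the data's covariance matrix, and the eigenvector with the largest eigenvalue marks the direction of greatest variance.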

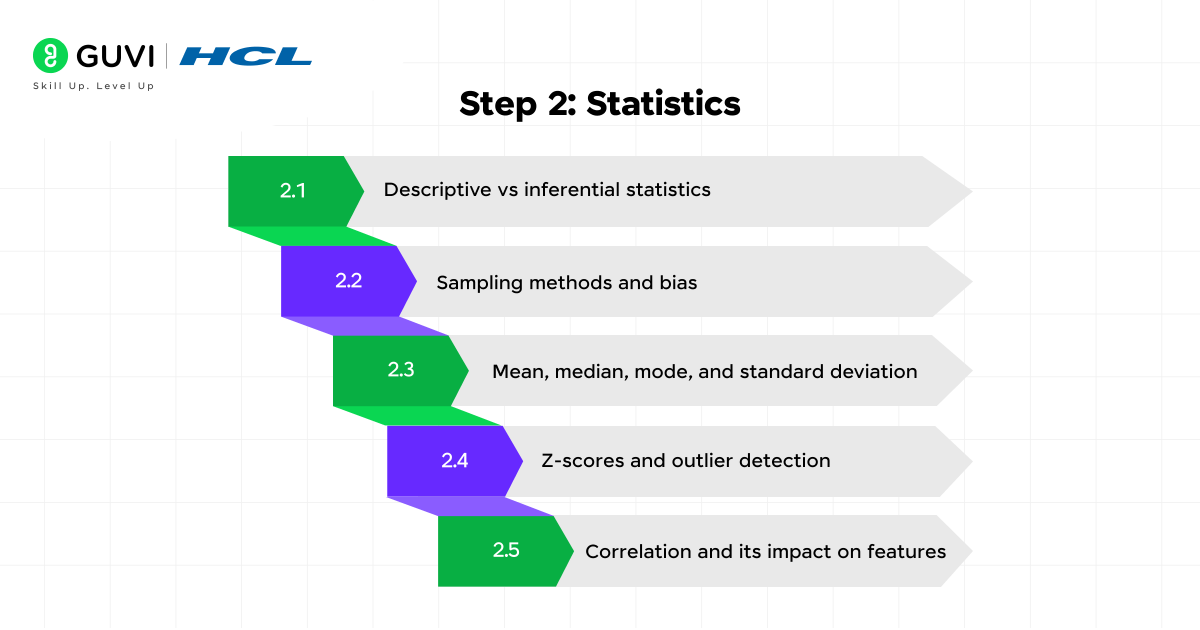

Step 2) Statistics You Need to Know

Statistical knowledge empowers machine learning practitioners to understand data distributions, detect patterns, and make accurate predictions. These mathematical tools help you extract meaningful insights from data before feeding it into your algorithms.

2.1) Descriptive vs inferential statistics

Statistics is broadly categorized into two main types:

- Descriptive statistics summarize and organize data in a meaningful way through charts, graphs, and numerical measures. It simply describes what’s already known without making predictions. This approach helps you understand your dataset’s characteristics before model building.

- Inferential statistics, conversely, uses sample data to draw conclusions about larger populations. In machine learning, this allows you to make predictions and generalizations beyond your training data. Through techniques like hypothesis testing and confidence intervals, you can validate your models and assess their reliability.

2.2) Sampling methods and bias

Sampling bias occurs when your data collection introduces systematic errors, leading to non-representative samples. This creates a significant problem in machine learning—models trained on biased data will produce inaccurate and unreliable predictions.

To minimize sampling bias:

- Use diverse data sources to gain comprehensive population views

- Apply techniques like stratified sampling and data augmentation

- Carefully preprocess data to handle missing values and outliers
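
One of the techniques above, stratified sampling, can be sketched as follows. The function `stratified_sample` and the imbalanced toy dataset are assumptions made for this example; the idea is simply to draw the same fraction from every group so that rare groups are not lost.

```python
import random
from collections import defaultdict

def stratified_sample(records, key, fraction, seed=0):
    """Sample the same fraction from each group so group proportions are preserved."""
    rng = random.Random(seed)
    groups = defaultdict(list)
    for record in records:
        groups[key(record)].append(record)
    sample = []
    for members in groups.values():
        k = max(1, round(len(members) * fraction))  # keep at least one per group
        sample.extend(rng.sample(members, k))
    return sample

# Hypothetical imbalanced dataset: 90 "neg" records and 10 "pos" records.
records = [("neg", i) for i in range(90)] + [("pos", i) for i in range(10)]
sample = stratified_sample(records, key=lambda r: r[0], fraction=0.2)

counts = defaultdict(int)
for label, _ in sample:
    counts[label] += 1
print(dict(counts))  # the 90:10 ratio is kept: 18 "neg" and 2 "pos"
```

A purely random 20% sample could, by chance, contain very few "pos" records; stratifying removes that risk.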

2.3) Mean, median, mode, and standard deviation

These measures form the foundation of data analysis in machine learning:

- Mean is the average of all values, calculated by summing data points and dividing by their count. While useful, it’s sensitive to outliers.

- Median represents the middle value in sorted data, effectively dividing the dataset into equal halves. It’s more robust against outliers than the mean.

- Mode is simply the most frequently occurring value in your dataset.

- Standard deviation measures how data points spread from the mean. Lower values indicate data clustering close to the mean, while higher values suggest greater dispersion.
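
Python's built-in `statistics` module computes all four measures. The salary figures below are invented, with one deliberate outlier to show how the mean and median react differently.

```python
import statistics

# Made-up salaries (in thousands); the last value is a deliberate outlier.
salaries = [40, 42, 42, 45, 48, 50, 200]

mean = statistics.mean(salaries)      # pulled upward by the outlier
median = statistics.median(salaries)  # middle value of the sorted data: 45
mode = statistics.mode(salaries)      # most frequent value: 42
std = statistics.pstdev(salaries)     # population standard deviation

print(mean > median)  # True: the single outlier drags the mean above the median
```

`pstdev` treats the data as the whole population; `statistics.stdev` is the sample version, which divides by n - 1 instead of n.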

2.4) Z-scores and outlier detection

- Z-score is a standardization technique that tells you how many standard deviations a data point sits from the mean. This helps in both feature scaling and outlier detection.

- For outlier detection, data points with Z-scores greater than 3 or less than -3 are typically considered outliers, as they fall outside 99.7% of normally distributed data.

- This is based on the fact that approximately 68% of data falls within ±1 standard deviation, 95% within ±2, and 99.7% within ±3 standard deviations.
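
The ±3 rule can be sketched directly. The sensor readings are made up, and `z_scores` and `find_outliers` are hypothetical helper names. Note that very small samples cannot produce a Z-score beyond 3 at all, so the example uses 21 points.

```python
import statistics

def z_scores(values):
    """Number of standard deviations each value sits from the mean."""
    mu = statistics.mean(values)
    sigma = statistics.pstdev(values)
    return [(x - mu) / sigma for x in values]

def find_outliers(values, threshold=3.0):
    """Return the values whose absolute Z-score exceeds the threshold."""
    return [x for x, z in zip(values, z_scores(values)) if abs(z) > threshold]

# Made-up readings clustered around 50, plus one suspicious value.
readings = [48, 49, 50, 51, 52, 47, 53, 50, 49, 51,
            50, 48, 52, 50, 49, 51, 50, 47, 53, 50, 100]

outliers = find_outliers(readings)
print(outliers)  # [100]
```

The same `z_scores` function doubles as a feature-scaling step, since the transformed values have mean 0 and standard deviation 1.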

2.5) Correlation and its impact on features

Correlation measures relationships between variables, indicating how changes in one variable relate to changes in another. In machine learning, feature correlation significantly impacts model performance.

When features are highly correlated, they essentially provide redundant information. This can lead to several issues:

- Decreased feature importance for correlated variables

- Creation of unrealistic data points during permutation feature importance calculations

- Potential model overfitting to correlated features

Nevertheless, understanding feature correlations helps you select relevant variables and improve model accuracy and interpretability.
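
A common way to quantify this is the Pearson correlation coefficient, which ranges from -1 to 1. The sketch below implements it by hand; the feature values are invented, and the second feature is just the first in different units to mimic a fully redundant column.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

height_cm = [150, 160, 170, 180, 190]
height_in = [h / 2.54 for h in height_cm]   # same information, different unit
shoe_size = [38, 37, 42, 41, 45]            # made-up, related but not identical

r_redundant = pearson(height_cm, height_in)  # approximately 1.0
r_related = pearson(height_cm, shoe_size)    # strongly positive, but below 1
```

A coefficient near 1 or -1 between two features is a hint that one of them may be dropped without losing much information.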

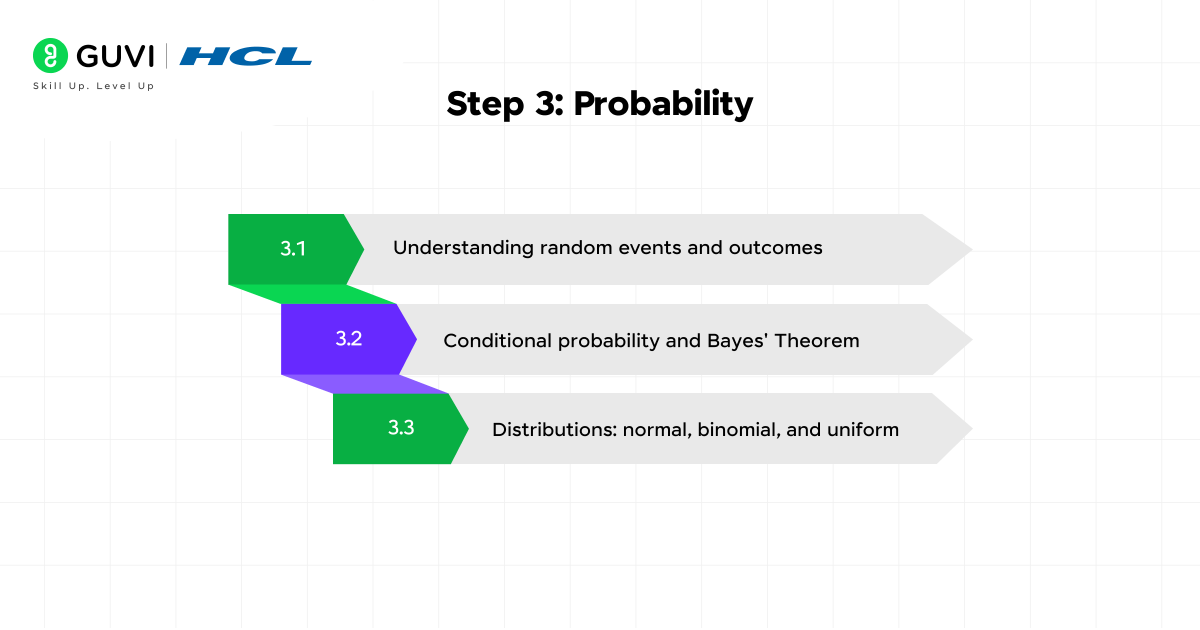

Step 3) Probability and Its Role in ML

Probability theory provides the mathematical foundation for handling uncertainty in machine learning systems. It gives algorithms the ability to make informed decisions even when dealing with incomplete or noisy data.

3.1) Understanding random events and outcomes

- Probability quantifies how likely events are to occur, assigning values between 0 (impossible) and 1 (certain). In ML, a random experiment might be observing user behavior or predicting stock prices, situations where outcomes aren’t completely predictable.

- The sample space represents all possible outcomes of an experiment, while an event is a subset of this space. For instance, when building a recommendation system, you need to calculate the probabilities of users selecting different items based on historical data.

3.2) Conditional Probability and Bayes’ Theorem

Conditional probability measures the likelihood of an event occurring given that another event has already happened. This concept is crucial when working with dependent features in your dataset.

Bayes’ Theorem, a cornerstone of probabilistic ML, is expressed as:

P(A|B) = [P(B|A) × P(A)] / P(B)

Where:

- P(A|B) is the posterior probability

- P(B|A) is the likelihood

- P(A) is the prior probability

- P(B) is the evidence

This theorem enables machines to update beliefs based on new evidence, forming the basis for algorithms like Naive Bayes classifiers.
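
Here is a small worked example of the formula, framed as a spam filter. All probabilities are invented for illustration, and `bayes_posterior` is a hypothetical helper. The evidence P(B) is expanded with the law of total probability.

```python
def bayes_posterior(prior, likelihood, likelihood_if_not):
    """Return P(A|B) given P(A), P(B|A) and P(B|not A)."""
    # Evidence P(B) = P(B|A) * P(A) + P(B|not A) * P(not A)
    evidence = likelihood * prior + likelihood_if_not * (1 - prior)
    return likelihood * prior / evidence

# Assumed numbers: 20% of emails are spam (prior), the word "free" appears
# in 60% of spam emails (likelihood) and in 5% of legitimate emails.
posterior = bayes_posterior(prior=0.2, likelihood=0.6, likelihood_if_not=0.05)
print(round(posterior, 2))  # 0.75
```

Seeing the word "free" raises the belief that an email is spam from 20% to 75%, which is exactly the kind of update a Naive Bayes classifier performs for every word.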

3.3) Distributions: normal, binomial, and Bernoulli

Different probability distributions model various types of data patterns:

- Normal (Gaussian): Bell-shaped curve ideal for modeling continuous variables like height or temperature

- Binomial: Counts the number of successes in a fixed number of independent trials, each with two possible outcomes (success/failure) and the same success probability

- Bernoulli: Special case of binomial with a single trial; useful for binary outcomes in logistic regression
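
The distributions listed above have short closed-form formulas, sketched here with only the standard library. The function names are hypothetical; the coin-flip and success probabilities are arbitrary example values.

```python
import math

def binomial_pmf(k, n, p):
    """Probability of exactly k successes in n independent trials."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

def bernoulli_pmf(k, p):
    """Bernoulli is the binomial with a single trial (k is 0 or 1)."""
    return binomial_pmf(k, 1, p)

def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density of the normal (Gaussian) distribution at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2 * math.pi))

p_two_heads = binomial_pmf(2, 3, 0.5)   # 3 * 0.5**3 = 0.375
p_success = bernoulli_pmf(1, 0.3)       # 0.3
total = sum(binomial_pmf(k, 10, 0.3) for k in range(11))  # probabilities sum to 1
peak = normal_pdf(0.0)                  # height of the standard bell curve at its center
```

Summing the binomial probabilities over every possible count is a quick sanity check that the function really describes a probability distribution.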

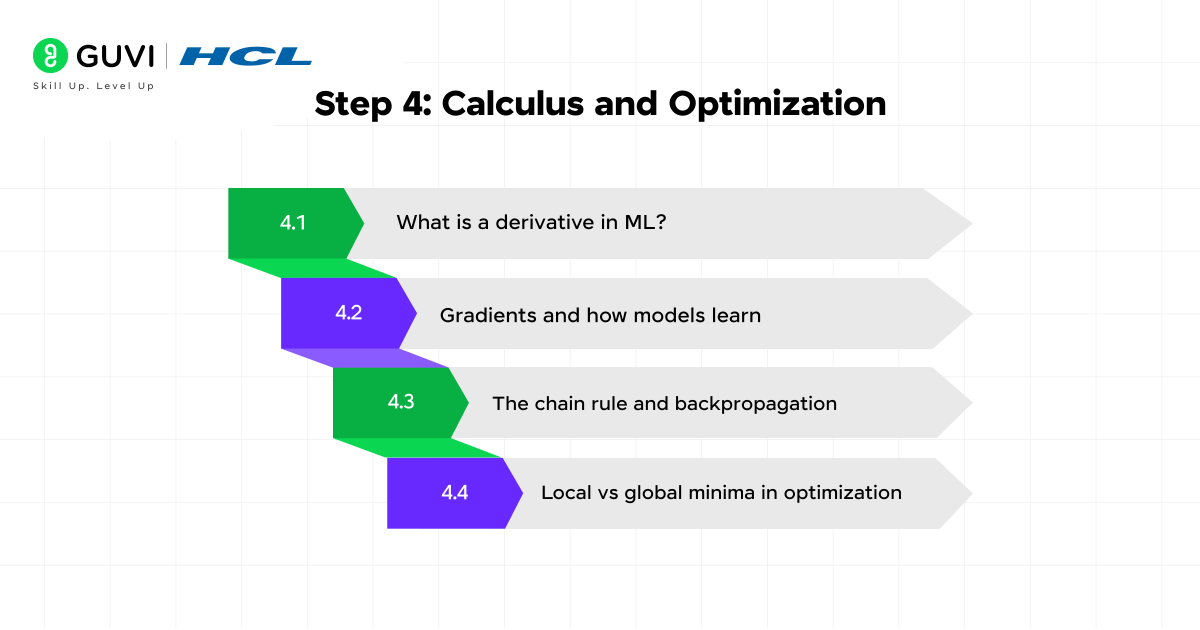

Step 4) Calculus and Optimization Basics

At the heart of machine learning’s learning process lies calculus, the mathematics of change and optimization. Calculus enables algorithms to learn from data by making incremental improvements to their predictions.

4.1) What is a derivative in ML?

A derivative measures the rate of change of a function at a specific point. In machine learning, derivatives help algorithms understand how changes in parameters affect model performance. Essentially, a derivative indicates the slope or steepness of a function, showing whether a slight parameter adjustment will increase or decrease the error.

- Positive derivative: the error increases as the parameter increases, so the parameter should be adjusted in the opposite direction (decreased).

- Negative derivative: the error decreases as the parameter increases, so increasing the parameter further should reduce the error.
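
The sign of the derivative can be checked numerically. This sketch uses a central-difference approximation on a toy error curve; both `derivative` and `loss` are assumptions made for the example rather than part of any library.

```python
def derivative(f, x, h=1e-6):
    """Central-difference approximation of the slope of f at x."""
    return (f(x + h) - f(x - h)) / (2 * h)

def loss(w):
    """Toy error curve with its minimum at w = 3."""
    return (w - 3) ** 2

slope_right = derivative(loss, 5.0)  # about +4: error rises with w, so decrease w
slope_left = derivative(loss, 1.0)   # about -4: error falls with w, so increase w
slope_min = derivative(loss, 3.0)    # about 0: flat at the minimum
```

On either side of the minimum, moving the parameter against the sign of the derivative heads toward w = 3.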

4.2) Gradients and how models learn

The gradient is simply a derivative extended to functions with multiple input variables—it’s a vector of partial derivatives pointing in the direction of steepest ascent. During training, models use gradients to:

- Calculate the loss with current parameters

- Determine which direction reduces loss fastest (the negative of the gradient)

- Move the parameters a small amount in that direction

- Repeat until convergence

This process, known as gradient descent, adjusts weights and biases iteratively to minimize error between predicted and actual results.
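
The loop described above can be sketched for the simplest possible model, a line y = w·x + b fitted with mean squared error. The learning rate, step count, and data are assumptions picked so the example converges; they are not recommended defaults.

```python
def gradient_descent(xs, ys, lr=0.05, steps=2000):
    """Fit y = w*x + b by repeatedly stepping against the gradient of the MSE."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Prediction errors with the current parameters.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Partial derivatives of the mean squared error.
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Move a small amount in the direction that reduces the loss.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Made-up data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b = gradient_descent(xs, ys)
print(round(w, 3), round(b, 3))  # 2.0 1.0
```

The minus signs in the update lines are the "descent": the gradient points uphill, so the parameters move the other way.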

4.3) The chain rule and backpropagation

The chain rule allows finding derivatives of composite functions, which is crucial since neural networks represent massive nested composite functions. Backpropagation applies this rule efficiently by:

- Computing the output through forward propagation

- Calculating error at the output layer

- Working backward through the network, computing gradients layer by layer

This approach avoids redundant calculations, making deep learning computationally feasible.
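
To see the chain rule at work, consider a "network" with a single sigmoid neuron and a squared-error loss. This is a deliberately tiny sketch with made-up inputs and hypothetical function names; real frameworks automate the same bookkeeping across many layers.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(w, b, x, y):
    """Forward pass: compute the prediction and the loss."""
    z = w * x + b
    y_hat = sigmoid(z)
    loss = (y_hat - y) ** 2
    return z, y_hat, loss

def backward(w, b, x, y):
    """Backward pass: chain rule from the loss back to w and b."""
    _, y_hat, _ = forward(w, b, x, y)
    dloss_dyhat = 2 * (y_hat - y)        # derivative of the squared error
    dyhat_dz = y_hat * (1 - y_hat)       # derivative of the sigmoid
    dloss_dz = dloss_dyhat * dyhat_dz    # computed once, reused for both parameters
    return dloss_dz * x, dloss_dz        # dz/dw = x, dz/db = 1

w, b, x, y = 0.5, -0.2, 1.5, 1.0
grad_w, grad_b = backward(w, b, x, y)

# Sanity check against a numerical derivative of the loss with respect to w.
h = 1e-6
num_grad_w = (forward(w + h, b, x, y)[2] - forward(w - h, b, x, y)[2]) / (2 * h)
```

Reusing `dloss_dz` for both gradients is a small-scale version of the saving that makes backpropagation efficient.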

4.4) Local vs global minima in optimization

- Optimization algorithms generally find the nearest minimum (local minimum)—a point where the function value is smaller than nearby points. Yet this might not be the best possible solution (global minimum).

- Local minima can trick optimization algorithms, particularly in complex functions with multiple valleys. A model caught in a local minimum performs suboptimally compared to one reaching the global minimum. Nonetheless, in deep learning, we often accept good local minima if they yield sufficiently low error values.
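
The effect of the starting point is easy to demonstrate on a one-dimensional function with two valleys. The function below, the learning rate, and the starting points are all arbitrary choices for illustration.

```python
def f(x):
    """Toy non-convex function with a local and a global minimum."""
    return x**4 - 3 * x**2 + x

def f_prime(x):
    return 4 * x**3 - 6 * x + 1

def descend(x, lr=0.01, steps=1000):
    """Plain gradient descent from a given starting point."""
    for _ in range(steps):
        x -= lr * f_prime(x)
    return x

x_right = descend(2.0)    # settles near x = 1.13, a local minimum
x_left = descend(-2.0)    # settles near x = -1.30, the global minimum

print(f(x_left) < f(x_right))  # True: the left valley is deeper
```

Both runs stop where the slope is zero, yet only one finds the lowest point. This is why practitioners often try several random initializations.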

Origin of Gradient Descent: The popular optimization technique used in machine learning today was first introduced way back in 1847 by French mathematician Augustin-Louis Cauchy.

Eigenfaces in Facial Recognition: Classic facial recognition systems use linear algebra concepts like eigenvectors and eigenvalues to represent faces mathematically; the resulting basis images are called “eigenfaces.”

The Zero-to-Hero Path

Learning math for Machine Learning can feel overwhelming, but breaking it down into clear stages makes the journey smooth and practical. Here’s a roadmap you can follow step by step:

Stage 1: Build Intuition with the Basics

Start with linear algebra essentials—vectors, matrices, dot products, and matrix multiplication. Don’t rush the formulas; instead, use visualizations and geometric interpretations to understand what these operations mean (e.g., a dot product as a projection).

- Why it matters: These concepts form the foundation of ML models like linear regression, PCA, and neural networks.

Stage 2: Add Probability & Statistics to the Mix

Learn probability rules (independence, conditional probability, Bayes’ theorem) and basic statistics (mean, variance, standard deviation, distributions). Practice by solving small data problems like computing probabilities or modeling random variables.

- Why it matters: Probability explains uncertainty in data, while statistics helps in making decisions from sample datasets—core to every ML task.

Stage 3: Explore Calculus & Optimization

Move into differentiation, gradients, and partial derivatives. Learn how gradient descent works by coding a simple linear regression from scratch. Once comfortable, explore convex functions and why they guarantee a global minimum.

- Why it matters: Almost every ML algorithm—from logistic regression to deep learning—relies on optimization through calculus.

Stage 4: Practice with Real Applications

Start applying math directly in ML workflows. Use small projects like:

- Building a regression model with gradient descent

- Implementing k-means clustering with distance metrics

- Visualizing probability distributions with real datasets

This hands-on phase helps solidify math concepts by connecting them to real outcomes.

Stage 5: Expand into Advanced Topics

Once your basics are strong, dive into more advanced fields:

- Convex optimization → deeper understanding of model training stability

- Information theory → concepts like entropy and KL divergence, which underpin loss functions such as cross-entropy and help with ML interpretability

- Linear algebra in depth → eigenvalues/eigenvectors, singular value decomposition, etc. for dimensionality reduction

- Metric spaces & norms → crucial for distance-based learning and embeddings

This staged roadmap ensures you progress from intuition → application → mastery, making math feel less like abstract theory and more like a practical toolkit for building intelligent systems.


Concluding Thoughts…

Mathematics truly serves as the bedrock on which machine learning builds its impressive capabilities, and it can become the bedrock of your own skills if you follow this guide closely. Throughout this guide, you’ve discovered how different mathematical domains work together to create intelligent systems that learn from data.

The next time you see a machine learning algorithm perform impressively, you’ll recognize the mathematical principles working behind the scenes. This zero-to-hero guide provides your starting point; now you can confidently begin exploring the fascinating intersection of mathematics and machine learning. Good luck!

FAQs

Q1. What mathematical foundations are essential for machine learning?

The key mathematical foundations for machine learning include linear algebra, statistics, probability theory, and calculus. These provide the tools to represent data, analyze patterns, handle uncertainty, and optimize algorithms.

Q2. Do I need to master all the math before starting machine learning?

No. Research-focused roles that develop new algorithms require deep mathematical knowledge, but many practitioners can be competent without mastering all the underlying details, especially when starting. You can build intuition in linear algebra, statistics, probability, and calculus alongside hands-on practice.

Q3. Which programming languages are most commonly used in machine learning?

Python is currently the most popular language for machine learning, with libraries like scikit-learn, TensorFlow, and PyTorch. However, R is also widely used, especially in statistical learning and data analysis.

Q4. How does deep learning differ from traditional machine learning?

Deep learning is a subset of machine learning that uses neural networks with multiple layers. It excels at automatically learning features from raw data, whereas traditional machine learning often requires more manual feature engineering.

Q5. What are some good resources for beginners to learn machine learning?

Popular resources for beginners include online courses like HCL GUVI’s AI ML Course, Andrew Ng’s Machine Learning course on Coursera, textbooks such as “An Introduction to Statistical Learning” by James et al., and hands-on tutorials using libraries like scikit-learn. Practice with real datasets is also crucial for learning.
