Object Detection using Deep Learning: A Practical Guide

Last updated: Sep 10, 2025

As machines become increasingly adept at interpreting visual information, object detection using deep learning has become one of the most widely deployed advances in computer vision.

From self-driving cars recognizing pedestrians to security systems identifying faces, deep learning, and Convolutional Neural Networks (CNNs) in particular, has changed the way we analyze and process images. Deep neural networks enable machines to interpret visual data with an accuracy that rivals, and on some benchmark tasks surpasses, human perception.

In this article, we’ll explore how neural networks, especially CNNs and YOLO (You Only Look Once), are used for object detection, classification, and localization, along with a practical walkthrough using Google’s Teachable Machine.

Table of contents

- Deep Learning and Neural Networks

- Understanding Neural Networks

- Image Classification using CNNs

- ANN vs CNN vs YOLO – Quick Comparison

- Object Detection: Classification vs Localization

- Object Detection Using YOLO

- How YOLO Works:

- Real-World Applications of Object Detection

- Hands-On Demo: Image Classification with Teachable Machine

- Challenges in Object Detection

- Conclusion

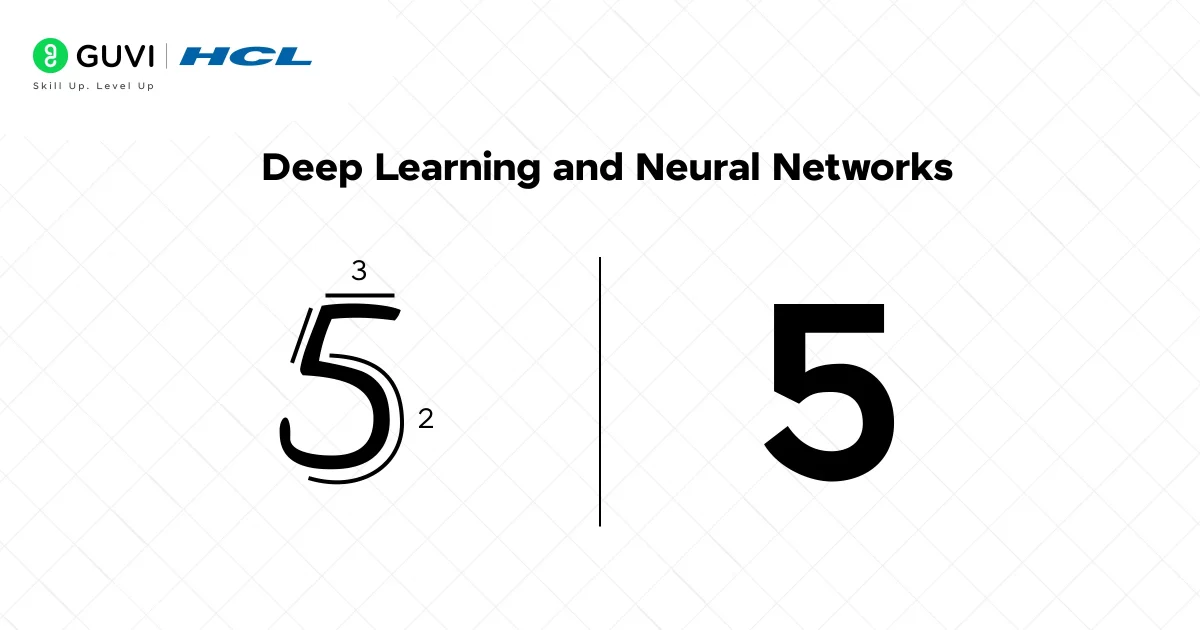

Deep Learning and Neural Networks

Before diving into object detection, take a moment to observe a handwritten digit, say, the number 5. It’s instantly recognizable, even if written in different styles. But how does your brain distinguish that? A specific part of your visual cortex is responsible for recognizing such patterns.

Now, imagine writing a program that can recognize digits from 28×28 pixel images. Sounds complex, right? Traditional programming and even classical machine learning algorithms struggle with such unstructured data.

That’s where neural networks come into play. Let’s open the black box of deep learning to see how object detection works through neural networks, particularly Convolutional Neural Networks (CNNs) and YOLO (You Only Look Once) models.

Understanding Neural Networks

Neural networks are built from nodes, or “neurons,” each of which receives inputs, applies a mathematical transformation, and passes the result forward. Each neuron has weights and a bias, which are adjusted during training: backpropagation computes how the loss changes with respect to each parameter, and gradient descent uses those gradients to update the parameters.

Key Components:

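- Weights & Biases: Learnable parameters that shape how the input is transformed from layer to layer.

- Activation Functions: ReLU, Sigmoid, and Softmax introduce non-linearity. ReLU and Sigmoid shape each neuron’s output, while Softmax turns the final layer’s scores into class probabilities.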

- Loss Function: Measures how far the prediction is from the actual result (e.g., Sum of Squared Residuals).

- Gradient Descent: Optimizes weights to minimize the loss function.
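The components above can be sketched for a single neuron. This is a minimal illustration, not a production training loop: the learning rate, epoch count, and the toy OR dataset are assumptions chosen only to make the example small, and the helper names `train_neuron` and `predict` are hypothetical.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def train_neuron(samples, lr=0.5, epochs=2000):
    """Fit one sigmoid neuron with gradient descent on squared error.

    samples: list of (inputs, target) pairs. lr and epochs are
    illustrative values, not tuned recommendations.
    """
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            # Forward pass: weighted sum plus bias, then activation.
            z = sum(w * x for w, x in zip(weights, inputs)) + bias
            pred = sigmoid(z)
            # Backward pass: chain rule on loss = (pred - target)^2.
            grad_z = 2.0 * (pred - target) * pred * (1.0 - pred)
            # Gradient descent: move each parameter against its gradient.
            weights = [w - lr * grad_z * x for w, x in zip(weights, inputs)]
            bias -= lr * grad_z
    return weights, bias

def predict(weights, bias, inputs):
    """Run the forward pass only."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)

# Toy data (assumed for illustration): the logical OR function.
OR_DATA = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
weights, bias = train_neuron(OR_DATA)
```

After training, `predict(weights, bias, [0, 0])` falls below 0.5 and the other three inputs land above it. Real networks stack many such neurons and let a framework compute the gradients automatically.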

Image Classification using CNNs

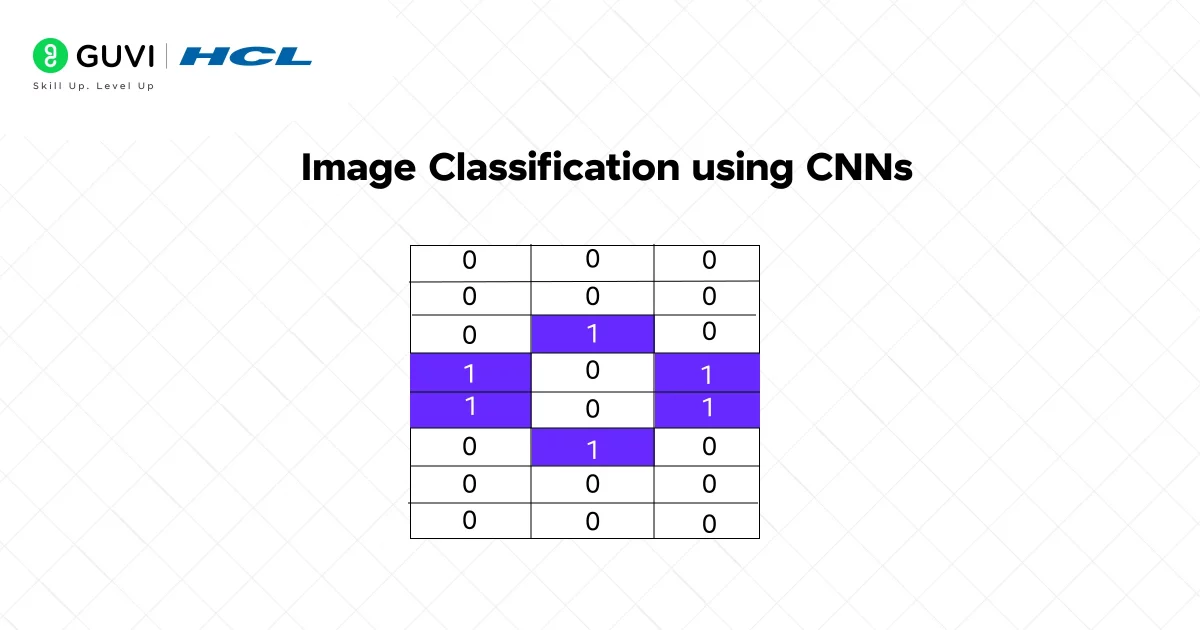

Let’s say we want to classify a 3×8 pixel image into either ‘X’ or ‘O’. Using a basic neural network, we would have:

- 24 input neurons,

- A hidden layer with 2 neurons,

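- 48 weights connecting the input layer to the hidden layer (24 × 2), plus 2 hidden-layer biases.

Now consider a real-world image of 1920×1080 pixels. That’s about 2 million input neurons for a grayscale image (1920 × 1080 = 2,073,600), and three times as many for RGB, so a fully connected network becomes extremely expensive to compute.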
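The arithmetic is easy to check. The sketch below counts the parameters of one fully connected layer. The helper name `dense_param_count` is hypothetical, and keeping the hidden layer at 2 neurons for the large image is an assumption made only to keep the comparison like for like.

```python
def dense_param_count(n_inputs, n_hidden):
    """Return (weights, biases) for one fully connected layer."""
    return n_inputs * n_hidden, n_hidden

# The 3x8 toy image from the example above.
small = dense_param_count(3 * 8, 2)                   # (48, 2)

# A 1920x1080 image with one value per pixel (grayscale).
full_hd_gray = dense_param_count(1920 * 1080, 2)      # (4147200, 2)

# The same image with three colour channels per pixel.
full_hd_rgb = dense_param_count(1920 * 1080 * 3, 2)   # (12441600, 2)
```

Even with only two hidden neurons, the first layer alone needs millions of weights, and a realistic hidden layer would multiply that further.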

Why CNNs?

CNNs drastically reduce the number of parameters by applying filters (kernels) to detect features like edges, shapes, and textures. The steps include:

- Convolution: Sliding a filter over the image and computing dot products to get a feature map.

- Activation (ReLU): Introduces non-linearity and helps extract complex patterns.

- Pooling (Max Pooling): Reduces feature map dimensionality while preserving key information.

- Flattening: Converts pooled features into a 1D vector.

- Fully Connected Layers: Perform classification using output from the convolution layers.
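The first four steps can be sketched in plain Python. This is a minimal illustration under stated assumptions: the 5×5 image and the edge kernel are hand-picked, whereas a real CNN learns its kernels during training, and the final fully connected layer is omitted. Like most deep learning libraries, the "convolution" here does not flip the kernel.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and
    take the dot product at each position to build a feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def relu(feature_map):
    """Replace negative responses with zero."""
    return [[max(0, v) for v in row] for row in feature_map]

def max_pool(feature_map, size=2):
    """Non-overlapping size x size max pooling; ragged edges are dropped."""
    rows = len(feature_map) // size
    cols = len(feature_map[0]) // size
    return [
        [
            max(feature_map[r * size + i][c * size + j]
                for i in range(size) for j in range(size))
            for c in range(cols)
        ]
        for r in range(rows)
    ]

def flatten(feature_map):
    """Convert a 2D feature map into a 1D list."""
    return [v for row in feature_map for v in row]

# 5x5 image with a vertical edge: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-picked kernel that responds where brightness rises from left to right.
kernel = [[-1, 1], [-1, 1]]

features = flatten(max_pool(relu(convolve2d(image, kernel))))
# features == [2, 0, 2, 0]
```

The kernel has only 4 weights, and the same 4 weights are reused at every position in the image. That reuse is why a convolutional layer needs far fewer parameters than a fully connected one.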

ANN vs CNN vs YOLO – Quick Comparison

| Aspect | ANN | CNN | YOLO |
| --- | --- | --- | --- |
| Use Case | Tabular/Structured Data | Image Classification | Real-Time Object Detection |
| Input Size Handling | High computation on large data | Efficient on large image data | Extremely efficient for full images |
| Localization Support | ❌ | ❌ | ✅ |
| Speed | Moderate | Fast | Real-time |

Object Detection: Classification vs Localization

Traditional image classification tells what is in the image. Object detection tells what and where. An early method was the sliding window approach, which cropped many overlapping regions of the image and ran a CNN classifier on each crop.

This was computationally expensive. That’s where YOLO changed the game.
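To get a feel for the cost, the sketch below counts how many crops a sliding window visits. The window sizes and the stride are assumptions picked for illustration, and `count_windows` is a hypothetical helper. Real pipelines varied these settings.

```python
def count_windows(img_w, img_h, win, stride):
    """Number of win x win crops a sliding window visits at one scale."""
    if win > img_w or win > img_h:
        return 0
    across = (img_w - win) // stride + 1
    down = (img_h - win) // stride + 1
    return across * down

# Assumed settings: three square window sizes, stride of 32 pixels.
WINDOW_SIZES = [64, 128, 256]
total = sum(count_windows(1920, 1080, w, stride=32) for w in WINDOW_SIZES)
# total == 4976 separate CNN forward passes for a single 1920x1080 frame
```

Each of those crops needs its own forward pass through the classifier, which is what a single-pass detector avoids.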

Object Detection Using YOLO

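YOLO (You Only Look Once) applies a single CNN to the full image, predicting multiple bounding boxes and class probabilities in one forward pass. This makes it much faster than running a classifier on every crop, and because the network sees the whole image at once, it can use global context when making predictions.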

How YOLO Works:

- Divide the image into an S x S grid.

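- Each grid cell predicts:

  - Bounding box (bx, by, bh, bw): the box center, height, and width

  - Confidence score (Pc): how likely the box is to contain an object

  - Class probabilities (C)

- Only boxes with high confidence are kept, and overlapping boxes for the same object are reduced to the highest-scoring one using non-maximum suppression.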

Because detection takes a single pass, inference is fast enough for real-time applications such as surveillance, robotics, and autonomous vehicles.
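The final selection step is typically a confidence threshold followed by non-maximum suppression (NMS). The sketch below is an illustration under stated assumptions, not YOLO's actual code: the dictionary layout, both threshold values, and the example predictions are made up, and boxes use the (bx, by, bh, bw) order from the list above, in normalized image coordinates.

```python
def corners(box):
    """Convert a center-format (bx, by, bh, bw) box to (x1, y1, x2, y2)."""
    bx, by, bh, bw = box
    return bx - bw / 2, by - bh / 2, bx + bw / 2, by + bh / 2

def iou(a, b):
    """Intersection over union of two center-format boxes."""
    ax1, ay1, ax2, ay2 = corners(a)
    bx1, by1, bx2, by2 = corners(b)
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0

def select_boxes(predictions, conf_threshold=0.5, iou_threshold=0.5):
    """Keep confident boxes, then drop near-duplicates of the same class."""
    candidates = []
    for p in predictions:
        probs = p["class_probs"]
        class_id = max(range(len(probs)), key=lambda i: probs[i])
        # Class-specific score: objectness times best class probability.
        score = p["pc"] * probs[class_id]
        if score >= conf_threshold:
            candidates.append((score, class_id, p["box"]))
    # Greedy NMS: visit boxes from highest to lowest score.
    candidates.sort(key=lambda c: c[0], reverse=True)
    kept = []
    for score, class_id, box in candidates:
        if all(k[1] != class_id or iou(box, k[2]) < iou_threshold for k in kept):
            kept.append((score, class_id, box))
    return kept

# Hypothetical predictions from four grid cells, two classes.
predictions = [
    {"box": (0.50, 0.5, 0.4, 0.4), "pc": 0.9, "class_probs": [0.9, 0.1]},
    {"box": (0.52, 0.5, 0.4, 0.4), "pc": 0.8, "class_probs": [0.8, 0.2]},
    {"box": (0.20, 0.8, 0.2, 0.2), "pc": 0.3, "class_probs": [0.5, 0.5]},
    {"box": (0.80, 0.2, 0.3, 0.2), "pc": 0.7, "class_probs": [0.1, 0.9]},
]
kept = select_boxes(predictions)
```

Here the second box is suppressed because it overlaps heavily with the higher-scoring first box of the same class, and the third falls below the confidence threshold. Two detections remain, one per class.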

Real-World Applications of Object Detection

- Autonomous Vehicles: Detect pedestrians, traffic signs, and obstacles.

- Medical Imaging: Identify tumors and anomalies in scans.

- Retail: Track inventory using real-time camera feeds.

- Agriculture: Use drones for monitoring crop health and pests.

- Security: Facial recognition in smart surveillance systems.

Hands-On Demo: Image Classification with Teachable Machine

Source link: teachablemachine.withgoogle.com

Step 1: Create three classes (Dog, Cat, and Tiger) and upload sample images for each as training data.

Step 2: Train the model with the default parameters.

Step 3: Test the model with new images.

Let’s confuse our model now!

Here we got contradictory results because the new image contains two objects, so the model couldn’t predict it correctly.

Result:

The model accurately classifies single-object images. However, it gets confused by images containing multiple objects, which shows the limitation of basic single-label classifiers and the need for multi-label or object detection models.
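One likely reason for the confusion is the output layer. Single-label classifiers typically end in a softmax, which forces the class scores to sum to 1, so two objects in one image have to share the probability. The sketch below assumes such a softmax head and uses made-up raw scores. It is not taken from Teachable Machine's internals.

```python
import math

def softmax(logits):
    """Single-label output: probabilities compete and sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid_scores(logits):
    """Multi-label output: each class is scored independently."""
    return [1.0 / (1.0 + math.exp(-v)) for v in logits]

CLASSES = ["Dog", "Cat", "Tiger"]
# Hypothetical raw scores for an image containing both a dog and a cat.
logits = [3.0, 3.0, -2.0]

single_label = softmax(logits)         # Dog and Cat each get just under 0.5
multi_label = sigmoid_scores(logits)   # Dog and Cat each score above 0.9
```

With softmax, neither class wins clearly even though both objects are present. With independent sigmoid outputs, both classes can score highly at once, which is the idea behind multi-label models.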

Challenges in Object Detection

- Overlapping objects reduce accuracy.

- Hardware limitations affect real-time performance.

- Limited labeled data can hinder training.

- Class imbalance skews predictions.

Conclusion

In conclusion, object detection through deep learning is reshaping industries by enabling machines to recognize not only what’s in an image but also where it is. Fully connected neural networks struggle with image data, but CNNs and detection models like YOLO offer faster, more scalable solutions.

From cutting the parameter count of image models to predicting bounding boxes in real time, these technologies are powerful tools in the AI arsenal. With further advancements in transfer learning and model optimization, the future of computer vision looks exceptionally bright.
