Neural Networks in Machine Learning: The Artificial

Aug 30, 2025 (Last Updated)

Ever wondered how your phone recognizes faces, your inbox filters spam, or Netflix knows what you’ll like next? Behind the scenes, there’s a powerful concept doing the heavy lifting: Neural Networks in Machine Learning.

Inspired by the human brain, neural networks form the foundation of modern machine learning, especially in areas like image recognition, natural language processing, and even self-driving cars.

Let’s break down what neural networks are, how they work, and why they matter. So, without further ado, let us get started!

Table of contents

- What is a Neural Network?

- Basic Structure of a Neural Network

- Input Layer

- Hidden Layers

- Output Layer

- Key Concepts in the Structure:

- How Does a Neural Network Work?

- Forward Propagation

- Backward Propagation (Training Phase)

- Summary of Steps:

- Supporting Concepts:

- What is an Activation Function?

- Popular Activation Functions:

- Types of Neural Networks

- Feedforward Neural Network (FNN)

- Convolutional Neural Network (CNN)

- Recurrent Neural Network (RNN)

- Long Short-Term Memory (LSTM)

- Generative Adversarial Networks (GANs)

- Transformer Networks

- Best Practices When Working With Neural Networks

- Start Simple

- Normalize Your Data

- Use the Right Activation Functions

- Avoid Overfitting

- Choose the Right Loss Function

- Monitor Training and Validation Metrics

- Test Your Knowledge (MCQs)

- Conclusion

- FAQs

- What is a neural network in simple terms?

- What are neural networks used for in real life?

- What is the difference between AI, Machine Learning, and Neural Networks?

- What is the best programming language to learn neural networks?

- Are neural networks hard to learn?

What is a Neural Network?

A Neural Network is a type of machine learning algorithm inspired by how the human brain processes information. It’s made up of layers of interconnected nodes called neurons that can learn to recognize patterns in data.

These networks are capable of approximating complex functions and relationships, making them extremely powerful for tasks such as image recognition, speech processing, language translation, and more.

The inspiration comes from biological neurons in the brain. Just like a neuron receives signals from other neurons, processes them, and passes the output to the next, an artificial neuron receives input data, applies mathematical transformations, and forwards the output. But instead of electrical impulses, artificial neurons use numbers, functions, and weighted connections.

Basic Structure of a Neural Network

A typical neural network consists of three main types of layers: the Input Layer, the Hidden Layer(s), and the Output Layer. Each layer contains nodes (or neurons), and every connection between nodes in adjacent layers carries a weight. These layers form the “network” part of the neural network.

Let’s explore each of these components in detail:

Input Layer

This is where the network receives data. The number of neurons in the input layer corresponds to the number of features or variables in the dataset. For example, if you’re feeding in a grayscale image of 28×28 pixels, your input layer will have 784 nodes (since 28×28 = 784).

Hidden Layers

The hidden layers do most of the computation. Each neuron in a hidden layer takes inputs from the previous layer, processes them, and passes the output to the next layer. You can have a single hidden layer or several; a network with multiple hidden layers is called a deep neural network.

What’s happening here is not visible to us directly (hence “hidden”), but this is where the model detects patterns like edges in an image or sentiment in a sentence.

Output Layer

This layer provides the final result. For binary classification, it might be a single neuron with a sigmoid activation function. For multi-class classification, it could have as many neurons as there are categories, using a softmax activation function to output probabilities.

Key Concepts in the Structure:

- Weights: Each connection between neurons carries a weight that adjusts as the model learns.

- Bias: A learnable value added to a neuron’s weighted sum of inputs (before the activation function), which lets the neuron shift its output and helps the model fit the data better.

- Activation Function: Adds non-linearity, allowing the network to learn complex patterns.
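To make these three ideas concrete, here is a minimal pure-Python sketch of a single neuron: it multiplies each input by its weight, adds the bias, and passes the result through an activation function (sigmoid in this example). The function names and numbers are hypothetical, chosen only for illustration.

```python
import math

def sigmoid(z):
    """Logistic sigmoid: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of the inputs, plus the bias, then the activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Hypothetical values: z = 1.0*0.5 + 2.0*(-0.25) + 0.1 = 0.1
x = [1.0, 2.0]
w = [0.5, -0.25]
b = 0.1
print(neuron(x, w, b))  # sigmoid(0.1), roughly 0.525
```

During training, only `w` and `b` change; the activation function itself stays fixed.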

How Does a Neural Network Work?

At a high level, a neural network works by taking inputs, transforming them through layers of neurons, and producing an output. This process involves a combination of linear algebra, non-linear functions, and optimization algorithms.

The real power of neural networks comes from their ability to learn from data by adjusting internal parameters through training. Let’s break it down into the two key phases:

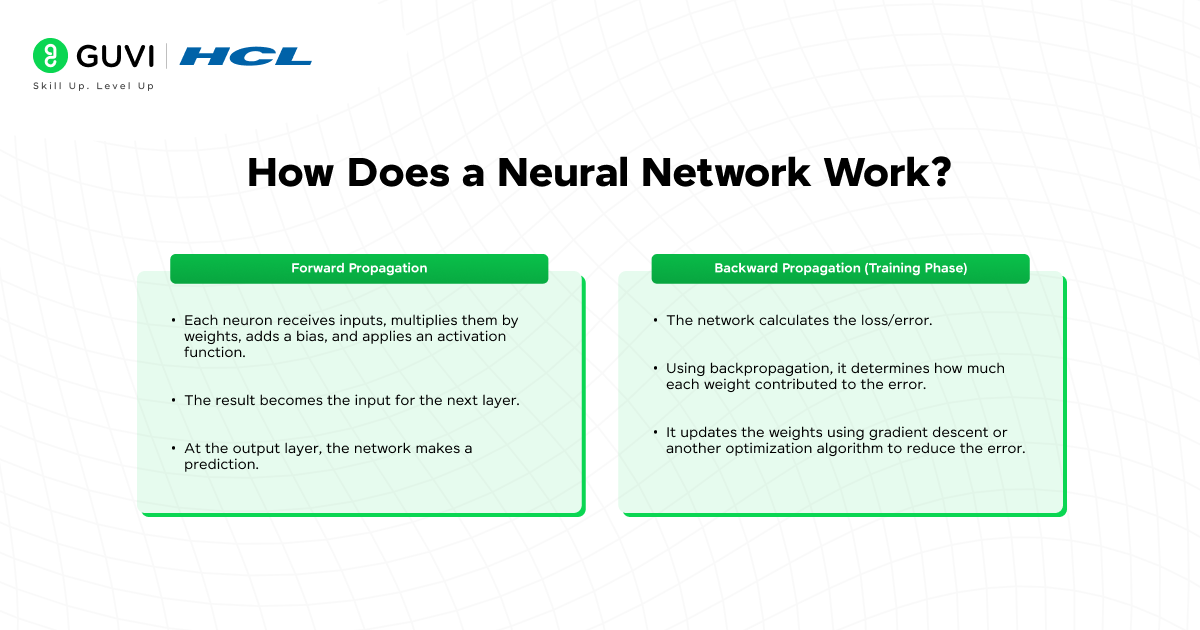

1. Forward Propagation

In this phase, the input data flows through the network, layer by layer, until it reaches the output.

- Each neuron receives inputs, multiplies them by weights, adds a bias, and applies an activation function.

- The result becomes the input for the next layer.

- At the output layer, the network makes a prediction.

This phase ends with the model producing a prediction based on current parameters.
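Here is a small sketch of forward propagation, assuming a hypothetical network with 2 inputs, one hidden layer of 3 ReLU neurons, and 1 sigmoid output neuron. The weights are hand-picked for illustration; in practice they start random and are learned.

```python
import math

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation):
    """One fully connected layer: each row of `weights` belongs to one neuron."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def forward(x, params):
    """Pass the input through each layer in order; each layer's output feeds the next."""
    a = x
    for weights, biases, activation in params:
        a = dense(a, weights, biases, activation)
    return a

# Hypothetical 2-3-1 network: (weights, biases, activation) per layer
params = [
    ([[0.2, -0.4], [0.7, 0.1], [-0.5, 0.3]], [0.0, 0.1, 0.0], relu),  # hidden layer
    ([[0.6, -0.2, 0.4]], [0.05], sigmoid),                              # output layer
]
prediction = forward([1.0, 0.5], params)
print(prediction)  # a single probability-like value from the output neuron
```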

2. Backward Propagation (Training Phase)

After the model makes a prediction, it needs to evaluate how good that prediction is. This is done using a loss function, which measures the difference between the predicted output and the actual value.

- The network calculates the loss/error.

- Using backpropagation, it determines how much each weight contributed to the error.

- It updates the weights using gradient descent or another optimization algorithm to reduce the error.

This process is repeated over many batches and many full passes through the training data (epochs) until the loss stops improving.
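The loop below is a minimal sketch of this cycle for the simplest possible case: a single sigmoid neuron trained with binary cross-entropy and plain gradient descent (one sample per update). For this particular combination, the chain rule reduces the gradient of the loss with respect to the neuron’s weighted sum to `p - y`. The toy dataset (the logical OR function) and the learning rate are assumptions chosen for illustration; real networks apply the same idea layer by layer.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def bce(p, y, eps=1e-12):
    """Binary cross-entropy loss for one prediction p against label y."""
    return -(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps))

# Hypothetical toy dataset: the logical OR function
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]
w = [0.0, 0.0]
b = 0.0
learning_rate = 0.5

for epoch in range(1000):
    total_loss = 0.0
    for x, y in data:
        # Forward propagation: prediction from the current parameters
        p = sigmoid(w[0] * x[0] + w[1] * x[1] + b)
        total_loss += bce(p, y)
        # Backward propagation: for sigmoid + BCE, dLoss/dz simplifies to (p - y)
        dz = p - y
        # Gradient descent: move each parameter against its gradient
        w[0] -= learning_rate * dz * x[0]
        w[1] -= learning_rate * dz * x[1]
        b -= learning_rate * dz

predictions = [round(sigmoid(w[0] * x[0] + w[1] * x[1] + b)) for x, _ in data]
print(total_loss, predictions)
```

Starting from all-zero weights, the loss begins at 4 × ln 2 (about 2.77) and shrinks steadily as the weights adjust.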

Summary of Steps:

- Step 1: Accept input data

- Step 2: Pass it through multiple layers (forward propagation)

- Step 3: Compare output with actual values using a loss function

- Step 4: Adjust weights using backpropagation and optimization

- Step 5: Repeat until the model learns well enough

Supporting Concepts:

- Epoch: One full cycle through the entire training dataset.

- Batch Size: Number of training samples used in one update.

- Learning Rate: Controls how much the weights are adjusted during training.
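The sketch below shows how these three terms relate, assuming a hypothetical dataset of 10 samples and a batch size of 4. The gradient computation is omitted; the point is the bookkeeping: each batch triggers one weight update, and one epoch ends after every sample has been seen once.

```python
import math
import random

def minibatches(samples, batch_size, rng):
    """Shuffle a copy of the data, then yield consecutive batches of at most batch_size samples."""
    order = list(samples)
    rng.shuffle(order)
    for start in range(0, len(order), batch_size):
        yield order[start:start + batch_size]

def sgd_step(weight, gradient, learning_rate):
    """The learning rate scales how far a single update moves a weight."""
    return weight - learning_rate * gradient

samples = list(range(10))   # hypothetical dataset of 10 samples
batch_size = 4
epochs = 3
rng = random.Random(0)      # fixed seed so the shuffle is reproducible

updates = 0
for epoch in range(epochs):  # one epoch = one full pass over every sample
    seen = 0
    for batch in minibatches(samples, batch_size, rng):
        seen += len(batch)
        updates += 1         # one weight update per batch (gradient step omitted)
    assert seen == len(samples)

# Batches of 4, 4 and 2 give ceil(10 / 4) = 3 updates per epoch
print(updates, epochs * math.ceil(len(samples) / batch_size))
print(sgd_step(1.0, 0.5, learning_rate=0.1), sgd_step(1.0, 0.5, learning_rate=0.01))
```

A larger learning rate takes bigger steps, which can speed up training but may overshoot; a smaller one is more stable but slower.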


What is an Activation Function?

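An activation function is a mathematical function that determines the output of a neural network node from its weighted input. Without activation functions, no matter how many layers your network has, the layers would collapse into a single linear transformation, so the whole network would be no more expressive than a linear model such as linear regression.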

In other words, without them, neural networks would not be able to learn complex patterns, non-linear relationships, or handle tasks like image classification or language translation.

You can think of activation functions as gatekeepers; they decide which information gets passed to the next layer and how. This non-linearity is essential because real-world data is rarely linear.
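A quick numeric check of the “collapse” claim: two linear layers with no activation between them give exactly the same result as one layer whose weight matrix is their product. Inserting a ReLU between them breaks this equivalence. The matrices are hypothetical, and biases are left out for brevity (with biases, the stack is still equivalent to a single affine layer).

```python
def matmul(A, B):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def apply(W, x):
    """Apply weight matrix W to a vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

# Two hypothetical layers with no activation function
W1 = [[1.0, 2.0], [3.0, -1.0]]
W2 = [[0.5, 1.0], [-2.0, 0.0]]
x = [1.0, 4.0]

two_layers = apply(W2, apply(W1, x))
one_layer = apply(matmul(W2, W1), x)           # single layer with W = W2 x W1
print(two_layers, one_layer)                   # identical

hidden = [max(0.0, h) for h in apply(W1, x)]   # add a ReLU between the layers
with_relu = apply(W2, hidden)
print(with_relu)                               # no longer matches the single linear layer
```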

Popular Activation Functions:

1. ReLU (Rectified Linear Unit)

ReLU is the most widely used activation function in modern neural networks. It outputs zero if the input is negative and outputs the same value if it’s positive.

f(x) = max(0, x)

Why it works well:

- It introduces non-linearity.

- It speeds up training because of its simplicity.

- Its gradient is 1 for every positive input, so it largely avoids the vanishing-gradient problem that affects sigmoid and tanh.

But ReLU isn’t perfect. Its gradient is zero for negative inputs, so if a neuron’s input stays in the negative region, its weights stop updating and it may stop learning entirely. This is known as the “dying ReLU” problem.
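Here is ReLU and its derivative in plain Python, plus a hypothetical badly initialized neuron (a large negative bias) that illustrates the dying ReLU problem: every pre-activation is negative, so every gradient is zero and gradient descent never changes its weights. The derivative at exactly 0 is set to 0, which is a common convention rather than a mathematical necessity.

```python
def relu(x):
    return max(0.0, x)

def relu_grad(x):
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise (0 at x == 0 by convention)."""
    return 1.0 if x > 0 else 0.0

for z in (-3.0, -0.5, 0.0, 2.0):
    print(z, relu(z), relu_grad(z))

# Hypothetical "dead" neuron: w * x + b is negative for every input in [0, 1]
w, b = 1.0, -10.0
inputs = [0.0, 0.5, 1.0]
grads = [relu_grad(w * x + b) for x in inputs]
print(grads)  # all zeros, so the weight updates are all zero too
```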

2. Sigmoid

Sigmoid squashes input values into a range between 0 and 1. It was one of the original choices for binary classification problems.

f(x) = 1 / (1 + e^-x)

Pros:

- Smooth curve, good for probability-based outputs.

Cons:

- Saturates for large positive or negative inputs, where its gradient approaches zero and “kills” the learning signal.

- Causes slow convergence in deep networks.
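The sketch below prints sigmoid’s gradient, s(x) × (1 − s(x)), at a few inputs. It peaks at 0.25 when x = 0 and becomes tiny once the function saturates. Ignoring the weights, each sigmoid layer that the error passes through during backpropagation multiplies the gradient by at most 0.25, which is why deep sigmoid networks tend to converge slowly.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_grad(x):
    """Derivative of sigmoid: s(x) * (1 - s(x)); its maximum is 0.25 at x = 0."""
    s = sigmoid(x)
    return s * (1.0 - s)

for x in (0.0, 2.0, 5.0, 10.0):
    print(f"x={x:5.1f}  sigmoid={sigmoid(x):.5f}  grad={sigmoid_grad(x):.5f}")

# Best case through 10 stacked sigmoid layers (weights ignored): 0.25 ** 10
print(0.25 ** 10)
```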

3. Tanh (Hyperbolic Tangent)

Tanh is similar to sigmoid but squashes input values between -1 and 1.

f(x) = (e^x - e^-x) / (e^x + e^-x)

Why it can be better than sigmoid:

- The output is zero-centered, which tends to make gradient-based training better behaved.

Cons:

- It still suffers from vanishing gradients at extreme input values.
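To see both points numerically, the sketch below averages tanh and sigmoid outputs over a set of inputs that is symmetric around zero (the inputs are hypothetical): tanh averages to about 0, sigmoid to about 0.5. It also shows that tanh’s gradient, 1 − tanh(x)², is 1 at x = 0 but nearly vanishes at x = 5.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def tanh_grad(x):
    """Derivative of tanh: 1 - tanh(x)**2; its maximum is 1 at x = 0."""
    return 1.0 - math.tanh(x) ** 2

inputs = [-2.0, -1.0, 0.0, 1.0, 2.0]
tanh_mean = sum(math.tanh(x) for x in inputs) / len(inputs)
sigmoid_mean = sum(sigmoid(x) for x in inputs) / len(inputs)
print(tanh_mean, sigmoid_mean)   # tanh is centered on 0, sigmoid on 0.5

# tanh still saturates: its gradient is tiny for large |x|
print(tanh_grad(0.0), tanh_grad(5.0))
```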

4. Softmax

Softmax is used primarily in the output layer for multi-class classification problems. It converts a vector of raw scores into probabilities that sum to 1:

f(x_i) = e^(x_i) / Σ_j e^(x_j)

When to use:

- For problems where you need to classify data into more than two categories (e.g., digit classification, emotion detection).
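A minimal softmax in plain Python is shown below. Subtracting the largest score before exponentiating does not change the result (the factor cancels in the ratio) but prevents `math.exp` from overflowing on large scores, a standard trick in practical implementations. The three class scores are hypothetical.

```python
import math

def softmax(scores):
    """Convert raw scores (logits) into probabilities that sum to 1."""
    m = max(scores)                              # shift for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.1]                         # hypothetical scores for 3 classes
probs = softmax(logits)
print([round(p, 3) for p in probs], sum(probs))  # the highest score gets the highest probability
```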

Types of Neural Networks

Not all neural networks are created equal. Depending on the kind of data you’re working with, be it images, sequences, time-series, or tabular, different types of networks are more effective.

1. Feedforward Neural Network (FNN)

This is the simplest type of neural network where the data moves in one direction, from input to output, without any loops or cycles. Each layer feeds its output to the next.

Use cases:

- Binary or multi-class classification

- Simple regression problems

Why it’s foundational:

- Easy to implement and understand.

- Basis for more complex architectures.

2. Convolutional Neural Network (CNN)

CNNs are designed for working with image data. They use convolutional layers to automatically detect and learn features such as edges, textures, and shapes.

Structure highlights:

- Convolutional layers extract spatial features.

- Pooling layers downsample the feature maps, reducing their spatial size.

- Fully connected layers at the end make the final prediction.
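The following plain-Python sketch shows the first two building blocks on a tiny hypothetical 5×5 image whose left side is dark and right side is bright. A hand-made 2×2 kernel responds to that vertical edge, and 2×2 max pooling halves the feature map in each direction. Like most deep learning libraries, the “convolution” here is technically a cross-correlation (the kernel is not flipped); in a real CNN the kernel values are learned rather than hand-picked.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (no padding, stride 1): slide the kernel and sum elementwise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + di][j + dj] * kernel[di][dj]
                 for di in range(kh) for dj in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def max_pool(fmap, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size window."""
    return [[max(fmap[i + di][j + dj] for di in range(size) for dj in range(size))
             for j in range(0, len(fmap[0]) - size + 1, size)]
            for i in range(0, len(fmap) - size + 1, size)]

# Hypothetical 5x5 grayscale image: dark left side, bright right side
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-made vertical-edge detector
kernel = [[-1, 1],
          [-1, 1]]

features = conv2d(image, kernel)   # 4x4 feature map, strongest where the edge is
pooled = max_pool(features)        # 2x2 after pooling
print(features)
print(pooled)
```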

Common applications:

- Image classification (e.g., identifying cats vs dogs)

- Object detection

- Medical imaging (e.g., detecting tumors in X-rays)

3. Recurrent Neural Network (RNN)

RNNs are suited for sequential data, where the order of inputs matters. They have loops that allow information to persist over time.

Key idea:

- The hidden state computed at one step is fed back in at the next step, giving the network a memory of past inputs.
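Here is a minimal sketch of that recurrence for a simple RNN with a single scalar input and hidden state; the weight values are hypothetical. The same weights are reused at every step, and feeding the same values in a different order produces a different final state, which is exactly why RNNs suit ordered data. Notice also how the influence of an early input shrinks over steps, a small-scale view of why long-term dependencies are hard.

```python
import math

def rnn_step(x_t, h_prev, w_x, w_h, b):
    """One step of a simple RNN: mix the current input with the previous hidden state."""
    return math.tanh(w_x * x_t + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.8, w_h=0.5, b=0.0):
    """Run the RNN over a sequence (hypothetical default weights) and return every hidden state."""
    h = 0.0                   # initial hidden state
    states = []
    for x_t in sequence:      # the same weights are reused at every time step
        h = rnn_step(x_t, h, w_x, w_h, b)
        states.append(h)
    return states

# Same values, different order: the final hidden states differ
print(run_rnn([1.0, 0.0, 0.0]))   # the early input fades step by step
print(run_rnn([0.0, 0.0, 1.0]))
```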

Use cases:

- Time-series forecasting (e.g., stock prediction)

- Language modeling and text generation

- Speech recognition

Limitations:

- Prone to vanishing gradients.

- Hard to learn long-term dependencies.

4. Long Short-Term Memory (LSTM)

LSTM is a special kind of RNN designed to better remember long-term dependencies. It uses a more complex architecture with gates to decide what to keep or forget.
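The sketch below implements one step of the standard LSTM cell with scalar values so the gates are easy to follow: a forget gate, an input gate, an output gate, and a candidate value that together update a separate cell state. The parameter values are hypothetical and chosen so that the forget gate stays nearly open and the input gate nearly closed; as a result, a value stored in the cell survives several steps almost unchanged.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a standard LSTM cell with scalar input and state.

    p maps each gate name to (input weight, recurrent weight, bias).
    """
    def pre(name):
        w, u, b = p[name]
        return w * x + u * h_prev + b

    f = sigmoid(pre("forget"))        # how much of the old cell state to keep
    i = sigmoid(pre("input"))         # how much new information to write
    o = sigmoid(pre("output"))        # how much of the cell state to expose
    g = math.tanh(pre("candidate"))   # candidate new content
    c = f * c_prev + i * g            # updated long-term memory (cell state)
    h = o * math.tanh(c)              # new hidden state / output
    return h, c

# Hypothetical parameters: keep the memory, block most new writes
params = {
    "forget": (0.0, 0.0, 5.0),
    "input": (0.0, 0.0, -5.0),
    "output": (0.0, 0.0, 0.0),
    "candidate": (1.0, 0.0, 0.0),
}
h, c = 0.0, 1.0                       # start with a value stored in the cell
for x in [0.5, -0.3, 0.9]:
    h, c = lstm_step(x, h, c, params)
print(round(c, 3), round(h, 3))       # the cell state is still close to 1.0
```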

Best for:

- Text generation

- Translation

- Sequential decision-making tasks

5. Generative Adversarial Networks (GANs)

GANs consist of two networks, a generator and a discriminator, competing against each other. The generator creates synthetic (fake) data, while the discriminator learns to tell whether an input is real or generated; each improves by trying to beat the other.

Why they’re cool:

- Capable of generating realistic images, videos, and even audio.

- Used in art, synthetic data generation, and style transfer.
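The competition is expressed through two losses. The sketch below computes only those losses from hypothetical discriminator outputs (probabilities that an input is real); a full GAN also needs the two networks and alternating gradient updates, which are omitted. The discriminator uses binary cross-entropy with label 1 for real data and 0 for generated data, and the generator uses the common “non-saturating” form, −log D(G(z)).

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: real samples labelled 1, generated samples labelled 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake):
    """Non-saturating generator loss: it falls as the discriminator is fooled."""
    return -math.log(d_fake)

# Hypothetical discriminator outputs at two points in training
early = {"d_real": 0.9, "d_fake": 0.1}   # fakes are easy to spot
later = {"d_real": 0.6, "d_fake": 0.5}   # fakes are harder to tell apart

for name, d in (("early", early), ("later", later)):
    print(name,
          round(discriminator_loss(d["d_real"], d["d_fake"]), 3),
          round(generator_loss(d["d_fake"]), 3))
```

As the generator improves, its loss drops while the discriminator’s loss rises, which is the tug-of-war described above.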

6. Transformer Networks

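Transformers have taken over the field of natural language processing. Unlike RNNs, they don’t process a sequence one step at a time; instead, a mechanism called self-attention relates every position in the sequence to every other position at once, which makes them more parallelizable and scalable.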
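The core operation is scaled dot-product attention: each query scores every key, the scores are scaled by √d_k and turned into weights with softmax, and the output is a weighted average of the values. In this plain-Python sketch, a hypothetical 3-token sequence with 2-dimensional embeddings is used directly as queries, keys, and values; real Transformers first apply learned projection matrices and use many attention heads.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attention(queries, keys, values):
    """Scaled dot-product attention. Each query row is independent of the others,
    so all positions could be computed in parallel (no step-by-step recurrence)."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        weights = softmax([dot(q, k) / math.sqrt(d_k) for k in keys])
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

# Hypothetical token embeddings, used directly as Q, K and V for simplicity
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = attention(tokens, tokens, tokens)
print([[round(v, 3) for v in row] for row in out])
```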

Examples:

- BERT, GPT, and other large language models.

Applications:

- Machine translation

- Question answering

- Chatbots

Did You Know?

Neural networks were first conceptualized in the 1940s, but only gained real traction after computing power caught up in the 2010s.

Best Practices When Working With Neural Networks

Getting a neural network to work isn’t just about stacking layers and hitting run. It involves a combination of good design, preprocessing, parameter tuning, and sometimes even a little luck. But some practices significantly increase your chances of success.

1. Start Simple

It’s tempting to build a huge, complex model from the get-go. Don’t. Start with a basic network. Get it to learn something simple, then scale.

- Begin with fewer layers and neurons.

- Add complexity only if needed.

2. Normalize Your Data

Neural networks train better when inputs are on a similar scale. Normalize or standardize your inputs before feeding them into the model.

- Scale pixel values between 0 and 1.

- Standardize numerical data to zero mean and unit variance.
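Both techniques are one-liners in practice. The sketch below uses only Python’s standard `statistics` module; the 0 to 255 default range assumes 8-bit pixel values, and the ages are a hypothetical numeric feature. When you do this for real, compute the mean and standard deviation on the training set only and reuse them for validation and test data.

```python
import statistics

def min_max_scale(values, lo=0.0, hi=255.0):
    """Map values from [lo, hi] to [0, 1]; the default range assumes 8-bit pixels."""
    return [(v - lo) / (hi - lo) for v in values]

def standardize(values):
    """Shift to zero mean and scale to unit variance (population standard deviation)."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    return [(v - mean) / std for v in values]

pixels = [0, 64, 128, 255]
print(min_max_scale(pixels))

ages = [22.0, 35.0, 47.0, 58.0]   # hypothetical numeric feature
z = standardize(ages)
print([round(v, 3) for v in z])
```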

3. Use the Right Activation Functions

- ReLU for hidden layers (especially in deep networks)

- Sigmoid or softmax for output layers (depending on your task)

4. Avoid Overfitting

If your model performs great on training data but poorly on test data, it’s probably overfitting.

Mitigation strategies:

- Use dropout layers.

- Apply L1/L2 regularization.

- Get more data or use data augmentation.

- Use early stopping based on validation loss.
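Early stopping is the easiest of these to show without a framework. The sketch below scans a list of hypothetical per-epoch validation losses and stops once the loss has failed to improve for `patience` consecutive epochs (the name and default of `patience` are assumptions for illustration). You would then restore the weights saved at the best epoch.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return (epoch_to_stop_at, best_epoch) for a list of per-epoch validation losses."""
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch   # never triggered: train to the end

# Hypothetical validation losses: improving, then rising as the model starts to overfit
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.67]
print(early_stopping_epoch(val_losses, patience=3))   # stop at epoch 6, keep epoch 3
```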

5. Choose the Right Loss Function

The choice of loss function should align with your task:

- Binary cross-entropy: binary classification

- Categorical cross-entropy: multi-class classification

- Mean squared error (MSE): regression
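Here are the three losses written out in plain Python. The small epsilon that guards against log(0) is an implementation detail chosen for this sketch, and the predictions are hypothetical. Note how cross-entropy punishes a confident wrong answer far more than a confident right one.

```python
import math

EPS = 1e-12  # guards against log(0)

def binary_cross_entropy(y_true, p):
    """y_true in {0, 1}; p is the predicted probability of class 1 (e.g. a sigmoid output)."""
    p = min(max(p, EPS), 1 - EPS)
    return -(y_true * math.log(p) + (1 - y_true) * math.log(1 - p))

def categorical_cross_entropy(y_onehot, probs):
    """y_onehot marks the true class; probs is a probability vector (e.g. a softmax output)."""
    return -sum(t * math.log(max(q, EPS)) for t, q in zip(y_onehot, probs))

def mean_squared_error(y_true, y_pred):
    return sum((t - q) ** 2 for t, q in zip(y_true, y_pred)) / len(y_true)

print(binary_cross_entropy(1, 0.9))   # confident and correct: small loss
print(binary_cross_entropy(1, 0.1))   # confident and wrong: large loss
print(categorical_cross_entropy([0, 1, 0], [0.2, 0.7, 0.1]))
print(mean_squared_error([3.0, 5.0], [2.5, 6.0]))
```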

6. Monitor Training and Validation Metrics

Don’t rely on accuracy alone, especially when your classes are imbalanced. Depending on your use case, also look at:

- Precision

- Recall

- F1 Score

- Confusion Matrix

Use tools like TensorBoard to visualize training and validation metrics.
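Why accuracy alone can mislead: in the hypothetical dataset below, only 2 of 10 samples are positive, so a useless model that always predicts “negative” still scores 80% accuracy. The confusion-matrix counts, precision, recall, and F1 expose the problem immediately. This is a plain-Python sketch; libraries such as scikit-learn provide ready-made versions of these metrics.

```python
def binary_metrics(y_true, y_pred):
    """Confusion-matrix counts plus precision, recall, and F1 for labels in {0, 1}."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"tp": tp, "fp": fp, "fn": fn, "tn": tn,
            "precision": precision, "recall": recall, "f1": f1}

# Hypothetical imbalanced labels: 2 positives among 10 samples
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
always_negative = [0] * 10

accuracy = sum(t == p for t, p in zip(y_true, always_negative)) / len(y_true)
print(accuracy)                                  # looks good...
print(binary_metrics(y_true, always_negative))   # ...but recall is 0: every positive is missed
```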

Test Your Knowledge (MCQs)

Q1. What does an activation function do in a neural network?

A. Calculates accuracy

B. Normalizes data

C. Adds non-linearity

D. Updates weights

Answer: C

Q2. Which neural network is best for image processing?

A. FNN

B. CNN

C. RNN

D. GAN

Answer: B

Q3. What’s the role of the loss function?

A. Increases prediction score

B. Measures prediction error

C. Adds memory to the model

D. Compresses input

Answer: B

Q4. What causes vanishing gradients?

A. Too much training data

B. Too few neurons

C. Deep networks with sigmoid functions

D. Low learning rate

Answer: C


Conclusion

In conclusion, neural networks are no longer optional; they’re essential. Whether you’re building a chatbot, training a model to diagnose cancer, or just trying to understand AI better, neural networks are the stepping stone.

The best part? You don’t need to be a math genius to get started. With tools like TensorFlow, Keras, and PyTorch, anyone can dive in.

So if you’re planning to make your mark in the world of machine learning, neural networks are where you begin. Start simple, experiment often, and never stop learning.

FAQs

1. What is a neural network in simple terms?

A neural network is a computer system loosely inspired by how the human brain works. It’s made of layers of “neurons” (nodes) that learn from data. These layers process input data (like images or numbers), recognize patterns, and make decisions, like predicting whether an email is spam.

2. What are neural networks used for in real life?

Neural networks are behind many things you use every day. They power facial recognition in your phone, product recommendations on Amazon, voice assistants like Alexa, self-driving cars, medical diagnosis tools, fraud detection systems, and even ChatGPT.

3. What is the difference between AI, Machine Learning, and Neural Networks?

Think of it like this:

AI (Artificial Intelligence) is the big umbrella.

Machine Learning (ML) is a subset of AI that helps systems learn from data.

Neural Networks are a type of ML model, inspired by the human brain, used to solve complex problems.

4. What is the best programming language to learn neural networks?

Python is by far the most popular and beginner-friendly language for neural networks. Libraries like TensorFlow, PyTorch, and Keras make it easy to build and train powerful models, even if you’re just starting out.

5. Are neural networks hard to learn?

Not really, especially with today’s tools. The math can be deep, but you don’t need to master calculus to start. If you understand the basics of Python, loops, and arrays, and are willing to practice, you can learn how to build and train neural networks with hands-on projects.
