Critical Section Problem in OS (Operating System)

Last updated: Oct 23, 2025 · 5 min read

Imagine two people with a joint bank account trying to withdraw cash at the same moment from different places. It is an unusual situation to picture, but let’s run with it. Suppose the account holds ₹2,000, and one person withdraws ₹1,000 while the other withdraws ₹500. Since both withdrawals start from the same balance, does the bank system display ₹1,500 to one and ₹1,000 to the other?

It doesn’t happen like that, as we all know. The reason is that the system handling the account treats the balance update as a critical section, just as an operating system does with its shared resources. It lets only one account holder update the balance at a time, so the second withdrawal starts from the result of the first and the final balance is ₹500. A good solution also gives every user a fair turn without unnecessary waiting.

In this blog, we will cover the significant aspects of the critical section problem in OS (Operating System). So, without any further ado, let’s begin our discussion.

Table of contents

- What is a Critical Section and the Critical Section Problem in OS (Operating Systems)?

- Critical Section Examples in OS

- Solutions For the Critical Section Problem

- Peterson's Solution

- Locks / Mutexes

- Semaphores

- Monitors

- Critical Section Synchronization Working Mechanism

- Deadlock and Starvation in OS (Operating Systems)

- Deadlock

- Starvation

- Conclusion

- FAQs

- What are the best solutions to solve the critical section problem in OS (operating systems)?

- What are the three main protocols that should be followed before solving any critical section problem?

- Why does the critical section problem occur?

What is a Critical Section and the Critical Section Problem in OS (Operating Systems)?

(Section)

When any program runs on the OS, it utilizes various resources such as data variables, memory space, files, data structures, functions or methods, process tables, ready queues, and many more. However, not every part of the program touches these shared resources; only certain parts do, and multiple processes may be executing those parts at the same time.

The part of a program in which a process accesses a shared resource is known as the Critical Section. If more than one process executes its critical section on the same resource at the same time, the shared data can become corrupted or inconsistent.

(Problem)

This risk gives rise to the Critical Section Problem. The Critical Section Problem in OS is the fundamental challenge of designing a protocol that lets processes share and use resources without interfering with each other.

The primary purpose of guarding a critical section is to protect shared resources from being corrupted by concurrent access. It keeps the resources safe by letting processes access them only under controlled conditions.
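To make the risk concrete, here is a minimal sketch of the joint-account example from the introduction. It does not use real threads; the interleaving is written out by hand so the outcome is repeatable. The function names `run_unsynchronized` and `run_serialized` are made up for this illustration.

```python
def run_unsynchronized(balance, amount_a, amount_b):
    """Both withdrawals read the balance before either one writes it back."""
    read_a = balance                # A reads 2000
    read_b = balance                # B reads 2000 as well
    balance = read_a - amount_a     # A writes its result
    balance = read_b - amount_b     # B overwrites it; A's withdrawal is lost
    return balance


def run_serialized(balance, amount_a, amount_b):
    """One withdrawal finishes its read-modify-write before the other starts."""
    balance = balance - amount_a
    balance = balance - amount_b
    return balance


print(run_unsynchronized(2000, 1000, 500))  # 1500
print(run_serialized(2000, 1000, 500))      # 500
```

The unsynchronized version ends with ₹1,500 in the account even though ₹1,500 was withdrawn from ₹2,000. This kind of lost update is what critical section handling is meant to prevent.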

Critical Section Examples in OS

- Memory Allocation: The code that allocates and frees memory for processes is a critical section. If two processes are assigned the same memory block, or release memory at the same time, the allocator's bookkeeping can become inconsistent, which may lead to overwritten data or memory leaks. The OS avoids this issue through synchronization tools.

- Process Table: The process table stores information about all running processes, and the code that updates it is a critical section. If several processes modify the table at the same time, its entries can become inconsistent or corrupted. To solve this critical section problem, the OS lets only one process at a time update the table.

- File Management: Sometimes, multiple processes need to update the same file. Updating a shared file then becomes a critical section. If several processes write to the same file simultaneously, their writes can interleave and leave the content garbled. So, to ensure file integrity and consistency, the OS allows only one process to write at a time.

Solutions For the Critical Section Problem

Before discussing the solutions for the critical section problem, let us look at the three requirements that any valid solution must satisfy:

- Mutual Exclusion

It is the most basic rule of all, which requires that only one process can be inside the critical section at a time. It prevents two or more processes from interfering with or altering shared resources simultaneously.

- Progress

Progress means that if no process is inside the critical section and one or more processes want to enter, one of them must be allowed in without indefinite delay. Only the processes that are actually trying to enter take part in that decision; a process busy in its remainder section cannot hold the others back.

- Bounded Waiting

This requirement ensures that every process has a fair chance to enter the critical section without experiencing starvation. Once a process has asked to enter, there is a limit on how many times other processes may enter ahead of it, so no process is forced to wait indefinitely while others keep going in.
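Two of these requirements can be checked mechanically on a recorded sequence of events. The sketch below is only an illustration: the event format (`"request"`, `"enter"`, `"exit"`), the function name `check_trace`, and the `bound` parameter are assumptions made for this example, and Progress is not checked because it depends on timing that a simple trace does not capture.

```python
def check_trace(events, bound):
    """Return (mutual_exclusion_ok, bounded_waiting_ok) for a list of
    (process, action) pairs, where action is "request", "enter" or "exit"."""
    inside = None        # process currently in the critical section, if any
    overtaken = {}       # waiting process -> entries by others since its request
    mutual_exclusion_ok = True
    bounded_waiting_ok = True

    for process, action in events:
        if action == "request":
            overtaken[process] = 0
        elif action == "enter":
            if inside is not None:
                mutual_exclusion_ok = False   # someone else is still inside
            inside = process
            overtaken.pop(process, None)      # this process is no longer waiting
            for waiter in overtaken:          # everyone still waiting was overtaken
                overtaken[waiter] += 1
                if overtaken[waiter] > bound:
                    bounded_waiting_ok = False
        elif action == "exit" and inside == process:
            inside = None

    return mutual_exclusion_ok, bounded_waiting_ok


fair = [("A", "request"), ("A", "enter"), ("B", "request"),
        ("A", "exit"), ("B", "enter"), ("B", "exit")]
print(check_trace(fair, bound=1))  # (True, True)
```

A trace in which B enters while A is still inside would fail the first check, and a trace in which A keeps re-entering while B waits would eventually fail the second.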

So, the following are the most effective solutions:

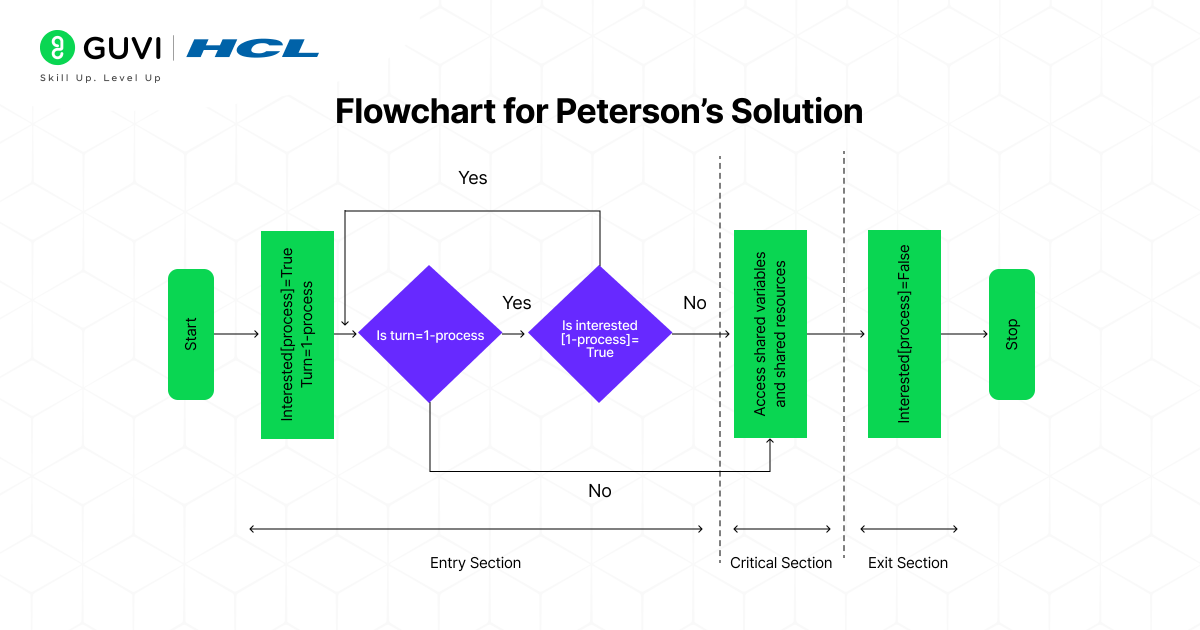

1. Peterson’s Solution

Peterson’s solution is a classic software-only algorithm that provides mutual exclusion between two processes. Each process has a flag that it sets to signal that it wants to enter the critical section.

A shared turn variable settles the tie when both processes want to enter at once. Each process hands the turn to the other before waiting, so the process that set the turn variable last is the one that waits. As a result, neither process can be overtaken by the other more than once while it is waiting.

Example: Imagine two processes trying to update the Process Table in the OS. Without control, both may overwrite entries simultaneously, causing errors. Peterson’s algorithm ensures that only one process updates at a time, while the other waits for its turn.
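The flag-and-turn logic can be sketched with two Python threads standing in for the two processes. This is an illustration under assumptions, not production code: it relies on the standard CPython interpreter executing the reads and writes in program order, whereas on real hardware the algorithm needs memory barriers. The names `enter`, `leave`, `worker`, and `run` are chosen for this example.

```python
import threading
import time

flag = [False, False]   # flag[i] is True while process i wants to enter
turn = 0                # the process that must wait if both want to enter
counter = 0             # shared data protected by the algorithm


def enter(i):
    """Entry section for process i (0 or 1)."""
    global turn
    other = 1 - i
    flag[i] = True              # announce the wish to enter
    turn = other                # give priority to the other process
    while flag[other] and turn == other:
        time.sleep(0)           # busy-wait, letting the other thread run


def leave(i):
    """Exit section for process i."""
    flag[i] = False


def worker(i, rounds):
    global counter
    for _ in range(rounds):
        enter(i)
        counter += 1            # critical section
        leave(i)


def run(rounds=200):
    global counter
    counter = 0
    threads = [threading.Thread(target=worker, args=(i, rounds)) for i in (0, 1)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter


print(run(200))  # 400
```

Because each increment happens only between `enter` and `leave`, no update is lost and the counter ends at exactly twice the number of rounds.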

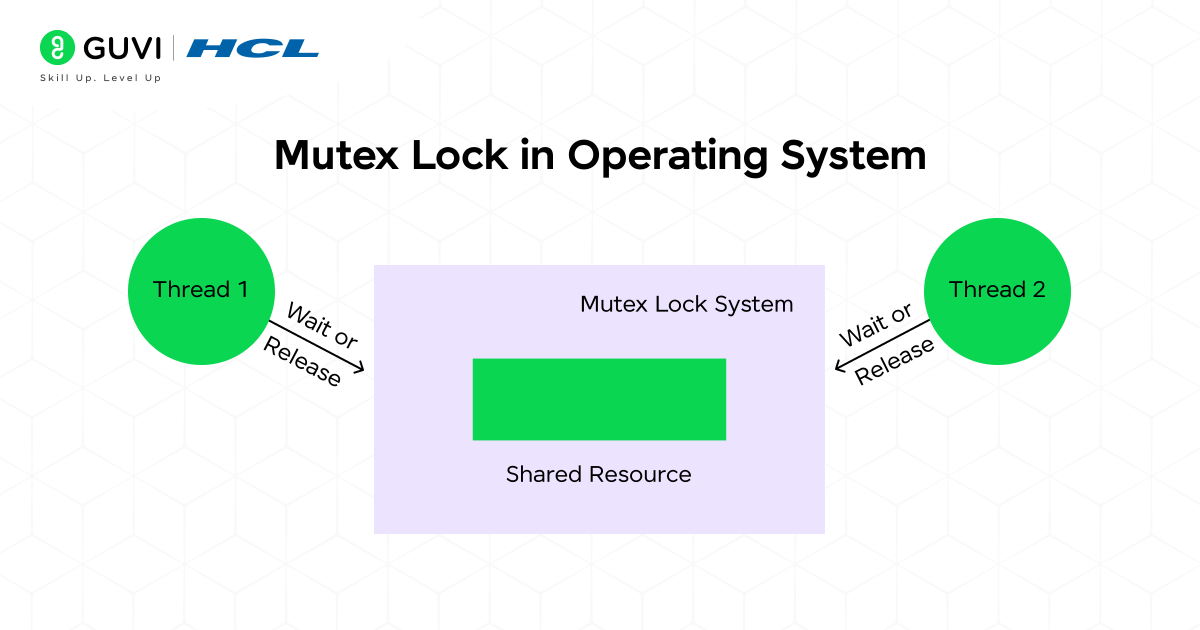

2. Locks / Mutexes

Locks (mutexes) are synchronization tools that control which process may enter the critical section. A process must acquire the lock before entering to make changes. If another process already holds the lock, the requesting process must wait until the lock is released.

This strictness ensures that only a single process can access the shared resource at a time, which prevents data inconsistency. Locks are widely used because they are simple to add to a system and efficient at protecting shared data.

Example: Suppose multiple processes are writing to a shared log file. If they all write at once, their entries interleave and the log becomes difficult to read. With a mutex, only one process can write at a time, while the others wait until the lock is released, ensuring the file remains consistent.
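A rough sketch of the shared log example, using Python's `threading.Lock` and threads in place of processes. The in-memory `io.StringIO` buffer stands in for the log file, and the helper names `write_entry` and `run` are made up for this illustration. Each entry is deliberately written in two steps so that, without the lock, another writer could slip in between them.

```python
import io
import threading


def write_entry(log, lock, name, message):
    """Write one log line in two steps while holding the lock."""
    with lock:                      # acquire; released automatically on exit
        log.write(f"[{name}] ")
        log.write(message + "\n")


def run(writers=4, entries=50):
    log = io.StringIO()             # stands in for the shared log file
    lock = threading.Lock()

    def writer(name):
        for n in range(entries):
            write_entry(log, lock, name, f"entry {n}")

    threads = [threading.Thread(target=writer, args=(f"P{i}",))
               for i in range(writers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return log.getvalue().splitlines()


lines = run()
print(len(lines))  # 200
```

Every line in the result has the form `[P2] entry 17`; the order of lines varies from run to run, but no line is split by another writer.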

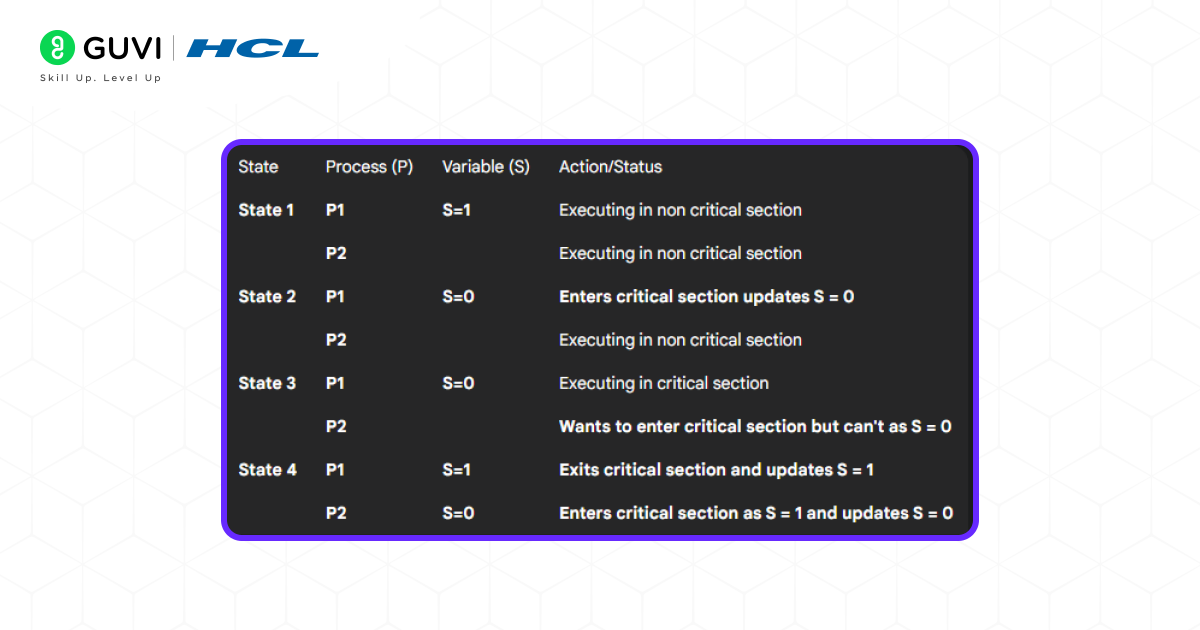

3. Semaphores

Semaphores handle synchronization scenarios that a simple lock cannot, such as sharing several identical resources among many processes. A semaphore is an integer variable that controls access to shared resources through two atomic operations, usually called wait and signal. It can be binary (0 or 1), which behaves like a lock, or counting (0 to N), which tracks how many units of a resource are free.

When a process enters the critical section, it performs wait, which decreases the value of the semaphore; if the value is already 0, the process blocks until it becomes positive. When the process departs, it performs signal, which increases the value and lets a waiting process continue. A binary semaphore therefore enforces mutual exclusion, while a counting semaphore controls access to a finite pool of resources.

Example: Think of three printers available in a lab. Many processes may request printing, but a counting semaphore set to 3 ensures that at most three processes print at once. Others wait until a printer is free.
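The printer lab can be sketched with Python's `threading.Semaphore`, again using threads in place of processes. The function name `run_print_jobs`, the `stats` dictionary, and the short sleep that stands in for printing are assumptions made for this example.

```python
import threading
import time


def run_print_jobs(jobs=10, printers=3):
    free_printers = threading.Semaphore(printers)   # counting semaphore set to 3
    stats_lock = threading.Lock()                   # protects the stats dictionary
    stats = {"in_use": 0, "peak": 0, "done": 0}

    def print_job():
        with free_printers:             # wait: take a printer or block if none is free
            with stats_lock:
                stats["in_use"] += 1
                stats["peak"] = max(stats["peak"], stats["in_use"])
            time.sleep(0.01)            # pretend to print
            with stats_lock:
                stats["in_use"] -= 1
                stats["done"] += 1
        # leaving the with-block performs signal: the printer is free again

    threads = [threading.Thread(target=print_job) for _ in range(jobs)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return stats


result = run_print_jobs()
print(result["done"], result["peak"] <= 3)  # 10 True
```

All ten jobs finish, but the recorded peak never exceeds the number of printers, because the eleventh-hour arrivals block in `wait` until a printer is released.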

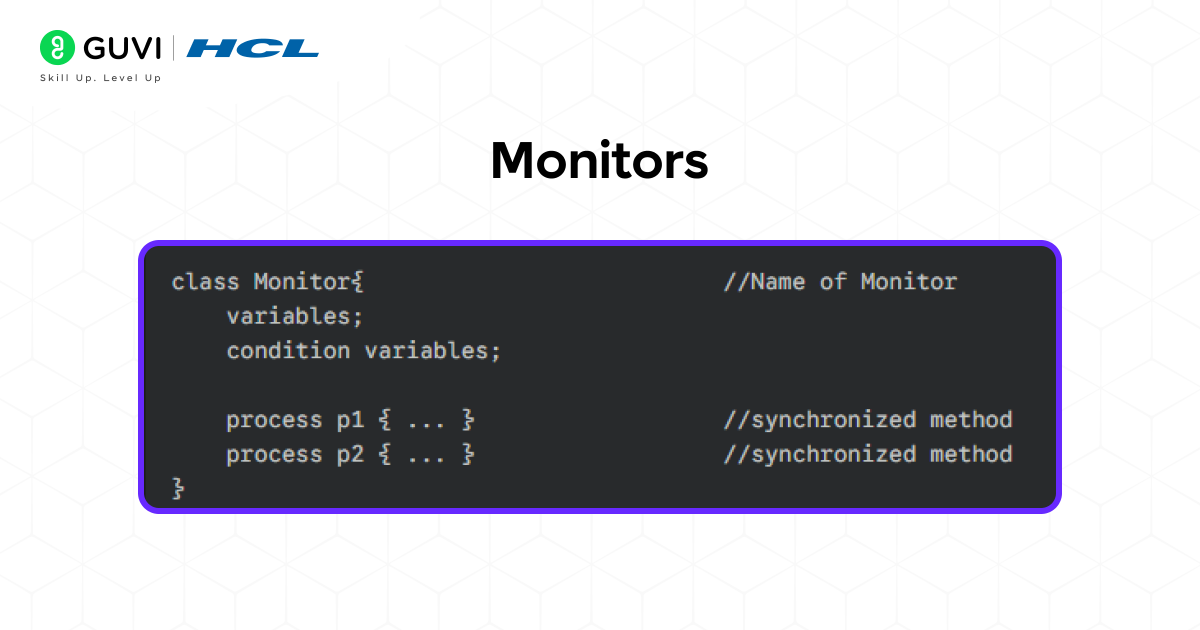

4. Monitors

A monitor is a high-level synchronization construct supported by several programming languages (Java, C#, Concurrent Pascal, etc.). It combines the shared data with the procedures that operate on it into a unified structure. Only one process can be active inside the monitor at a time, so mutual exclusion is ensured automatically.

A monitor also provides condition variables, which let a process wait inside the monitor until some condition holds and let another process signal it when that condition changes. This makes synchronization problems easier to manage than placing lock or semaphore operations by hand.

Example: Consider a printer queue where processes submit jobs. A monitor manages the queue by allowing only one process to add or remove a job at a time. If the queue is empty, a process waits until it is signaled that a new job has been added.
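Python has no built-in monitor keyword, but a class whose methods all run under one `threading.Condition` behaves in a similar way. The sketch below models the printer queue on that assumption; the class name `PrintQueue`, its methods, and the `run_demo` helper are invented for this example.

```python
import threading
from collections import deque


class PrintQueue:
    """Monitor-style queue: shared data plus the only methods allowed to touch it."""

    def __init__(self):
        self._jobs = deque()
        self._has_jobs = threading.Condition()   # holds the monitor's lock

    def add_job(self, job):
        with self._has_jobs:             # only one thread inside at a time
            self._jobs.append(job)
            self._has_jobs.notify()      # signal one waiting consumer

    def take_job(self):
        with self._has_jobs:
            while not self._jobs:        # queue empty: wait until signaled
                self._has_jobs.wait()
            return self._jobs.popleft()


def run_demo():
    queue = PrintQueue()
    printed = []

    def printer():
        for _ in range(3):
            printed.append(queue.take_job())

    consumer = threading.Thread(target=printer)
    consumer.start()                     # starts waiting on the empty queue
    for job in ("a.pdf", "b.pdf", "c.pdf"):
        queue.add_job(job)
    consumer.join()
    return printed


print(run_demo())  # ['a.pdf', 'b.pdf', 'c.pdf']
```

Callers never touch the lock directly; they only call `add_job` and `take_job`, which is the convenience a monitor is meant to provide.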

Critical Section Synchronization Working Mechanism

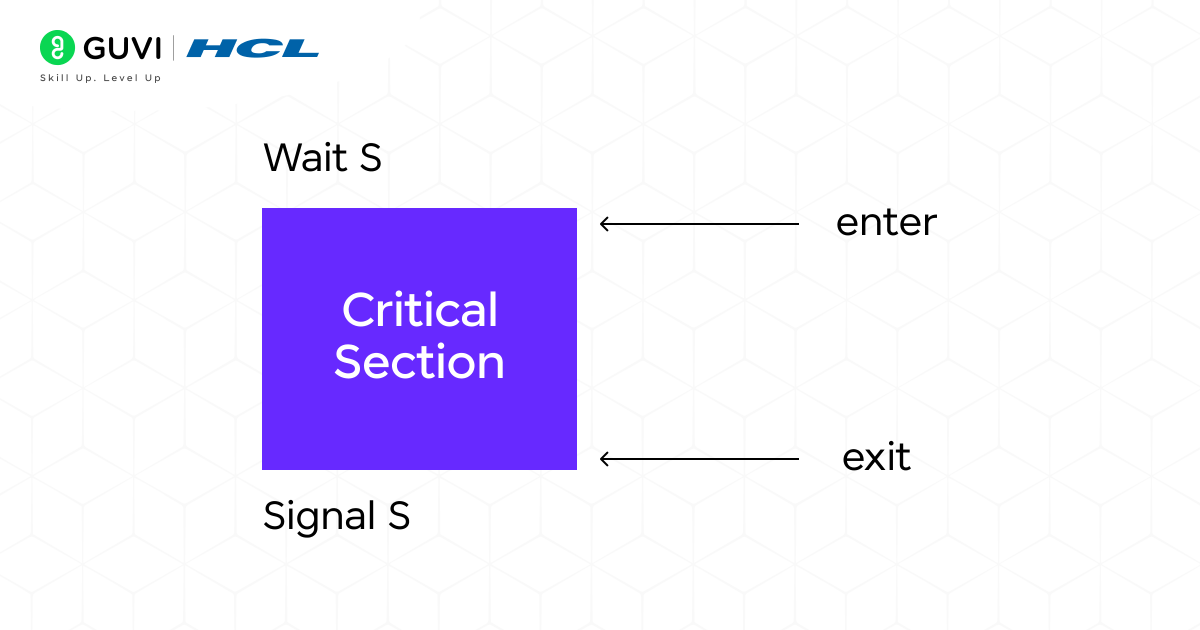

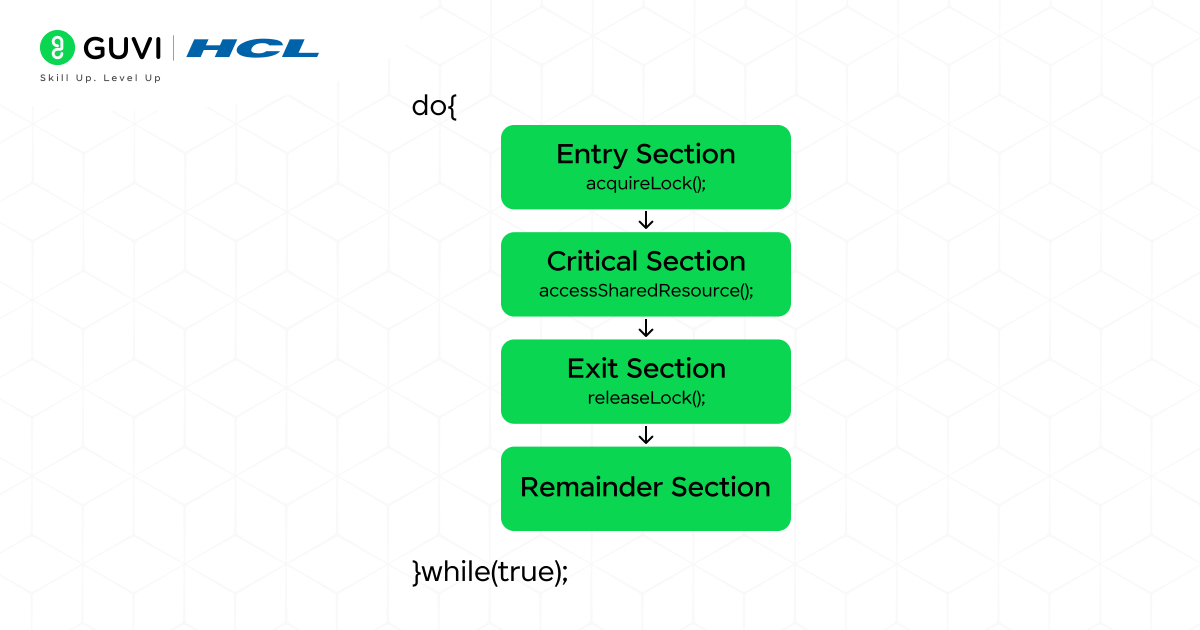

Critical section synchronization follows a step-by-step flow:

- Entry Section: The process checks the synchronization mechanism (like a lock, semaphore, or mutex) to request permission to enter the critical section.

- Critical Section: Once access is granted, the process safely performs operations on shared resources without the risk of data inconsistency.

- Exit Section: After completing these operations, the process releases the lock or semaphore so that other waiting processes can get their turn.

- Remainder Section: The process executes the rest of its code that does not require synchronization.

Together, these steps ensure smooth and fair execution among multiple processes.
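The four sections map directly onto code. The sketch below marks each one in a small Python worker that uses a `threading.Lock`; the function name `run`, the `state` dictionary, and the doubling done in the remainder section are placeholders for this illustration.

```python
import threading


def run(workers=4, items=100):
    lock = threading.Lock()
    state = {"total": 0}                    # the shared resource

    def process():
        private = []
        for value in range(items):
            lock.acquire()                  # entry section: request permission
            try:
                state["total"] += value     # critical section: use shared data
            finally:
                lock.release()              # exit section: let the next one in
            private.append(value * 2)       # remainder section: private work only

    threads = [threading.Thread(target=process) for _ in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return state["total"]


print(run())  # 19800
```

The `try`/`finally` pair guarantees that the exit section runs even if the critical section raises an error, so the lock is never left held by accident.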

Deadlock and Starvation in OS (Operating Systems)

Deadlock

- Deadlock is a situation in an OS in which two or more processes are blocked permanently because each is waiting for a resource that another process in the group is holding. This creates a circular chain of dependencies where no process can proceed, effectively bringing that part of the system to a halt.

- Once a deadlock occurs, the processes involved cannot resume on their own. To resolve this issue, the OS itself must intervene via techniques such as deadlock detection and recovery.
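One simple way to picture deadlock detection is to look for a cycle in a "wait-for" graph. The sketch below assumes a simplified model in which each blocked process waits on exactly one other process; the dictionary format and the function name `find_deadlock` are assumptions for this illustration, not how any particular OS implements detection.

```python
def find_deadlock(wait_for):
    """Return the processes that form a cycle in the wait-for graph, or [].

    wait_for maps each blocked process to the process holding the
    resource it is waiting for.
    """
    for start in wait_for:
        path = []
        current = start
        # Follow the chain of "waits for" links until it ends or repeats.
        while current in wait_for and current not in path:
            path.append(current)
            current = wait_for[current]
        if current in path:
            return path[path.index(current):]   # the circular part of the chain
    return []


print(find_deadlock({"P1": "P2", "P2": "P1"}))   # ['P1', 'P2']
print(find_deadlock({"P1": "P2", "P2": "P3"}))   # []
```

In the first call, P1 waits for P2 and P2 waits for P1, so neither can ever proceed. In the second, P3 is not blocked, so the chain will unwind once P3 releases its resource.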

Starvation

- Starvation is a situation in which a process, typically one with low priority, waits indefinitely because other processes keep being chosen ahead of it. The resource it needs is not permanently blocked, yet the process never gets its turn, so it starves for an extended period.

- Starvation is most commonly encountered in scheduling algorithms, such as priority scheduling, and in resource allocation schemes that always favor some processes over others.
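Starvation under strict priority scheduling can be shown with a small simulation. The model here is deliberately simplified and its details are assumptions for this example: one job runs per tick, a lower number means higher priority, and the function name `simulate` and its parameters are made up.

```python
import heapq


def simulate(ticks, high_arrivals_per_tick=1):
    """Run one job per tick under strict priority scheduling; return the run order."""
    ready = [(5, 0, "low")]     # (priority, arrival order, name); "low" waits from the start
    arrival = 1
    ran = []
    for _ in range(ticks):
        for _ in range(high_arrivals_per_tick):
            heapq.heappush(ready, (1, arrival, f"high-{arrival}"))
            arrival += 1
        if ready:
            _, _, name = heapq.heappop(ready)   # always pick the highest priority
            ran.append(name)
    return ran


print("low" in simulate(100))   # False
print(simulate(3, 0))           # ['low']
```

As long as a high-priority job arrives every tick, the low-priority job is never selected, no matter how long the simulation runs. With no competing arrivals, it runs immediately.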


Conclusion

To conclude, the Critical Section Problem in OS (Operating System) is a fundamental challenge that arises when multiple actively running processes attempt to access shared resources or files simultaneously, which raises the risk of system failures and data inconsistency.

By looking at the synchronization mechanism and practical solutions such as Peterson’s algorithm, locks/mutexes, semaphores, and monitors, we saw how the OS ensures mutual exclusion, progress, and bounded waiting, allowing processes to safely and efficiently share resources. Overall, proper management of critical sections and synchronization techniques is crucial for maintaining system stability, data integrity, and fair resource allocation in any multitasking environment.

FAQs

What are the best solutions to solve the critical section problem in OS (operating systems)?

Some of the most effective solutions include Peterson’s Algorithm, Semaphores, Monitors, and Locks/Mutexes.

What are the three main protocols that should be followed before solving any critical section problem?

The three main rules are: Mutual Exclusion (only one process at a time), Progress (no unnecessary waiting), and Bounded Waiting (each process gets a turn).

Why does the critical section problem occur?

It occurs when multiple processes attempt to access the same shared resource simultaneously without proper synchronization, resulting in race conditions, data corruption, or system errors.
