Understanding AI Foundation Models: Everything You Need to Know in 2025

Oct 28, 2025 (Last Updated)

Remember those sci-fi movies where the hero simply talks to the computer? They ask for a complex data analysis, and a calm voice replies instantly with the answer. They sketch a rough idea, and the system renders a perfect 3D model. For decades, this was pure fantasy. Today, it isn’t. That futuristic computer is no longer a special effect; it is real, and it is powered by something called an AI Foundation Model. This isn’t just a better app or a smarter algorithm. It’s a fundamental shift: the creation of a digital, general-purpose brain that is learning to see, write, and reason. Understanding how it works is key to understanding the world that is already unfolding around us.

In this blog, we’ll discuss everything you need to know about AI Foundation Models: what they are, how they work, why they’re such a big deal, and the profound challenges and opportunities they present for our future.

Table of contents

- What Are AI Foundation Models?

- How Do AI Foundation Models Work?

- Pre-Training on Massive Datasets

- Transformer Architecture and Attention

- Fine-Tuning for Specific Tasks

- Prompting and In-Context Learning

- Scaling Up = Better Performance

- Types of Foundation Models

- Language Foundation Models

- Vision Foundation Models

- Multimodal Foundation Models

- Scientific and Code Foundation Models

- Key Characteristics of AI Foundation Models

- Challenges and Limitations

- Wrapping it up…

- FAQs

- How are AI Foundation Models different from traditional AI?

- Are small businesses able to utilize these models?

- Is fine-tuning necessary every single time?

- What types of skills will someone need to work with foundation models?

What Are AI Foundation Models?

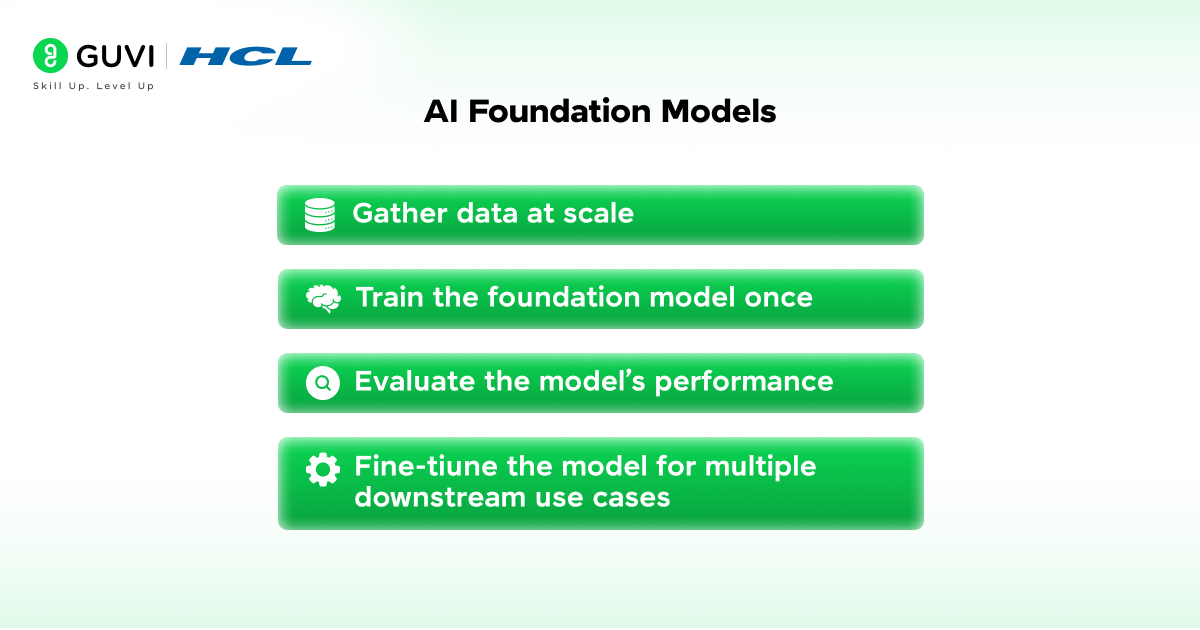

A Foundation Model is a large-scale artificial intelligence model trained on a vast and diverse corpus of data (often encompassing text, code, images, and more) using self-supervised learning. Because it is trained on such a broad set of information, it develops a general, foundational understanding that can be adapted (or “fine-tuned”) for a wide range of downstream tasks, often without needing to be built from scratch for each new job.

Let’s break down the key parts of that definition:

- Large-Scale: We’re talking about models with billions or even trillions of parameters (the internal variables the model adjusts during training). This immense size is what allows them to capture incredibly complex patterns.

- Vast and Diverse Data: These models are trained on petabytes of data scraped from the internet, books, articles, Wikipedia, code repositories like GitHub, social media, and image databases. This is their “education.”

- Self-Supervised Learning: This is the magic sauce. Instead of being trained on millions of painstakingly human-labeled examples (e.g., “this is a picture of a cat”), the model learns by finding patterns within the data itself. For text, it might be given a sentence with a word missing and learn to predict that word. For images, it might be shown a picture with a portion obscured and learn to reconstruct the missing part. Through trillions of these simple exercises, it builds a deep internal representation of language, vision, and logic.

- Adaptable (Fine-Tuned): This is the “foundation” part. Once this broad base model is built, it isn’t just for one thing. It can be specialized for specific applications with a relatively small amount of additional, task-specific data. The same model that learns general English from the web can be fine-tuned to serve as a legal contract reviewer, a customer service chatbot, or a creative writing partner.
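To make the self-supervised idea concrete, here is a minimal Python sketch of how training examples can be produced from raw, unlabeled text. It assumes simple whitespace-separated words (real models use subword tokenizers), and the function names `next_word_pairs` and `masked_word_example` are hypothetical. The key point is that every "label" is taken from the text itself, so no human annotation is needed.

```python
import random

def next_word_pairs(text):
    """Split raw text into (context, next word) pairs; the target comes from the text itself."""
    words = text.split()
    return [(words[:i], words[i]) for i in range(1, len(words))]

def masked_word_example(text, rng):
    """Hide one randomly chosen word; the hidden word becomes the prediction target."""
    words = text.split()
    i = rng.randrange(len(words))
    masked = words[:i] + ["[MASK]"] + words[i + 1:]
    return " ".join(masked), words[i]

sentence = "the cat sat on the mat"
for context, target in next_word_pairs(sentence)[:3]:
    print(" ".join(context), "->", target)
# the -> cat
# the cat -> sat
# the cat sat -> on

print(masked_word_example(sentence, random.Random(0)))
```

The first function mirrors next-word prediction (as in GPT-style models); the second mirrors fill-in-the-blank training. Both turn one unlabeled sentence into several training examples.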

The most famous examples of AI Foundation Models are OpenAI’s GPT series (which powers ChatGPT), Google’s Gemini and PaLM models, and image generators like Stable Diffusion and DALL-E. They are the versatile engines driving the current AI revolution.

How Do AI Foundation Models Work?

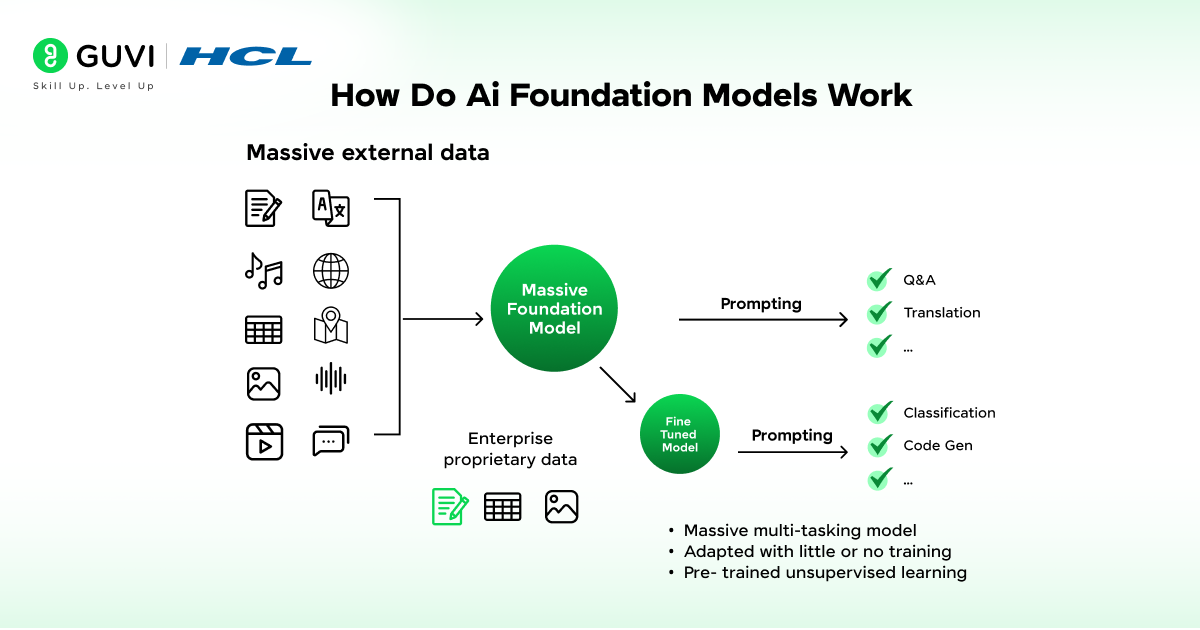

AI Foundation Models work through a combination of large-scale pre-training, fine-tuning, and prompting. Most of these models are built on the transformer architecture, which lets them capture relationships between elements of the input, whether words in a sentence, patches of an image, or segments of audio.

So, how do these work in practice? Let’s break it down step by step:

1. Pre-Training on Massive Datasets

- AI Foundation Models are trained on massive datasets, such as billions of words, images, or code examples.

- Instead of learning one narrow task, foundation models learn general patterns: grammar, facts, reasoning, and so on.

- For example, GPT models are trained to predict the next word (more precisely, the next token) in a sequence. Doing this at an immense scale gives them a broad, deep knowledge of language.
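GPT models use deep neural networks over subword tokens, but the training signal itself can be illustrated with a much simpler stand-in. The hypothetical sketch below "trains" a bigram model by counting which word follows which, then measures the average negative log-likelihood (cross-entropy) of each next word, which is the kind of quantity pre-training pushes down. It applies no smoothing, so it only handles word pairs it has already seen.

```python
import math
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count how often each word follows each other word (a toy 'language model')."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, prev):
    """Turn the raw counts after one context word into a probability distribution."""
    total = sum(counts[prev].values())
    return {w: c / total for w, c in counts[prev].items()}

def avg_nll(counts, sentence):
    """Average negative log-probability of each actual next word (lower is better).
    No smoothing: unseen word pairs raise an error."""
    words = sentence.split()
    losses = [-math.log(next_word_probs(counts, p)[n]) for p, n in zip(words, words[1:])]
    return sum(losses) / len(losses)

corpus = ["the cat sat on the mat", "the cat ate the fish", "the dog sat on the rug"]
model = train_bigram(corpus)
print(next_word_probs(model, "the"))
print(round(avg_nll(model, "the cat sat on the mat"), 3))  # 0.717
```

A real foundation model replaces the count table with billions of learned parameters and conditions on long contexts rather than a single previous word, but the objective of making the true next word as probable as possible is the same idea.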

2. Transformer Architecture and Attention

- The key innovation behind most foundation models is the transformer architecture, introduced in the 2017 paper “Attention Is All You Need.”

- The transformer uses a mechanism called attention to allow the model to focus on the most relevant aspects of the input data.

- Example: In the sentence “The cat sat on the mat because it was tired,” attention helps the model work out that the word “it” refers to “the cat.”

This ability to draw on context from the entire input is a large part of what makes foundation models more versatile and powerful than older AI systems.
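The attention used in the original transformer is scaled dot-product attention, softmax(QKᵀ/√d)V: each word's query vector is compared with every word's key vector, the scores become weights, and the output is a weighted average of value vectors. Below is a minimal single-head sketch in pure Python. The 2-D vectors are hand-picked, hypothetical numbers chosen so that the query for "it" matches "cat"; a trained model learns these projections, and real transformers run many attention heads across many layers.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attention(queries, keys, values):
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])
    outputs, weight_rows = [], []
    for q in queries:
        # How well this query matches each key, scaled by sqrt(d).
        weights = softmax([dot(q, k) / math.sqrt(d) for k in keys])
        # The output is the attention-weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
        weight_rows.append(weights)
    return outputs, weight_rows

# Hand-picked, hypothetical 2-D vectors; a trained model would learn these.
tokens = ["cat", "sat", "mat"]
keys = [[1.0, 0.0], [0.0, 0.0], [0.2, 1.0]]
values = [[1.0, 0.0], [0.0, 0.0], [0.0, 1.0]]
query_for_it = [[3.0, 0.0]]  # the query vector for the word "it"

outputs, weights = attention(query_for_it, keys, values)
for token, w in zip(tokens, weights[0]):
    print(f"{token}: {w:.2f}")
```

With these numbers, most of the weight for "it" lands on "cat", which is how attention lets one word pull in information from the word it refers to.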

3. Fine-Tuning for Specific Tasks

- After pre-training, foundation models can be fine-tuned with smaller, specialized datasets.

- For example:

- A general language model can be fine-tuned for medical diagnosis using healthcare data.

- A vision model can be fine-tuned for self-driving cars using road images.

This process saves time and resources because the heavy lifting was already done during pre-training.
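One lightweight way to adapt a pre-trained model is to keep it frozen and train only a small task-specific "head" on its output features (often called a linear probe); full fine-tuning instead updates all of the model's weights. The sketch below shows the head-only variant in pure Python. `pretrained_features` is a hypothetical stand-in for a real model's embeddings, and the four labeled messages are invented for illustration.

```python
import math

def pretrained_features(text):
    """Hypothetical stand-in for a frozen, pre-trained model that turns text into a vector.
    A real foundation model would return a learned embedding; here we count a few cue words."""
    words = text.lower().split()
    return [
        float(sum(w in {"fever", "cough", "pain"} for w in words)),
        float(sum(w in {"invoice", "payment", "refund"} for w in words)),
        1.0,  # constant bias feature
    ]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fine_tune_head(examples, epochs=200, lr=0.5):
    """Train only a small logistic-regression head; pretrained_features is never changed."""
    weights = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        for text, label in examples:
            x = pretrained_features(text)
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)))
            # One stochastic gradient step on the log-loss for this example.
            weights = [w - lr * (p - label) * xi for w, xi in zip(weights, x)]
    return weights

def predict_medical(weights, text):
    x = pretrained_features(text)
    return sigmoid(sum(w * xi for w, xi in zip(weights, x))) >= 0.5

# A tiny, hypothetical labeled dataset: 1 = medical message, 0 = billing message.
train = [
    ("patient reports fever and cough", 1),
    ("sharp pain in the chest", 1),
    ("please process my refund", 0),
    ("invoice payment is overdue", 0),
]
head = fine_tune_head(train)
print(predict_medical(head, "mild fever since yesterday"))
print(predict_medical(head, "where is my refund"))
```

Only three numbers are learned here, which is why adaptation needs so little labeled data: the frozen model has already done the heavy lifting of turning text into useful features.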

4. Prompting and In-Context Learning

- One of the most exciting features of AI Foundation Models is that they don’t always need retraining.

- Instead, you can simply give them a prompt (instructions in natural language).

- Example: “Write a short poem about space exploration.” The model instantly generates a poem without needing task-specific training.

- This ability to perform zero-shot (no examples given) or few-shot learning (a few examples given) makes them highly flexible.
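Zero-shot and few-shot prompting change only the text the model reads, not its weights. This minimal sketch assembles both kinds of prompt as plain strings; the helper name `build_prompt` and the `Input:`/`Output:` layout are hypothetical conventions rather than a required format, and sending the prompt to an actual model API is left out.

```python
def build_prompt(instruction, examples=(), query=""):
    """Assemble a zero-shot prompt (no examples) or a few-shot prompt (with examples).
    No model weights change: the 'learning' happens only in the text the model reads."""
    parts = [instruction.strip()]
    for inp, out in examples:
        parts.append(f"Input: {inp}\nOutput: {out}")
    if query:
        parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)

zero_shot = build_prompt("Write a short poem about space exploration.")

few_shot = build_prompt(
    "Classify the sentiment of each review as positive or negative.",
    examples=[("The battery lasts all day.", "positive"),
              ("It broke after one week.", "negative")],
    query="Setup was quick and painless.",
)
print(few_shot)
```

In the few-shot case, the model is expected to continue the text after the final `Output:`, imitating the pattern set by the two examples.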

5. Scaling Up = Better Performance

- Research has found that as model size (parameters), training data, and compute increase, a model’s error tends to fall smoothly and predictably, roughly following a power law.

- These empirical relationships are known as scaling laws.

- This is a major reason why models like GPT-4, PaLM, and Gemini are much more capable than their predecessors.
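Scaling laws are typically written as power laws. The sketch below uses the functional form from DeepMind's "Chinchilla" study (Hoffmann et al., 2022), L(N, D) = E + A/N^α + B/D^β, where N is the number of parameters and D the number of training tokens. The constants here are illustrative placeholders, not fitted values, so only the shape of the curve is meaningful.

```python
def illustrative_loss(n_params, n_tokens, E=1.7, A=400.0, B=400.0, alpha=0.34, beta=0.28):
    """Loss of the form L(N, D) = E + A / N**alpha + B / D**beta.

    E is an irreducible floor; the other two terms shrink as the parameter count N
    and the number of training tokens D grow. All constants are illustrative placeholders.
    """
    return E + A / n_params ** alpha + B / n_tokens ** beta

# Hold the data fixed at one trillion tokens and grow the model tenfold each step.
for n in (1e8, 1e9, 1e10, 1e11):
    print(f"{n:.0e} parameters -> loss {illustrative_loss(n, 1e12):.3f}")
```

Each tenfold increase in parameters still lowers the loss, but by a smaller amount each time, and the loss never drops below the floor E. This is why progress has required ever-larger models and datasets.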

Curious about how AI Foundation Models really work? Start your journey with HCL GUVI’s Free 5-Day AI & ML Email Course and get practical lessons straight in your inbox.

Types of Foundation Models

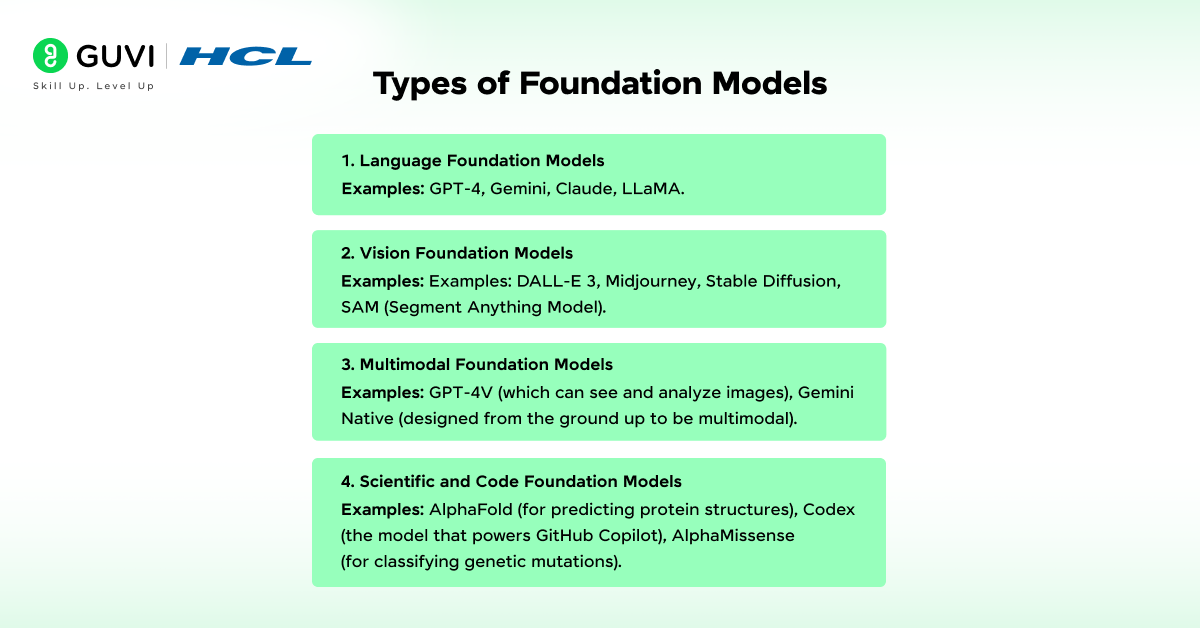

While the term “Foundation Model” often brings text generators to mind, the concept extends across several domains. They can be grouped by the kinds of data they take in and produce, or by the specialized domain they serve.

1. Language Foundation Models

These are the most well-known AI Foundation Models. Trained primarily on text data, they excel at understanding and generating human language.

Examples: GPT-4, Gemini, Claude, LLaMA.

Capabilities: Writing essays and emails, translating languages, answering questions, summarizing long documents, writing and debugging code, and engaging in open-ended conversation.

2. Vision Foundation Models

Trained on massive image datasets, often paired with text captions, these models learn to interpret, and in many cases generate, visual content.

Examples: DALL-E 3, Midjourney, Stable Diffusion, SAM (Segment Anything Model).

Capabilities: Generating realistic images from text prompts (text-to-image), editing existing images based on text instructions, identifying and segmenting objects within images, and classifying visual content.

3. Multimodal Foundation Models

These models are trained on multiple types of data simultaneously: text, images, audio, and sometimes even video. This allows them to understand and make connections across different modalities.

Examples: GPT-4V (GPT-4 with vision, which can see and analyze images) and Gemini (designed from the ground up to be natively multimodal).

Capabilities: Answering questions about an image (“What’s funny about this meme?”), generating an image from a complex textual description, analyzing a graph and writing a summary about it, or even generating a video from a text script (a rapidly advancing field).

4. Scientific and Code Foundation Models

Some AI Foundation Models are trained on highly specialized data to power scientific discovery and software development.

Examples: AlphaFold (for predicting protein structures), Codex (the model that originally powered GitHub Copilot), and AlphaMissense (for classifying genetic mutations).

Capabilities: Predicting complex 3D structures of proteins, suggesting and auto-completing code, explaining scientific papers, and accelerating drug discovery.

A few quick facts:

- The term “foundation model” was coined by Stanford researchers in 2021, in the report “On the Opportunities and Risks of Foundation Models.” Models of this kind now power tools like ChatGPT and Gemini!

- Some AI foundation models are trained on datasets thousands of times larger than the whole of Wikipedia!

- Multimodal models like GPT-4 can work with both text and images, and can often explain why a joke or meme is funny.

Key Characteristics of AI Foundation Models

AI Foundation Models stand out because of a few key characteristics:

Generalization: Unlike traditional AI systems, AI Foundation Models are not designed for a single task. The same model can summarize text, translate between languages, and write code.

Scalability: AI Foundation Models tend to improve as their size and the amount of training data increase. Large generative pre-trained transformers such as GPT-4 have been reported to show “emergent abilities” not observed in smaller models, although researchers still debate how abrupt these jumps really are.

Adaptability: AI Foundation Models can be fine-tuned for specific industries (e.g., healthcare, finance) with relatively little data, or guided with prompting strategies to achieve good results without any fine-tuning at all.

Multimodality: Many AI Foundation Models are also multimodal, which allows them to work across text, images, audio, and video. This leads to applications like captioning images or analyzing documents that contain both text and images.

Zero-shot & Few-shot Learning: Foundation models can perform new tasks without any task-specific training (zero-shot) or with only a few examples in the prompt (few-shot), which makes them both flexible and efficient to deploy.

Challenges and Limitations

Despite their power, AI Foundation Models come with challenges:

- Bias and Fairness: Models inherit biases from training data, which can lead to unfair outcomes.

- Compute and Energy Costs: Training and running large models requires massive computational resources and a great deal of energy.

- Lack of Interpretability: Foundation models often function as “black boxes,” making it hard to explain why they produce a particular output.

- Security Risks: Potential for misuse, such as generating misinformation or malicious code.

- Data Privacy: Training on large datasets scraped from the web raises privacy and copyright concerns.

Addressing these challenges is crucial for building safe and responsible AI systems.
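To put the compute-cost challenge in perspective, a widely used rule of thumb for dense transformer models estimates training compute as roughly 6 × N × D floating-point operations (FLOPs), where N is the parameter count and D is the number of training tokens. The sketch below applies it to a hypothetical 7-billion-parameter model trained on 2 trillion tokens, with an assumed sustained cluster throughput. It ignores data processing, failed runs, and inference, so real costs are higher.

```python
def training_flops(n_params, n_tokens):
    """Rule-of-thumb training compute: about 6 FLOPs per parameter per training token
    (roughly 2 for the forward pass and 4 for the backward pass)."""
    return 6 * n_params * n_tokens

def training_days(total_flops, flops_per_second):
    """Wall-clock days at a constant, sustained throughput (86,400 seconds per day)."""
    return total_flops / flops_per_second / 86_400

# Hypothetical model size, dataset size, and cluster speed, chosen only to show the arithmetic.
flops = training_flops(n_params=7e9, n_tokens=2e12)
print(f"{flops:.1e} FLOPs")  # 8.4e+22 FLOPs
days = training_days(flops, flops_per_second=1e17)  # assumed sustained throughput
print(f"about {days:.1f} days")
```

Doubling either the model size or the dataset doubles the estimate, which is why the scaling trend described earlier translates directly into rising compute and energy bills.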

Curious about AI Foundation Models and how they’re transforming the world? Learn hands-on with HCL GUVI’s IITM Pravartak & Intel Certified AI & ML course, designed to make you job-ready. From beginner-friendly projects to advanced concepts, you’ll gain the skills to stand out in the fast-growing AI job market.

Wrapping it up…

AI Foundation Models represent a tectonic shift in our relationship with technology. They are not merely tools but partners and amplifiers of human intellect and creativity. They hold a mirror to our own world, reflecting both our collective knowledge and our deep-seated flaws.

Understanding what they are, how they work, and the immense potential and pitfalls they carry is no longer a technical exercise; it is an essential form of modern literacy. As this technology continues to evolve at a staggering rate, our role as a society is to guide its development with wisdom, foresight, and a steadfast commitment to human-centric values. The foundation has been poured; it is now up to us to build a future upon it that is equitable, safe, and beneficial for all.

FAQs

1. How are AI Foundation Models different from traditional AI?

Traditional AI models are usually built and trained for one specific task, while a single foundation model can be adapted to many tasks from the same pre-trained core.

2. Are small businesses able to utilize these models?

Yes. Many pre-trained models are available through APIs and cloud services, so businesses can use them without a large budget.

3. Is fine-tuning necessary every single time?

No. Many tasks can be accomplished with prompts alone, but fine-tuning can be beneficial, and sometimes essential, for specialized tasks in a particular industry.

4. What types of skills will someone need to work with foundation models?

A basic knowledge of ML is helpful, but even non-technical users can start with prompt engineering using cloud-based AI tools.
