A Complete Guide to the Layered Architecture of Generative AI Models

Last updated: Nov 18, 2025

What if intelligence could be built layer by layer, each part learning not just to process data but to understand it? Generative AI brings that possibility to life through architectures that mirror how humans think, connect, and create. These models go beyond recognition: they imagine, reconstruct, and refine. Their architecture reveals how machines learn context, meaning, and creativity within a structured and precise framework.

Please read the full blog to learn how generative models work and what makes their architecture unique.

- 65% of organizations report they are regularly using generative AI in one or more business functions.

- By 2026, over 80% of enterprises are expected to integrate generative AI models or APIs into their operations.

- The global generative AI market, valued at $16.8 billion in 2024, is projected to cross $109 billion by 2030, which marks one of the fastest growth curves in tech history.

Table of contents

- What is the Layered Architecture of Generative Models?
- Layered Architecture of Generative Models
  - Data Processing Layer
  - Data Platform and API Management Layer
  - Infrastructure Layer
  - Model Layer and Hub
  - LLMOps and Prompt Engineering Layer
  - Application Layer
- Supplementary Layers in Generative AI Architecture
- Top Generative Model Architectures
  - Variational Autoencoders (VAEs)
  - Generative Adversarial Networks (GANs)
  - Autoregressive Models
  - Diffusion Models
  - Large Language Models (LLMs)

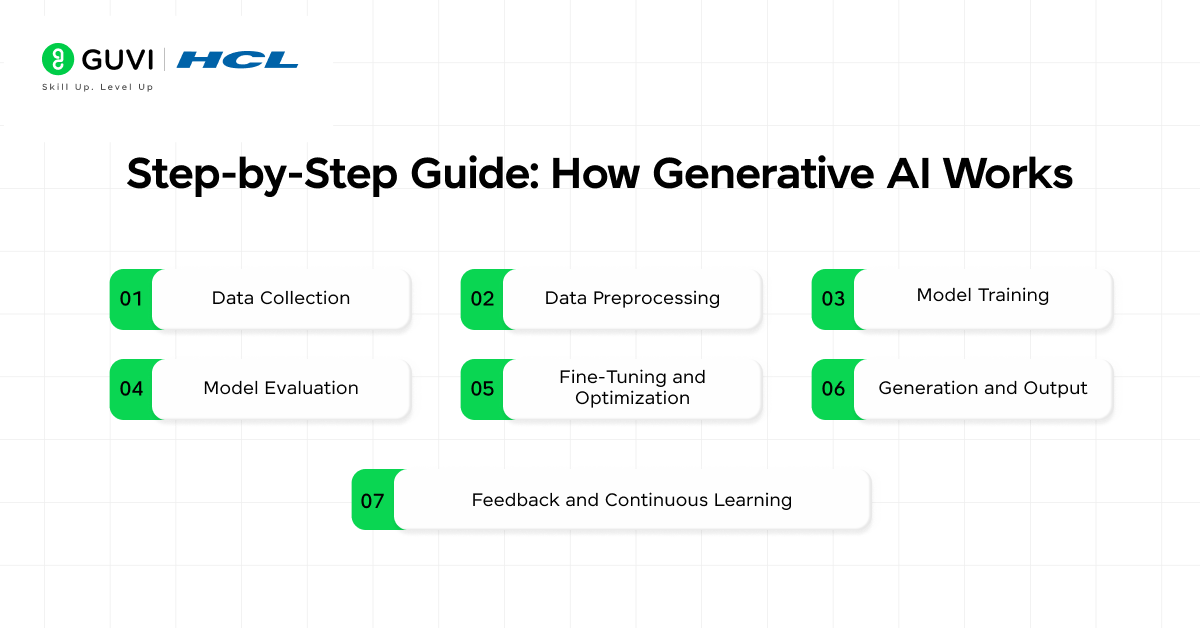

- Step-by-Step Guide: How Generative AI Works
  - Step 1. Data Collection
  - Step 2. Data Preprocessing
  - Step 3. Model Training
  - Step 4. Model Evaluation
  - Step 5. Fine-Tuning and Optimization
  - Step 6. Generation and Output
  - Step 7. Feedback and Continuous Learning
- Computational Framework of Generative AI
  - Gradient Optimization
  - Loss Functions and Regularization
  - Parallelization and Compute Scaling
  - Hyperparameter Optimization
  - Latent Space Representation
- Conclusion
- FAQs
  - What makes the architecture of generative AI different from traditional machine learning models?
  - How do large language models fit into generative AI architecture?
  - What are the main components of a generative AI system?

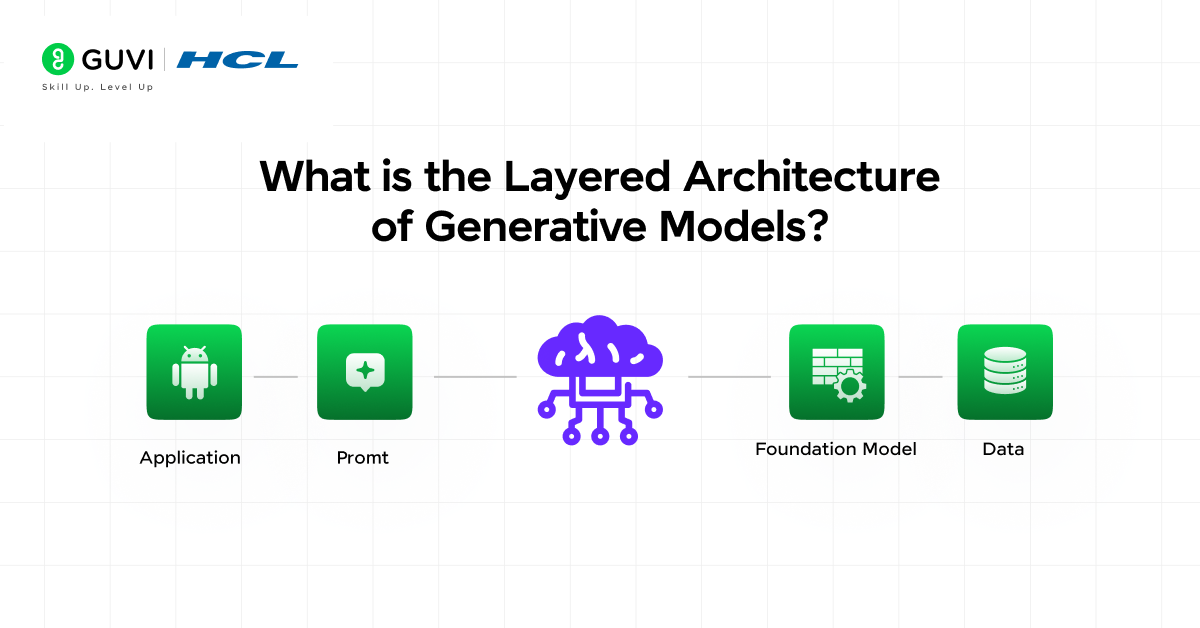

What is the Layered Architecture of Generative Models?

The layered architecture of generative models is a structured approach in which each stage of the system has a defined role in how data moves, how patterns are learned, and how content is produced. This separation keeps the system organized and lets it serve many different tasks. From collecting information to creating outputs, each layer builds on the one before it. Together, the layers form a system that learns context and produces results that align closely with human reasoning.

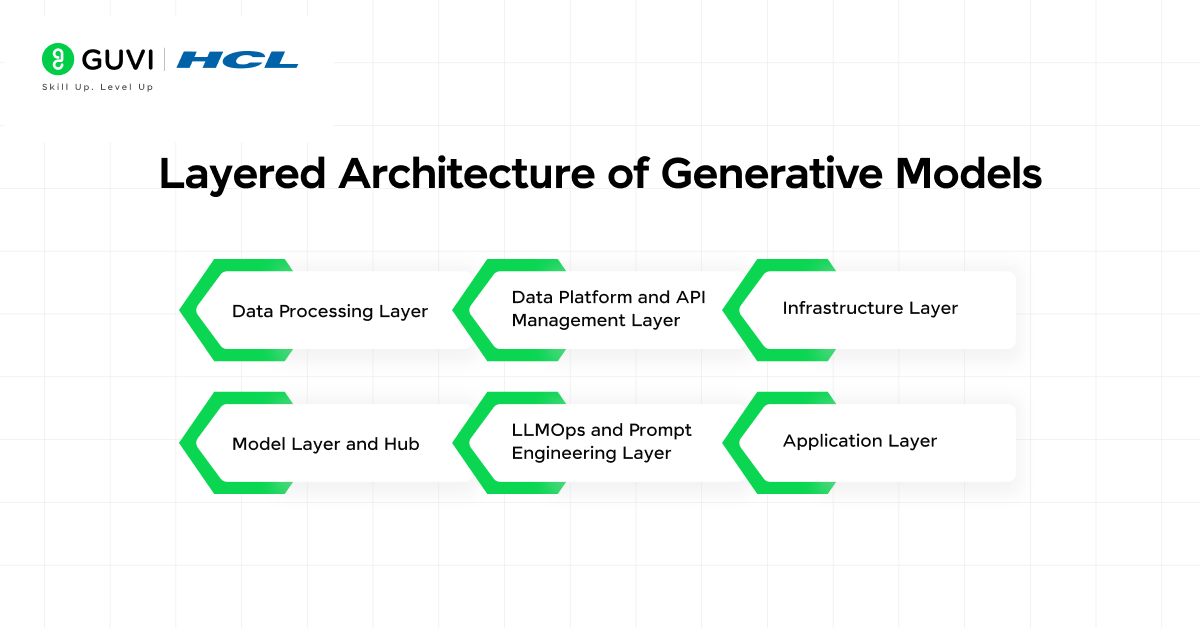

Layered Architecture of Generative Models

The structure of a generative AI model functions like an intelligent stack where each layer has a defined role yet remains interdependent. Together, they create a system that can collect, process, learn, and produce results that align closely with human context. This layered design is what allows generative models to scale and operate efficiently without losing coherence between data and response.

1. Data Processing Layer

This layer forms the groundwork for any generative model. It ensures that information entering the system is organized, accurate, and meaningful. Without this foundation, even the most advanced models struggle to interpret inputs correctly.

Purpose: Collects, cleans, and transforms data so that it aligns with model training requirements.

Core Functions:

- Normalization: Brings all data to a consistent scale and format.

- Data Augmentation: Expands the dataset by generating logical variations.

- Shuffling and Splitting: Distributes data evenly for unbiased training and testing.

- Filtering: Removes duplicates and irrelevant samples to improve clarity.
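The core functions above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a production pipeline: the `preprocess` function, its parameters, and the sample values are all hypothetical, the data is a flat list of numbers, and data augmentation is left out because it depends heavily on the data type.

```python
import random

def preprocess(records, test_fraction=0.2, seed=0):
    """Filter, normalize, shuffle, and split a list of numeric samples."""
    # Filtering: drop missing values and exact duplicates, keeping first-seen order.
    unique = list(dict.fromkeys(r for r in records if r is not None))
    # Normalization: min-max scale every value into the range [0, 1].
    lo, hi = min(unique), max(unique)
    span = (hi - lo) or 1.0  # avoid division by zero when all values are equal
    scaled = [(r - lo) / span for r in unique]
    # Shuffling and splitting: a seeded shuffle keeps the split reproducible.
    rng = random.Random(seed)
    rng.shuffle(scaled)
    cut = len(scaled) - round(len(scaled) * test_fraction)
    return scaled[:cut], scaled[cut:]

train, held_out = preprocess([4.0, 8.0, None, 4.0, 6.0, 10.0, 2.0])
print(len(train), len(held_out))  # 4 1
```

Fixing the shuffle seed is a deliberate choice here: it makes the train and held-out sets identical across runs, which simplifies comparing experiments.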

2. Data Platform and API Management Layer

Once processed, data must flow through systems that allow models to access it efficiently. This layer manages those pipelines and governs how external tools interact with the model through secure APIs. It supports structured and unstructured data exchange across environments.

Purpose: Enables seamless data connectivity and operational consistency.

Core Functions:

- Pipeline Management: Controls data ingestion and output delivery.

- API Integration: Connects the model with applications and databases.

- Access Control: Ensures only verified systems and users can communicate with the model.

- Real-time Updates: Supports live data feeds for adaptive learning.

3. Infrastructure Layer

Every generative AI model requires a strong technological backbone to operate efficiently. The infrastructure layer provides the compute and scaling capabilities that power training and inference. It is designed so that the system can handle large workloads and remain stable across distributed environments.

Purpose: Offers the hardware and cloud foundation needed for sustained model performance.

Core Functions:

- High-Performance Computing: Uses GPUs or TPUs to accelerate deep learning.

- Scalable Storage: Manages growing data and model versions securely.

- Parallel Training: Allows simultaneous computations for faster learning cycles.

- System Optimization: Balances speed, resource use, and cost efficiency.

4. Model Layer and Hub

This is the center of intelligence within the architecture. The model layer contains the generative frameworks that learn from data and produce meaningful outcomes. It includes large language models and diffusion models. It also includes multimodal systems capable of handling text and other inputs.

Purpose: Holds the trained models responsible for generating intelligent outputs.

Core Functions:

- Model Training: Adjusts parameters and weights to minimize prediction error.

- Fine-Tuning: Refines pre-trained models for specific domains or languages.

- Model Hosting: Stores and manages different model versions for access.

- Performance Evaluation: Tests output quality and coherence against benchmarks.

5. LLMOps and Prompt Engineering Layer

Operational intelligence begins at this layer. It ensures that large language models are deployed, maintained, and refined over time. Prompt engineering defines how users interact with these systems and further shapes the clarity and tone of responses.

Purpose: Maintains operational stability and human alignment for deployed models.

Core Functions:

- Model Deployment: Oversees rollout and scaling across environments.

- Monitoring: Tracks output quality and detects model drift.

- Prompt Optimization: Refines phrasing to improve response accuracy.

- Feedback Integration: Uses human and automated reviews to update behavior.
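The monitoring function listed above can be illustrated with a very small drift check. This is a sketch under assumptions rather than a standard LLMOps API: the `drift_detected` helper, the `tolerance` threshold, and the quality scores (imagined as per-response ratings between 0 and 1) are all hypothetical.

```python
from statistics import mean

def drift_detected(baseline_scores, recent_scores, tolerance=0.05):
    """Return True when recent quality falls more than `tolerance` below baseline."""
    return mean(baseline_scores) - mean(recent_scores) > tolerance

baseline = [0.90, 0.92, 0.88, 0.91]   # scores collected at deployment time
healthy = [0.89, 0.91, 0.90]          # recent scores, roughly unchanged
degraded = [0.80, 0.78, 0.83]         # recent scores after a quality drop

print(drift_detected(baseline, healthy))   # False
print(drift_detected(baseline, degraded))  # True
```

In practice the scores would come from human review or automated evaluation, and a flagged drift would feed the feedback-integration step.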

6. Application Layer

This layer connects technology to human experience. It converts the capabilities of generative models into tools that people use in real life, from conversational agents and creative platforms to research assistants and productivity suites.

Purpose: Delivers AI functionality through accessible and reliable user experiences.

Core Functions:

- Product Integration: Embeds AI models into business or consumer applications.

- Personalization: Tailors behavior to user preferences and context.

- Ethical Guardrails: Implements privacy and fairness safeguards within usage.

- Performance Tracking: Measures how effectively AI features meet user intent.

Supplementary Layers in Generative AI Architecture

| Layer | Purpose | Key Functions |
| --- | --- | --- |
| Monitoring and Governance Layer | Maintains system reliability and ethical control. | Tracks performance, detects anomalies, and enforces compliance. |
| Security and Privacy Layer | Protects model data and user information. | Uses encryption, anonymization, and secure access protocols. |
| Evaluation and Quality Assurance Layer | Verifies accuracy and contextual integrity of outputs. | Benchmarks results and filters inconsistent responses. |
| Human Feedback Layer | Brings human insight into AI refinement. | Reviews outputs, corrects bias, and improves contextual fit. |
| Explainability Layer | Makes AI reasoning visible and understandable. | Shows decision paths and clarifies prediction logic. |
| Deployment Layer | Delivers models into practical environments. | Integrates APIs and connects models with real-time systems. |
| User Experience Layer | Shapes how users interact with AI tools. | Designs natural interfaces and response behavior. |
| Continuous Learning Layer | Keeps intelligence current and adaptive. | Updates knowledge through new data and feedback. |
| Sustainability Layer | Balances performance with energy efficiency. | Reduces computational load and resource use. |

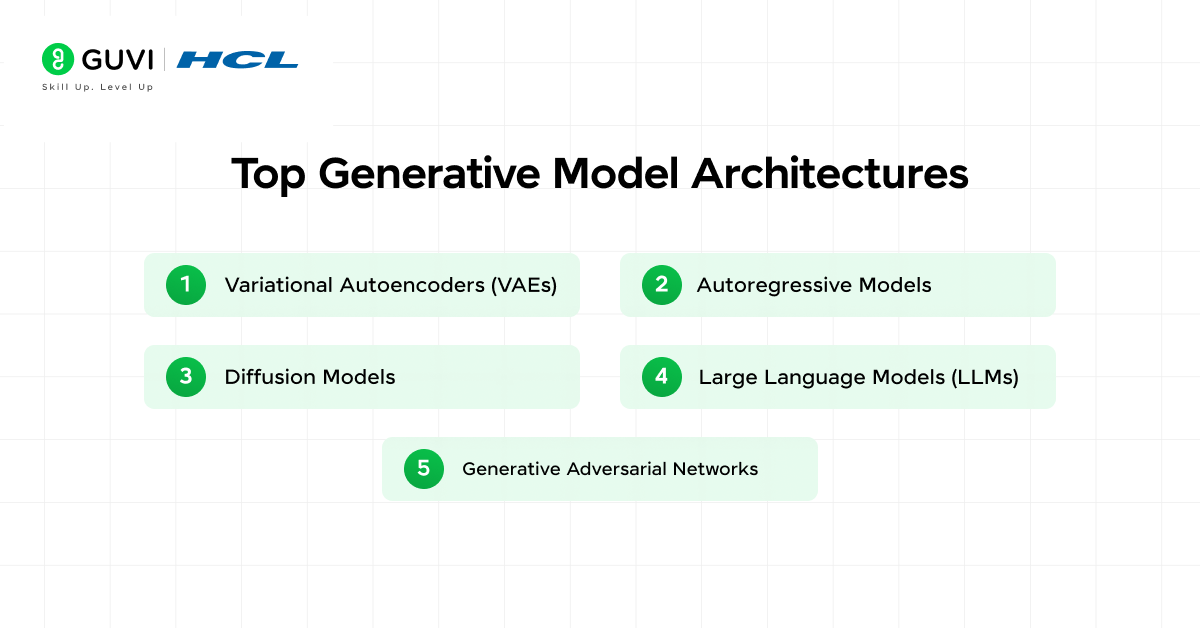

Top Generative Model Architectures

1. Variational Autoencoders (VAEs)

Variational Autoencoders are designed to understand the hidden structure of data. They compress information into a compact form and rebuild it to resemble the original input. This approach teaches the model to focus on meaning instead of memorizing details, which allows it to generate smooth and varied results.

Core Function:

- Compresses data into a lower-dimensional representation and reconstructs it accurately.

Key Features:

- Learns essential features rather than repeating input data.

- Produces controlled and diverse creative outputs.

- Commonly used for design prototypes and content synthesis.
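The compress-and-reconstruct idea described above is usually written as a single training objective, the evidence lower bound (ELBO). The notation here is the conventional one and is not taken from this article: $q_\phi(z \mid x)$ is the encoder, $p_\theta(x \mid z)$ is the decoder, and $p(z)$ is a prior over the latent code, commonly assumed to be a standard normal distribution.

$$
\mathcal{L}(\theta, \phi; x) = \mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big] - D_{\mathrm{KL}}\big(q_\phi(z \mid x) \,\|\, p(z)\big)
$$

The first term rewards accurate reconstruction, and the second keeps the encoded representations close to the prior, which is what makes sampling new, varied outputs possible.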

2. Generative Adversarial Networks (GANs)

Generative Adversarial Networks work through a creative rivalry between two neural systems. One generates data while the other evaluates its authenticity. This process of feedback and refinement helps the model improve over time and create results that closely match reality.

Core Function:

- Uses two networks that train together to enhance quality and realism.

Key Features:

- Produces highly realistic images, videos, and 3D assets.

- Improves output through repeated evaluation and adjustment.

- Applied in the entertainment and visual simulation fields.
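The rivalry between the two networks is commonly expressed as a minimax objective. The symbols below follow the standard formulation and are not from this article: $G$ is the generator, $D$ is the discriminator, $p_{\text{data}}$ is the distribution of real samples, and $p_z$ is the noise distribution fed to the generator.

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to maximize this value by telling real from generated samples, while the generator tries to minimize it by producing samples the discriminator accepts as real.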

3. Autoregressive Models

Autoregressive models focus on prediction through sequence understanding. They analyze previous data points to generate the next element in a pattern, maintaining logical order and consistency. This structure allows them to handle tasks where flow and context must remain intact.

Core Function:

- Predicts subsequent outputs based on earlier inputs in a sequence.

Key Features:

- Works effectively for text, code, and music generation.

- Maintains coherence across long content structures.

- Builds responses that adapt naturally to context.
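Next-element prediction can be shown with a toy bigram model, which is a deliberately tiny stand-in for the neural networks used in practice. The function names `train_bigram` and `generate` and the sample corpus are illustrative assumptions.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def generate(counts, start, max_len=8):
    """Greedy decoding: repeatedly append the most frequent next token."""
    out = [start]
    # Stop at max_len or when the last token was never followed by anything.
    while len(out) < max_len and counts[out[-1]]:
        out.append(counts[out[-1]].most_common(1)[0][0])
    return out

corpus = "the cat sat on the mat and the cat sat on the rug".split()
model = train_bigram(corpus)
print(" ".join(generate(model, "the", max_len=5)))  # the cat sat on the
```

Each step conditions only on the previous token here. Real autoregressive models condition on a much longer context, which is how they keep long outputs coherent.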

4. Diffusion Models

Diffusion models start from disorder and refine information step by step until structure emerges. They learn to reverse random noise into clear, meaningful patterns. This method produces visuals and data that appear organic and balanced.

Core Function:

- Converts random input into detailed and meaningful outputs through gradual refinement.

Key Features:

- Generates realistic images and visual art with high precision.

- Offers creative control over quality and detail.

- Forms the base of many image and video generation tools.
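One common way to formalize the noise-to-structure process is the DDPM-style formulation, shown here as an assumption about notation rather than the only approach. Noise is added over steps $t = 1, \dots, T$ with a small variance schedule $\beta_t$, and a network $\epsilon_\theta$ is trained to predict the noise that was added.

$$
q(x_t \mid x_{t-1}) = \mathcal{N}\!\left(x_t;\ \sqrt{1-\beta_t}\,x_{t-1},\ \beta_t I\right),
\qquad
x_t = \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon,
\quad
\bar\alpha_t = \prod_{s=1}^{t} (1-\beta_s)
$$

$$
\mathcal{L}_{\text{simple}} = \mathbb{E}_{t,\,x_0,\,\epsilon}\left[\lVert \epsilon - \epsilon_\theta(x_t, t) \rVert^2\right]
$$

Generation then runs the process in reverse: starting from pure noise, the model removes a little of the predicted noise at each step until a clean sample remains.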

5. Large Language Models (LLMs)

Large language models learn language through exposure to massive text datasets. They recognize patterns, tone, and meaning to produce text that feels natural and contextually aware. Their ability to interpret instructions and respond conversationally has redefined how people interact with technology.

Core Function:

- Processes and generates text using deep contextual understanding.

Key Features:

- Understands nuance and relationships between words.

- Supports chat systems and reasoning engines.

- Powers conversational AI products such as ChatGPT and Google Gemini.
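At generation time, a language model assigns a score (a logit) to every token in its vocabulary and converts those scores into probabilities before choosing the next token. The sketch below shows that conversion with a temperature parameter. The three-word vocabulary and the logit values are made up for illustration.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

vocab = ["cat", "dog", "car"]      # hypothetical vocabulary
logits = [2.0, 1.0, 0.1]           # hypothetical model scores

probs = softmax(logits)
sharp = softmax(logits, temperature=0.5)
for token, p, s in zip(vocab, probs, sharp):
    print(f"{token}: {p:.3f} (T=1.0), {s:.3f} (T=0.5)")
```

Lowering the temperature concentrates probability on the top-scoring token, which makes output more predictable. Raising it spreads probability out and makes output more varied.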


Step-by-Step Guide: How Generative AI Works

Step 1. Data Collection

The process begins with gathering large amounts of data from varied sources such as text, images, audio, and code. The quality and diversity of this data determine how accurately the model can represent real-world concepts. Systems often rely on curated datasets that reflect language patterns, visual styles, or domain-specific knowledge.

Step 2. Data Preprocessing

Once collected, the data is cleaned and formatted to remove inconsistencies and noise. This stage ensures that the model trains on information that is accurate and organized. Data preprocessing also involves labeling and normalizing data so that it can be interpreted correctly during training.

Step 3. Model Training

The training phase is where the model learns from data through repeated analysis and adjustment. It identifies connections, predicts outcomes, and adjusts internal parameters until it can generate outputs that resemble human-created results. This step requires extensive computational resources and carefully tuned training algorithms.

Step 4. Model Evaluation

After training, the model undergoes evaluation to measure accuracy, coherence, and performance. This involves comparing generated outputs with real examples or benchmark datasets. Weak areas are identified and corrected through further fine-tuning.

Step 5. Fine-Tuning and Optimization

Fine-tuning adjusts the trained model for specific applications or industries. For example, a general language model can be refined to understand medical text or creative writing. Optimization also focuses on reducing latency and improving efficiency during real-time generation.

Step 6. Generation and Output

Once trained and refined, the model begins generating new content based on input prompts. It predicts what comes next by referencing learned patterns and structures. This stage turns statistical learning into meaningful creation, whether that is text, an image, or a synthetic voice.

Step 7. Feedback and Continuous Learning

The process does not end at output. User interaction and real-world use provide ongoing feedback that helps improve performance. Systems can update or retrain periodically using new data, ensuring relevance and adaptability over time.

Computational Framework of Generative AI

Beyond architecture lies the system that powers every function of generative intelligence. The computational framework defines how models learn and respond under mathematical control. Each component of this framework contributes to how efficiently a model translates complexity into coherent output.

1. Gradient Optimization

Learning begins with error recognition. The model compares its output with expected results and adjusts its internal parameters to reduce that difference. Each iteration makes the network slightly more accurate, creating a cycle of gradual improvement that strengthens pattern recognition.

Key Focus:

- Uses gradient descent to refine weights after each prediction.

- Maintains stability with adaptive learning rates.

- Balances model sensitivity to avoid both overfitting and underfitting.
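The compare-and-adjust cycle described above can be sketched with plain gradient descent on a one-variable linear model. This is a minimal illustration: the `fit_line` function and the data are hypothetical, and it uses a fixed learning rate rather than the adaptive rates mentioned in the list.

```python
def fit_line(xs, ys, lr=0.05, steps=500):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Error recognition: difference between prediction and expected result.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Parameter update: step against the gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # generated from y = 2x + 1
w, b = fit_line(xs, ys)
print(round(w, 3), round(b, 3))  # 2.0 1.0
```

Deep networks follow the same loop, with gradients computed by backpropagation across many layers instead of by hand.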

2. Loss Functions and Regularization

Loss functions guide how a model measures success. They quantify the gap between predictions and reality, providing direction for improvement. Regularization ensures that learning remains balanced and does not drift toward memorizing data.

Key Focus:

- Cross-entropy and mean squared error losses compare predictions with actual data.

- Dropout layers and normalization control over-dependence on individual features.

- Regularization penalties maintain generalization across new inputs.
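The two loss functions named above, plus one kind of regularization penalty, fit in a few lines. The function names, the `strength` parameter, and the sample values are illustrative assumptions, and an L2 penalty is only one of several regularization options.

```python
import math

def mse(preds, targets):
    """Mean squared error for regression-style outputs."""
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(preds)

def cross_entropy(probs, target_index):
    """Cross-entropy for one example: negative log-probability of the true class."""
    return -math.log(probs[target_index])

def l2_penalty(weights, strength=0.01):
    """L2 regularization: penalizes large weights to discourage memorization."""
    return strength * sum(w * w for w in weights)

print(mse([2.5, 0.0], [3.0, -1.0]))                # 0.625
print(round(cross_entropy([0.7, 0.2, 0.1], 0), 4))  # 0.3567
# The training objective is typically the data loss plus the penalty.
total = mse([2.5, 0.0], [3.0, -1.0]) + l2_penalty([1.0, -2.0])
print(round(total, 3))                              # 0.675
```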

3. Parallelization and Compute Scaling

Generative models can contain billions of parameters, which demands specialized infrastructure. Parallel computing allows simultaneous training across multiple processors. This approach shortens training time, ideally without compromising accuracy.

Key Focus:

- Distributes computation across GPUs or TPUs.

- Syncs parameter updates for uniform learning progress.

- Uses checkpointing to preserve training progress during large-scale runs.
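The idea of distributing computation and syncing updates can be simulated without any special hardware. In this sketch each "worker" is just a loop iteration handling its own shard of data. The function names and data are hypothetical, and the shards are assumed to be equal in size so that averaging shard gradients matches the full-batch gradient.

```python
def gradient(w, shard):
    """Mean-squared-error gradient for the model y = w * x on one shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)

def data_parallel_step(w, shards, lr=0.01):
    # Each worker computes a gradient on its own shard (sequentially here).
    local_grads = [gradient(w, shard) for shard in shards]
    # Synchronization: average the gradients so every replica applies the same update.
    avg_grad = sum(local_grads) / len(local_grads)
    return w - lr * avg_grad

data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0), (4.0, 12.0)]  # y = 3x
shards = [data[:2], data[2:]]  # two equal-sized shards, one per worker

w_parallel = data_parallel_step(0.0, shards)
w_single = 0.0 - 0.01 * gradient(0.0, data)
print(w_parallel, w_single)
```

Both paths produce the same updated weight, which is the property that lets data-parallel training speed up learning without changing what is learned.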

4. Hyperparameter Optimization

Every generative system depends on precise tuning. The selection of learning rate, batch size, and activation function determines how quickly and accurately it learns. Automated tuning frameworks help identify the most efficient configurations through continuous testing.

Key Focus:

- Adjusts parameters based on data complexity.

- Runs controlled experiments for better convergence.

- Adapts dynamically to minimize error over time.
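A grid search is the simplest form of the controlled experiments described above. The sketch below tries every combination of two settings on a toy problem. The objective function, the grid values, and the use of step count as a second hyperparameter are assumptions for illustration; batch size and activation function are not modeled.

```python
from itertools import product

def final_loss(lr, steps):
    """Run gradient descent on f(w) = (w - 3)^2 and return the final loss."""
    w = 0.0
    for _ in range(steps):
        w -= lr * 2 * (w - 3)  # gradient of (w - 3)^2 is 2 * (w - 3)
    return (w - 3) ** 2

grid = {"lr": [0.001, 0.1, 1.1], "steps": [10, 50]}

# Evaluate every combination in the grid and keep the loss for each.
results = {
    (lr, steps): final_loss(lr, steps)
    for lr, steps in product(grid["lr"], grid["steps"])
}
best = min(results, key=results.get)
print(best)  # (0.1, 50)
```

The smallest learning rate barely moves, the largest one diverges, and the middle value converges. Automated tuning frameworks search for that middle ground across many more dimensions.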

5. Latent Space Representation

Generative models store knowledge in abstract dimensions known as latent spaces. These spaces capture relationships between concepts and allow the system to create new variations that remain logical. They represent how models internalize meaning rather than memorize examples.

Key Focus:

- Encodes compressed representations of learned patterns.

- Enables smooth interpolation between ideas.

- Supports creativity by connecting contextually similar points.
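Smooth interpolation between ideas amounts to moving along a line between two points in latent space. The sketch below uses linear interpolation on made-up three-dimensional vectors. In a real model the vectors would come from an encoder, and each intermediate point would be passed to a decoder to produce an output.

```python
def lerp(z_a, z_b, t):
    """Linear interpolation between two latent vectors for t in [0, 1]."""
    return [(1 - t) * a + t * b for a, b in zip(z_a, z_b)]

z_a = [0.0, 1.0, -2.0]   # hypothetical latent code for one concept
z_b = [4.0, -1.0, 2.0]   # hypothetical latent code for another

# Five evenly spaced points from z_a to z_b, inclusive.
path = [lerp(z_a, z_b, i / 4) for i in range(5)]
for point in path:
    print(point)
```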

Conclusion

Generative AI represents a new stage in computational intelligence where creativity and logic coexist. Its layered and computational designs allow machines to learn meaning, not just data. As models evolve, their ability to understand, adapt, and create mirrors human reasoning more closely, shaping a future where artificial intelligence becomes a silent collaborator in innovation.

FAQs

1. What makes the architecture of generative AI different from traditional machine learning models?

Generative AI models are designed to create new content instead of only analyzing data. Their layered architecture combines encoding, pattern recognition, and synthesis, which allows them to produce realistic text or sound that mimics human creativity.

2. How do large language models fit into generative AI architecture?

Large language models form the core of modern generative systems. They process massive datasets using deep learning to understand grammar and semantics. Within the architecture, they connect contextual understanding with creative generation, enabling conversational and content-driven outputs.

3. What are the main components of a generative AI system?

A complete generative AI system includes data processing, model training, and output layers. Supporting modules such as APIs, feedback loops, and monitoring systems ensure smooth performance, ethical use, and continuous learning for more accurate and adaptive results.
