What is DeepFaceDrawing? Turning Sketches into Real Faces

Last Updated: Sep 10, 2025 · 3 Min Read

Have you ever wished your rough doodles could magically transform into photorealistic portraits? If you grew up watching the iconic Indian TV series Shaka Laka Boom Boom, you probably remember the Magic Pencil that could bring drawings to life.

While we’re still a long way from conjuring objects out of thin air, researchers have built a system that feels like a step in that direction: DeepFaceDrawing. Created by a team from the Chinese Academy of Sciences and City University of Hong Kong and presented at SIGGRAPH 2020, DeepFaceDrawing is a deep learning-based system that turns even incomplete or amateur freehand face sketches into highly realistic human faces.

Traditional sketch-to-photo models tend to need clean, precise inputs and give mixed results on anything rougher. DeepFaceDrawing, by contrast, works surprisingly well even with messy or minimal sketches, which makes it accessible to everyday users, not just professional artists. Let’s take a closer look at how it works.

Table of contents

- How Does DeepFaceDrawing Work?

- Creative Control and Customization

- Applications and Real-World Use Cases

- What’s New in 2025?

- Ethical Considerations and Limitations

- Conclusion

How Does DeepFaceDrawing Work?

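To understand how this tool works, imagine feeding it a rough sketch with a few key facial cues, such as eye shape, mouth position, or jawline. Many earlier sketch-to-image generative adversarial networks (GANs) treat every stroke as a hard constraint and translate it more or less directly into the output, so any wobble in the drawing shows up in the final face. DeepFaceDrawing takes a different approach.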

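It treats your sketch as a soft guide. The sketch is split into facial components, each component is mapped to a point in a learned feature (latent) space, and that point is compared with similar components learned from a large training set of face sketch-image pairs. The result is a face that stays close to your drawing while remaining plausible.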
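To make the first step more concrete, here is a minimal Python/NumPy sketch of splitting an aligned sketch into component patches and turning each patch into a fixed-length feature vector. It is an illustration under stated assumptions, not the authors’ code: the crop windows in `COMPONENT_BOXES` are hypothetical, the sketch is assumed to be a 512x512 grayscale array with a white background (1.0) and dark strokes (0.0), and `toy_encode` is a stand-in that simply average-pools each patch, whereas the real system uses a trained autoencoder per component.

```python
import numpy as np

BACKGROUND = 1.0  # assumption: white paper = 1.0, dark strokes = 0.0

# Hypothetical crop windows (top, left, size) on a 512x512 aligned sketch.
# Illustrative values only; the windows are allowed to overlap.
COMPONENT_BOXES = {
    "left_eye": (200, 130, 128),
    "right_eye": (200, 254, 128),
    "nose": (240, 176, 160),
    "mouth": (320, 160, 192),
}


def crop_components(sketch: np.ndarray) -> dict:
    """Split a 512x512 sketch into four component patches plus a 'remainder' image."""
    if sketch.shape != (512, 512):
        raise ValueError("expected an aligned 512x512 sketch")
    patches = {}
    remainder = sketch.copy()
    for name, (top, left, size) in COMPONENT_BOXES.items():
        patches[name] = sketch[top:top + size, left:left + size]
        # Blank out the component so the remainder keeps only the outline, hair, etc.
        remainder[top:top + size, left:left + size] = BACKGROUND
    patches["remainder"] = remainder
    return patches


def toy_encode(patch: np.ndarray, grid: int = 16) -> np.ndarray:
    """Stand-in for a trained encoder: average-pool to grid x grid, then flatten."""
    h, w = patch.shape
    if h % grid or w % grid:
        raise ValueError("patch size must be divisible by grid")
    pooled = patch.reshape(grid, h // grid, grid, w // grid).mean(axis=(1, 3))
    return pooled.ravel()  # vector of length grid * grid


if __name__ == "__main__":
    sketch = np.full((512, 512), BACKGROUND)
    sketch[250:260, 150:230] = 0.0  # a single dark stroke inside the left-eye window
    features = {name: toy_encode(p) for name, p in crop_components(sketch).items()}
    for name, vec in features.items():
        print(name, vec.shape, round(float(vec.min()), 3))
```

Encoding each component separately is what later lets the system refine or edit one facial feature without disturbing the others.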

Here’s a simplified breakdown of the process:

- Feature Decomposition Using Autoencoders: The sketch is split into five component regions: the left eye, right eye, nose, mouth, and the rest of the face (such as the overall face shape). A separate autoencoder for each component encodes its patch into a compact feature vector.

- Component Matching from the Training Data: Each component’s feature vector is compared with the features of the same component extracted from thousands of training sketch-image pairs, and the closest matches (its nearest neighbors) are retrieved.

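- Manifold Projection and Feature Mapping: The retrieved neighbors approximate a local patch of the manifold of plausible faces for that component. The input feature is projected onto this patch by expressing it as a weighted combination of its neighbors, which nudges imperfect strokes toward realistic shapes. A Feature Mapping Module then converts the refined component vectors into spatial feature maps.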

- Image Synthesis: Finally, the Image Synthesis Module, a conditional GAN, combines the component feature maps into a single, cohesive, photorealistic face.
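The matching and projection steps can be illustrated with a short NumPy sketch. It follows a locally linear embedding (LLE) style formulation, which is our reading of how the manifold projection works: find the K nearest training features for one component, solve for weights that sum to 1 and best reconstruct the input from those neighbors, and return the weighted combination as the refined feature. The feature bank is random placeholder data, and `k` and the regularization constant `reg` are illustrative assumptions, not values taken from the paper.

```python
import numpy as np


def lle_weights(query: np.ndarray, neighbors: np.ndarray, reg: float = 1e-3) -> np.ndarray:
    """Weights w with sum(w) == 1 that (approximately) minimize ||query - w @ neighbors||^2."""
    diff = neighbors - query                   # (K, D): neighbors centred on the query
    gram = diff @ diff.T                       # (K, K) local Gram matrix
    # Regularize: the Gram matrix is singular whenever K > D or neighbors are collinear.
    gram = gram + reg * max(np.trace(gram), 1e-12) * np.eye(len(neighbors))
    w = np.linalg.solve(gram, np.ones(len(neighbors)))
    return w / w.sum()                         # enforce the sum-to-one constraint


def project_to_manifold(query: np.ndarray, bank: np.ndarray, k: int = 10):
    """Replace `query` by a weighted combination of its k nearest rows in `bank`."""
    dists = np.linalg.norm(bank - query, axis=1)
    idx = np.argsort(dists)[:k]                # indices of the nearest training features
    w = lle_weights(query, bank[idx])
    return w @ bank[idx], idx, w


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    bank = rng.normal(size=(1000, 256))             # placeholder for encoded training components
    rough = bank[42] + 0.3 * rng.normal(size=256)   # a "wobbly" version of a known component
    refined, idx, w = project_to_manifold(rough, bank)
    print("nearest neighbors:", idx[:3], "weights sum:", round(float(w.sum()), 6))
```

Because the refined feature is built only from real training components, even a shaky or half-drawn input ends up somewhere plausible, which is the intuition behind the tolerance to incomplete sketches.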

One of the most remarkable aspects of DeepFaceDrawing is that it works well even with incomplete inputs. For instance, if a user provides only partial facial features (like just the eyes and the mouth), the system fills in the missing parts plausibly and naturally. You can also modify specific components, like adjusting the size of an eyebrow or the tilt of a smile, and the system responds accordingly, generating a new face with the desired tweaks.

Also Read: What is Object Detection: A Comprehensive Guide

Creative Control and Customization

Another interesting feature is the ability to control the degree of resemblance between the input sketch and the generated image. You can choose whether the final output should closely match your sketch or reflect only a rough interpretation of it.

This flexibility makes DeepFaceDrawing useful not just for sketch conversion but also for creative exploration, character generation, and even artistic experimentation.
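One simple way to picture this resemblance control is a per-component slider that linearly interpolates between the feature encoded from your raw sketch and its projected, more plausible counterpart. The sketch below assumes exactly that linear blend; the function names, the `faithfulness` parameter, and its 0-to-1 range are illustrative choices, not the original system’s interface.

```python
import numpy as np


def blend_features(sketch_feat: np.ndarray, projected_feat: np.ndarray, faithfulness: float) -> np.ndarray:
    """1.0 keeps the raw sketch feature; 0.0 uses the fully projected (most plausible) feature."""
    if not 0.0 <= faithfulness <= 1.0:
        raise ValueError("faithfulness must be between 0 and 1")
    return faithfulness * sketch_feat + (1.0 - faithfulness) * projected_feat


def blend_face(sketch_feats: dict, projected_feats: dict, sliders: dict, default: float = 0.0) -> dict:
    """Apply a separate slider to each component; components without a slider use `default`."""
    return {
        name: blend_features(sketch_feats[name], projected_feats[name], sliders.get(name, default))
        for name in sketch_feats
    }


if __name__ == "__main__":
    raw = {"left_eye": np.array([0.0, 2.0]), "mouth": np.array([1.0, 1.0])}
    plausible = {"left_eye": np.array([1.0, 1.0]), "mouth": np.array([3.0, 5.0])}
    # Keep the exaggerated left eye close to the drawing; let the mouth snap to a realistic shape.
    print(blend_face(raw, plausible, {"left_eye": 0.9}))
```

A slider near 1 preserves deliberate exaggerations such as an oversized eye, while values near 0 let the model correct wobbly strokes.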

Want to draw a winking woman with exaggerated eyes? Done.

Do you want a guy with one eye and eyebrow smaller than the other? You got it.

Do you want to draw his not-so-identical twin? At your service.

Such applications open up doors for both professionals (like forensic artists or digital creators) and casual users experimenting with digital portraits.

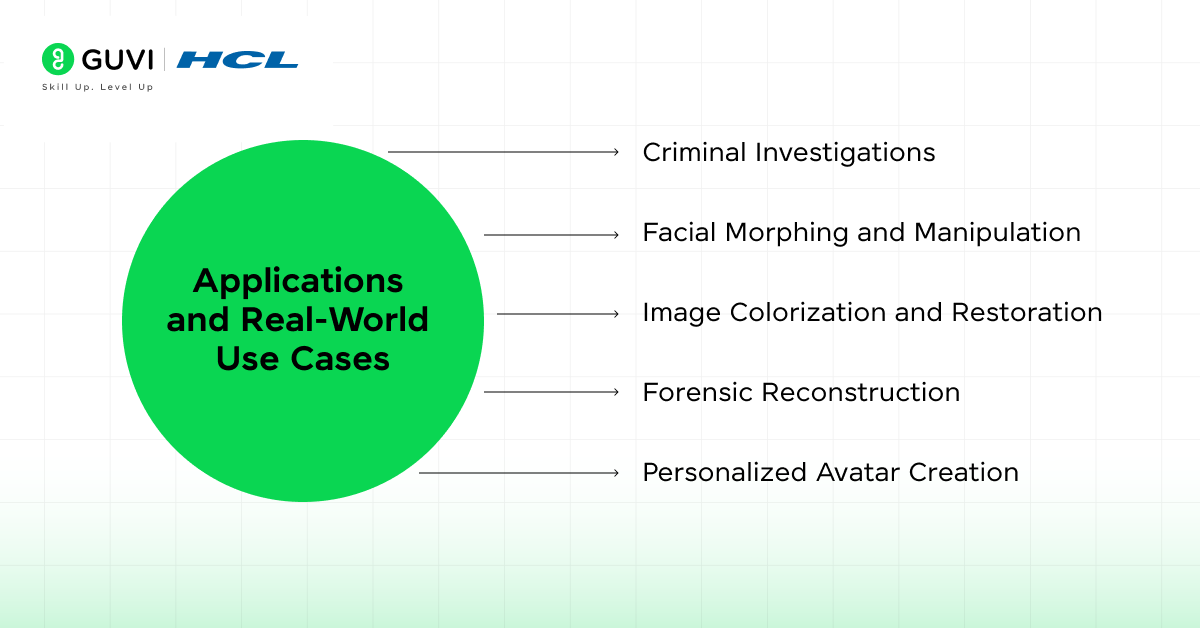

Applications and Real-World Use Cases

Since its original release, DeepFaceDrawing has been praised for its practicality and potential in several domains:

- Criminal Investigations: Law enforcement agencies can use the system to quickly generate suspect profiles from witness sketches. Even vague or partial witness descriptions can produce a face that aids identification.

- Facial Morphing and Manipulation: Artists and game developers can use the tool to generate multiple facial variations or merge characteristics from different sketches to create new faces.

- Image Colorization and Restoration: With additional training, the system can also be adapted to colorize black-and-white sketches or restore degraded facial images.

- Forensic Reconstruction: Sketches based on skeletal or partial remains can be refined and humanized into photo-realistic images for public identification or archival purposes.

- Personalized Avatar Creation: In the context of gaming and virtual social platforms, users can create avatars based on drawn sketches, making character design faster and more intuitive.

Find out: Object Detection using Deep Learning: A Practical Guide

What’s New in 2025?

Since DeepFaceDrawing was first published, image generation has advanced rapidly. StyleGAN3 has pushed the quality of GAN-generated faces, and text-to-image systems such as DALL·E 3 and Adobe Firefly can produce detailed images from written prompts. Even so, DeepFaceDrawing remains relevant for sketch-based interaction, where a drawing conveys facial geometry more directly than a text prompt, especially in privacy-sensitive or police workflows.

Recent extensions of the original work now support:

- Profile and angled faces, not just front-facing ones

- Accessories like glasses and hats, which previously caused issues

- Multi-ethnic facial feature modeling

- Higher resolution outputs suitable for media use

Integration into forensic software and animation pipelines has also grown, reflecting its wider utility.

Ethical Considerations and Limitations

As with any AI tool that manipulates or generates human faces, ethical concerns arise. DeepFaceDrawing has the potential to be misused for:

- Creating deepfakes or fake identities

- Generating misleading or defamatory images

- Manipulating public perception through altered visual data

To mitigate misuse, researchers and developers must implement strict usage controls, watermarking, traceability, and access limitations. In addition, systems trained on more diverse and representative datasets can help reduce bias and produce more equitable outputs.
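Watermarking proper embeds a signal in the pixels themselves; a simpler, complementary form of traceability is a signed provenance record stored alongside each generated image. The sketch below uses Python’s standard `hmac`, `hashlib`, and `json` modules to bind an image’s SHA-256 hash to generation metadata with an HMAC-SHA256 tag, so later changes to either the image or the metadata can be detected by anyone holding the key. The record layout and field names are assumptions for illustration, and because the record can simply be discarded, it does not replace an in-image watermark.

```python
import hashlib
import hmac
import json


def sign_output(image_bytes: bytes, metadata: dict, key: bytes) -> dict:
    """Return a provenance record: metadata + image hash + HMAC-SHA256 tag over both."""
    record = {**metadata, "sha256": hashlib.sha256(image_bytes).hexdigest()}
    payload = json.dumps(record, sort_keys=True).encode("utf-8")  # canonical field order
    record["tag"] = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return record


def verify_output(image_bytes: bytes, record: dict, key: bytes) -> bool:
    """True only if the image and every metadata field are unchanged and the key matches."""
    claimed = dict(record)
    tag = claimed.pop("tag", "")
    if claimed.get("sha256") != hashlib.sha256(image_bytes).hexdigest():
        return False
    payload = json.dumps(claimed, sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(tag, expected)  # constant-time comparison


if __name__ == "__main__":
    key = b"replace-with-a-secret-key"
    image = b"placeholder bytes of a generated image"
    rec = sign_output(image, {"model": "sketch-to-face", "user": "analyst-07"}, key)
    print(verify_output(image, rec, key))         # unchanged image and record
    print(verify_output(image + b"x", rec, key))  # tampered image
```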

Transparency, accountability, and responsible deployment must accompany the power of AI-based image generation.

If you want to learn more about how machine learning helps in our day-to-day lives and how it can affect your surroundings, consider enrolling in HCL GUVI’s IITM Pravartak Certified Artificial Intelligence and Machine Learning course, which covers NLP, cloud technologies, deep learning, and much more, taught directly by industry experts.

Discover: Understanding Sea-Thru: How It Works and Why It Matters

Conclusion

In conclusion, DeepFaceDrawing is more than just a clever AI gimmick. It represents a leap in human-computer creativity, where even the most novice sketcher can produce realistic faces with just a few strokes. From law enforcement to digital art, its potential is vast, and its ease of use makes it a powerful tool in the democratization of AI.

But as we continue to develop tools that blur the line between real and synthetic, it’s imperative to proceed with caution. Used ethically, DeepFaceDrawing can be a force for creativity, accessibility, and innovation. Misused, it can contribute to misinformation and erosion of trust in digital media.

Did you enjoy this article?