The Power of Human Feedback in ChatGPT and RLHF Training

Last updated: Sep 10, 2025

In the ever-evolving landscape of Artificial Intelligence, large language models have redefined how machines understand and generate human language. But behind every impressive chatbot response or AI-generated article lies a critical component that often goes unnoticed: human feedback.

As we move beyond traditional training methods, Reinforcement Learning from Human Feedback (RLHF) has emerged as a game-changing approach that enables models like ChatGPT to become more accurate, context-aware, and aligned with human intent.

This article explores how human feedback powers the next generation of NLP, the evolution of language models, and why RLHF has become the gold standard in making AI more relatable, reliable, and responsible.

Table of contents

- Basics and Evolution of Language Models

- Brief history of the evolution of language models

- Why Human Feedback Matters

- What is RLHF?

- ChatGPT: A Flagship RLHF Example

- Why RLHF Has Gained Momentum

- Improving language models with human feedback

- Benefits of RLHF-Enhanced Training

- Challenges and Limitations

- Conclusion

Basics and Evolution of Language Models

Language models are programs that process and generate human language. They are a core component of natural language processing (NLP), the field of artificial intelligence (AI) concerned with enabling computers to analyze, understand, and generate human language.

Language models are designed to predict the likelihood of a sequence of words or phrases, based on the context in which they appear. This is known as language modeling, and it is a key task in NLP. Language models are used in a wide range of applications, such as speech recognition, machine translation, text-to-speech conversion, and chatbots.
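To make the idea of predicting the likelihood of a word sequence concrete, here is a minimal sketch of a bigram model, one of the simplest probabilistic approaches. The toy corpus, the function names, and the add-one smoothing are assumptions chosen for illustration; modern LLMs use neural networks rather than raw counts.

```python
from collections import Counter, defaultdict


def train_bigram(sentences):
    """Count how often each word follows each previous word."""
    counts = defaultdict(Counter)
    vocab = set()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        vocab.update(tokens)
        for prev, word in zip(tokens, tokens[1:]):
            counts[prev][word] += 1
    return counts, vocab


def sequence_probability(sentence, counts, vocab):
    """Probability of a sentence under the bigram model with add-one smoothing."""
    tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
    prob = 1.0
    for prev, word in zip(tokens, tokens[1:]):
        numerator = counts[prev][word] + 1
        denominator = sum(counts[prev].values()) + len(vocab)
        prob *= numerator / denominator
    return prob


corpus = ["the cat sat", "the cat ran", "the dog sat"]  # hypothetical toy corpus
counts, vocab = train_bigram(corpus)
likely = sequence_probability("the cat sat", counts, vocab)
unlikely = sequence_probability("sat the cat", counts, vocab)
print(likely > unlikely)  # word order seen in the corpus scores higher
```

The model assigns a higher probability to a word order it has seen than to a scrambled one, which is the core behavior that larger language models refine.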

“The evolution of language models has led to the development of more sophisticated models that can handle the complexity and variability of natural language, with applications in areas such as machine translation, chatbots, and virtual assistants.”

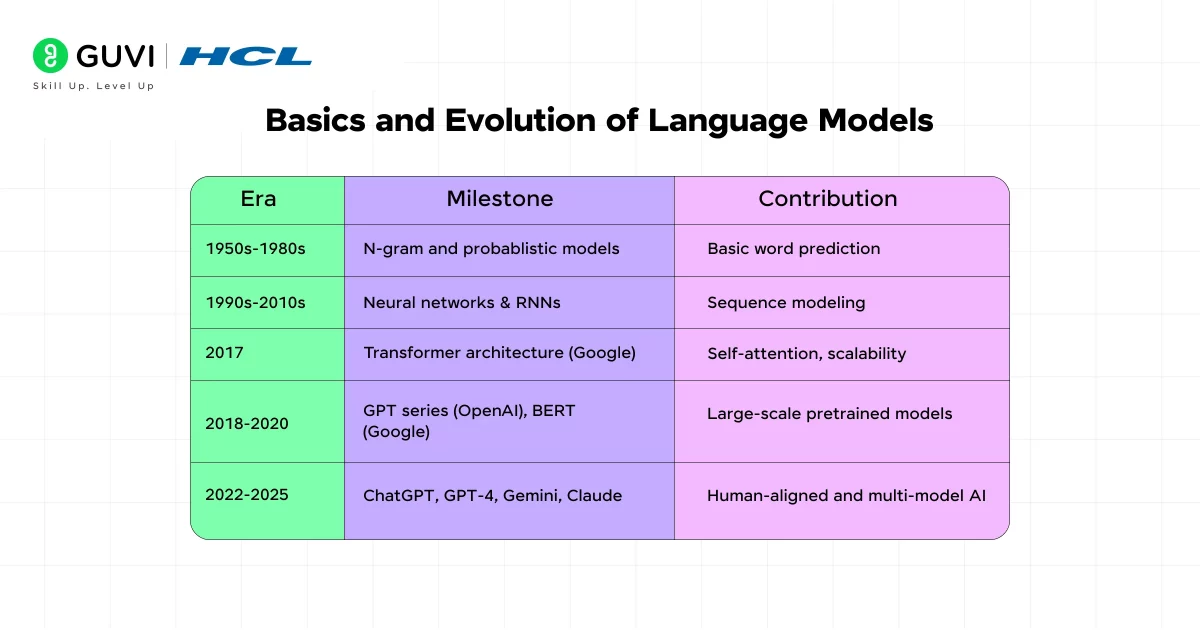

Brief history of the evolution of language models

| Era | Milestone | Contribution |
| --- | --- | --- |
| 1950s–1980s | N-gram and probabilistic models | Basic word prediction |
| 1990s–2010s | Neural networks & RNNs | Sequence modeling |
| 2017 | Transformer architecture (Google) | Self-attention, scalability |
| 2018–2020 | GPT series (OpenAI), BERT (Google) | Large-scale pretrained models |
| 2022–2025 | ChatGPT, GPT-4, Gemini, Claude | Human-aligned and multi-modal AI |

Why Human Feedback Matters

While pretraining on massive datasets enables LLMs to generate fluent text, it doesn’t always ensure alignment with human intent. Human feedback helps:

- Correct misinterpretations or hallucinations

- Improve response relevance and tone

- Guide models toward ethical and responsible behavior

- Enable incremental improvement through fine-tuning rather than retraining from scratch

What is RLHF?

Reinforcement Learning from Human Feedback (RLHF) is a method where human-generated ratings, preferences, or corrections are used to fine-tune a model’s behavior. Instead of only learning to predict the next token in a dataset, the model learns to produce outputs that humans judge to be useful or correct.
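As a rough illustration of what such feedback can look like as data, the sketch below converts per-response ratings into pairwise preferences. The class names, the 1-5 rating scale, and the example responses are hypothetical and not taken from any specific RLHF toolkit.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass
class RatedResponse:
    text: str
    rating: int  # hypothetical 1-5 score from a human annotator


@dataclass
class PreferencePair:
    prompt: str
    chosen: str
    rejected: str


def ratings_to_pairs(prompt, responses):
    """Turn per-response ratings into (chosen, rejected) pairs; ties are skipped."""
    pairs = []
    for a, b in combinations(responses, 2):
        if a.rating == b.rating:
            continue
        better, worse = (a, b) if a.rating > b.rating else (b, a)
        pairs.append(PreferencePair(prompt, better.text, worse.text))
    return pairs


responses = [
    RatedResponse("Answer A", 5),
    RatedResponse("Answer B", 2),
    RatedResponse("Answer C", 2),
]
pairs = ratings_to_pairs("Explain RLHF briefly.", responses)
print(len(pairs))  # Answer A beats B and C; the B/C tie is dropped
```

Pairwise comparisons are a common choice because annotators tend to agree more on "which is better" than on absolute scores.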

The RLHF pipeline:

- Supervised fine-tuning – Initial fine-tuning of a pretrained model on human demonstrations

- Reward modeling – Train a reward model to score responses, using human rankings of better and worse outputs

- Reinforcement learning (e.g., PPO) – Optimize the fine-tuned model to maximize the reward model’s score

This loop creates a virtuous cycle of human-AI collaboration.
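The reward modeling step can be sketched in a few lines. The example below trains a linear stand-in for a reward model with a pairwise (Bradley-Terry style) loss, so that preferred responses score higher than rejected ones. The two-number feature vectors and all function names are assumptions for illustration; real reward models are neural networks that score raw text, and the supervised fine-tuning and PPO steps are not shown.

```python
import math


def reward(weights, features):
    """Linear stand-in for a reward model: a weighted sum of response features."""
    return sum(w * x for w, x in zip(weights, features))


def pairwise_loss(weights, chosen, rejected):
    """Bradley-Terry style loss: -log sigmoid(r_chosen - r_rejected)."""
    margin = reward(weights, chosen) - reward(weights, rejected)
    return math.log1p(math.exp(-margin))


def train_reward_model(pairs, dims, lr=0.5, epochs=200):
    """Plain gradient descent on the pairwise loss."""
    weights = [0.0] * dims
    for _ in range(epochs):
        for chosen, rejected in pairs:
            margin = reward(weights, chosen) - reward(weights, rejected)
            # d(loss)/d(margin) = -1 / (1 + exp(margin))
            grad_scale = -1.0 / (1.0 + math.exp(margin))
            for i in range(dims):
                weights[i] -= lr * grad_scale * (chosen[i] - rejected[i])
    return weights


# Hypothetical features per response: [helpfulness, rudeness]
pairs = [
    ([0.9, 0.1], [0.2, 0.8]),
    ([0.7, 0.0], [0.6, 0.9]),
    ([0.8, 0.2], [0.3, 0.3]),
]
weights = train_reward_model(pairs, dims=2)
print(weights)
```

After training, the weight on the first feature is positive and the weight on the second is negative, so the model reproduces the human preferences it was shown. In the reinforcement learning step, the language model is then optimized against a learned reward like this one, typically with a penalty that keeps it from drifting too far from the supervised model.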

ChatGPT: A Flagship RLHF Example

OpenAI’s ChatGPT, which launched in late 2022 on a GPT-3.5 model, has been the most visible example of RLHF in action:

- ChatGPT (2022) – Brought RLHF-trained instruction-following to a mass audience

- ChatGPT Plus (GPT-4, 2023) – Multimodal input, memory, better factual accuracy

- GPT-4o in ChatGPT (2024) – Vision, voice, and real-time interaction with more expressive, natural-sounding speech

Key strengths enhanced via RLHF:

- Contextual awareness

- Style and tone adaptation

- Safer and more ethical outputs

- Instruction-following (code, writing, advice)

Why RLHF Has Gained Momentum

- Explosion in open-source models (Mistral, LLaMA 3, Falcon)

- Advancements in reward modeling and preference collection

- Improved tooling and methods for scalable feedback (e.g., OpenAI Evals for evaluation, Anthropic’s Constitutional AI for AI-generated feedback)

- Growing concerns around alignment, safety, and bias mitigation

Improving language models with human feedback

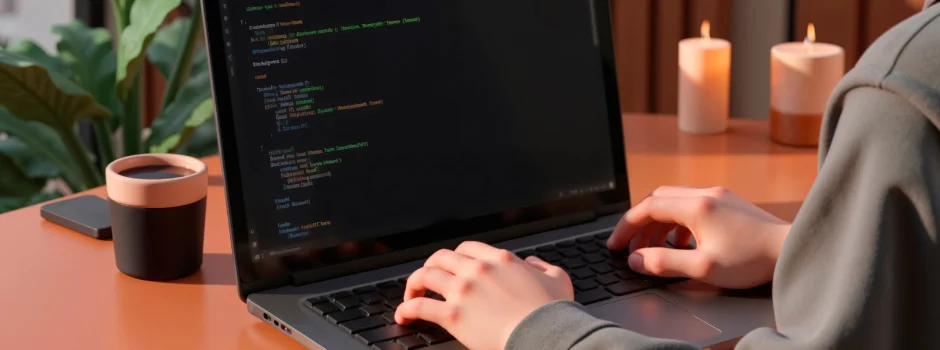

Human feedback can improve language models by correcting errors in the model’s output or by supplying context the model did not take into account. Here’s an example of how human feedback on a ChatGPT response can be collected using Python:

```python
import json

from openai import OpenAI

# Replace with your own key, or omit api_key to read OPENAI_API_KEY from the environment
client = OpenAI(api_key="your-api-key")

prompt = "Explain quantum computing like I'm five."

# Ask the model for a response
response = client.chat.completions.create(
    model="gpt-4",
    messages=[{"role": "user", "content": prompt}],
)
print("Model Output:", response.choices[0].message.content)

# Collect a simple yes/no signal from the user
feedback = input("Was this helpful? (yes/no): ")
if feedback.strip().lower() == "no":
    correction = input("Provide a better explanation: ")
    # Log feedback as one JSON object per line for future fine-tuning
    with open("feedback_log.json", "a", encoding="utf-8") as f:
        f.write(json.dumps({"prompt": prompt, "correction": correction}) + "\n")
```

In this example, the ChatGPT model is asked to explain quantum computing in simple terms. After the response is printed, the user is asked whether it was helpful. If the answer is no, the user is prompted for a better explanation, and the prompt and the correction are appended to a log file that can later serve as fine-tuning data.

By collecting human feedback in this way, developers build up a dataset of corrections that can be used to fine-tune the model, so that its responses improve over time.
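One possible next step is to turn collected corrections into supervised fine-tuning examples. The sketch below assumes the feedback is available as (prompt, correction) pairs and uses a chat-style record with `messages`, `role`, and `content` fields; the helper names and the sample data are hypothetical, and the exact record layout expected by a given fine-tuning service should be checked against its documentation.

```python
import json


def to_finetune_record(prompt, correction):
    """Build one chat-style training example from a human correction."""
    return {
        "messages": [
            {"role": "user", "content": prompt},
            {"role": "assistant", "content": correction},
        ]
    }


def build_dataset(feedback_pairs):
    """Serialize (prompt, correction) pairs as JSON Lines, skipping empty corrections."""
    lines = []
    for prompt, correction in feedback_pairs:
        if correction.strip():
            record = to_finetune_record(prompt, correction.strip())
            lines.append(json.dumps(record))
    return "\n".join(lines)


feedback_pairs = [
    # Hypothetical correction supplied by a user
    ("Explain quantum computing like I'm five.",
     "A quantum computer uses tiny particles that follow special rules to solve some puzzles faster."),
    ("What is RLHF?", "   "),  # empty correction, dropped
]
dataset = build_dataset(feedback_pairs)
print(dataset)
```

Filtering out empty corrections is only the simplest quality check; in practice, corrections are usually reviewed before they are used for training.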

Benefits of RLHF-Enhanced Training

- More human-like reasoning and dialogue

- Safer, ethically sound responses

- Adaptive to cultural and user-specific nuances

- Greater trustworthiness in real-world applications

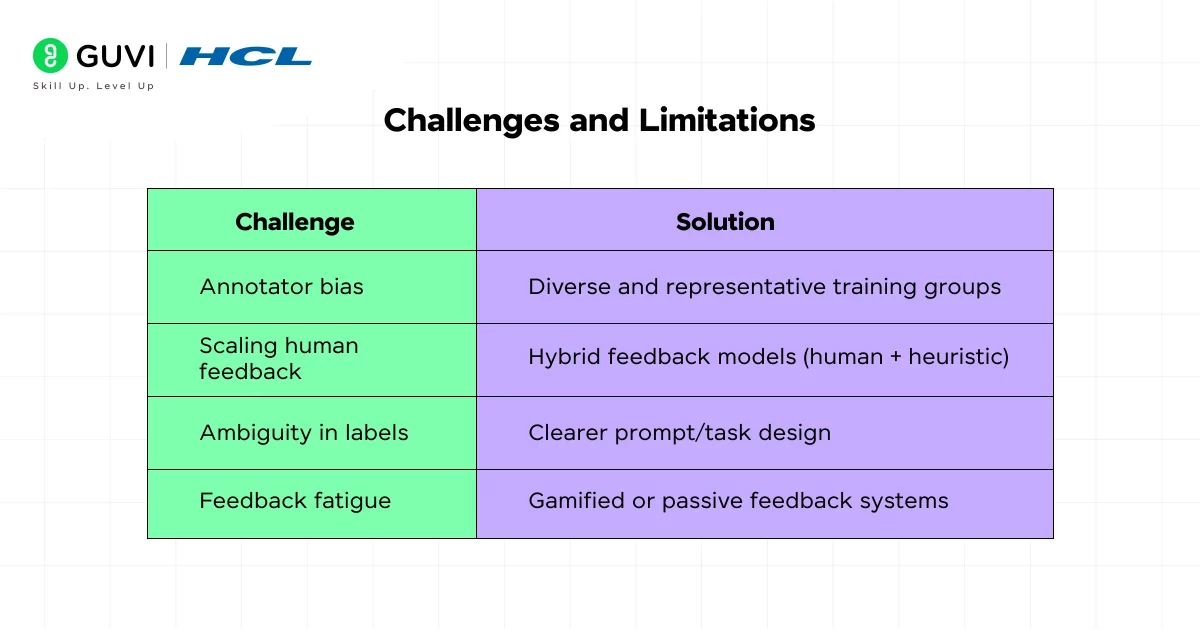

Challenges and Limitations

Despite its success, RLHF comes with trade-offs:

| Challenge | Solution |
| --- | --- |
| Annotator bias | Diverse and representative training groups |
| Scaling human feedback | Hybrid feedback models (human + heuristic) |
| Ambiguity in labels | Clearer prompt/task design |
| Feedback fatigue | Gamified or passive feedback systems |
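Annotator bias and label ambiguity are easier to manage when agreement between annotators is measured. The following is a minimal sketch, with hypothetical labels, item ids, and an assumed 0.75 threshold, of majority-vote aggregation that flags low-agreement items for review or clearer task instructions.

```python
from collections import Counter


def aggregate_labels(labels):
    """Return the majority label and the fraction of annotators who chose it."""
    counts = Counter(labels)
    label, votes = counts.most_common(1)[0]
    return label, votes / len(labels)


def flag_ambiguous(items, threshold=0.75):
    """List item ids whose agreement falls below the (assumed) threshold."""
    flagged = []
    for item_id, labels in items.items():
        _, agreement = aggregate_labels(labels)
        if agreement < threshold:
            flagged.append(item_id)
    return flagged


annotations = {
    "resp-1": ["good", "good", "good", "good"],
    "resp-2": ["good", "bad", "good", "bad"],
    "resp-3": ["bad", "bad", "bad", "good"],
}
print(flag_ambiguous(annotations))  # only the evenly split item is flagged
```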

In summary, ChatGPT and RLHF have set a new benchmark in AI-human interaction. By closing the feedback loop between human users and language models, we’re building AI that doesn’t just generate text but adapts to and aligns with what we want.

If you want to learn more about how machine learning shapes day-to-day life, consider enrolling in HCL GUVI’s IITM Pravartak Certified Artificial Intelligence and Machine Learning course, which covers NLP, cloud technologies, deep learning, and more, taught by industry experts.

Conclusion

In conclusion, the integration of Reinforcement Learning from Human Feedback marks a major shift in how we train language models. From healthcare to customer service, and from tutoring to code generation, models like ChatGPT are learning to respond more in line with human expectations, because people are directly shaping their training signal.

As the field advances, the collaboration between humans and machines will deepen. Human feedback is no longer an optional enhancement; it’s the foundation for responsible AI. The next generation of models won’t just be smarter; they’ll be more aligned, empathetic, and trustworthy, thanks to the human touch in their training journey.
