Innovate or Stagnate: Comprehensive Generative AI Terms For Enthusiasts

Mar 13, 2025 5 Min Read 7237 Views

(Last Updated)

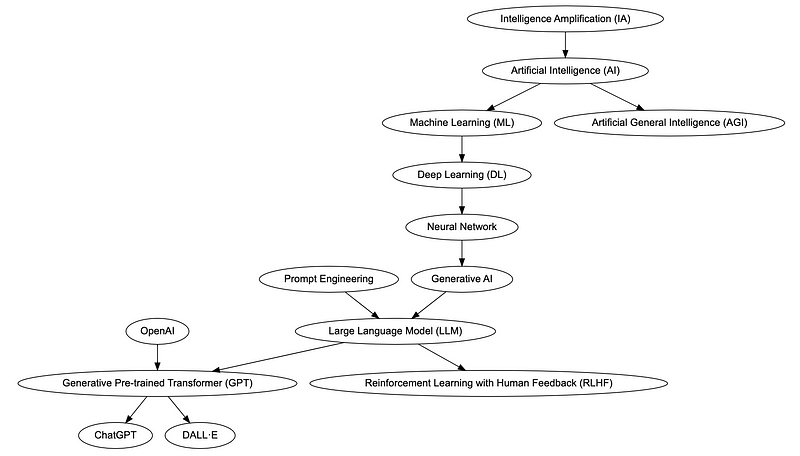

Generative AI is essential in many fields today to help professionals survive in an era of swift technological advancements. As a subfield of Artificial Intelligence, Generative AI is transforming the way we use data and technology- opening new areas into digital craftsmanship and problem-solving. In this growing era of AI, it’s also important to get acclimated with its Key generative AI terms to be technology-driven.

It is not only a mere technological evolution but a change of paradigm in the way machines interact with and contribute to human endeavors through generative AI. From aiding in content creation to solving complexities, Generative AI is changing industries, making its understanding a must for practitioners and enthusiasts. This guide should help demystify the key terms there are in Generative AI and give clarity to what its foundational concepts and applications are.

With the advent of the world of Generative AI with more precision, we need to appreciate what each term means as well as within the wider context of AI. In doing so, such knowledge by itself makes it possible for better professional competence and innovative applications, including opportunities across a whole range of sectors involved across various industries.

Table of contents

- The Comprehensive Glossary of Generative AI Terms

- Artificial Intelligence (AI)

- Alignment

- Attention

- Augmented Intelligence

- Bias

- Chain-of-thought

- ChatGPT

- Completion

- Embeddings

- Fine Tuning

- Foundational Model

- Generative AI

- Generative Pretrained Transformers (GPT)

- Hallucination

- Large Language Model (LLM)

- Latent Space

- Low-Rank Adaptation (LoRA)

- Model Architecture

- Multi-modal Models

- Neural Network

- One-shot / Few-shot

- Parameters

- Plugins / Tools

- Prompt

- Prompt Engineering

- Prompt Injection

- Reinforcement Learning from Human Feedback (RLHF)

- Retrieval Augmented Generation (RAG)

- System Prompt

- Token

- Training

- Transformer

- In Conclusion

- FAQs - Generative AI Terms Glossary

- What is the difference between AI and Generative AI?

- How does a Transformer architecture in AI work?

- Why is understanding Bias in AI important?

The Comprehensive Glossary of Generative AI Terms

Artificial Intelligence (AI)

Artificial Intelligence represents the pinnacle of computer systems designed to emulate human intelligence. It encompasses a broad spectrum of capabilities, including visual perception, speech recognition, and language processing. AI is the cornerstone of modern technology, bridging the gap between human cognitive functions and digital execution.

Find Out 7 Benefits of AI-Powered Learning Environments

Alignment

Alignment in AI refers to the training process aimed at steering models towards ethical principles. This process is crucial in ensuring that AI systems produce outputs that are not only accurate but also ethically sound and free from biases or harmful content.

Attention

Attention is a groundbreaking concept in transformer architecture. It allows AI models to understand and interpret the relationships between words in a text sequence, providing a deeper context and enhancing the model’s language processing capabilities.

Augmented Intelligence

Augmented Intelligence is a paradigm where AI systems enhance human decision-making rather than replace it. It’s a collaborative interaction where AI extends human capabilities rather than automating them. This approach emphasizes the assistive role of AI, working in tandem with human intelligence to amplify our cognitive strengths.

ALSO READ | How to improve your coding with ChatGPT?

Bias

Bias in AI refers to the tendency of AI models to produce results that are systematically prejudiced due to erroneous assumptions in the machine learning process. This can occur due to biased data or flawed model design. Bias is a significant concern in AI ethics, as it can lead to unfair or discriminatory outcomes, especially in sensitive applications like hiring, law enforcement, and lending.

Chain-of-thought

Chain-of-thought is a prompting technique used in AI, particularly in language models, to improve reasoning capabilities. It involves breaking down complex tasks or questions into smaller, more manageable steps, allowing the AI to process and respond in a more structured and logical manner. This approach mimics human problem-solving processes and is instrumental in enhancing the AI’s ability to handle complex queries.

ChatGPT

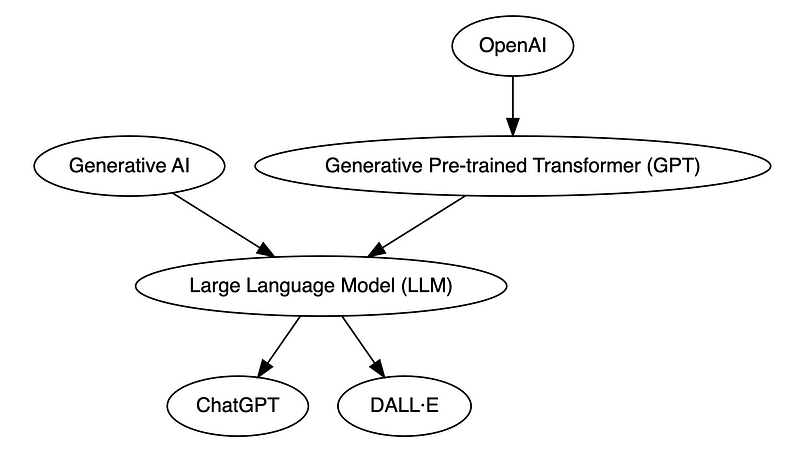

ChatGPT, developed by OpenAI, is a conversational interface that retains the state of conversations. This advancement allows for more coherent and contextually relevant interactions, as the model can reference previous exchanges to provide more accurate and relevant responses.

Also Explore: Everything You Should Know About ChatGPT & Why It Matters?

Make sure you understand machine learning fundamentals like Python, SQL, deep learning, data cleaning, and cloud services before we explore them in the next section. You should consider joining GUVI’s Artificial Intelligence & Machine Learning Course, which covers tools like Pyspark API, Natural Language Processing, and many more and helps you get hands-on experience by building real-time projects.

Also, if you want to explore Artificial Intelligence and Machine Learning through a Self-paced course, try GUVI’s Artificial Intelligence Course.

Completion

Completion refers to the output generated by an LLM in response to a prompt. This term is essential in understanding how AI models respond to inputs and how they can be used to generate coherent and contextually appropriate content.

Embeddings

Embeddings are a method of encoding text into numerical values, capturing the semantics of the language. This technique is vital in understanding how AI interprets and processes language, allowing for more nuanced and accurate language models.

Fine Tuning

Fine Tuning involves additional training applied to foundational models to enhance their capabilities for specific tasks. This process is crucial for tailoring general AI models to specialized applications, increasing their effectiveness and accuracy.

Also, Read | ChatGPT 3.5 Vs ChatGPT 4.0

Foundational Model

Foundational Models are trained on broad datasets, making them versatile for a wide range of applications. These models form the basis for more specialized AI applications and are essential for understanding the general capabilities of AI systems.

Generative AI

Generative AI refers to models capable of creating new content, such as text or images, based on textual inputs. This aspect of AI highlights its creative potential, moving beyond data analysis to content creation.

Also Read: Generative Design: Navigating the Future of Mechanical Engineering

Generative Pretrained Transformers (GPT)

Introduced by OpenAI, GPT is a popular LLM architecture known for its vast number of parameters. This architecture has revolutionized the field of AI, particularly in natural language processing and generation.

Hallucination

Hallucination in AI occurs when models generate believable but factually incorrect or entirely fictitious statements. Understanding this term is crucial for recognizing the limitations and potential errors in AI-generated content.

Large Language Model (LLM)

LLMs are AI models that specialize in processing and generating human language. They represent a significant advancement in AI’s ability to interact and communicate in human-like language.

Latent Space

Latent Space refers to the abstract, multi-dimensional space where AI algorithms, especially in deep learning and generative models, encode the features and relationships of the input data. In this space, AI models can discover and represent underlying patterns and structures in the data that are not immediately apparent. Manipulating the latent space allows AI to generate new data instances with variations or to perform tasks like clustering and dimensionality reduction.

Low-Rank Adaptation (LoRA)

LoRA is a method of fine-tuning AI models that is resource-efficient, consumes less memory. This technique is crucial for making AI model training more accessible and less resource-intensive.

Model Architecture

Model Architecture refers to the discrete components of complex AI models, each serving a specific function. This term is essential for understanding how different parts of an AI model work together to process and analyze data.

Bard or ChatGPT? Which AI Chatbot is better?

Multi-modal Models

Multi-modal Models in AI can handle mixed media inputs, such as text and images. This versatility is crucial in developing more sophisticated and adaptable AI systems.

Neural Network

Neural Networks are a method of developing AI by modeling the human brain. They consist of nodes, known as perceptrons, connected into a network, forming the fundamental structure of AI learning and processing.

Also Explore | How to become proficient in deep learning and neural networks in just 30 days!!

One-shot / Few-shot

These terms refer to prompting techniques where a single or a few examples are provided to help the LLM understand a task. This approach is crucial for training AI models efficiently and effectively.

Parameters

Parameters in AI are weights associated with connections in a neural network. They play a critical role in how values are combined and processed, significantly influencing the model’s size and capabilities.

Plugins / Tools

In AI, Plugins / Tools refer to the capabilities of LLM agents to utilize APIs for new functionalities. This aspect is crucial for extending the applications and effectiveness of AI models.

Read About the 6 Best AI Tools for Coding

Prompt

A Prompt is the textual input supplied to an LLM. It can range from brief to detailed and is fundamental in guiding the AI model’s response and content generation.

Prompt Engineering

Prompt Engineering involves developing effective prompts that blend technical writing and requirements definition. This skill is essential for maximizing the effectiveness and accuracy of AI models.

Prompt Injection

Prompt Injection is the process of subverting a model’s behavior by providing inputs that override the established prompt or system prompt. Understanding this term is key to recognizing potential manipulations in AI interactions.

Reinforcement Learning from Human Feedback (RLHF)

RLHF is a technique that combines reinforcement learning with human feedback to fine-tune AI models. In this approach, human feedback is used to create a reward model, which then guides the AI in learning tasks. This method is particularly effective in aligning the AI’s outputs with human values and preferences, ensuring that the AI behaves in a way that is desirable and ethical.

Know More About the Top 6 Programming Languages For AI Development

Retrieval Augmented Generation (RAG)

RAG involves supplementing a prompt with additional information based on web searches or document queries. This technique is vital for enhancing the context and relevance of AI-generated content.

System Prompt

System Prompt in AI refers to the predefined input that sets the context or guidelines for an AI model’s responses. In applications like chatbots, the system prompt combines with user input to guide the AI’s behavior, ensuring that it remains within the desired parameters and behaves consistently.

Token

In AI, a Token is a unit of text, such as a word, part of a word, or a symbol, used in natural language processing. Tokens are the building blocks that AI models use to understand and generate language. The process of breaking down text into tokens, known as tokenization, is a fundamental step in preparing data for language models.

Training

Training in AI is the process where large datasets are presented to the neural network, with parameters being tweaked to produce better outputs. This process is the cornerstone of AI learning and development.

Transformer

A Transformer is a type of neural network architecture that has become central to modern AI, particularly in natural language processing. It uses mechanisms like self-attention to process sequences of data, such as text, in a way that captures the context and relationships within the data. Transformers are behind many of the recent advancements in AI, including large language models like GPT.

Kickstart your Machine Learning journey by enrolling in GUVI’s Artificial Intelligence & Machine Learning Course where you will master technologies like matplotlib, pandas, SQL, NLP, and deep learning, and build interesting real-life machine learning projects.

Alternatively, if you want to explore Artificial Intelligence and Machine Learning through a Self-paced course, try GUVI’s Artificial Intelligence Course.

In Conclusion

Understanding these generative AI terms is crucial for anyone looking to delve deeper into the field of AI and Generative AI. As the technology continues to evolve, these concepts will play an increasingly important role in shaping the future of AI applications and their impact on various sectors.

Also Read: Best Product-Based Companies for AI Engineers

FAQs – Generative AI Terms Glossary

AI, or Artificial Intelligence, refers to the broader concept of machines designed to emulate human intelligence and perform a wide range of tasks. Generative AI, a subset of AI, specifically focuses on creating new content, such as text or images, based on input data.

While AI can include anything from data analysis to automation, Generative AI is particularly known for its creative and generative capabilities.

Transformer architecture in AI is a neural network design that processes data in sequences (like language) using self-attention mechanisms. This allows the model to weigh the importance of different parts of the input data differently, providing a more nuanced understanding of context and relationships within the data.

It’s especially effective in language processing tasks, enabling models to generate more coherent and contextually relevant outputs.

Understanding Bias in AI is crucial because biased AI models can lead to unfair, unethical, or discriminatory outcomes. Bias in AI typically arises from skewed data or flawed algorithms and can affect decision-making processes in critical areas like recruitment, law enforcement, and credit scoring. Recognizing and mitigating bias ensures that AI systems operate fairly and ethically.

![10 Unique Keras Project Ideas [With Source Code] 4 Keras Project Ideas](https://www.guvi.in/blog/wp-content/uploads/2024/10/Feature-Image.png)

![AI Video Revolution: How the Internet is Forever Changed [2025] 6 Feature Image - AI Video Revolution How the Internet is Forever Changed](https://www.guvi.in/blog/wp-content/uploads/2024/03/Feature-4.webp)

Did you enjoy this article?