Bias and Variance in Machine Learning: An Informative Guide

Last updated: Aug 29, 2025

Ever wondered why your machine learning model performs great during training but completely falls apart on new data? Or why sometimes it doesn’t seem to learn anything meaningful at all?

These aren’t just random glitches; they’re symptoms of two fundamental forces at play: bias and variance. Understanding how bias and variance in machine learning affect your model is key to diagnosing performance issues and building models that actually generalize.

Let’s understand what bias and variance in machine learning mean, how they show up in your models, and what you can do about them. So, without further ado, let us get started!

Table of contents

- What Is Bias?
  - Think of it like this:
  - Technical Symptoms
  - Example in Code
- What Is Variance?
  - Real-world analogy:
  - Technical Symptoms
  - Example in Code
- Bias vs Variance: Simplified
- Underfitting vs Overfitting: Understanding the Difference
  - Underfitting (High Bias)
  - Overfitting (High Variance)
- How Do Bias and Variance Show Up in Real Models?
  - Linear & Logistic Regression
  - Decision Trees
  - k-Nearest Neighbors (k-NN)
  - Neural Networks
- How to Reduce Bias and Variance?
  - Reducing Bias
  - Reducing Variance
  - A Practical Reminder
- Try It Yourself: Mini Challenge
- Conclusion
- FAQs
  - What causes high bias in machine learning?
  - What are the signs of high variance?
  - Can a model have both high bias and high variance?
  - How do I know if my model is underfitting?
  - Is high variance always bad?

What Is Bias?

In simple terms, bias in machine learning is the error introduced by approximating a real-world problem (which might be complex and messy) with a much simpler model.

High bias means the model is too simple to capture the underlying patterns. It makes strong assumptions about the data, which leads to underfitting.

Think of it like this:

Imagine you’re trying to guess someone’s age just based on their height. That’s a bold (and flawed) assumption; you’re ignoring all other factors. That’s bias in action.

Technical Symptoms

- Poor performance on both training and test data

- Low model complexity (e.g., linear regression on nonlinear data)

- Consistent but inaccurate predictions

Example in Code

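```python
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error

# Synthetic one-feature regression data with heavy noise (seeded for reproducibility)
X, y = make_regression(n_samples=100, n_features=1, noise=30, random_state=42)

# Linear regression can only ever fit a straight line
model = LinearRegression()
model.fit(X, y)

# Mean squared error on the training data itself
y_pred = model.predict(X)
print("MSE:", mean_squared_error(y, y_pred))
```

Even if the dataset has complex relationships, this model forces a straight line, leading to high bias. Note that `make_regression` generates a linear signal, so the error printed here is mostly noise; the bias only becomes visible when the true relationship is curved.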
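To see high bias directly, the data needs a shape a straight line cannot follow. Here is a minimal sketch, assuming hypothetical quadratic data (the names `X_curve`, `y_curve`, and `line` are made up for this illustration). The symptom to look for is a low score on both the training and the test split.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import r2_score
from sklearn.model_selection import train_test_split

# Hypothetical nonlinear data: y = x^2 plus a little Gaussian noise
rng = np.random.default_rng(0)
X_curve = rng.uniform(-3, 3, size=(200, 1))
y_curve = X_curve[:, 0] ** 2 + rng.normal(0, 0.5, size=200)

X_tr, X_te, y_tr, y_te = train_test_split(
    X_curve, y_curve, test_size=0.3, random_state=0
)

# A straight line cannot follow a parabola, however the line is tilted
line = LinearRegression().fit(X_tr, y_tr)

train_r2 = r2_score(y_tr, line.predict(X_tr))
test_r2 = r2_score(y_te, line.predict(X_te))
print(f"train R^2: {train_r2:.2f}, test R^2: {test_r2:.2f}")
```

Both scores should come out poor, which matches the first symptom in the list above: the model is failing on data it has already seen, not just on new data.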

What Is Variance?

Variance refers to the model’s sensitivity to small fluctuations in the training set. A high variance model pays too much attention to training data, including its noise. This leads to overfitting.

Real-world analogy:

It’s like a student who memorizes the textbook word-for-word but can’t answer a question that’s slightly different from the practice set.

High variance models are usually very complex, capturing even tiny, irrelevant details.

Technical Symptoms

- Very good performance on training data

- Very poor generalization on unseen/test data

- Instability with slight data changes

Example in Code

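```python
from sklearn.tree import DecisionTreeRegressor

# Same data: reuses X, y and mean_squared_error from the linear regression example
model = DecisionTreeRegressor(max_depth=20)  # very deep tree
model.fit(X, y)

# Training error only: the tree has already seen every one of these points
y_pred = model.predict(X)
print("MSE:", mean_squared_error(y, y_pred))
```

Here, the tree fits the training data nearly perfectly. But test it on new data, and performance may crash.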
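The training error alone cannot reveal overfitting, so it helps to hold some data back. The following is a rough sketch, assuming a 70/30 split and a fixed random seed (both arbitrary choices for illustration), that compares the deep tree's error on seen and unseen points.

```python
from sklearn.datasets import make_regression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Noisy one-feature data, split into a training part and a held-out part
X_all, y_all = make_regression(n_samples=100, n_features=1, noise=30, random_state=42)
X_tr, X_te, y_tr, y_te = train_test_split(X_all, y_all, test_size=0.3, random_state=42)

# A very deep tree is free to memorize individual training points
deep_tree = DecisionTreeRegressor(max_depth=20, random_state=42).fit(X_tr, y_tr)

train_mse = mean_squared_error(y_tr, deep_tree.predict(X_tr))
test_mse = mean_squared_error(y_te, deep_tree.predict(X_te))
print(f"train MSE: {train_mse:.1f}, test MSE: {test_mse:.1f}")
```

A large gap between the two numbers is the signature of high variance: the tree has memorized the noise in the training split, and that noise does not repeat in the held-out split.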

Bias vs Variance: Simplified

| Aspect | High Bias | High Variance |
|---|---|---|
| Model behavior | A high bias model makes strong assumptions about the data and oversimplifies it, so it misses the underlying patterns. | A high variance model is hypersensitive to the training data. It tries to fit every little twist, turn, and outlier in the dataset, even the noise. |
| Training accuracy | Usually low, because the model doesn’t learn enough from the training data. Since it oversimplifies, it never really “gets” the structure, so training error stays high. | Usually very high, sometimes suspiciously perfect. The model memorizes the training data so well that it looks like it’s performing brilliantly. |
| Test accuracy | Also low. The model already performs poorly on training data, and this poor understanding naturally carries over to unseen data. | Drops significantly compared to training accuracy. This is where the real problem appears: the model can’t generalize to new, slightly different inputs. |
| Model complexity | Too simple to capture the relationships in the data. Think linear regression trying to fit nonlinear patterns, or a shallow decision tree on a deeply nested classification problem. | Too complex for the problem at hand. Think deep neural networks trained on tiny datasets, or unpruned decision trees trying to handle noisy data. |
| Root problem | Underfitting. The model hasn’t learned enough. It’s not tapping into the full capacity of the features, the structure, or the relationships in your dataset. | Overfitting. The model has learned too much, including the random noise or outliers that shouldn’t be part of the learning. |
| Fix | You need to add more learning capacity. That could mean using a more flexible algorithm, reducing regularization, or feeding more informative features. | You need to simplify or constrain the model. Try regularization, pruning, dropout, or collecting more data to balance it out. |

Underfitting vs Overfitting: Understanding the Difference

When your model isn’t performing well, it’s often a sign that you’re dealing with either underfitting or overfitting. Both are tied directly to bias and variance, and knowing the difference is key to fixing the issue.

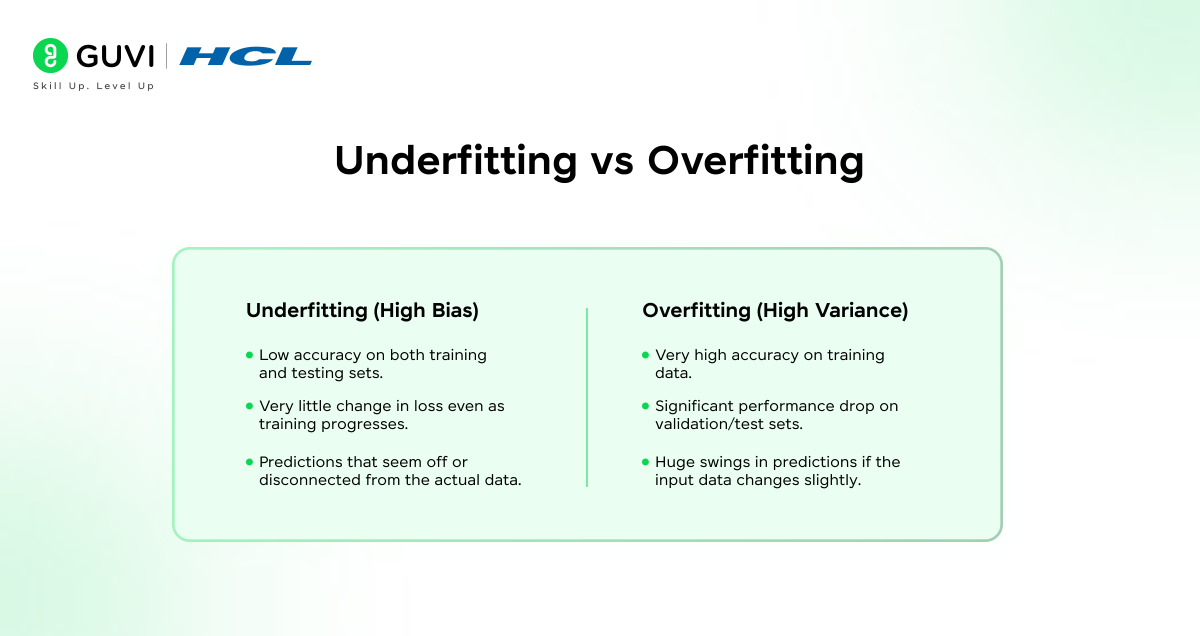

Underfitting (High Bias)

Underfitting happens when your model is too simplistic to capture the real patterns in your data. It fails to learn enough from the training data, leading to poor performance not just on unseen data but also on the training set itself.

You’ll typically see:

- Low accuracy on both training and testing sets.

- Very little change in loss even as training progresses.

- Predictions that seem off or disconnected from the actual data.

This kind of problem shows up when you use models like linear regression on data that has non-linear trends, or when your feature set is too limited to describe the target.

Why does it happen?

- You’re using a model that’s too basic.

- You haven’t included enough useful features.

- You might be over-regularizing your model, forcing it to be too conservative.

Overfitting (High Variance)

On the flip side, overfitting is what happens when your model becomes too good at learning the training data, so much so that it also picks up on the noise and outliers. While it might perform impressively on training data, it completely collapses when given something new.

The tell-tale signs are:

- Very high accuracy on training data.

- Significant performance drop on validation/test sets.

- Huge swings in predictions if the input data changes slightly.

Why does it happen?

- The model is too complex for the amount of data you have.

- Training went on for too long without early stopping.

- There’s no regularization to prevent over-learning.

In short:

Underfitting means your model didn’t learn enough. Overfitting means it learned too much of the wrong stuff.
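The two sets of symptoms can be folded into a quick rule of thumb. This is only a sketch: `diagnose_fit` is a hypothetical helper, and its thresholds (`low_score`, `max_gap`) are illustrative assumptions rather than standard values, so tune them to your own metric and problem.

```python
def diagnose_fit(train_score: float, test_score: float,
                 low_score: float = 0.6, max_gap: float = 0.15) -> str:
    """Label a model from its train/test scores (higher is better, e.g. accuracy or R^2).

    The default thresholds are illustrative assumptions, not standard values.
    """
    # Poor score even on data the model has seen: it has not learned enough
    if train_score < low_score:
        return "underfitting (high bias)"
    # Good on training data but much worse on unseen data: it learned the noise
    if train_score - test_score > max_gap:
        return "overfitting (high variance)"
    return "reasonable fit"


print(diagnose_fit(0.45, 0.42))
print(diagnose_fit(0.99, 0.70))
print(diagnose_fit(0.88, 0.85))
```

Treat the labels as a starting point for investigation, not a verdict; a noisy dataset can cap the achievable score even for a well-fitted model.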

How Do Bias and Variance Show Up in Real Models?

Different algorithms naturally lean toward either high bias or high variance, based on how they’re built. Understanding this helps you choose the right model depending on the complexity of your data and the size of your dataset.

Linear & Logistic Regression

These are your classic high-bias models. They’re easy to train, fast to run, and great when the data has linear relationships. But when your data isn’t linear? They’ll underfit.

- Tend to simplify complex relationships.

- Stable across different data samples (low variance).

- Great for problems where interpretability is more important than complexity.

Decision Trees

Decision Trees can swing the other way. If left unpruned, they’ll keep splitting until they memorize the training data.

- Low bias — they can model complex patterns.

- But very high variance — small changes in data can drastically change the tree.

- Best used with depth restrictions or pruning techniques.
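To get a feel for how unstable an unrestricted tree is, you can train it twice on slightly different resamples of the same data and compare the predictions. The sketch below assumes hypothetical sine-wave data and a made-up helper, `disagreement`; it runs the same comparison for an unpruned tree and one capped at `max_depth=3`.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

# Hypothetical noisy data: a sine wave plus Gaussian noise
rng = np.random.default_rng(0)
X_data = rng.uniform(0, 6, size=(300, 1))
y_data = np.sin(X_data[:, 0]) + rng.normal(0, 0.5, size=300)
X_grid = np.linspace(0, 6, 200).reshape(-1, 1)  # fixed points to compare predictions on


def disagreement(max_depth):
    """Mean absolute gap between two trees trained on different bootstrap resamples."""
    preds = []
    for seed in (1, 2):
        # Resample the rows with replacement to mimic a slightly different dataset
        idx = np.random.default_rng(seed).integers(0, len(X_data), size=len(X_data))
        tree = DecisionTreeRegressor(max_depth=max_depth, random_state=0)
        preds.append(tree.fit(X_data[idx], y_data[idx]).predict(X_grid))
    return float(np.mean(np.abs(preds[0] - preds[1])))


unpruned_gap = disagreement(max_depth=None)
shallow_gap = disagreement(max_depth=3)
print(f"unpruned: {unpruned_gap:.3f}, max_depth=3: {shallow_gap:.3f}")
```

The unpruned tree's predictions should shift noticeably more between resamples, which is what "high variance" means in practice.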

k-Nearest Neighbors (k-NN)

Here, the bias-variance trade-off depends on the value of k.

- Small k (e.g., k=1): Very low bias, very high variance.

- Large k (e.g., k=15): Higher bias, lower variance, more smoothing.

Choosing the right k is everything here.
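One way to watch this trade-off is to sweep k and compare training and test scores. This is a minimal sketch on hypothetical sine-wave data; the extra value k=150 is an assumption added here to show what extreme smoothing looks like.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsRegressor

# Hypothetical noisy data: a sine wave plus Gaussian noise
rng = np.random.default_rng(0)
X_knn = rng.uniform(0, 6, size=(300, 1))
y_knn = np.sin(X_knn[:, 0]) + rng.normal(0, 0.5, size=300)
X_tr, X_te, y_tr, y_te = train_test_split(X_knn, y_knn, test_size=0.3, random_state=0)

scores = {}
for k in (1, 15, 150):
    knn = KNeighborsRegressor(n_neighbors=k).fit(X_tr, y_tr)
    # score() returns R^2 for regressors; store (train, test) for each k
    scores[k] = (knn.score(X_tr, y_tr), knn.score(X_te, y_te))
    print(f"k={k:>3}: train R^2={scores[k][0]:.2f}, test R^2={scores[k][1]:.2f}")
```

With k=1 the model reproduces its training points exactly but does worse on the test split; with a very large k it averages over most of the dataset and flattens the curve. A moderate k usually lands in between.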

Neural Networks

Neural networks are powerful, but they can be both a blessing and a curse.

- When designed well with the right amount of data, they can have low bias and low variance.

- But with small data or poor tuning, they easily fall into overfitting territory.

- Need regularization (like dropout), a large enough dataset, and tuning to get right.

The bias-variance trade-off is a foundational concept in ML, but it originally comes from classical statistics, where the decomposition of an estimator's mean squared error into bias and variance was in use long before modern deep learning.
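For reference, the decomposition is usually written as follows. The notation is an assumption introduced here, not something defined earlier in this article: the target is $y = f(x) + \varepsilon$ with zero-mean noise of variance $\sigma^2$, $\hat{f}$ is the model fitted on a randomly drawn training set, and the expectation is taken over training sets and noise.

$$
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
= \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{irreducible noise}}
$$

The first term is how far the average prediction sits from the truth, the second is how much predictions move when the training set changes, and the third is noise that no model can remove.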

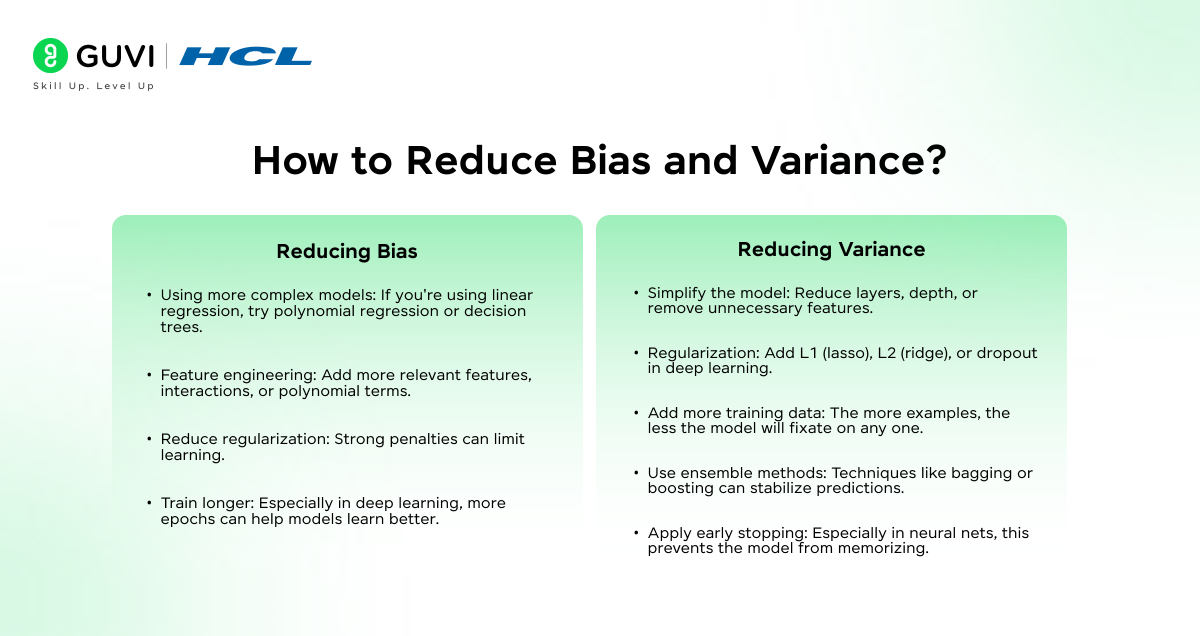

How to Reduce Bias and Variance?

Now that you know what bias and variance look like in practice, let’s talk solutions. Depending on which one your model suffers from, your strategy will vary.

Reducing Bias

If your model has high bias (i.e., it’s underfitting), the goal is to help it learn more complexity from the data.

Try:

- Using more complex models: If you’re using linear regression, try polynomial regression or decision trees.

- Feature engineering: Add more relevant features, interactions, or polynomial terms.

- Reduce regularization: Strong penalties can limit learning.

- Train longer: Especially in deep learning, more epochs can help models learn better.

In simple terms, you’re giving the model more room to grow and capture patterns.
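As an illustration of the first two ideas, here is a sketch that adds polynomial terms in front of the same linear model. The sine-wave data and the choice of `degree=5` are assumptions made for this example only.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures

# Hypothetical curved data: one full period of a sine wave with mild noise
rng = np.random.default_rng(0)
X_wave = rng.uniform(0, 2 * np.pi, size=(200, 1))
y_wave = np.sin(X_wave[:, 0]) + rng.normal(0, 0.2, size=200)
X_tr, X_te, y_tr, y_te = train_test_split(X_wave, y_wave, test_size=0.3, random_state=0)

# Plain straight line versus the same linear model fed polynomial terms
linear = LinearRegression().fit(X_tr, y_tr)
poly = make_pipeline(PolynomialFeatures(degree=5), LinearRegression()).fit(X_tr, y_tr)

linear_r2 = linear.score(X_te, y_te)
poly_r2 = poly.score(X_te, y_te)
print(f"linear test R^2: {linear_r2:.2f}, polynomial test R^2: {poly_r2:.2f}")
```

The estimator itself has not changed; only the features have. That is often the cheapest way to reduce bias before reaching for a different algorithm.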

Reducing Variance

If your model has high variance (i.e., it’s overfitting), you want to increase generalization.

Here’s what helps:

- Simplify the model: Reduce layers, depth, or remove unnecessary features.

- Regularization: Add L1 (lasso), L2 (ridge), or dropout in deep learning.

- Add more training data: The more examples, the less the model will fixate on any one.

- Use ensemble methods: Bagging (for example, random forests) averages many models to stabilize predictions. Boosting mainly targets bias, though it can also help.

- Apply early stopping: Especially in neural nets, this prevents the model from memorizing.

The idea is to help the model step back and see the bigger picture instead of obsessing over tiny details in the training set.
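Here is a small sketch of the ensemble idea, comparing one fully grown tree with a random forest, which bags many such trees. The dataset (`make_friedman1`) and all hyperparameters are arbitrary choices for illustration.

```python
from sklearn.datasets import make_friedman1
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Synthetic nonlinear regression problem with noise
X_f, y_f = make_friedman1(n_samples=500, n_features=10, noise=1.0, random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(X_f, y_f, test_size=0.3, random_state=0)

# One unrestricted tree versus an average over 100 trees fit on bootstrap samples
single_tree = DecisionTreeRegressor(random_state=0).fit(X_tr, y_tr)
forest = RandomForestRegressor(n_estimators=100, random_state=0).fit(X_tr, y_tr)

tree_test = single_tree.score(X_te, y_te)
forest_test = forest.score(X_te, y_te)
# Gap between training and test R^2: a rough proxy for how much each model overfits
tree_gap = single_tree.score(X_tr, y_tr) - tree_test
forest_gap = forest.score(X_tr, y_tr) - forest_test
print(f"tree:   test R^2={tree_test:.2f}, train-test gap={tree_gap:.2f}")
print(f"forest: test R^2={forest_test:.2f}, train-test gap={forest_gap:.2f}")
```

Each tree in the forest still overfits its own bootstrap sample, but their individual mistakes partly cancel out when averaged, so the ensemble tends to generalize better than any single tree.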

A Practical Reminder

Every change to reduce bias may increase variance, and vice versa. That’s the real challenge in machine learning: finding the right point of balance, where your model performs consistently well across both known and unseen data.

So, don’t just look at training accuracy. Always keep an eye on how your model performs in the wild.
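On synthetic data, where the true function is known, you can estimate both quantities and watch them move in opposite directions. The sketch below is an illustration under stated assumptions: `true_fn`, the noise level, and the helper `bias_variance` are all made up for this example, and the estimates come from repeatedly drawing fresh training sets.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor


def true_fn(x):
    # Hypothetical ground truth, known only because the data is synthetic
    return np.sin(x)


rng = np.random.default_rng(0)
x_eval = np.linspace(0, 6, 100)  # fixed points where predictions are compared
noise_sd = 0.5


def bias_variance(max_depth, n_rounds=50, n_samples=100):
    """Estimate squared bias and variance of a tree of the given depth."""
    preds = np.empty((n_rounds, x_eval.size))
    for i in range(n_rounds):
        # Draw a fresh training set each round
        x_train = rng.uniform(0, 6, size=n_samples)
        y_train = true_fn(x_train) + rng.normal(0, noise_sd, size=n_samples)
        tree = DecisionTreeRegressor(max_depth=max_depth, random_state=0)
        preds[i] = tree.fit(x_train.reshape(-1, 1), y_train).predict(x_eval.reshape(-1, 1))
    # Squared bias: how far the average prediction is from the truth
    bias_sq = np.mean((preds.mean(axis=0) - true_fn(x_eval)) ** 2)
    # Variance: how much predictions move from one training set to the next
    variance = np.mean(preds.var(axis=0))
    return float(bias_sq), float(variance)


results = {depth: bias_variance(depth) for depth in (1, 3, 10)}
for depth, (bias_sq, variance) in results.items():
    print(f"max_depth={depth:>2}: bias^2={bias_sq:.3f}, variance={variance:.3f}")
```

As depth grows, squared bias should shrink while variance climbs. On real data you cannot compute these terms directly, which is why the train/test comparison is the practical stand-in.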

Pro Tip: Cross-Validation Helps Balance

When you’re unsure whether your model is suffering from high bias or high variance, try k-fold cross-validation. It gives a more reliable estimate of how well your model generalizes, because the score no longer depends on one lucky or unlucky train-test split.
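A minimal sketch of that check with scikit-learn's `cross_validate`, assuming 5 folds and two decision trees of different depths as the candidate models (both arbitrary choices for illustration):

```python
from sklearn.datasets import make_regression
from sklearn.model_selection import cross_validate
from sklearn.tree import DecisionTreeRegressor

X_cv, y_cv = make_regression(n_samples=200, n_features=1, noise=30, random_state=42)

cv_scores = {}
for name, depth in [("shallow", 2), ("deep", 20)]:
    result = cross_validate(
        DecisionTreeRegressor(max_depth=depth, random_state=0),
        X_cv, y_cv, cv=5, scoring="r2", return_train_score=True,
    )
    # Average R^2 across the 5 folds, on the training and the validation parts
    cv_scores[name] = (result["train_score"].mean(), result["test_score"].mean())
    print(f"{name}: train R^2={cv_scores[name][0]:.2f}, "
          f"validation R^2={cv_scores[name][1]:.2f}")
```

Low scores on both sides point toward bias; a high training score with a much lower validation score points toward variance.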

Try It Yourself: Mini Challenge

Here’s a quick real-world challenge to test your understanding.

You’re given a dataset where a linear regression performs poorly on both train and test data. What’s most likely happening?

A) Overfitting due to high variance

B) Underfitting due to high bias

C) Perfect fit

D) The model is just slow

Answer: B) Underfitting due to high bias


Conclusion

In conclusion, bias and variance in machine learning aren’t just technical terms to memorize; they’re practical tools to help you debug and improve your models. High bias can make your model blind to patterns, while high variance makes it chase noise.

Your job is to strike the right balance, where the model learns enough from the data to make meaningful predictions without getting distracted by irrelevant details.

Once you internalize this balance, you’ll be able to look at model performance and know exactly what’s going wrong, and more importantly, how to fix it.

FAQs

1. What causes high bias in machine learning?

High bias is usually caused by choosing a model that’s too simple to capture the data’s underlying pattern. For example, using linear regression on complex nonlinear relationships.

2. What are the signs of high variance?

Your model does really well on training data but fails on test or validation sets. The predictions change drastically with small changes in the training data.

3. Can a model have both high bias and high variance?

Yes, especially if the data is noisy and the model is poorly chosen. This scenario is rare but possible, particularly in under-optimized pipelines.

4. How do I know if my model is underfitting?

If both training and testing scores are low, your model is likely underfitting. It’s not capturing the important patterns.

5. Is high variance always bad?

Not always. High-variance model families like deep neural networks can still generalize well if you have enough data and use techniques like dropout, early stopping, or regularization to keep that variance in check.
