AI Meets Edge: Building Smart Applications with LLMs on Edge Devices

Sep 26, 2025 (Last Updated)

“What if your smart camera could summarize what it sees right on the device, with no internet required?”

Welcome to the world where Artificial Intelligence (AI) meets the Edge and Large Language Models (LLMs) become ultra-light, local, and lightning-fast.

This is not science fiction. This is happening right now, and it’s unlocking a new generation of smart, secure, and offline-capable applications.

Table of contents

- What Are LLMs on Edge Devices?

- Why Run AI on the Edge?

- Use Cases of LLMs on the Edge

  - Smart Cameras

  - Health Devices

  - Automotive

  - Smart Homes

  - Personal Devices

- How to Build LLMs for Edge

  - Llama.cpp

  - Ollama

  - GGML & GPT4All

  - Edge TPU & NVIDIA Jetson

  - ONNX Runtime + Transformers.js

- Developer Workflow (Example)

- Challenges to Watch For

- The Future: Federated & Decentralized AI

- Pro Tip for Developers & Students

- Final Thoughts

What Are LLMs on Edge Devices?

Edge devices are hardware such as smartphones, Raspberry Pi boards, IoT sensors, cameras, and embedded systems that can process data locally, without depending on cloud servers.

LLMs (Large Language Models) are AI models like GPT, LLaMA, or Claude, trained on vast amounts of human language.

LLMs on edge means you’re running these powerful AI models directly on your phone, drone, car dashboard, or home automation hub.

Why Run AI on the Edge?

| Benefit | Description |
| --- | --- |
| Privacy | No data is sent to the cloud, which suits healthcare, surveillance, and other sensitive data |
| Speed | Local inference avoids network round-trip latency |
| Offline Support | Runs even without internet |
| Cost-Efficient | No cloud hosting or API billing |
| Efficiency | Works with small, optimized models that use less power |

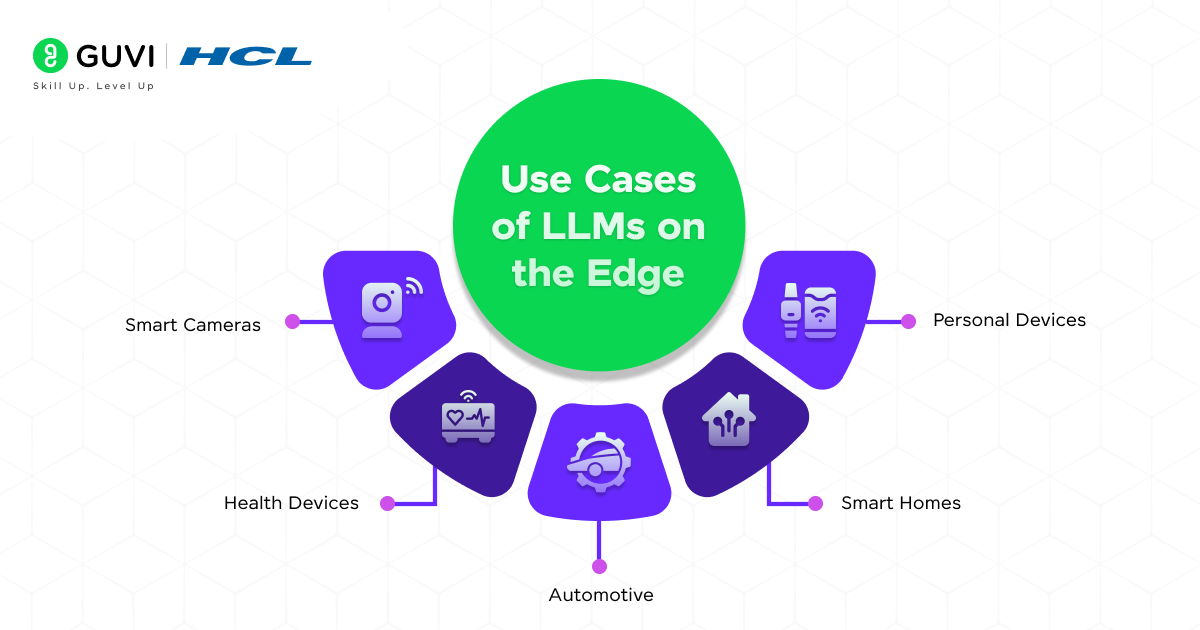

Use Cases of LLMs on the Edge

1. Smart Cameras

- Summarize what’s happening: “3 people entered the room. One is wearing red.”

- Face detection, license plate reading, anomaly alerts.

2. Health Devices

- Voice assistant for elderly people, fully offline.

- Patient monitoring summaries: “Blood pressure rising for the last 30 minutes.”

3. Automotive

- Driver assistant: “You’re approaching a school zone.”

- Onboard chatbot in your car that doesn’t need the internet.

4. Smart Homes

- Offline AI assistant: “Turn off the lights and play calming music.”

- Child-safe chat interface that never connects to external servers.

5. Personal Devices

- On-device summarization of notes, chats, or PDFs.

- Private journaling with AI coaching suggestions.

How to Build LLMs for Edge

Here are the tools and frameworks making this possible:

1. Llama.cpp

- Run LLaMA 2, Code Llama, and Mistral on CPU using quantized models.

- Works even on Raspberry Pi or Android phones.
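As a rough sketch of what running a quantized model on CPU can look like, the snippet below assembles a llama.cpp command line from Python. The binary name `llama-cli`, the model path, and the helper `build_llama_command` are assumptions for illustration; check the flags against the llama.cpp build you have installed.

```python
import shlex


def build_llama_command(model_path, prompt, max_tokens=128, threads=4,
                        binary="llama-cli"):
    """Return the argument list for a one-shot llama.cpp generation.

    Assumed flags: -m (model file), -p (prompt),
    -n (tokens to generate), -t (CPU threads).
    """
    return [
        binary,
        "-m", model_path,
        "-p", prompt,
        "-n", str(max_tokens),
        "-t", str(threads),
    ]


if __name__ == "__main__":
    cmd = build_llama_command(
        "models/mistral-7b.Q4_K_M.gguf",  # hypothetical local path
        "Summarize: the meeting moved to Friday.",
    )
    # Print a shell-safe version; pass `cmd` to subprocess.run to execute it.
    print(shlex.join(cmd))
```

Keeping the command as a list (rather than one string) avoids shell-quoting problems when the prompt contains spaces or quotes.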

2. Ollama

- CLI tool to run LLMs on your machine with one command.

- Easy model management and built-in API layer.
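The built-in API layer can be called from any language that speaks HTTP. Here is a minimal sketch using only the Python standard library, assuming an Ollama server is running on its default local port and that a model named `mistral` has already been pulled. The helper names `build_generate_request` and `generate` are made up for this example.

```python
import json
import urllib.request

# Default local endpoint of a running Ollama server (assumed unchanged).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model, prompt, url=OLLAMA_URL):
    """Build (but do not send) a non-streaming generate request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model, prompt, timeout=120):
    """Send the request to the local server and return the reply text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["response"]
```

With the server up, `generate("mistral", "Summarize: the meeting moved to Friday.")` would return the model's answer as a string. Nothing leaves the machine, because the request goes to `localhost`.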

3. GGML & GPT4All

- GGML is the tensor library behind llama.cpp; its GGUF file format (.gguf) stores quantized models for low-resource hardware.

- GPT4All provides local GUIs and integration scripts.
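To make "quantization" less abstract, here is a simplified sketch of the core idea: store a block of weights as small integers plus one shared scale. This is an illustration only, not the exact scheme used inside .gguf files, and the function names are hypothetical.

```python
def quantize_block(values, bits=4):
    """Quantize a block of floats to signed integers sharing one scale."""
    qmax = 2 ** (bits - 1) - 1            # 7 for 4-bit
    peak = max(abs(v) for v in values)
    scale = peak / qmax if peak else 1.0  # avoid dividing by zero
    quants = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return scale, quants


def dequantize_block(scale, quants):
    """Recover approximate floats from the integers and the shared scale."""
    return [q * scale for q in quants]


if __name__ == "__main__":
    weights = [0.12, -0.7, 0.33, 0.04]
    scale, quants = quantize_block(weights)
    restored = dequantize_block(scale, quants)
    for original, approx in zip(weights, restored):
        print(f"{original:+.3f} -> {approx:+.3f}")
```

Each restored weight is off by at most half a quantization step, which is the accuracy you trade for a much smaller file.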

4. Edge TPU & NVIDIA Jetson

- Hardware accelerators for deep learning on edge.

- Used in smart cameras, drones, and robots.

5. ONNX Runtime + Transformers.js

- For JavaScript-based inference in browsers or hybrid apps.

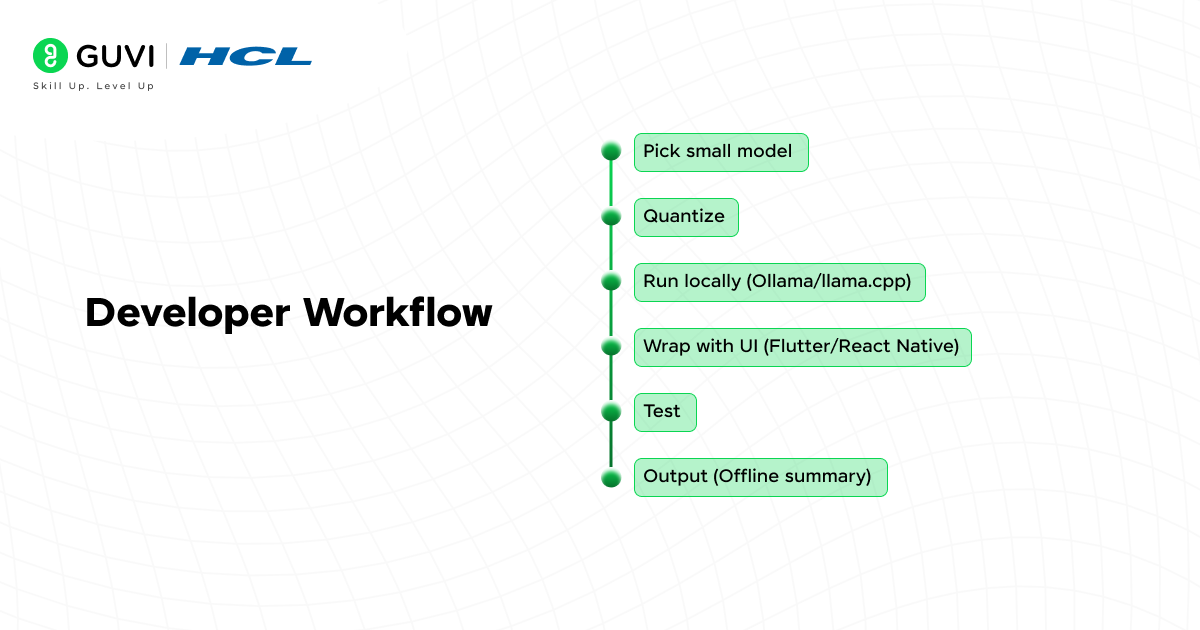

Developer Workflow (Example)

Let’s say you’re building a privacy-first note summarizer app for Android:

- Choose a small model (e.g., LLaMA 2 7B Q4, or Phi-2).

- Quantize the model using llama.cpp or download from Hugging Face in .gguf format.

- Use Ollama or a GGUF runner on Android or a Raspberry Pi.

- Wrap it in a local API or app UI (Flutter, React Native, or Java/Kotlin).

- Test with input like:

“Summarize the following note I wrote yesterday…”

The output is generated locally, offline, and securely.
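The "wrap it in a local API" step could be sketched like this with Python's standard library. The `/summarize` route, the `note` field, and `fake_generate` are all assumptions made for illustration; in a real app `fake_generate` would be replaced by a call into whichever on-device runner you chose.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def fake_generate(prompt):
    """Placeholder for the on-device model call (hypothetical)."""
    return "summary of: " + prompt[:40]


def handle_summarize(raw_body, generate):
    """Turn a JSON request body into a JSON response body."""
    note = json.loads(raw_body)["note"]
    prompt = "Summarize the following note:\n" + note
    return json.dumps({"summary": generate(prompt)}).encode("utf-8")


def make_handler(generate):
    class SummarizeHandler(BaseHTTPRequestHandler):
        def do_POST(self):
            if self.path != "/summarize":
                self.send_error(404)
                return
            length = int(self.headers.get("Content-Length", 0))
            body = handle_summarize(self.rfile.read(length), generate)
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, format, *args):
            pass  # keep the console quiet

    return SummarizeHandler


def make_server(generate=fake_generate, port=8080):
    # Bind to loopback only, so notes are never exposed to the network.
    return HTTPServer(("127.0.0.1", port), make_handler(generate))
```

Calling `make_server().serve_forever()` starts the service, and the app UI can then POST `{"note": "..."}` to `http://127.0.0.1:8080/summarize`. Keeping the request logic in `handle_summarize` makes it easy to test without opening a socket.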

Challenges to Watch For

| Challenge | Description |
| --- | --- |
| Power & RAM Limits | Models must be optimized for the device (quantization, batching) |
| Model Size | Large models do not fit in device memory, so small or quantized models are needed |
| Privacy vs Personalization | You can’t rely on cloud context unless it’s synced |
| Tool Maturity | The ecosystem is still evolving and is not plug-and-play everywhere |
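The RAM and model-size limits come down to simple arithmetic: parameters times bits per weight, divided by eight, gives bytes. The sketch below is a back-of-the-envelope estimate; the 1 GB overhead allowance for the runtime and context cache is an assumption, not a measured figure.

```python
def model_size_gb(params_billions, bits_per_weight):
    """Approximate weight storage in gigabytes (10**9 bytes)."""
    return params_billions * bits_per_weight / 8


def fits_in_ram(params_billions, bits_per_weight, ram_gb, overhead_gb=1.0):
    """Rough check: weights plus a fixed allowance for runtime overhead."""
    needed = model_size_gb(params_billions, bits_per_weight) + overhead_gb
    return needed <= ram_gb


if __name__ == "__main__":
    for bits in (16, 8, 4):
        size = model_size_gb(7, bits)
        verdict = "fits" if fits_in_ram(7, bits, ram_gb=8) else "does not fit"
        print(f"7B model at {bits}-bit: ~{size:.1f} GB, {verdict} in 8 GB")
```

By this estimate a 7B model needs about 14 GB at 16-bit but only about 3.5 GB at 4-bit, which is why quantization is usually the first step on phones and single-board computers.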

The Future: Federated & Decentralized AI

Combine Edge + LLMs + Federated Learning and you unlock:

- A global network of intelligent devices learning from each other without centralizing data.

- Total user data sovereignty.

- Powerful offline assistants, always with you, never spying on you.
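One common way devices can learn from each other without centralizing data is federated averaging: each device trains locally and shares only its model weights, which a coordinator averages in proportion to how much data each device saw. The sketch below shows just the averaging step on plain lists; the function name and the toy numbers are illustrative.

```python
def federated_average(client_weights, client_sizes):
    """Average per-device weight vectors, weighted by local example counts."""
    total = sum(client_sizes)
    dims = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dims)
    ]


if __name__ == "__main__":
    # Two devices, two weights each; the second device saw 3x more data.
    weights = [[1.0, 2.0], [3.0, 4.0]]
    sizes = [1, 3]
    print(federated_average(weights, sizes))
```

Only the weight vectors travel over the network here; the raw notes, audio, or images that produced them stay on each device.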

Pro Tip for Developers & Students

If you’re:

- A student: Start with llama.cpp and Ollama on a laptop.

- A mobile dev: Try Mistral 7B on Android or iOS with GPU acceleration.

- A Java dev: Build a local API layer in Spring Boot to wrap LLMs for desktop apps.

- A startup founder: Think about voice AI for India’s rural areas: offline, edge-based assistants.


Final Thoughts

“The future isn’t in the cloud, it’s in your pocket.”

As AI becomes more personalized and privacy becomes non-negotiable, bringing LLMs to the edge offers the best of both worlds:

- Intelligence

- Independence

- Innovation

Start small, optimize locally, and build smart apps that think globally but act locally.
