Random Forest Classifier: Understanding it Thoroughly in Simple Terms

Oct 23, 2025 (Last Updated)

If we observe our own lives, we will notice that most of our actions are based on decisions, whether irrational or pragmatic. Before performing a task, we often predict its potential outcomes based on the information available to us. But some problems are much broader, more complex, and more critical, and they involve many interrelated factors.

This is where the Random Forest Classifier (RFC) comes into play. Random Forest is an ensemble machine learning algorithm that improves prediction accuracy by combining many decision trees, and it can be used for both classification and regression tasks.

In this blog post, we will focus entirely on developing an understanding of the classification part of this algorithm, specifically the Random Forest Classifier. So, without wasting any time, let’s delve deep into this topic.

Table of contents

- What Does Random Forest Classifier Mean?

- Some Prominent Features of RFC

- Working Mechanism Of the Random Forest Classifier

- Strengths of RFC

- Limitations of RFC

- Conclusion

- FAQs

- In what sectors is RFC commonly used?

- How does the Random Forest Classifier (RFC) differ from Random Forest Regression (RFR)?

- What is the difference between Random Forest and Decision Tree?

What Does Random Forest Classifier Mean?

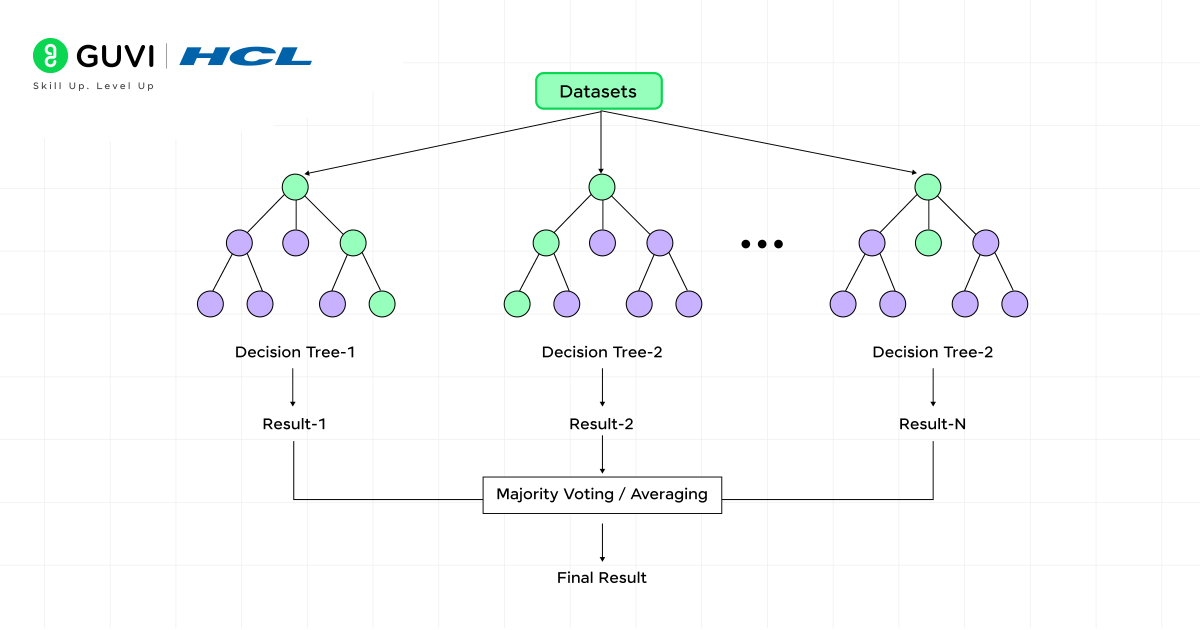

The Random Forest Classifier (RFC) is the classification variant of the Random Forest algorithm, a machine learning (ML) model that combines the outputs of multiple decision trees to make a final prediction.

RFC is used for classification tasks. During prediction, each decision tree in the forest votes for a class, and the class with the most votes is treated as the final result.

From predicting customer behavior and price fluctuations to detecting fraud and security vulnerabilities in banking systems, filtering spam emails and fake accounts, and recommending jobs, the Random Forest Classifier (RFC) is used extensively across business verticals, each with its own purpose.
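To make the voting idea concrete, here is a minimal sketch using scikit-learn's RandomForestClassifier, assuming scikit-learn is installed. The iris dataset and the parameter values are arbitrary choices for illustration. One caveat: scikit-learn combines its trees by averaging their predicted class probabilities rather than counting hard votes, which usually, but not always, gives the same answer as a strict majority vote.

```python
# Minimal sketch: training and using a Random Forest Classifier with scikit-learn.
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Illustrative data: 150 iris flowers, 4 features, 3 classes.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y
)

# n_estimators is the number of decision trees in the forest.
clf = RandomForestClassifier(n_estimators=100, random_state=42)
clf.fit(X_train, y_train)

predictions = clf.predict(X_test)     # one predicted class per test row
accuracy = clf.score(X_test, y_test)  # fraction of correct predictions
print(f"Trees in the forest: {len(clf.estimators_)}")
print(f"Test accuracy: {accuracy:.2f}")
```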

Some Prominent Features of RFC

- The algorithm produces a combined answer by aggregating the predictions of all its decision trees. While each tree is being built, it considers only a random subset of the features at each decision split.

- RFC introduces randomness in two ways: bagging and random feature selection. Bagging (bootstrap aggregating) trains each tree on a random sample of the rows, drawn with replacement. Random feature selection means that, at each split, a tree considers only a randomly chosen subset of the features (columns) and picks the best split among them.

- Because each decision tree is trained on a different random sample, the trees learn slightly different rules. At each split, a tree sorts the records into groups and subgroups until it reaches a class label, for example Approved or Rejected, Spam or Not Spam.

- Since the final answer comes from many decision trees rather than a single one, the influence of noisy or distorted data is reduced: the predictions of the many accurate trees outweigh the mistakes of the few less precise ones.

- RFC scales well to large datasets with many records (rows) and features (columns). Because the trees are independent of one another, the bagged trees can be trained in parallel, which keeps training efficient.

Working Mechanism Of the Random Forest Classifier

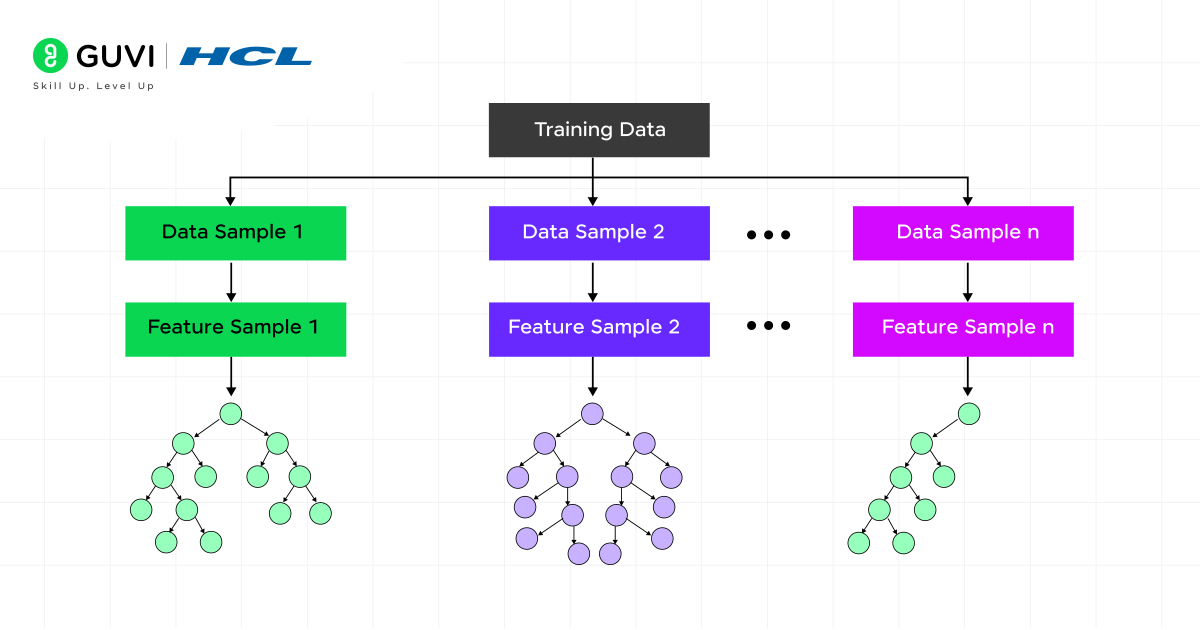

Step 1: Bagging

- The Random Forest algorithm creates different subsets of the original training data, and each decision tree is trained on its own subset.

- These subsets are drawn by random sampling with replacement (bootstrap sampling): rows (records) are picked at random and returned to the pool after each pick, so some rows appear more than once in a subset while others are left out entirely.

- Each tree here has its own dataset to learn from.
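The bootstrap sampling in Step 1 can be sketched with NumPy. This is an illustrative example, not how any particular library implements it internally: ten row indices stand in for a dataset's records, and the seed is arbitrary. Rows that are never drawn are commonly called out-of-bag rows for that tree.

```python
import numpy as np

rng = np.random.default_rng(seed=0)
n_rows = 10
row_ids = np.arange(n_rows)  # stand-ins for the rows (records) of a dataset

# Bootstrap sample: draw n_rows indices *with replacement*.
bootstrap_ids = rng.choice(row_ids, size=n_rows, replace=True)

# Rows never drawn are "out-of-bag" for the tree trained on this sample.
out_of_bag_ids = np.setdiff1d(row_ids, bootstrap_ids)

# Rows drawn more than once.
unique_ids, counts = np.unique(bootstrap_ids, return_counts=True)
repeated_ids = unique_ids[counts > 1]

print("Bootstrap sample:", bootstrap_ids)
print("Repeated rows:   ", repeated_ids)
print("Left-out rows:   ", out_of_bag_ids)
```

With enough rows, a bootstrap sample contains on average about 63% of the distinct original rows; the remaining draws are duplicates.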

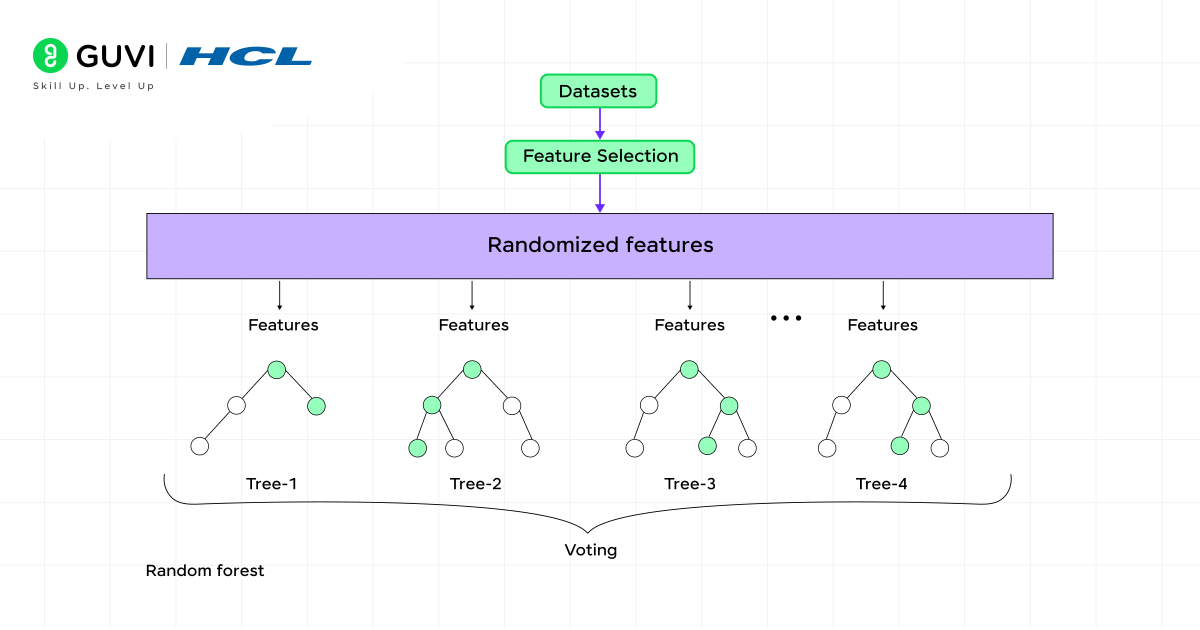

Step 2: Random Column (Feature) Selection

- Each tree starts from a root node, and nodes are split into child nodes until a stopping condition is met.

- Whenever a split takes place, the algorithm does not examine every feature. It randomly selects a subset of the features (columns) and chooses the best split only among those candidates.

- This keeps each decision tree different from the others, so the trees tend to make different mistakes that cancel out when their votes are combined.
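The per-split feature sampling in Step 2 can be illustrated as follows. The feature names are hypothetical, and drawing about the square root of the number of features per split is a common default for classification (it is the heuristic behind scikit-learn's max_features="sqrt"); other subset sizes are possible. The search for the best split among the candidates is not shown here.

```python
import numpy as np

rng = np.random.default_rng(seed=1)

# Hypothetical feature (column) names for a customer dataset.
feature_names = np.array([
    "age", "income", "balance", "tenure", "num_products",
    "credit_score", "region", "channel", "device",
])
n_features = len(feature_names)

# Common heuristic for classification: about sqrt(n_features) candidates per split.
k = max(1, int(np.sqrt(n_features)))  # 3 when there are 9 features

for split in range(3):
    # A fresh subset is drawn, without replacement, at every split.
    candidates = rng.choice(n_features, size=k, replace=False)
    print(f"split {split}: candidate features {feature_names[candidates].tolist()}")
```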

Step 3: Construction of Tree

- Using its subset of rows (records) and the randomly selected columns (features), each decision tree is built recursively.

- At each decision node, the best feature among the candidates is picked for splitting, typically the one that best separates the classes according to a criterion such as Gini impurity or entropy.

- How far the splitting continues, and therefore how deep each tree grows, depends on hyperparameters such as the maximum depth of the tree, the minimum number of samples required to split a node, the splitting criterion being used, and how the data subset was created.
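The hyperparameters mentioned in Step 3 map onto arguments of scikit-learn's DecisionTreeClassifier. The sketch below, on an arbitrary synthetic dataset, shows how max_depth limits tree growth; the other values are illustrative, not recommendations.

```python
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=500, n_features=8, random_state=0)

depths = {}
for max_depth in (2, 5, None):
    tree = DecisionTreeClassifier(
        criterion="gini",      # splitting criterion; "entropy" is another option
        max_depth=max_depth,   # None lets the tree grow until its leaves are pure
        min_samples_split=2,   # minimum samples a node needs before it can be split
        max_features="sqrt",   # random subset of features per split, as in a forest
        random_state=0,
    )
    tree.fit(X, y)
    depths[max_depth] = tree.get_depth()
    print(f"max_depth={max_depth}: depth={tree.get_depth()}, leaves={tree.get_n_leaves()}")
```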

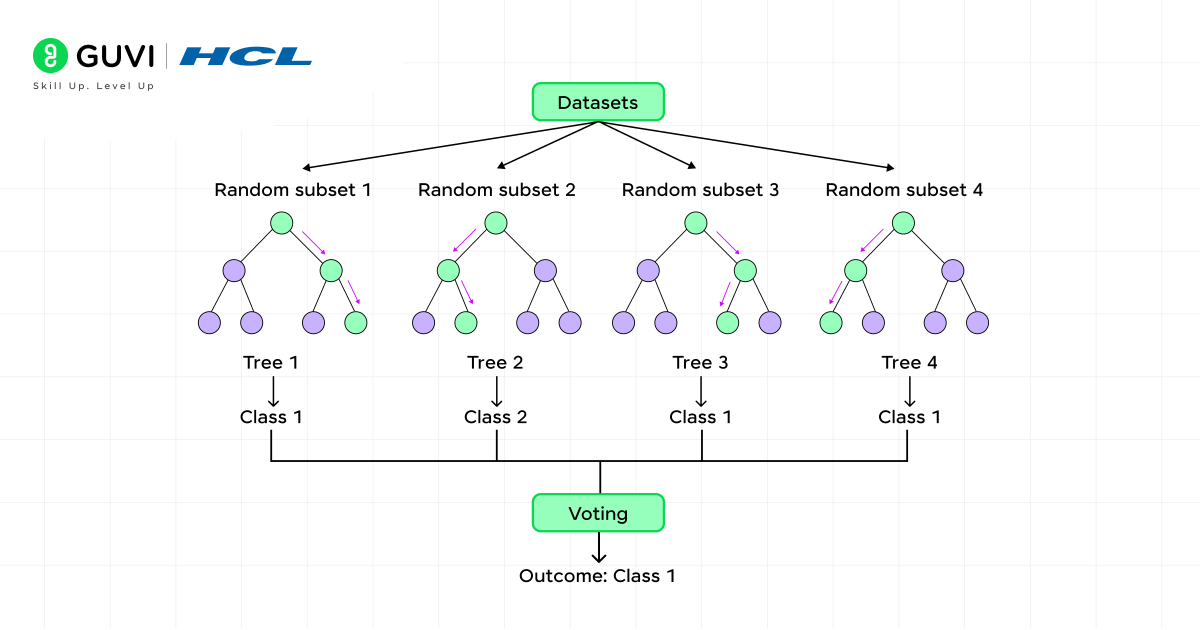

Step 4: Voting

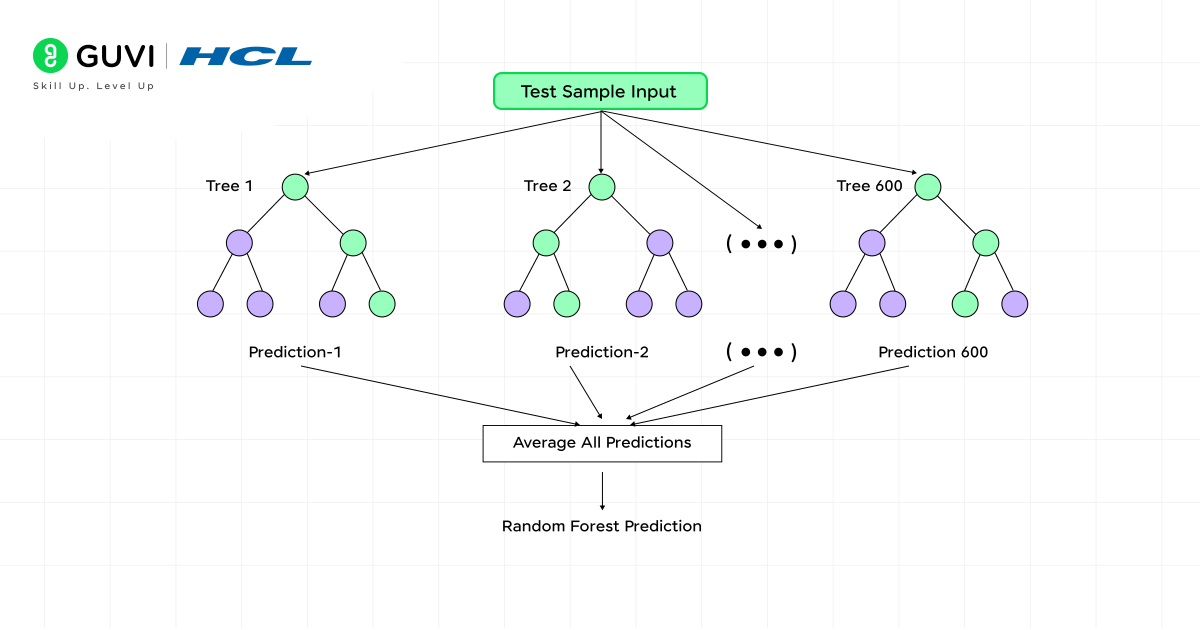

- Given an input, each tree predicts the result independently.

- For classification, each tree predicts a class, for instance positive or negative, win or lose.

- For regression tasks, the trees predict numbers instead of classes. For more information, see our article on Random Forest Regression.
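To see that each tree in a trained forest predicts on its own, you can query the fitted trees individually through scikit-learn's estimators_ attribute. The synthetic data and parameter values are assumptions for illustration. One caveat: the individual trees return class indices into forest.classes_ (as floats), which here happen to match the labels 0 and 1.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

X, y = make_classification(n_samples=300, n_features=6, random_state=0)
forest = RandomForestClassifier(n_estimators=25, random_state=0).fit(X, y)

new_rows = X[:3]
# Each fitted tree in forest.estimators_ predicts on its own, without seeing the others.
per_tree = np.array([tree.predict(new_rows) for tree in forest.estimators_])
print(per_tree.shape)  # (25, 3): one row per tree, one column per input row
print(per_tree[:5])    # predictions of the first five trees
```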

Step 5: Final Outcome/Decision

- After every decision tree has made its prediction, the class with the majority of votes is chosen as the final answer. In other words, the model relies on the collective decision of all trees.
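Putting Steps 1 to 5 together, here is a minimal from-scratch sketch of a random forest classifier with hard majority voting. It reuses scikit-learn's DecisionTreeClassifier for the individual trees; the helper names fit_forest and predict_majority are hypothetical, the labels are assumed to be non-negative integers (required by np.bincount), and ties are broken in favor of the smaller class label.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier


def fit_forest(X, y, n_trees=25, seed=0):
    """Hypothetical helper: train n_trees trees, each on its own bootstrap sample."""
    rng = np.random.default_rng(seed)
    trees = []
    for _ in range(n_trees):
        # Step 1: bagging, i.e. sample rows with replacement.
        ids = rng.integers(0, len(X), size=len(X))
        # Step 2: max_features="sqrt" makes each split consider a random feature subset.
        tree = DecisionTreeClassifier(
            max_features="sqrt", random_state=int(rng.integers(1_000_000))
        )
        # Step 3: grow the tree on its own sample.
        trees.append(tree.fit(X[ids], y[ids]))
    return trees


def predict_majority(trees, X):
    """Hypothetical helper: Steps 4-5, every tree votes and the most common class wins."""
    votes = np.array([tree.predict(X) for tree in trees]).astype(int)  # (n_trees, n_rows)
    # np.bincount counts votes per class; argmax picks the winner (ties -> smaller label).
    return np.array([np.bincount(column).argmax() for column in votes.T])


X, y = make_classification(n_samples=400, n_features=10, random_state=0)
trees = fit_forest(X[:300], y[:300])
y_pred = predict_majority(trees, X[300:])
print("hold-out accuracy:", (y_pred == y[300:]).mean())
```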


Strengths of RFC

- Reduces Variance

The Random Forest Classifier (RFC) combines multiple decision trees to reach a conclusion. Errors made by individual trees, for example because of noisy or unusual records in their training samples, are counterbalanced by the majority of the other trees.

- Mitigates Overfitting

A random forest, as a collection of decision trees, offsets the weaknesses of a single tree. An individual decision tree can grow very deep and end up overfitting: it memorizes the training data, including its noise and corrupted details, and then performs poorly on new, unseen data.

When many trees act as a single unit, their decisions are averaged, and because each tree sees different rows and features, no single tree's reliance on specific features dominates the final result.
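The effect described above can be checked empirically by comparing a single fully grown tree with a forest on held-out data. This sketch uses an arbitrary synthetic dataset with 10% label noise (flip_y=0.1); exact scores depend on the data, but a fully grown tree typically scores perfectly on its training data while the forest tends to generalize better to the test set.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# flip_y adds label noise, so memorizing the training set is harmful.
X, y = make_classification(n_samples=1000, n_features=20, n_informative=5,
                           flip_y=0.1, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

models = {
    "single deep tree": DecisionTreeClassifier(random_state=0),  # no depth limit
    "random forest": RandomForestClassifier(n_estimators=200, random_state=0),
}
scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    scores[name] = (model.score(X_train, y_train), model.score(X_test, y_test))
    print(f"{name:>16}: train={scores[name][0]:.2f}  test={scores[name][1]:.2f}")
```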

- Comprehensible

Each individual tree makes its own prediction through a sequence of simple if/else rules that can be inspected, which makes these individual predictions relatively easy to understand.
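For example, scikit-learn's export_text can print the if/else rules of any single tree in a fitted forest. The iris dataset and max_depth=3 (to keep the output short) are assumptions for illustration; leaf labels appear as class indices.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.tree import export_text

iris = load_iris()
forest = RandomForestClassifier(n_estimators=50, max_depth=3, random_state=0)
forest.fit(iris.data, iris.target)

# Print the if/else rules learned by the first tree in the forest.
first_tree = forest.estimators_[0]
rules = export_text(first_tree, feature_names=list(iris.feature_names))
print(rules)
```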

- Cost-effective

Thanks to characteristics such as parallel training, modest hardware requirements (it runs well on ordinary CPUs), and relatively fast training, the RFC model is usually much cheaper to train and run than models such as deep neural networks (DNNs), large language models (LLMs), and convolutional neural networks (CNNs).


Limitations of RFC

- High Memory Consumption

Sometimes n_estimators (the number of trees in a random forest) is set very high in pursuit of greater prediction accuracy. This consumes a lot of memory, which can slow down predictions, extend training times, and complicate deployment. The reason is that every tree stores its own structure (nodes, split thresholds, and leaf values), so a forest with many deep trees takes up a lot of memory.
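One way to see this growth is to count the nodes stored across all trees and measure the size of the pickled model as n_estimators increases. This is a rough sketch on synthetic data; the pickled size is only an approximation of in-memory usage.

```python
import pickle

from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

X, y = make_classification(n_samples=2000, n_features=20, random_state=0)

stats = {}
for n_estimators in (10, 50, 200):
    forest = RandomForestClassifier(n_estimators=n_estimators, n_jobs=-1, random_state=0)
    forest.fit(X, y)
    # Every tree keeps its own node arrays (split features, thresholds, leaf values).
    total_nodes = sum(tree.tree_.node_count for tree in forest.estimators_)
    size_mb = len(pickle.dumps(forest)) / 1e6  # serialized size, a rough proxy for memory
    stats[n_estimators] = (total_nodes, size_mb)
    print(f"n_estimators={n_estimators:>3}: total nodes={total_nodes:>7}, pickled ~{size_mb:.1f} MB")
```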

- Tuning Challenges

Hyperparameters such as n_estimators (number of trees in the forest), max_depth (maximum depth of each tree), max_features (maximum number of features considered per split), and bootstrap (whether to use bootstrap sampling or not) can be overwhelming to configure, and inappropriate settings hurt performance. Tuning them well takes experience.
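A common way to handle this is a cross-validated grid search. The sketch below uses scikit-learn's GridSearchCV over the four hyperparameters named above; the grid values and the synthetic dataset are arbitrary and kept small so it runs quickly. The cost grows multiplicatively with every value added to the grid, which is why randomized search (RandomizedSearchCV) is often used for larger spaces.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

X, y = make_classification(n_samples=400, n_features=12, random_state=0)

# Small illustrative grid: 2 x 2 x 2 x 2 = 16 combinations.
param_grid = {
    "n_estimators": [50, 100],
    "max_depth": [None, 8],
    "max_features": ["sqrt", 0.5],  # 0.5 means half of the features per split
    "bootstrap": [True, False],
}
search = GridSearchCV(
    RandomForestClassifier(random_state=0),
    param_grid,
    cv=3,                # each combination is scored with 3-fold cross-validation
    scoring="accuracy",
    n_jobs=-1,
)
search.fit(X, y)
print("best parameters:", search.best_params_)
print(f"best cross-validated accuracy: {search.best_score_:.3f}")
```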

- Black Box Issue

People without in-depth technical knowledge will find it very hard to decipher why an RFC model made a particular prediction, because the answer emerges from many trees voting together. This is a significant concern in fields such as healthcare, security, fintech, and law, where decisions must be clear and explainable.
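Tools such as permutation importance can offer a partial, global view of which features a forest relies on, although they do not fully explain individual predictions. This sketch uses scikit-learn's permutation_importance on the built-in breast cancer dataset, an arbitrary choice for illustration.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

data = load_breast_cancer()
X_train, X_test, y_train, y_test = train_test_split(
    data.data, data.target, test_size=0.25, random_state=0, stratify=data.target
)
forest = RandomForestClassifier(n_estimators=200, random_state=0).fit(X_train, y_train)

# Shuffle one feature at a time on held-out data and measure the drop in accuracy.
result = permutation_importance(forest, X_test, y_test, n_repeats=10, random_state=0)
ranking = result.importances_mean.argsort()[::-1]  # most important first
for idx in ranking[:5]:
    print(f"{data.feature_names[idx]:<25} {result.importances_mean[idx]:.4f}")
```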

The more you learn about the capabilities of the Random Forest Classifier (RFC), the more impressed you will be. Here is a striking example: the TROPOMI XCH₄ (methane) project, operated by the Royal Netherlands Meteorological Institute (KNMI) and the SRON Netherlands Institute for Space Research for the TROPOMI instrument launched in 2017, uses an RFC implemented with the scikit-learn Python library (version 1.2.2) to filter clouds out of satellite observations. This RFC model was trained on patterns derived from five years of accumulated data.

This groundbreaking mission has set new standards for accurately measuring atmospheric gases such as methane, as well as air quality indicators, from space, with cloud-contaminated observations filtered out.


Conclusion

After exploring the Random Forest Classifier (RFC), we can summarize it in one line: it is a machine learning model that combines many decision trees, each trained on a random subset of the data, to make accurate and robust predictions. RFC relies on techniques such as bagging and random feature selection to achieve this performance.

From a technical perspective, RFC is one of the most effective models for performing classification tasks efficiently.

FAQs

In what sectors is RFC commonly used?

RFC has been adopted and is being used by various sectors like healthcare, banking & finance, stock market, e-commerce, and many more.

How does the Random Forest Classifier (RFC) differ from Random Forest Regression (RFR)?

The most fundamental differences between RFC and RFR are:

RFC predicts categories or classes as outcomes, whereas RFR predicts numerical values as the final answer.

RFC uses the majority vote of all trees to reach its conclusion, whereas RFR uses the average of all tree predictions.
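The averaging behavior of RFR can be verified directly with scikit-learn's RandomForestRegressor: its prediction matches the mean of the individual trees' predictions. The synthetic data and settings are illustrative assumptions.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor

X, y = make_regression(n_samples=300, n_features=5, noise=10.0, random_state=0)
reg = RandomForestRegressor(n_estimators=50, random_state=0).fit(X, y)

rows = X[:3]
# Each tree predicts a number; the forest's answer is their average.
per_tree = np.array([tree.predict(rows) for tree in reg.estimators_])  # shape (50, 3)
manual_average = per_tree.mean(axis=0)

print("forest.predict :", reg.predict(rows))
print("mean of trees  :", manual_average)
```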

What is the difference between Random Forest and Decision Tree?

The significant distinctions between the two are as follows:

A decision tree is a single model that builds a tree-like structure of nodes to perform classification and regression tasks. A random forest, on the other hand, is a collection of these individual decision trees whose combined predictions are usually more accurate.

A single deep decision tree has high variance (its predictions change a lot with small changes in the training data, and it tends to overfit), whereas a random forest significantly reduces this variance by combining many trees.
