Cross Validation in Machine Learning: The Ultimate Guide for Beginners

Last updated: Sep 23, 2025

The central goal in machine learning (ML) is not just to build a model that fits the data it was trained on, but to build one that predicts accurately on new, unseen data. This ability is known as generalization. How can data scientists and ML practitioners assess it before releasing a model into the wider world?

The short answer, in most cases, is Cross Validation.

This blog will take you through cross validation in machine learning. We will break down the main ideas, look at different approaches to it, and ultimately show you why it is so important for creating reliable and useful ML models.

Table of contents

- Why a Simple Train-Test Split Isn't Enough

- What is Cross-Validation in Machine Learning?

- The General Cross-Validation Steps

- Cross-Validation Techniques

- Holdout Method

- Leave-One-Out Cross-Validation (LOOCV)

- K-Fold Cross-Validation

- Stratified K-Fold Cross-Validation

- Cross Validation vs Train-Test Split

- Python Implementation of K-Fold Cross Validation

- Step 1: Import the Necessary Libraries

- Step 2: Load the Dataset

- Step 3: Create the Classifier

- Step 4: Define the Number of Folds

- Step 5: Perform K-Fold Cross Validation

- Step 6: Evaluate the Results

- Advantages of Cross-Validation

- Disadvantages of Cross-Validation

- Final Thoughts..

- FAQs

- Why is cross validation important in ML?

- Is cross validation used in deep learning?

- Can cross validation be used for regression models?

- When should I use stratified cross validation?

Why a Simple Train-Test Split Isn’t Enough

Before we dive deep into cross-validation in machine learning, let’s understand the method it improves upon: the standard train-test split.

The most basic way to assess a machine learning model is to take your dataset and split it into two parts:

- Training set: This is the larger chunk of the dataset (say, 80%), or whatever percentage you decide to use to teach the model. The model learns patterns, relationships, and rules from the training set.

- Testing set: This is the remaining portion (say, 20%) kept hidden from the model during training. Once the model has been trained, we then use this dataset to assess its performance, or how it might perform on new, real-world data.

This sounds reasonable, and for a quick one-off check, it is. However, the train-test split has a significant weakness, making the cross-validation vs train-test split debate a crucial one for any data scientist.

The core issue is variance. The model’s performance score (like accuracy or F1-score) on the test set is highly dependent on which specific data points happened to end up in the training and testing sets. If you get a “lucky” split, your model might look like a genius. If you get an “unlucky” split, where the test set contains particularly tricky or unusual data points, your model might look like a failure. You are essentially basing your entire evaluation on a single “exam.” This single point of evaluation is not reliable enough to make critical decisions about which model to deploy.
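To make the "lucky split" problem concrete, here is a minimal sketch that repeats an 80/20 split with different random seeds and records the test accuracy each time. The dataset (Iris), the linear SVM, and the seed range are illustrative assumptions rather than part of the original discussion.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)

# Repeat the same 80/20 split with different seeds (assumed values 0..4).
split_scores = []
for seed in range(5):
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=seed
    )
    model = SVC(kernel="linear").fit(X_train, y_train)
    split_scores.append(model.score(X_test, y_test))

for seed, score in enumerate(split_scores):
    print(f"seed={seed}: accuracy={score:.3f}")

# The gap between the best and worst split shows how much one "exam" can mislead.
print(f"spread: {max(split_scores) - min(split_scores):.3f}")
```

Any non-zero spread here comes purely from which rows landed in the test set, since the model and the data are identical in every run.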

This is precisely where the importance of cross-validation in machine learning becomes undeniable.

What is Cross-Validation in Machine Learning?

So, what is cross-validation in machine learning?

Cross-validation (CV) is a resampling procedure used to evaluate machine learning models on a limited data sample. The idea is simple yet powerful: rather than relying on a single train-test split, we perform several. In effect, we create multiple “practice tests” for the model, so that every part of the data gets a turn in a testing set.

The role of cross-validation in ML is to provide a more stable and reliable estimator of performance on independent data, to help answer the important question: “How well will my model perform when being deployed in the real world?”

Averaging performance across these multiple test runs gives us a more robust, more stable estimate of the model’s true generalization ability, and reduces the “luck of the draw” effect that comes with a single train-test split.

The General Cross-Validation Steps

Even though there are multiple types of this technique, the basic cross-validation steps are the same:

- Partition the Dataset: Split the original dataset into several smaller subsets of roughly equal size. These subsets are generally called “folds”.

- Hold Out a Fold: Set aside one of these folds for validation (the “test” set for this iteration).

- Train the Model: Fit your machine learning model on all the other folds combined (the training set).

- Evaluate and Score: Test the fitted model on the held-out fold and record the performance metric (e.g., accuracy, precision, RMSE).

- Repeat: Continue this process until all folds have served as the validation set exactly once.

- Aggregate Results: Compute the average of each of the performance scores from each iteration. This average score is now your final cross-validation performance estimation. Often, a standard deviation is also computed to understand how variable the model’s performance is.

This process ensures every single data point has been used for validation as well as training, so it is a much more efficient use of your data.
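The steps above can be sketched as an explicit loop. This is a minimal illustration, assuming scikit-learn's `KFold` splitter, the Iris dataset, and a linear SVM; the variable names are hypothetical.

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import load_iris
from sklearn.model_selection import KFold
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)
base_model = SVC(kernel="linear")

# Step 1: partition the dataset into 5 folds (shuffled, fixed seed for repeatability).
kf = KFold(n_splits=5, shuffle=True, random_state=0)

fold_scores = []
for train_idx, val_idx in kf.split(X):
    # Steps 2-3: hold out one fold and fit a fresh copy of the model on the rest.
    model = clone(base_model)
    model.fit(X[train_idx], y[train_idx])
    # Step 4: score the fitted model on the held-out fold.
    fold_scores.append(model.score(X[val_idx], y[val_idx]))

# Step 6: aggregate the per-fold scores.
mean_score = np.mean(fold_scores)
std_score = np.std(fold_scores)
print(f"accuracy: {mean_score:.3f} +/- {std_score:.3f}")
```

Cloning the estimator in each iteration keeps the folds independent, so nothing learned on one training set carries over to the next.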

Cross-Validation Techniques

Different scenarios and datasets call for different cross-validation techniques. Let’s explore the most common ones one by one.

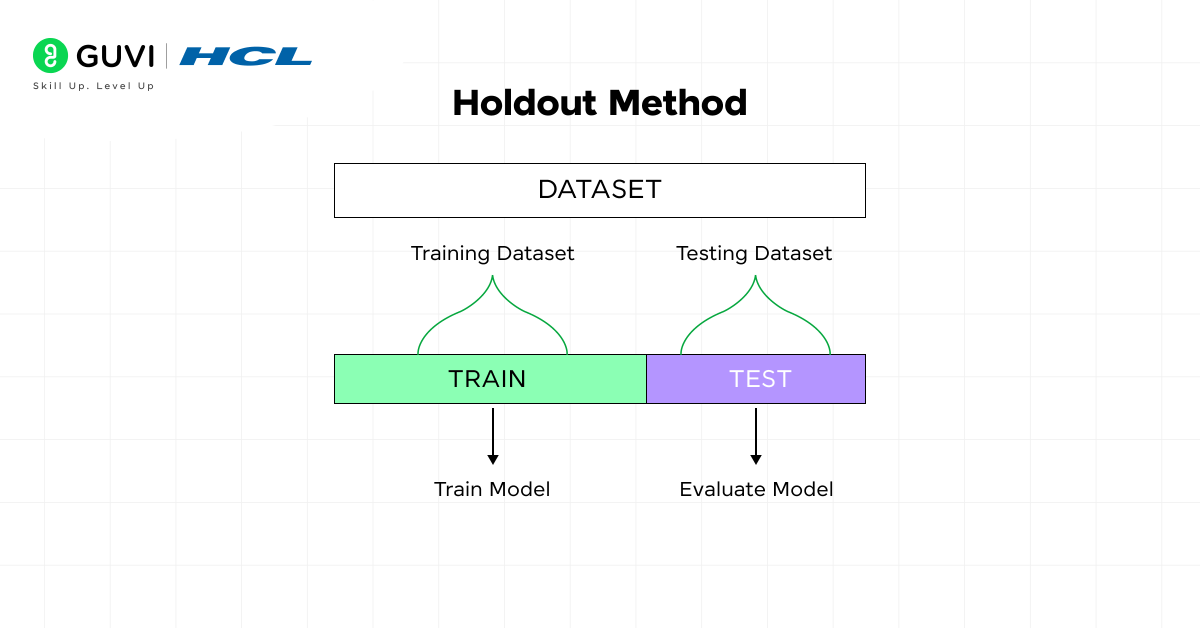

1. Holdout Method

The holdout method is the simplest validation technique and is often called the train-test split. It is the foundation on which more advanced techniques, such as cross-validation, are built.

How it works:

It works by splitting your dataset into two mutually exclusive sets:

- Training Set: A majority of the data (e.g., 70-80%) is used to train the machine learning model. The model learns all patterns and relationships from this data.

- Holdout Set (or Test Set): The remaining portion of the data (e.g., 20-30%) that is kept separate and “held out” from the training process. This unseen data is used to evaluate the final model’s performance.

The idea is to simulate how the model would perform on new, real-world data.

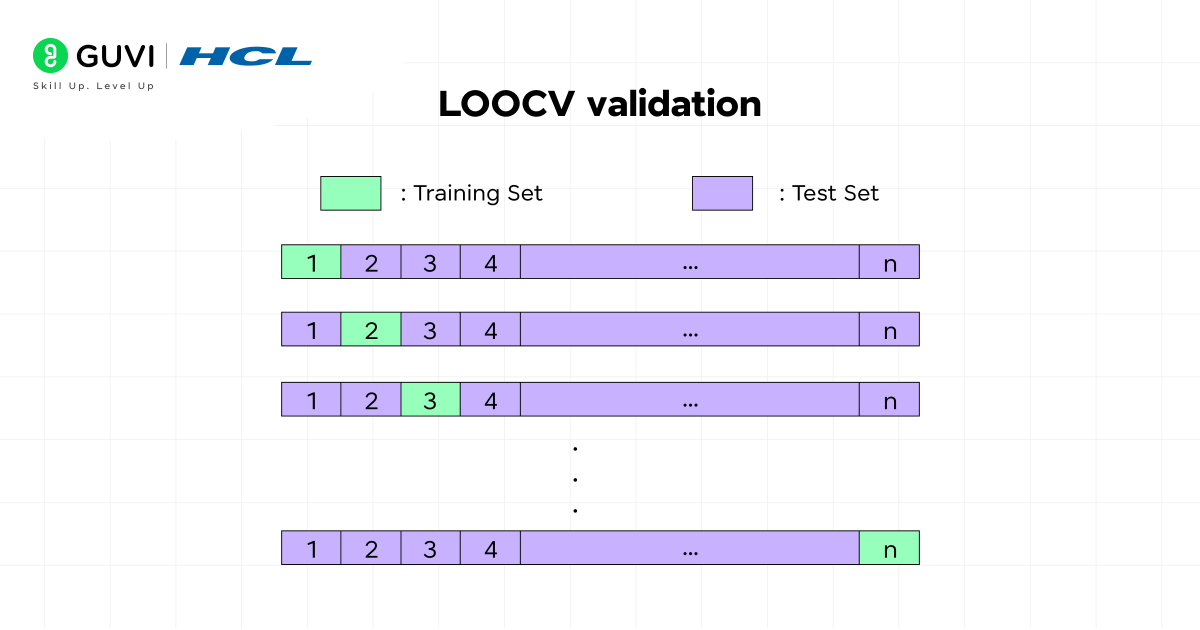

2. Leave-One-Out Cross-Validation (LOOCV)

Leave-One-Out cross-validation is the most extreme and computationally intensive version of K-Fold. In LOOCV, the value of K is equal to N, where N is the total number of data points in your dataset.

How it works:

- You iterate N times.

- In each iteration, you pick a single data point to be your validation set.

- You train the model on the remaining N-1 data points.

- You test the model on that one held-out data point.

- The final performance is the average of the N scores.

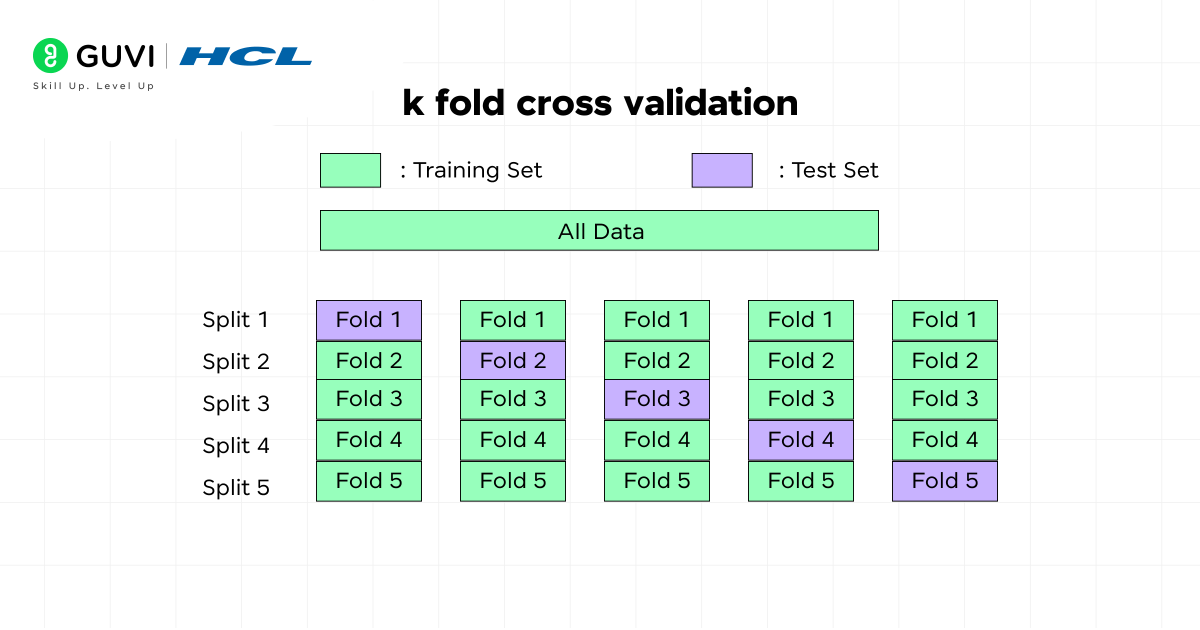
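As a rough illustration of LOOCV, the sketch below uses scikit-learn's `LeaveOneOut` splitter with `cross_val_score`. The Iris dataset and linear SVM are assumptions chosen to keep the run short (N = 150 training runs).

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import LeaveOneOut, cross_val_score
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)

# One split per data point: train on N-1 rows, validate on the remaining one.
loo = LeaveOneOut()
loo_scores = cross_val_score(SVC(kernel="linear"), X, y, cv=loo)

print(f"Number of training runs: {len(loo_scores)}")
# Each individual score is 0 or 1 (the single held-out point is wrong or right),
# so the mean is the fraction of points predicted correctly.
print(f"LOOCV accuracy: {loo_scores.mean():.3f}")
```

Because each validation set holds a single point, the per-iteration scores are only meaningful once averaged.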

3. K-Fold Cross-Validation

This is the workhorse and the most widely used cross-validation technique. The ‘K’ in K-Fold cross-validation refers to the number of folds you split your data into.

How it works:

- The dataset is randomly shuffled and then partitioned into K equal-sized folds.

- The process then iterates K times. In each iteration, a different fold is chosen as the validation set, and the remaining K-1 folds are used for training.

- The performance scores from the K iterations are then averaged to produce the final K-Fold cross-validation score.

A common choice for K is 5 or 10. A K value of 5 means the data is split into 5 folds, and the model is trained and evaluated 5 times. A K of 10 means 10 folds and 10 iterations.

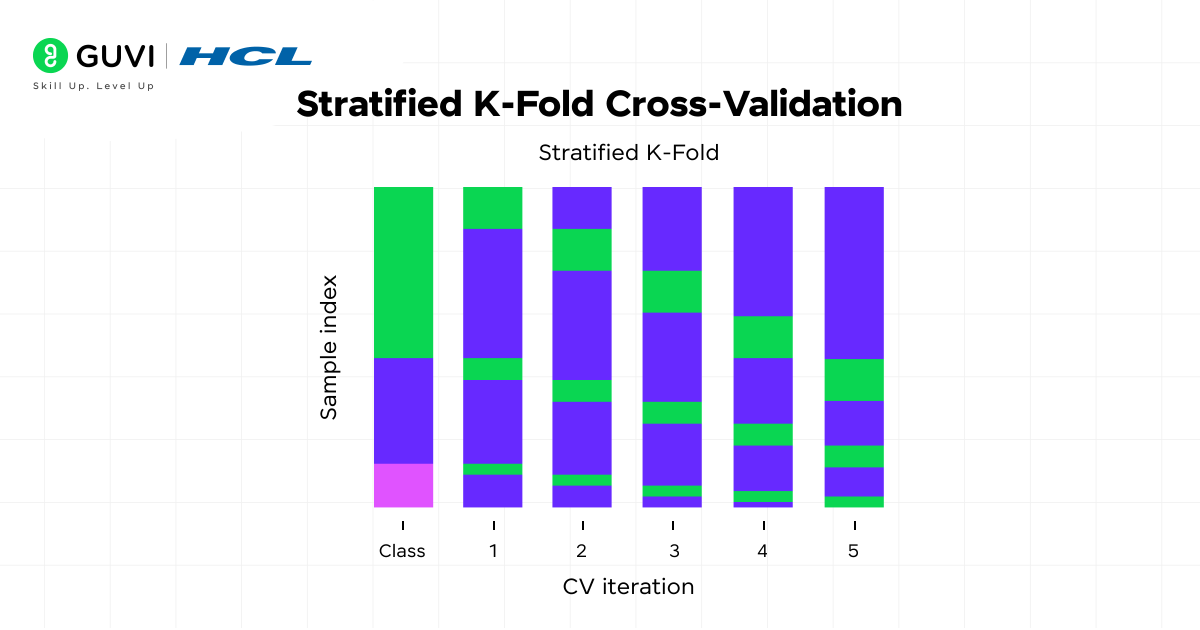
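To see what the folds actually look like, here is a small sketch on a toy array of 10 samples with K=5. Shuffling is deliberately left off so the fold boundaries are easy to read; the toy data is an assumption made purely for illustration.

```python
import numpy as np
from sklearn.model_selection import KFold

# Ten toy samples, indexed 0..9.
toy_X = np.arange(10).reshape(-1, 1)

# shuffle defaults to False, so folds are consecutive blocks of indices.
kf = KFold(n_splits=5)

folds = []
for i, (train_idx, val_idx) in enumerate(kf.split(toy_X), 1):
    folds.append((train_idx.tolist(), val_idx.tolist()))
    print(f"Fold {i}: train={train_idx.tolist()} validate={val_idx.tolist()}")
# Fold 1 validates on [0, 1], fold 2 on [2, 3], and so on up to [8, 9].
```

Every index appears in exactly one validation fold and in the training set of the other four iterations.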

4. Stratified K-Fold Cross-Validation

Standard K-Fold is great for balanced datasets. But what happens when you have an imbalanced dataset, like in fraud detection, where the positive class is rare, or in medical diagnosis, where the disease class is significantly smaller than the healthy class?

If you use standard K-Fold, the data gets shuffled randomly, and some folds might end up with very few, or even zero, minority instances. This is a serious problem: a validation fold with no minority examples tells you nothing about how the model handles that class, and a training set that is short on minority examples gives the model little to learn from.

This is where Stratified K-fold cross-validation comes into play.

How it works:

Stratified K-Fold cross-validation is a variation of K-fold, which makes sure class distribution is maintained in each fold. For example, if your dataset had 90% Class A and 10% Class B overall, stratified K-fold makes sure each of the K folds will also have approximately 90% Class A and 10% Class B.

This is absolutely critical for classification problems with class imbalance, as you need the evaluation to be meaningful and reflective of the overall distribution of data.
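The difference can be sketched with synthetic labels: 90 samples of class 0 followed by 10 of class 1. The data is made up for illustration, and plain `KFold` is run without shuffling here to show the worst case on sorted labels. Shuffling would soften the imbalance between folds but would not guarantee the class ratio.

```python
import numpy as np
from sklearn.model_selection import KFold, StratifiedKFold

# Synthetic, sorted labels: 90% class 0, 10% class 1 (features are placeholders).
y_imb = np.array([0] * 90 + [1] * 10)
X_imb = np.zeros((100, 1))

# Count minority-class samples in each validation fold.
plain_counts = [
    int(y_imb[val_idx].sum()) for _, val_idx in KFold(n_splits=5).split(X_imb)
]
strat_counts = [
    int(y_imb[val_idx].sum())
    for _, val_idx in StratifiedKFold(n_splits=5).split(X_imb, y_imb)
]

print("KFold minority counts:          ", plain_counts)  # [0, 0, 0, 0, 10]
print("StratifiedKFold minority counts:", strat_counts)  # [2, 2, 2, 2, 2]
```

With stratification, every fold of 20 samples keeps the overall 90/10 ratio (18 majority, 2 minority).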

- Cross-validation is a secret weapon for competitors. Top data scientists on Kaggle use it to estimate model performance on hidden test data before submitting.

- There’s a reason K=10 is so popular: it is widely regarded as a “Goldilocks choice” that balances the reliability of the performance estimate against computational cost.

- Leave-One-Out Cross-Validation (LOOCV) can train a model thousands of times. With 5,000 rows, that means 5,000 separate training runs!

Cross Validation vs Train-Test Split

A very common question is: why not just use the train-test split?

Here is why:

| Aspect | Train-Test Split | Cross Validation (CV) |
| --- | --- | --- |
| Process | Splits data once into training and testing sets | Splits data multiple times into folds |
| Reliability | Results may vary depending on the split | More reliable, as performance is averaged |
| Bias/Variance | Estimate can swing widely with the particular split | Lower-variance estimate, averaged over several splits |
| Use Case | Quick testing or very large datasets | Model evaluation, small to medium datasets |

Python Implementation of K-Fold Cross Validation

Step 1: Import the Necessary Libraries

We’ll import the required modules from scikit-learn.

    from sklearn.model_selection import KFold, cross_val_score
    from sklearn.svm import SVC
    from sklearn.datasets import load_iris

Step 2: Load the Dataset

We’ll use the famous Iris dataset, which is built into scikit-learn. It’s a multiclass classification dataset.

    iris = load_iris()
    X, y = iris.data, iris.target

Step 3: Create the Classifier

We’ll use an SVM (Support Vector Machine) classifier with a linear kernel.

    svm_classifier = SVC(kernel='linear')

Step 4: Define the Number of Folds

We’ll perform 5-fold cross-validation, which means the dataset will be split into 5 equal folds. Each fold will be used once as a test set while the remaining 4 are used for training.

    num_folds = 5
    # Shuffle before splitting; the fixed seed makes the folds reproducible.
    kf = KFold(n_splits=num_folds, shuffle=True, random_state=42)

Step 5: Perform K-Fold Cross Validation

Now we run the cross-validation using cross_val_score().

    cross_val_results = cross_val_score(svm_classifier, X, y, cv=kf)

Step 6: Evaluate the Results

We print the accuracy for each fold and the average accuracy across all folds.

    print("Cross-Validation Accuracy Scores:")
    for i, result in enumerate(cross_val_results, 1):
        print(f" Fold {i}: {result * 100:.2f}%")
    print(f"Mean Accuracy: {cross_val_results.mean() * 100:.2f}%")

Output

    Cross-Validation Accuracy Scores:
     Fold 1: 96.67%
     Fold 2: 100.00%
     Fold 3: 96.67%
     Fold 4: 96.67%
     Fold 5: 96.67%
    Mean Accuracy: 97.33%

The output shows the accuracy score from each fold. The mean accuracy (~97.3%) represents the model’s overall performance across all folds, making it more reliable than a single train-test split.

Advantages of Cross-Validation

- More accurate performance estimate: By averaging performance across several validation sets, the final metric is much less sensitive to the specific way the data was split into train and test. It gives you a much better idea of how your model will perform on unseen data.

- More efficient use of the data: With a basic train-test split, some of the data will never be used for training. In K-Fold cross-validation, every single data point is in a validation set once and is used for training K-1 times. This is important, particularly when the amount of data available is small and every data point is precious.

- Better model selection: K-Fold cross-validation provides a reliable way to compare different algorithms (e.g., Logistic Regression vs Random Forest) or different versions of the same algorithm, especially when every candidate is scored on the same folds.

- Base for hyperparameter tuning: Many machine learning models have “hyperparameters,” parameters not learned from the data but set by the data scientist (e.g., the number of trees in a Random Forest model). Cross-validation is the standard way of scoring candidate values for these hyperparameters in search procedures such as Grid Search or Randomized Search.
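The model-selection and hyperparameter-tuning points can be sketched together. This is a hedged example, assuming the Iris dataset, scikit-learn's `GridSearchCV`, and a small hypothetical grid over the number of trees; none of these values come from the article.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, StratifiedKFold, cross_val_score

X, y = load_iris(return_X_y=True)

# Reuse one splitter so every candidate is scored on identical folds.
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

# Model selection: compare two algorithms by mean cross-validated accuracy.
candidates = {
    "logistic_regression": LogisticRegression(max_iter=1000),
    "random_forest": RandomForestClassifier(n_estimators=50, random_state=0),
}
comparison = {
    name: cross_val_score(estimator, X, y, cv=cv).mean()
    for name, estimator in candidates.items()
}
for name, score in comparison.items():
    print(f"{name}: {score:.3f}")

# Hyperparameter tuning: grid search scores each setting with the same CV scheme.
param_grid = {"n_estimators": [25, 50, 100]}  # illustrative values only
search = GridSearchCV(RandomForestClassifier(random_state=0), param_grid, cv=cv)
search.fit(X, y)
print("best params:", search.best_params_)
print(f"best CV accuracy: {search.best_score_:.3f}")
```

`RandomizedSearchCV` follows the same pattern but samples settings from the grid instead of trying all of them.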

Disadvantages of Cross-Validation

- High Computation Expense: Cross-validation entails training a model multiple times (K times for K-Fold), which is time-consuming and expensive for complex models or large datasets.

- Not Directly Applicable to Time-Series Data: Standard K-Fold with shuffling destroys the chronological order that time-series datasets depend on. This leads to data leakage (using the future to predict the past) and unrealistically good performance. Time-ordered data calls for splits that always train on the past and validate on the future.

- Does Not Produce a Final Model: Cross-validation is used for evaluation, not for building the final product. After you’ve used cross-validation to determine the best approach, you typically retrain on the entire dataset to build the one model you will actually deploy.
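For the time-series limitation, one common workaround is a forward-chaining split. The sketch below uses scikit-learn's `TimeSeriesSplit` on eight toy time steps; it is a technique swapped in for illustration, not something the article itself prescribes, and the toy data is an assumption.

```python
import numpy as np
from sklearn.model_selection import TimeSeriesSplit

# Eight observations in chronological order (index = time step).
series_X = np.arange(8).reshape(-1, 1)

# Each split trains on an expanding window of the past and validates on what follows.
tscv = TimeSeriesSplit(n_splits=3)
ts_splits = [
    (train_idx.tolist(), val_idx.tolist())
    for train_idx, val_idx in tscv.split(series_X)
]

for i, (train_idx, val_idx) in enumerate(ts_splits, 1):
    print(f"Split {i}: train={train_idx} validate={val_idx}")
# Split 1: train=[0, 1] validate=[2, 3]
# Split 2: train=[0, 1, 2, 3] validate=[4, 5]
# Split 3: train=[0, 1, 2, 3, 4, 5] validate=[6, 7]
```

No validation index ever precedes a training index, so the model is never asked to predict the past from the future.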


Final Thoughts..

The importance of cross-validation in machine learning cannot be overstated. It is the bridge between a model that works on your laptop and a model that delivers real value in a production environment. While a simple train-test split can give you a quick first impression, cross-validation provides the robust, reliable evidence needed to make informed decisions.

By mastering techniques like K-Fold cross-validation and Stratified K-Fold cross-validation, you move from simply building models to engineering trustworthy and high-performing machine learning systems. It is an indispensable tool in any data scientist’s toolkit, a fundamental practice that separates amateur efforts from professional, production-ready solutions.

FAQs

1. Why is cross validation important in ML?

Cross validation in machine learning is important because it helps detect overfitting and underfitting. It gives a more reliable estimate of how the model will perform on unseen data than a single train-test split.

2. Is cross validation used in deep learning?

Yes, but not in all cases. In deep learning, datasets are often large and models are expensive to train, so k-fold CV becomes computationally heavy. Therefore, holdout validation (typically a train-validation-test split) is usually used instead, although k-fold CV can still be useful for smaller datasets.

3. Can cross validation be used for regression models?

Yes, cross validation works for both classification and regression problems. For regression, techniques like k-fold CV are commonly applied to evaluate metrics such as Mean Squared Error (MSE) or R² score.
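As a minimal sketch of cross-validation for regression, the example below scores a linear regression with both MSE and R². The diabetes dataset and the model are assumptions chosen for illustration. Note that scikit-learn reports MSE as a negated score (`neg_mean_squared_error`), so it is flipped back here.

```python
from sklearn.datasets import load_diabetes
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold, cross_val_score

X_reg, y_reg = load_diabetes(return_X_y=True)
cv = KFold(n_splits=5, shuffle=True, random_state=0)

# scikit-learn scorers follow "higher is better", so MSE comes back negated.
neg_mse = cross_val_score(
    LinearRegression(), X_reg, y_reg, cv=cv, scoring="neg_mean_squared_error"
)
mse_per_fold = -neg_mse

r2_per_fold = cross_val_score(LinearRegression(), X_reg, y_reg, cv=cv, scoring="r2")

print(f"mean MSE: {mse_per_fold.mean():.1f}")
print(f"mean R^2: {r2_per_fold.mean():.3f}")
```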

4. When should I use stratified cross validation?

Stratified cross validation should be used when your dataset is imbalanced (e.g., fraud detection, medical diagnosis). It ensures that each fold maintains the same class distribution as the full dataset.
