Understanding the KNN Algorithm in Machine Learning

Last Updated: Aug 21, 2025 | 6 Min Read

If you’re delving into machine learning, you’ll almost certainly encounter the K-Nearest Neighbors (KNN) algorithm early on. It is one of the simplest and most intuitive algorithms in the field.

It has been around for decades and remains a popular choice for learning and benchmarking due to its ease of implementation and reasonably good accuracy on many basic tasks.

In this article, we’ll explore what the KNN algorithm in machine learning is, how it works, and why it’s important. By the end, you’ll understand KNN’s strengths and weaknesses, see examples (including a bit of code), and even test your knowledge with a quick quiz. Without any delay, let’s get started!

Table of contents

- What are K-Nearest Neighbors (KNN)?

- How does the KNN algorithm work?

- 1. Choose the Value of K

- 2. Measure Distance from the New Data Point

- 3. Identify the K Nearest Neighbors

- 4. Make the Prediction

- Bonus Insight: Weighted Neighbors

- What Happens Behind the Scenes?

- Choosing the value of K

- Applications of KNN

- Healthcare and Medical Diagnosis

- Recommendation Systems

- Data Imputation

- Finance: Credit Scoring & Stock Prediction

- Pattern Recognition: Image and Text Classification

- Advantages and Disadvantages of KNN

- Advantages of KNN

- Disadvantages of KNN

- Quick Quiz – Test Your Understanding

- Conclusion

- FAQs

- What is the KNN algorithm in machine learning and how does it work?

- How do I choose the best value of K in KNN?

- What are the main advantages and disadvantages of using KNN?

- When should I not use the KNN algorithm?

- Is KNN a supervised or unsupervised learning algorithm?

What are K-Nearest Neighbors (KNN)?

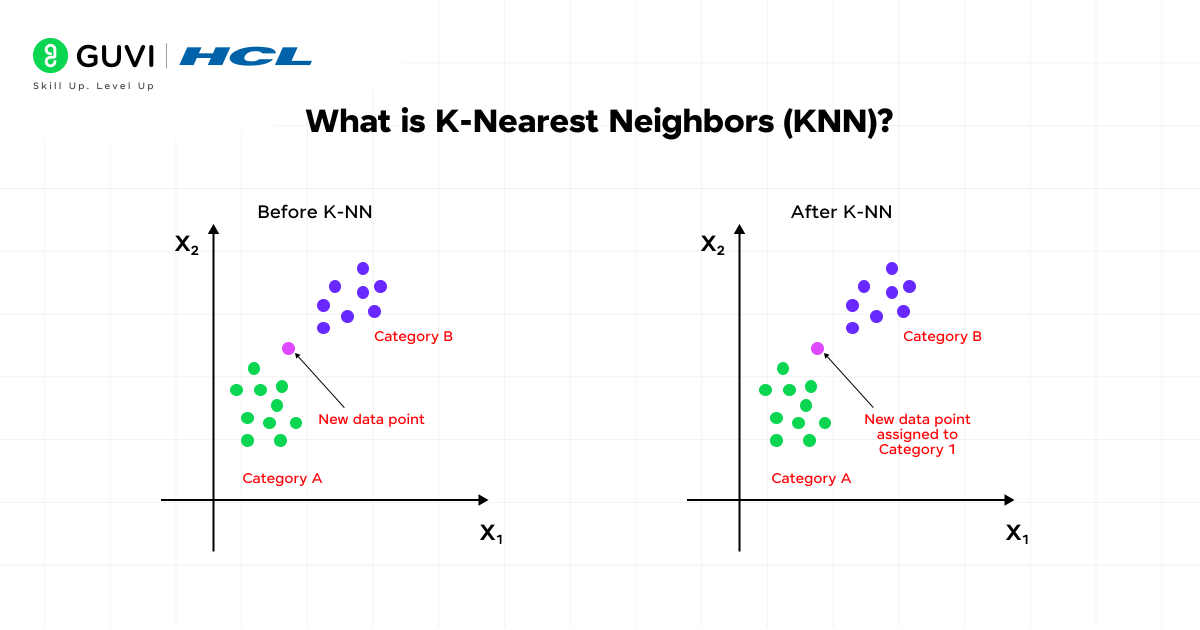

K-Nearest Neighbors (KNN) is a supervised learning algorithm that can be used for both classification and regression tasks. The core idea is simple: to make a prediction for a new data point, KNN looks at the “K” closest data points in the training set and bases the prediction on those neighbors.

KNN is often described as a “lazy” learning algorithm, and for good reason. Unlike many other algorithms, KNN does not build an explicit model or perform intensive training computations upfront.

There is essentially no training phase; the algorithm simply stores the training data. All the heavy lifting (calculating distances, finding neighbors, etc.) happens at prediction time, when you query the algorithm with a new data point.

This lazy approach means KNN is very easy to implement and understand, but it also implies that prediction can be slow if the dataset is large (since it might need to scan through all training points to make each prediction). We’ll discuss these trade-offs more later.

Did you know?

The basic ideas behind KNN were introduced way back in 1951 by Evelyn Fix and Joseph Hodges, and later expanded by Thomas Cover and Peter Hart in 1967. This makes KNN one of the earliest machine learning algorithms. It’s still taught today as a foundational technique due to its simplicity and effectiveness on small problems.

How does the KNN algorithm work?

At its heart, the KNN algorithm can be summarized in a few straightforward steps. Imagine you have a dataset of labeled points (for example, students labeled by whether they passed or failed a course, based on their study hours and sleep hours). Here’s how KNN would approach this:

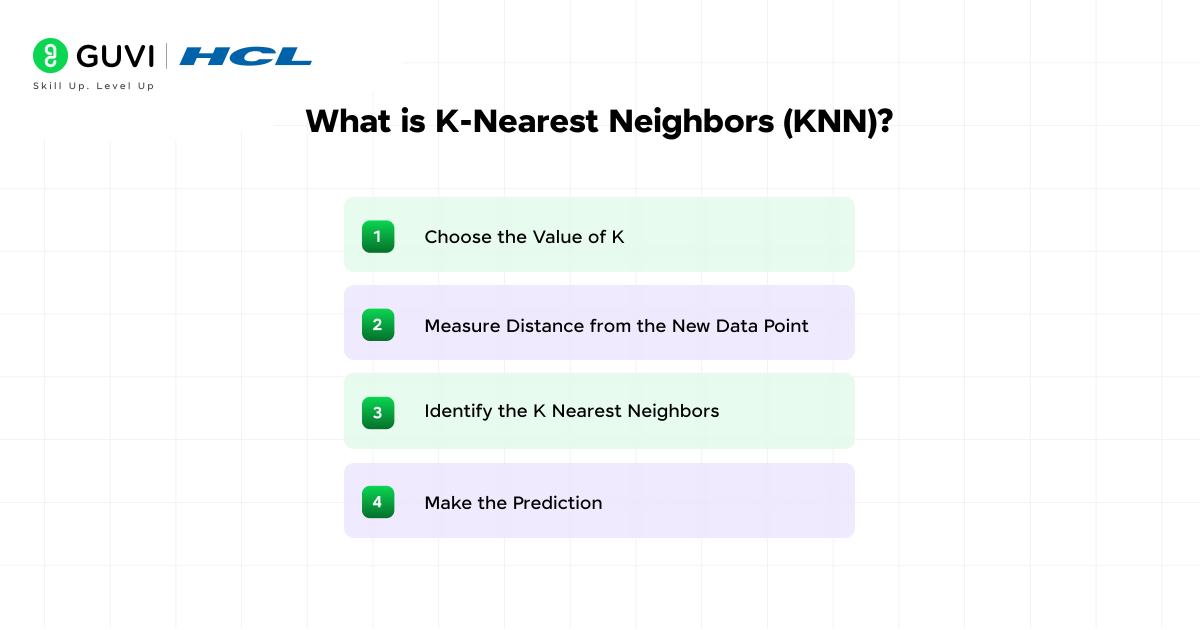

1. Choose the Value of K

You start by selecting K, the number of neighbors the algorithm should consider. This is a user-defined number. For binary classification, an odd value like 3, 5, or 7 is typical because it rules out tied votes; with three or more classes, ties can still happen.
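A quick way to see why odd values help, and where they stop helping, is to count votes directly. This is a minimal Python sketch using the standard library's `collections.Counter`; the helper name `is_tied` and the neighbor labels are hypothetical, chosen for illustration.

```python
from collections import Counter

def is_tied(neighbor_labels):
    """Return True if two or more classes share the highest vote count."""
    counts = Counter(neighbor_labels).most_common()
    return len(counts) > 1 and counts[0][1] == counts[1][1]

# Hypothetical labels of the five nearest neighbors, closest first
labels = ["pass", "fail", "pass", "fail", "pass"]

print(is_tied(labels[:4]))                     # True: K = 4 gives a 2-2 tie
print(is_tied(labels[:5]))                     # False: K = 5 gives "pass" a 3-2 win
print(is_tied(["pass", "fail", "withdrew"]))   # True: odd K, but three classes
```

With two classes, any odd K rules out a tie, but the last call shows that three classes can still split evenly even when K is odd.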

2. Measure Distance from the New Data Point

To find which neighbors are “closest,” the algorithm calculates the distance between the new data point and all points in the training set.

Common distance metrics include:

- Euclidean distance – straight-line distance (most commonly used)

- Manhattan distance – like navigating city blocks

- Minkowski distance – a generalized form of both

- Hamming distance – for categorical or binary data
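Each metric above boils down to a few lines of arithmetic. The following Python functions are a minimal, dependency-free sketch (the function names are our own, not a library API); Python 3.8+ also provides `math.dist` for the Euclidean case.

```python
import math

def euclidean(a, b):
    """Straight-line distance: square root of the summed squared differences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def manhattan(a, b):
    """City-block distance: sum of absolute differences."""
    return sum(abs(x - y) for x, y in zip(a, b))

def minkowski(a, b, p):
    """Generalized distance: p = 1 gives Manhattan, p = 2 gives Euclidean."""
    return sum(abs(x - y) ** p for x, y in zip(a, b)) ** (1 / p)

def hamming(a, b):
    """Number of positions where two categorical vectors differ."""
    return sum(x != y for x, y in zip(a, b))

print(euclidean((0, 0), (3, 4)))              # 5.0
print(manhattan((0, 0), (3, 4)))              # 7
print(hamming(("red", "S"), ("red", "M")))    # 1
```

Setting `p = 1` or `p = 2` in `minkowski` reproduces the Manhattan and Euclidean results, which is why it is called the generalized form.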

Note: It’s important to scale your features (for example, with min-max normalization or standardization) before calculating distances; otherwise, features with larger numeric ranges will dominate the distance.
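Min-max normalization is one common way to put features on the same scale. The sketch below assumes a small in-memory list of numeric rows; the column meanings (age and annual income) are hypothetical.

```python
def min_max_scale(rows):
    """Rescale each column to [0, 1] using that column's min and max."""
    columns = list(zip(*rows))
    mins = [min(col) for col in columns]
    maxs = [max(col) for col in columns]
    return [
        tuple(
            # A constant column has no spread, so map it to 0.0 instead of dividing by zero.
            (x - lo) / (hi - lo) if hi != lo else 0.0
            for x, lo, hi in zip(row, mins, maxs)
        )
        for row in rows
    ]

# Hypothetical rows: (age in years, annual income in dollars)
data = [(25, 40_000), (45, 42_000), (30, 90_000)]
print(min_max_scale(data))
```

In the raw data, a 20-year age gap is dwarfed by a $2,000 income gap in any distance calculation; after scaling, both features range from 0 to 1. In a real workflow, compute the minimum and maximum on the training data only and reuse them to scale new query points.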

3. Identify the K Nearest Neighbors

Once all distances are computed, KNN sorts the training points based on proximity to the new input.

- The K closest data points (based on the chosen distance metric) are selected.

- These are the points that will influence the prediction.

Think of this as forming a tight little neighborhood around your query point.
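Here is one way to carry out this step in Python. It computes every distance with `math.dist` (Python 3.8+) and then keeps the K smallest with `heapq.nsmallest`, which gives the same result as sorting everything and taking the first K. The study-hours and sleep-hours values are made up for illustration.

```python
import heapq
import math

def k_nearest(train_points, query, k):
    """Return (distance, index) pairs for the k training points closest to query."""
    distances = [(math.dist(point, query), i) for i, point in enumerate(train_points)]
    # nsmallest avoids a full sort when k is much smaller than the dataset.
    return heapq.nsmallest(k, distances)

# Hypothetical (study hours, sleep hours) pairs
train = [(2, 9), (8, 6), (7, 7), (1, 5), (9, 8)]
print(k_nearest(train, (8, 7), k=3))
```

Each result is a `(distance, index)` pair, so ties on distance are broken by the lower training index.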

4. Make the Prediction

Now comes the decision-making.

- For classification, the algorithm performs a majority vote among the K neighbors. Whichever class appears most frequently becomes the predicted class.

- For regression, it takes the average of the target values of the K neighbors and uses that as the prediction.

For example, if K=5 and 3 out of 5 neighbors belong to class A, KNN predicts class A for the new point.
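Both prediction rules are one-liners once the neighbors are known. A minimal sketch, assuming the K neighbors' labels or target values have already been collected into a list (the function names are our own):

```python
from collections import Counter
from statistics import mean

def predict_class(neighbor_labels):
    """Classification: the most frequent label among the K neighbors."""
    return Counter(neighbor_labels).most_common(1)[0][0]

def predict_value(neighbor_targets):
    """Regression: the mean of the K neighbors' target values."""
    return mean(neighbor_targets)

print(predict_class(["A", "B", "A", "A", "B"]))  # A (3 of 5 votes)
print(predict_value([70, 80, 75, 85, 90]))        # 80
```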

Bonus Insight: Weighted Neighbors

In some cases, KNN can be made smarter by weighting neighbors based on their distance — closer neighbors get more influence than farther ones. This can help reduce noise and improve accuracy.
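A common weighting scheme gives each neighbor a vote of 1 / distance (scikit-learn's `KNeighborsClassifier` offers this as `weights="distance"`). The sketch below is a hypothetical, from-scratch version; the small `eps` offset is our own assumption for avoiding division by zero when a neighbor sits exactly on the query point.

```python
from collections import Counter, defaultdict

def weighted_vote(neighbors, eps=1e-9):
    """neighbors: list of (distance, label). Each vote is weighted by 1 / distance."""
    scores = defaultdict(float)
    for distance, label in neighbors:
        # eps keeps the weight finite if a neighbor matches the query exactly.
        scores[label] += 1.0 / (distance + eps)
    return max(scores, key=scores.get)

# Hypothetical neighbors: one very close "B", two farther "A"s
neighbors = [(0.5, "B"), (3.0, "A"), (4.0, "A")]

plain = Counter(label for _, label in neighbors).most_common(1)[0][0]
print(plain)                     # A: two votes beat one
print(weighted_vote(neighbors))  # B: about 2.0 outweighs about 0.58
```

In this made-up example, plain majority voting picks A, while distance weighting lets the single, much closer B neighbor win.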

What Happens Behind the Scenes?

Even though KNN feels simple, here’s what it does at prediction time:

- Scans through the entire training dataset

- Calculates the distance between the query point and all training points

- Sorts the results

- Picks the top K

- Aggregates their outputs (majority vote or average)

- Returns the final prediction

Notice that KNN doesn’t “learn” during training. It just stores the data. All the work happens when you ask it a question, which is why it’s called a lazy learning algorithm.
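Putting the steps together, a brute-force KNN classifier fits in a couple dozen lines of Python. This is an illustrative sketch, not an optimized implementation: the class name `SimpleKNNClassifier` is hypothetical, it uses Euclidean distance via `math.dist`, and it assumes features are already scaled.

```python
import math
from collections import Counter

class SimpleKNNClassifier:
    """A from-scratch, brute-force KNN classifier (illustrative, not optimized)."""

    def __init__(self, k=3):
        self.k = k

    def fit(self, X, y):
        # "Training" only stores the data: this is what makes KNN a lazy learner.
        self.X = list(X)
        self.y = list(y)
        return self

    def predict_one(self, query):
        # Compute the distance from the query to every training point.
        distances = [(math.dist(x, query), label) for x, label in zip(self.X, self.y)]
        # Sort by distance and keep the K closest.
        nearest = sorted(distances, key=lambda pair: pair[0])[: self.k]
        # Majority vote among the K neighbors' labels.
        votes = Counter(label for _, label in nearest)
        return votes.most_common(1)[0][0]

    def predict(self, queries):
        return [self.predict_one(q) for q in queries]

# Hypothetical data: (study hours, sleep hours) -> outcome
X_train = [(1, 4), (2, 5), (3, 4), (7, 8), (8, 7), (9, 8)]
y_train = ["fail", "fail", "fail", "pass", "pass", "pass"]

model = SimpleKNNClassifier(k=3).fit(X_train, y_train)
print(model.predict([(2, 4), (8, 8)]))  # ['fail', 'pass']
```

Note that `fit` does nothing but copy the data; all of the distance computation happens inside `predict_one`. Libraries such as scikit-learn can speed up the neighbor search with tree-based indexes (KD-trees, ball trees), but the underlying idea is the same.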

That’s essentially the whole algorithm! No model fitting or learned coefficients are involved: the “model” is just the stored data itself, and each prediction comes from these simple calculations at query time. This simplicity is what makes KNN appealing.

Choosing the value of K

One important decision when using KNN is selecting an appropriate value for K, the number of neighbors. The choice of K can significantly impact your model’s performance.

If you choose a very small K (like K = 1), the model becomes very sensitive to individual data points – it may capture noise or outliers in the training data, leading to overfitting (high variance, low bias).

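On the other hand, choosing a very large K (say K = N, the size of the whole dataset) makes the model overly general. With unweighted voting, every query then gets the same neighbors, so a classifier always predicts the majority class and a regressor always predicts the overall mean. Ignoring useful local patterns like this leads to underfitting (high bias).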

There is a trade-off: lower K tends to yield more complex models (flexible but potentially noisy), while higher K yields smoother, more generalized models.
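In practice, K is usually picked by cross-validation. The sketch below uses leave-one-out cross-validation, where each point is predicted from all the others, on a tiny hypothetical 1-D dataset that contains one deliberately mislabeled point (the B at x = 2). The helper names are our own.

```python
import math
from collections import Counter

def knn_predict(X, y, query, k):
    """Brute-force KNN majority vote (Euclidean distance)."""
    nearest = sorted(zip(X, y), key=lambda pair: math.dist(pair[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

def leave_one_out_accuracy(X, y, k):
    """Predict each point from all the others; return the fraction predicted correctly."""
    correct = 0
    for i in range(len(X)):
        X_rest = X[:i] + X[i + 1:]
        y_rest = y[:i] + y[i + 1:]
        correct += knn_predict(X_rest, y_rest, X[i], k) == y[i]
    return correct / len(X)

# Hypothetical 1-D data with one noisy label ("B" at x = 2)
X = [(0,), (1,), (2,), (3,), (4,), (10,), (11,), (12,), (13,), (14,)]
y = ["A", "A", "B", "A", "A", "B", "B", "B", "B", "B"]

for k in (1, 3, 5, 9):
    print(k, leave_one_out_accuracy(X, y, k))
```

On this toy data, K = 1 is thrown off by the noisy point, K = 3 and K = 5 do best, and K = 9 (every remaining point) is swamped by the larger B class and underfits. The exact numbers depend entirely on the data, so treat this as an illustration of the trade-off rather than a rule.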

Applications of KNN

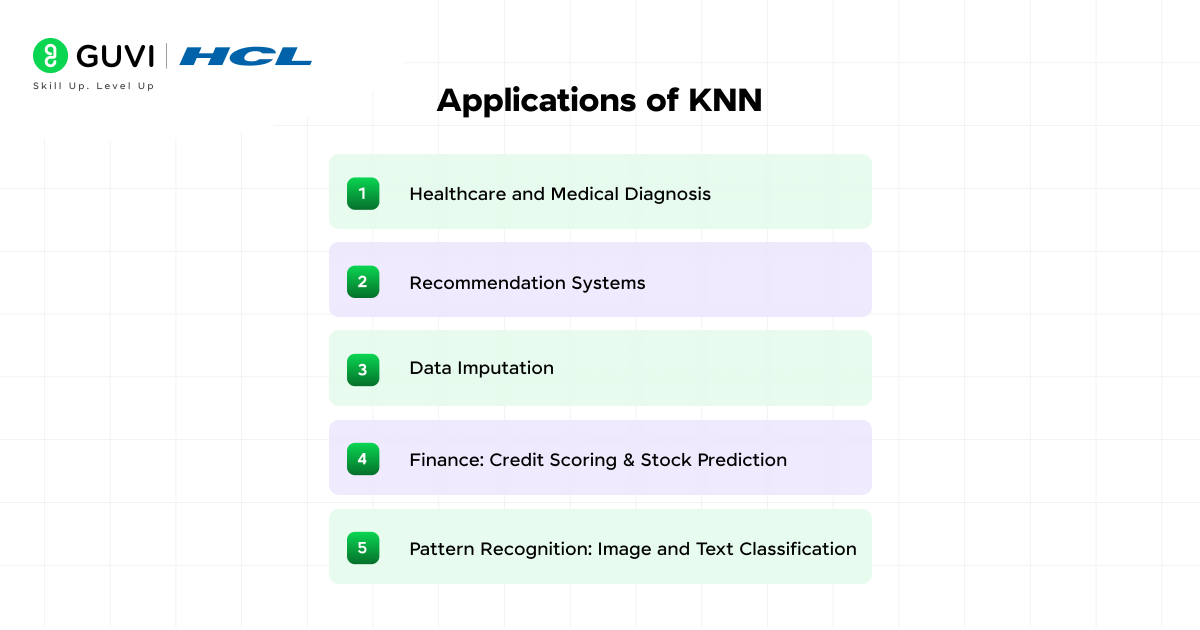

KNN might be simple, but it has a wide range of applications, especially for straightforward tasks or as a baseline to compare against more complex models. Here are some areas where KNN can be and has been applied:

1. Healthcare and Medical Diagnosis

KNN has been applied to predictive diagnostics. Given a patient’s health metrics, it compares them with past cases to predict risks like heart disease or diabetes.

- If most similar patients had a certain condition, the new patient is flagged for the same.

- Works well in scenarios where labeled medical data is available.

For example, KNN has been used in breast cancer detection by comparing tumor characteristics to historical cases.

2. Recommendation Systems

Some basic recommendation engines use KNN to suggest content based on user similarity.

- If you watch a certain set of movies, KNN finds users with similar watch patterns.

- Recommendations come from what your “neighbors” liked.

It’s simple but effective, especially when combined with other models in hybrid systems.
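User-based recommendation can be sketched as a nearest-neighbor search over watch histories. This hypothetical example represents each user as a 0/1 vector over a fixed movie list and uses cosine similarity (higher means closer) instead of a distance; the users, movies, and function names are invented for illustration.

```python
import math

MOVIES = ["Alien", "Heat", "Up", "Coco", "Jaws"]

def cosine_similarity(a, b):
    """Cosine of the angle between two watch vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0

def recommend(target, users, k=2):
    """Suggest movies watched by the k most similar users but not yet by target."""
    neighbors = sorted(
        users, key=lambda name: cosine_similarity(target, users[name]), reverse=True
    )[:k]
    suggestions = {
        MOVIES[i]
        for name in neighbors
        for i, (mine, theirs) in enumerate(zip(target, users[name]))
        if theirs and not mine
    }
    return sorted(suggestions)

# Hypothetical watch history: 1 = watched, 0 = not watched (same order as MOVIES)
users = {
    "ana":  [1, 1, 0, 0, 1],
    "ben":  [1, 1, 0, 0, 0],
    "cara": [0, 0, 1, 1, 0],
    "dev":  [1, 0, 0, 0, 1],
}
print(recommend([1, 1, 0, 0, 0], users))  # ['Jaws']
```

The user who watched Alien and Heat is closest to ben (identical history) and ana, so the only new suggestion is Jaws, which ana watched.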

3. Data Imputation

What if your dataset has missing values?

KNN can be used to fill in missing data by looking at similar rows.

- For a missing value, KNN finds K closest rows (based on other features) and averages their values for the missing feature.

- This is called KNN imputation and is popular in data preprocessing pipelines.
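A bare-bones version of KNN imputation might look like the following. It is a sketch under simplifying assumptions: only fully complete rows serve as neighbors, distance uses only the columns the incomplete row has, and features are assumed to be on comparable scales. For real pipelines, scikit-learn ships a ready-made `sklearn.impute.KNNImputer`.

```python
import math

def knn_impute(rows, k=2):
    """Fill None entries with the mean of that column over the k nearest complete rows."""
    complete = [row for row in rows if None not in row]
    filled = []
    for row in rows:
        if None not in row:
            filled.append(list(row))
            continue
        # Measure distance only on the columns this row actually has.
        known = [j for j, value in enumerate(row) if value is not None]
        query = [row[j] for j in known]
        nearest = sorted(
            complete, key=lambda other: math.dist(query, [other[j] for j in known])
        )[:k]
        filled.append([
            value if value is not None else sum(r[j] for r in nearest) / len(nearest)
            for j, value in enumerate(row)
        ])
    return filled

# Hypothetical rows: (hours studied, hours slept, exam score); the last score is missing
rows = [[1, 2, 10], [2, 1, 14], [9, 9, 50], [1, 1, None]]
print(knn_impute(rows, k=2))
```

The last row's two nearest complete rows have scores 10 and 14, so its missing score is filled with their mean, 12.0.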

4. Finance: Credit Scoring & Stock Prediction

In the financial domain, KNN can help assess credit risk or forecast market trends.

- For credit scoring, KNN compares a loan applicant to past applicants and predicts if they’re likely to default.

- In stock price analysis, it compares current market conditions to similar historical patterns.

Of course, for large financial datasets, more scalable models are often used, but KNN is great for quick prototyping.

5. Pattern Recognition: Image and Text Classification

KNN is commonly used in image recognition and handwriting classification tasks.

- A classic example is MNIST digit classification, where KNN classifies handwritten digits by comparing their pixel values.

- In text classification, documents are turned into vectors, and KNN finds the closest topic match based on word usage.

It’s often used as a baseline for benchmarking against more advanced models.

Advantages and Disadvantages of KNN

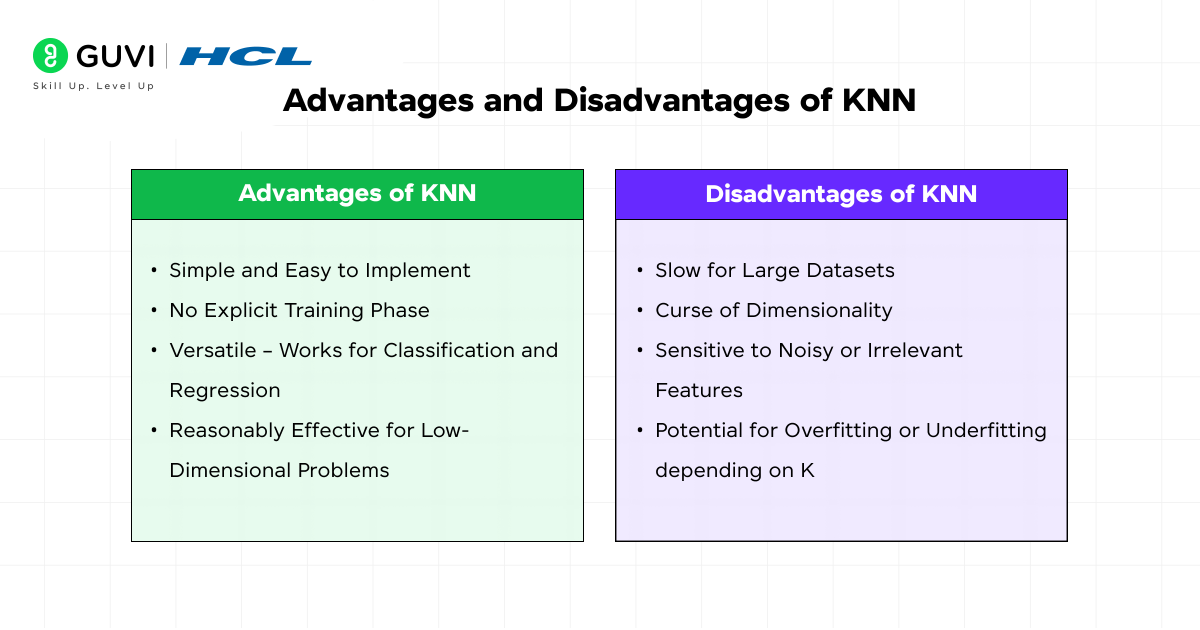

Like any algorithm, KNN has its pros and cons. It’s important to understand where KNN shines and where it struggles, especially if you’re considering it for a project.

Advantages of KNN

- Simple and Easy to Implement: KNN is about as straightforward as it gets in machine learning. There’s no complex math or optimization under the hood – just distance calculations and counting neighbors.

- No Explicit Training Phase: Since KNN is a lazy learner, you don’t need to spend time training a model (no model parameters are learned). All you do is store the data.

- Versatile – Works for Classification and Regression: KNN naturally handles both classification and regression tasks. The same algorithm can be applied to predict a discrete class or a continuous value by just changing the voting/averaging scheme.

- Reasonably Effective for Low-Dimensional Problems: For small datasets with a few features (dimensions), KNN can perform quite well and often competitively with more complex models, especially if the relationship between features and the target is not too complicated.

Disadvantages of KNN

- Slow for Large Datasets: The flip side of having no training phase is that prediction (query) time in KNN can be slow. In the worst case, to classify one new point, KNN might have to compute distances to every single point in the training dataset. That’s fine for small data, but if you have millions of points, that’s millions of distance calculations per query, which is very computationally expensive.

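- Curse of Dimensionality: KNN tends to struggle as the number of features (dimensions) in your data grows large. In high-dimensional space, points tend to be far apart from each other, and the distances from a query to its nearest and farthest neighbors become nearly equal, so the “nearest” neighbors are not much closer than any other point. This phenomenon is often called the curse of dimensionality.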
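You can observe this effect numerically. The sketch below (hypothetical helper `distance_contrast`, seeded standard-library `random` for repeatability) draws random points in a unit cube and reports how much farther the farthest point is from a query than the nearest one.

```python
import math
import random

def distance_contrast(dim, n_points=200, seed=0):
    """Ratio of farthest to nearest distance from a random query to random points in [0, 1]^dim."""
    rng = random.Random(seed)
    points = [[rng.random() for _ in range(dim)] for _ in range(n_points)]
    query = [rng.random() for _ in range(dim)]
    distances = [math.dist(p, query) for p in points]
    return max(distances) / min(distances)

for dim in (2, 10, 100, 1000):
    print(dim, round(distance_contrast(dim), 2))
```

With these settings, the ratio drops sharply as the dimension grows and ends up close to 1: the nearest and farthest points are almost equally far away, so "nearest" carries much less information.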

- Sensitive to Noisy or Irrelevant Features: Because KNN uses all features in computing distance, if some features are noisy or not relevant to the outcome, they can negatively impact distance calculations and lead to incorrect neighbor choices.

- Potential for Overfitting or Underfitting depending on K: The choice of K is critical. As discussed, a small K (like 1) can lead to overfitting: the model memorizes individual points, including noise, and can misclassify a new example simply because it lands next to one mislabeled training point. A very large K has the opposite problem, smoothing away local patterns and underfitting.

In summary, KNN is easy to use and can be quite powerful for small, well-structured problems, but it faces challenges with big, high-dimensional, or noisy data. It’s often used as a baseline or a teaching tool rather than the go-to algorithm for production systems, especially as data grows.

Quick Quiz – Test Your Understanding

Let’s make the learning interactive! Try answering the following questions to check your understanding of the KNN algorithm.

1. KNN is an example of which type of machine learning?

A. Supervised Learning

B. Unsupervised Learning

C. Reinforcement Learning

D. Deep Learning

2. For regression tasks, how does KNN derive a predicted value for a new data point?

A. It takes a majority vote among the nearest neighbors’ labels.

B. It averages the values of the nearest neighbors.

C. It chooses the value of the single closest neighbor.

D. It uses a linear regression on the nearest neighbors.

3. What is a likely outcome of choosing a very large value of K (say, K = 100) for a KNN classifier on a moderate-sized dataset?

A. The model may underfit, because it smooths out differences by considering so many neighbors.

B. The model may overfit to noise in the training data.

C. The computation time for making predictions will be independent of K.

D. The decision boundaries become more complex and wiggly.

4. Why might KNN be a poor choice for extremely large datasets or very high-dimensional data?

A. It requires storing and scanning through all training data for each prediction (slow and memory-intensive).

B. Distances in high dimensions can be misleading (many points end up far apart or equidistant).

C. It doesn’t perform any feature selection, so irrelevant features can confuse it.

D. All of the above.

Answers: 1: A, 2: B, 3: A, 4: D.

If you want to learn more about how the KNN Algorithm works in machine learning and how it can boost your learning, consider enrolling in GUVI’s Intel and IITM Pravartak Certified Artificial Intelligence and Machine Learning Course that teaches NLP, Cloud technologies, Deep learning, and much more that you can learn directly from industry experts.

Conclusion

In this article, we covered the K-Nearest Neighbors algorithm in machine learning in depth, from its definition and how it works to tips on choosing the right K and the importance of feature scaling. We discussed where the KNN algorithm can be applied, as well as its advantages and limitations.

KNN’s core philosophy is easy to grasp: “birds of a feather flock together”, meaning points with similar features likely share the same label. This intuitive approach makes KNN a great learning tool and a baseline for comparisons.

Feel free to experiment with KNN on your datasets. Try implementing it, tweak the number of neighbors, and see how it affects the results. And always remember to look at your data – sometimes the simplest method, like KNN, can surprise you with how well it works when its assumptions align with your problem. Happy learning!

FAQs

1. What is the KNN algorithm in machine learning and how does it work?

The K-Nearest Neighbors (KNN) algorithm is a supervised learning method used for classification and regression tasks. It works by identifying the K closest data points to a new input and predicting the result based on those neighbors. Instead of training a model, KNN stores the dataset and makes predictions during runtime using distance calculations.

2. How do I choose the best value of K in KNN?

Choosing the right value of K depends on the dataset and problem. A small K might overfit the data, while a large K can underfit and miss key patterns. Cross-validation is typically used to test different K values and pick the one that performs best.

3. What are the main advantages and disadvantages of using KNN?

KNN is easy to understand, requires no training, and works for both classification and regression. However, it’s computationally expensive for large datasets and struggles with irrelevant or unscaled features. Its performance also drops in high-dimensional spaces due to the curse of dimensionality.

4. When should I not use the KNN algorithm?

KNN isn’t ideal for large datasets because it has slow prediction times and high memory usage. It also performs poorly on high-dimensional data where distances become less meaningful. If your features are noisy or unnormalized, KNN may give unreliable results.

5. Is KNN a supervised or unsupervised learning algorithm?

KNN is a supervised learning algorithm because it relies on labeled data to make predictions. Although it doesn’t involve traditional model training, the stored training examples must come with known outcomes (labels or target values). It is sometimes confused with K-means clustering, an unsupervised method that also uses a parameter named K and relies on distances.
