The Ethics And Responsibility Of Being An AI-Augmented Developer

Last Updated: Jan 06, 2026

The responsibility of an AI-augmented developer is becoming increasingly important as AI transforms software development. Developers now rely on AI for coding, debugging, testing, and automating workflows, making ethical awareness and accountability critical in every decision.

This blog covers the essential responsibilities, ethical considerations, and best practices for AI-augmented developers. It is designed for software developers, tech leads, AI enthusiasts, and anyone looking to understand how to use AI responsibly in development projects.

Quick Answer

AI-augmented developers should focus on responsible data usage, fairness, transparency, human oversight, and ethical decision-making. They must balance AI assistance with critical thinking, ensure user safety, and maintain accountability in all development processes.

Table of contents

- Understanding The Role Of An AI-Augmented Developer

- Ethical Responsibilities In AI-Augmented Development

- Responsible Use Of AI Tools In Software Development

  - Code Validation

  - User Data Protection

  - Avoiding Over-Reliance on AI

- Impact On Teams, Users, And The Industry

- 💡 Did You Know?

- Conclusion

- FAQs

  - Why is ethics important for AI-augmented developers?

  - Can AI replace developers completely?

  - What is the biggest ethical risk in AI-augmented development?

  - How can developers stay ethically responsible?

  - Who is responsible for AI-generated code?

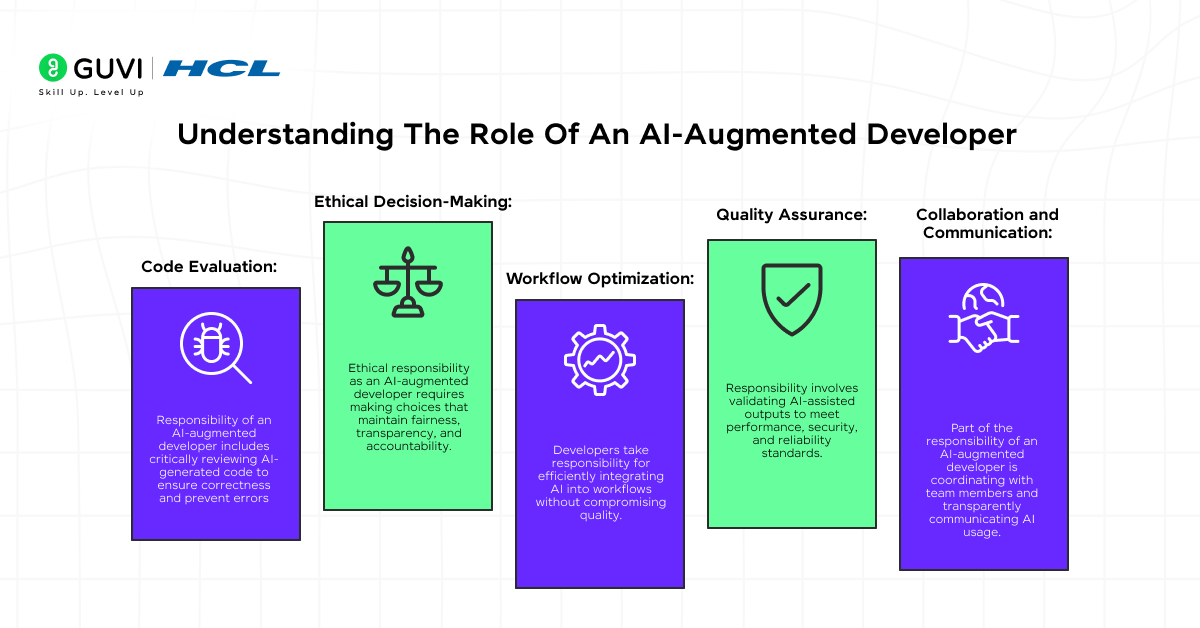

Understanding The Role Of An AI-Augmented Developer

The responsibility of an AI-augmented developer extends beyond using AI tools to write or optimize code. These developers must balance AI assistance with human judgment, ensuring outputs are accurate, ethical, and aligned with project goals. They play a crucial role in integrating AI into workflows while maintaining accountability and safeguarding the quality and trustworthiness of software systems.

Key Roles:

- Code Evaluation: Critically review AI-generated code to confirm it is correct and to catch errors early.

- Ethical Decision-Making: Make choices that preserve fairness, transparency, and accountability.

- Workflow Optimization: Integrate AI into workflows efficiently without compromising quality.

- Quality Assurance: Validate AI-assisted outputs against performance, security, and reliability standards.

- Collaboration and Communication: Coordinate with team members and communicate AI usage transparently.

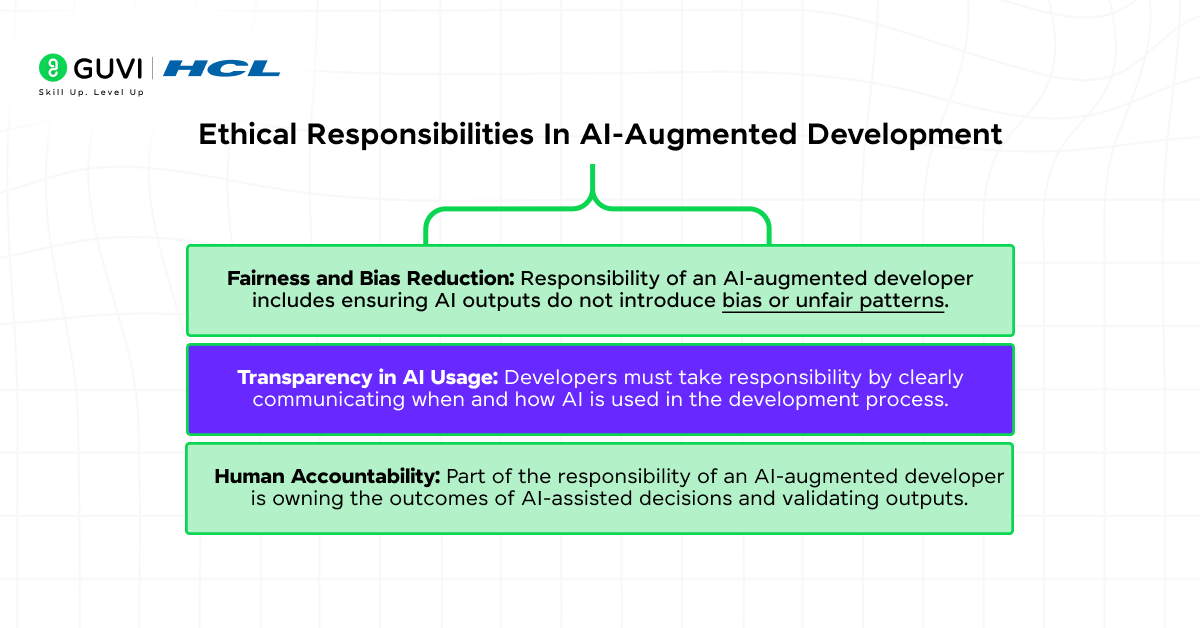

Ethical Responsibilities In AI-Augmented Development

Being an AI-augmented developer requires more than using AI tools; it calls for ethical awareness in every development decision. Developers must ensure their AI-assisted work is fair, transparent, and accountable to maintain trust, compliance, and quality in software projects.

Key Responsibilities:

- Fairness and Bias Reduction: Check that AI outputs do not introduce bias or unfair patterns.

- Transparency in AI Usage: Communicate clearly when and how AI is used in the development process.

- Human Accountability: Own the outcomes of AI-assisted decisions and validate outputs before relying on them.

Responsible Use Of AI Tools In Software Development

Using AI tools responsibly takes careful management to ensure accuracy, security, and ethical compliance. This section focuses on three areas: code validation, user data protection, and avoiding over-reliance on AI. Together they uphold quality, trust, and ethical standards in AI-assisted development.

1. Code Validation

AI-generated code should be reviewed thoroughly for correctness, security, and reliability before deployment. Developers must critically evaluate outputs, identify errors, and maintain quality standards to prevent failures or vulnerabilities.

- Error Checking: Review AI-generated code for logical mistakes or bugs.

- Security Testing: Test outputs to ensure no vulnerabilities or unsafe patterns are present.

- Quality Assurance: Confirm AI-assisted code meets performance, reliability, and project standards.
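One small piece of the security check above can be automated. The sketch below is illustrative only: it uses Python's standard `ast` module to flag direct calls to a few risky builtins in a snippet before a human reviews it. The deny-list `UNSAFE_CALLS`, the function name `find_unsafe_calls`, and the sample snippet are assumptions made for this example, not part of any particular tool, and a scan like this complements manual review and testing rather than replacing them.

```python
import ast

# Hypothetical deny-list for illustration; a real project would tune this.
UNSAFE_CALLS = {"eval", "exec", "compile", "__import__"}


def find_unsafe_calls(source: str) -> list[tuple[int, str]]:
    """Return (line number, name) for each direct call to a deny-listed builtin."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        # Only plain-name calls such as eval(...) are detected here;
        # aliased or attribute-based calls would need extra handling.
        if isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
            if node.func.id in UNSAFE_CALLS:
                findings.append((node.lineno, node.func.id))
    return sorted(findings)


# Stand-in for a snippet suggested by an AI assistant.
ai_generated = '''
def load_settings(raw):
    return eval(raw)
'''

for lineno, name in find_unsafe_calls(ai_generated):
    print(f"line {lineno}: call to {name}() needs human review")
```

A check like this could run in a pre-commit hook or CI job so that flagged snippets always reach a human reviewer.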

2. User Data Protection

Part of the responsibility of an AI-augmented developer is handling sensitive data ethically while complying with privacy regulations. Proper management of user information ensures trust and reduces legal and ethical risks.

- Data Anonymization: Remove personal identifiers wherever possible.

- Minimal Data Collection: Avoid collecting unnecessary sensitive information.

- Regulatory Compliance: Follow GDPR, CCPA, and other relevant privacy standards.
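The first two practices above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the helper names (`redact_emails`, `pseudonymize`, `minimize`), the simplified email pattern, and the sample record are all hypothetical. Note that a salted hash is pseudonymization rather than full anonymization, so data treated this way may still count as personal data under regulations such as GDPR.

```python
import hashlib
import re

# Simplified email pattern for illustration; it will not match every valid address.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact_emails(text: str) -> str:
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL_RE.sub("[EMAIL]", text)


def pseudonymize(user_id: str, salt: str) -> str:
    """Map a user ID to a stable, shortened salted SHA-256 token."""
    digest = hashlib.sha256((salt + user_id).encode("utf-8")).hexdigest()
    return digest[:12]


def minimize(record: dict, allowed_fields: set[str]) -> dict:
    """Keep only the fields that the task actually needs."""
    return {key: value for key, value in record.items() if key in allowed_fields}


# Hypothetical record prepared before sharing it with an AI tool.
record = {"id": "u1", "email": "jane.doe@example.com", "plan": "pro"}
safe = minimize(record, {"id", "plan"})
safe["id"] = pseudonymize(safe["id"], salt="example-salt")
print(safe)
print(redact_emails("Contact jane.doe@example.com for access"))
```

Applying steps like these before a prompt or log leaves the application keeps personal identifiers out of third-party AI services by default.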

3. Avoiding Over-Reliance on AI

AI should serve as a support tool rather than a replacement for human judgment. Monitoring outputs and correcting errors is essential to maintain ethical and high-quality software development.

- Output Monitoring: Continuously check AI-generated results for accuracy and relevance.

- Bias Correction: Identify and correct any biased outputs produced by AI tools.

- Human Oversight: Ensure human review and decision-making remain central to the development process.
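Human oversight can also be enforced in tooling rather than left to habit. The following sketch assumes a hypothetical `Change` record and a `merge_blockers` policy function; neither comes from a real platform. It shows one possible rule: an AI-assisted change cannot merge until tests pass and at least one human has approved it.

```python
from dataclasses import dataclass, field


@dataclass
class Change:
    """Hypothetical description of a proposed code change."""
    title: str
    ai_assisted: bool
    tests_passed: bool
    approvals: list[str] = field(default_factory=list)  # names of human reviewers


def merge_blockers(change: Change) -> list[str]:
    """Return the reasons a change may not merge; an empty list means it may."""
    blockers = []
    if not change.tests_passed:
        blockers.append("tests have not passed")
    if change.ai_assisted and not change.approvals:
        blockers.append("AI-assisted change has no human approval")
    return blockers


draft = Change(title="Refactor login flow", ai_assisted=True, tests_passed=True)
print(merge_blockers(draft))

draft.approvals.append("reviewer-1")
print(merge_blockers(draft))
```

Recording the `ai_assisted` flag explicitly also supports transparency, since the team can see which changes involved AI and who signed off on them.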

Impact On Teams, Users, And The Industry

The responsibility of an AI-augmented developer extends beyond individual tasks to teams, end users, and the broader industry. Developers must ensure AI-assisted workflows enhance collaboration, maintain trust, and uphold ethical standards. Clear communication, accountability, and a supportive culture are essential to maximize AI benefits while minimizing risks.

- Team Collaboration: Encourage clear communication and open discussion of AI-assisted outputs to prevent misunderstandings.

- Cross-Functional Coordination: Work effectively with designers, product managers, and QA teams to integrate AI responsibly.

- User Trust: Maintain transparency about AI involvement and deliver fair, unbiased, and reliable results to build confidence.

- Transparency in Decision-Making: Clearly explain AI-assisted decisions to both team members and end users.

- Ethical Work Culture: Promote responsible AI practices and ethical awareness among colleagues.

- Balanced Automation: Ensure AI tools enhance productivity without replacing critical human judgment.

- Continuous Learning: Stay updated on AI ethics, industry standards, and new tools to maintain accountability.

- Accountability for Outcomes: Take ownership of AI-assisted outputs, ensuring they meet safety, quality, and ethical standards.

- Long-Term Industry Impact: Contribute to responsible AI adoption that benefits the organization, users, and the broader software development community.


💡 Did You Know?

- AI-assisted development can sometimes generate unexpected bugs, making the responsibility of an AI-augmented developer crucial for safe and reliable software.

- Teams that actively review and monitor AI outputs are better placed to catch errors before they reach production and to build trust in their results.

- Ethical decision-making by AI-augmented developers directly impacts user confidence and the long-term success of AI-driven products.

Conclusion

The responsibility of an AI-augmented developer goes beyond technical skills and requires ethical awareness, accountability, and careful oversight of AI-assisted workflows. By combining AI assistance with critical thinking, developers can ensure outputs are accurate, fair, and aligned with user needs and business goals.

To take this knowledge to the next level, developers should continuously learn about emerging AI tools, industry standards, and ethical guidelines. Applying best practices in real projects, collaborating with peers, and fostering responsible AI adoption strengthen expertise and ensure high-quality, trustworthy AI solutions.

FAQs

1. Why is ethics important for AI-augmented developers?

Ethics ensures AI-assisted systems operate fairly, transparently, and safely, protecting users and organizations.

2. Can AI replace developers completely?

No. AI enhances productivity, but human judgment, creativity, and accountability remain essential.

3. What is the biggest ethical risk in AI-augmented development?

Bias in data and AI outputs is one of the most significant risks.

4. How can developers stay ethically responsible?

By continuously learning regulations, reviewing AI outputs critically, and following best practices for fairness, transparency, and accountability.

5. Who is responsible for AI-generated code?

The developer reviewing and deploying the code holds full responsibility, even if AI assisted in its creation.
