Greedy Algorithms Explained: From Basics to Mastery

Nov 13, 2025 6 Min Read 1883 Views

(Last Updated)

When you first start learning algorithms, greedy strategies can feel almost too simple to take seriously. They make decisions step by step, always choosing what looks best at the moment without thinking too far ahead.

At first glance, that might sound like a bad idea. After all, shouldn’t smart algorithms plan, consider alternatives, and optimize carefully? Surprisingly, in many well-structured problems, doing the “obvious” best move at each step actually leads straight to the optimal answer. That’s the beauty of greedy algorithms: they are simple to design, fast to run, and incredibly powerful when used in the right situations.

If you are studying Data Structures and Algorithms or preparing for coding interviews, greedy algorithms will show up everywhere. That is why, in this article, we’ll take a deep dive into how greedy algorithms think, where they shine, where they break, and how you can develop the intuition to use them with confidence.

Table of contents

- What is a Greedy Algorithm?

- Key Characteristics of Greedy Algorithms

- Greedy Choice Property

- Optimal Substructure

- How Greedy Algorithms Work (General Structure)

- Popular Examples Where Greedy Works Perfectly

- Activity Selection Problem (Interval Scheduling)

- Fractional Knapsack Problem

- Huffman Coding

- Minimum Spanning Tree (Kruskal’s and Prim’s Algorithms)

- When Greedy Fails (And Why)

- 0/1 Knapsack Problem

- Traveling Salesman Problem (TSP)

- Making Change (Non-Standard Coins)

- Why Does Greedy Work in Some Problems but Not Others?

- How to Design a Greedy Algorithm (The Thought Process)

- How Greedy Differs from Dynamic Programming and Backtracking

- Conclusion

- FAQs

- What is a greedy algorithm in simple terms?

- When does a greedy algorithm give the optimal solution?

- What is the difference between greedy and dynamic programming?

- What are some real-life applications of greedy algorithms?

- Why does the greedy algorithm fail sometimes?

What is a Greedy Algorithm?

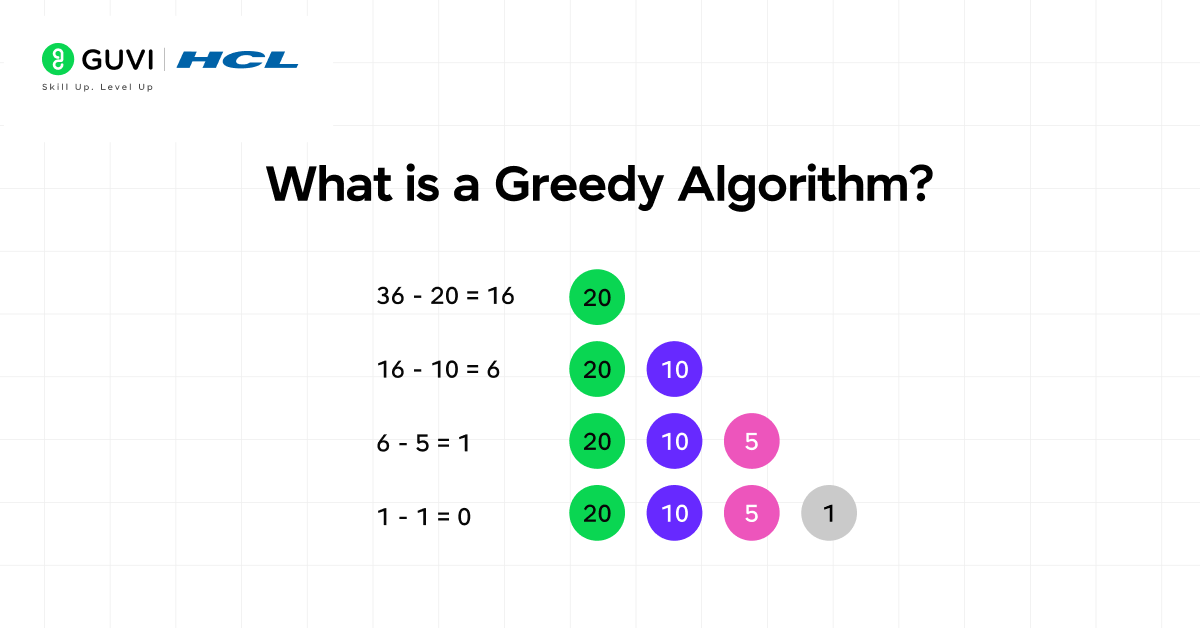

A greedy algorithm is a prominent technique in data structures and algorithms. It is a method that builds a solution piece by piece, always choosing the option that offers the most immediate benefit. It doesn’t undo previous decisions or reconsider past choices. It commits early and moves forward.

This might sound risky, but many problems are structured in such a way that making the best local decision does, in fact, lead to the global best solution. The key is understanding the problem’s properties, not just the logic of the code.

A simple way to think about it: imagine you’re picking activities to attend during the day. Each activity has a start and end time. You want to attend as many as possible. If you always pick the activity that ends first, you give yourself the most time to attend more events later. This is exactly how the classic “Activity Selection Problem” works, and greedy nails it.

So, greed is not guesswork; it’s logic applied at each step, based on a specific measure of what “best” means for that problem.

Key Characteristics of Greedy Algorithms

To really understand greedy algorithms, let’s break down the mental model behind them. There are two fundamental properties that allow a greedy strategy to succeed, and without them, a greedy algorithm will most likely fail. These are not just theoretical terms; you will actually use them to evaluate whether a greedy approach is safe for a given problem.

1. Greedy Choice Property

This property means that making the best local choice at one step does not prevent you from reaching the global optimum. In other words, the locally optimal choice is part of some globally optimal solution.

If choosing something early blocks you from achieving the best final result, the greedy approach is flawed. This is why greedy algorithms need careful problem analysis; sometimes, the most obvious local choice actually leads to a dead end.

2. Optimal Substructure

This property means the optimal solution to the whole problem contains within it optimal solutions to its smaller subproblems. You might recognize this concept from dynamic programming.

Both greedy and DP rely on optimal substructure, but the difference is that the greedy algorithm makes final decisions as it goes, while DP explores more possibilities and stores results. If a problem has optimal substructure, you can still examine whether greedy is the faster, simpler alternative.

When both greedy choice property and optimal substructure hold, you have a strong chance that a greedy solution will work. But don’t worry, later on, we’ll talk about how to prove it or at least build a strong intuition through examples.

If you are just starting in programming and wondering why you should learn DSA, here is a quick read that might help you understand its importance in programming – Importance of DSA: Why is it a “Must-Learn” for Developers?

How Greedy Algorithms Work (General Structure)

Although each greedy algorithm looks different depending on the problem, most follow a similar pattern. Understanding this structure makes it easier to recognize greedy problems and design workable solutions:

- Define what “best” means at each step (e.g., smallest weight, most value, earliest finish).

- Sort or organize the input based on that criterion.

- Start from an empty solution.

- Loop through choices in order, and at each step, check if adding the choice keeps the solution valid.

- If it does, include it. If it doesn’t, skip it.

- Continue until no more choices are left.

- The final solution is returned without revisiting earlier steps.

Notice how simple and elegant this is. Unlike dynamic programming or backtracking, you don’t explore multiple paths or undo decisions. Efficiency is one of the greedy algorithm’s biggest strengths.

If you want to read more about how DSA paves the way for effective coding and its use cases, consider reading HCL GUVI’s Free Ebook: The Complete Data Structures and Algorithms Handbook, which covers the key concepts of Data Structures and Algorithms, including essential concepts, problem-solving techniques, and real MNC questions

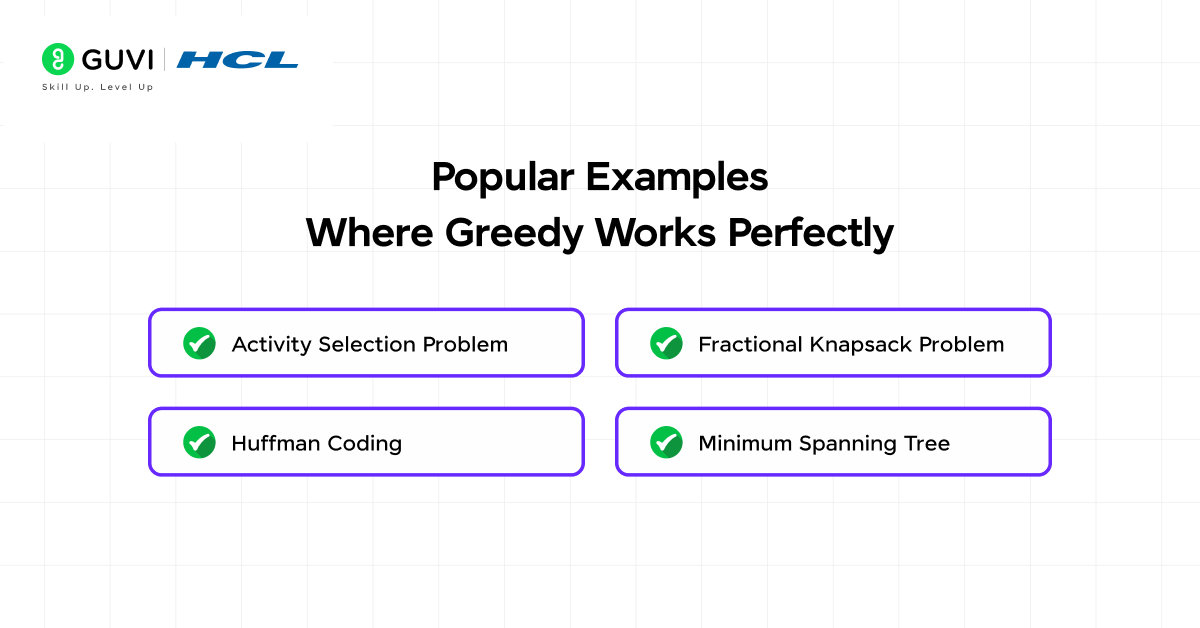

Popular Examples Where Greedy Works Perfectly

To build the intuition that we talked about earlier, let’s look at classic problems where the greedy algorithm is not only useful but also gives the optimal solution every time.

1. Activity Selection Problem (Interval Scheduling)

Goal: Pick the maximum number of non-overlapping activities.

Greedy Strategy: Always pick the activity that finishes earliest.

Why it works: Choosing the earliest finishing activity leaves the most room for future activities. Any other choice risks blocking more slots. This problem perfectly demonstrates the greedy choice property and is often used as the introductory example to greedy thinking.

2. Fractional Knapsack Problem

Goal: Fill a knapsack with items of given weight and value to maximize total value. You can take fractions of items.

Greedy Strategy: Take items in descending order of value-to-weight ratio.

Why it works: Because fractions are allowed, you can always fill the knapsack with the highest-value remaining portion. This flexibility makes the greedy strategy optimal. However, you should notice something critical: once you remove the ability to take fractions, greedy breaks. That is exactly what happens in 0/1 Knapsack, which we’ll cover later.

3. Huffman Coding

Goal: Compress data using variable-length binary codes with no ambiguity.

Greedy Strategy: Repeatedly combine the two least frequent symbols to build the tree.

Why it works: This ensures that the most frequent symbols have the shortest codes. The greedy process builds an optimal prefix tree without needing to backtrack or try different combinations.

4. Minimum Spanning Tree (Kruskal’s and Prim’s Algorithms)

Both Kruskal’s and Prim’s algorithms build a minimum spanning tree by repeatedly selecting the smallest edge that maintains a valid structure.

- Kruskal’s algorithm sorts all edges by weight and picks the smallest ones that don’t form a cycle.

- Prim’s grows the tree from a starting node, always adding the smallest edge that connects the tree to a new node.

These are greedy at heart, but they work because of deep structural properties of spanning trees that ensure local optimal edges build a globally optimal tree.

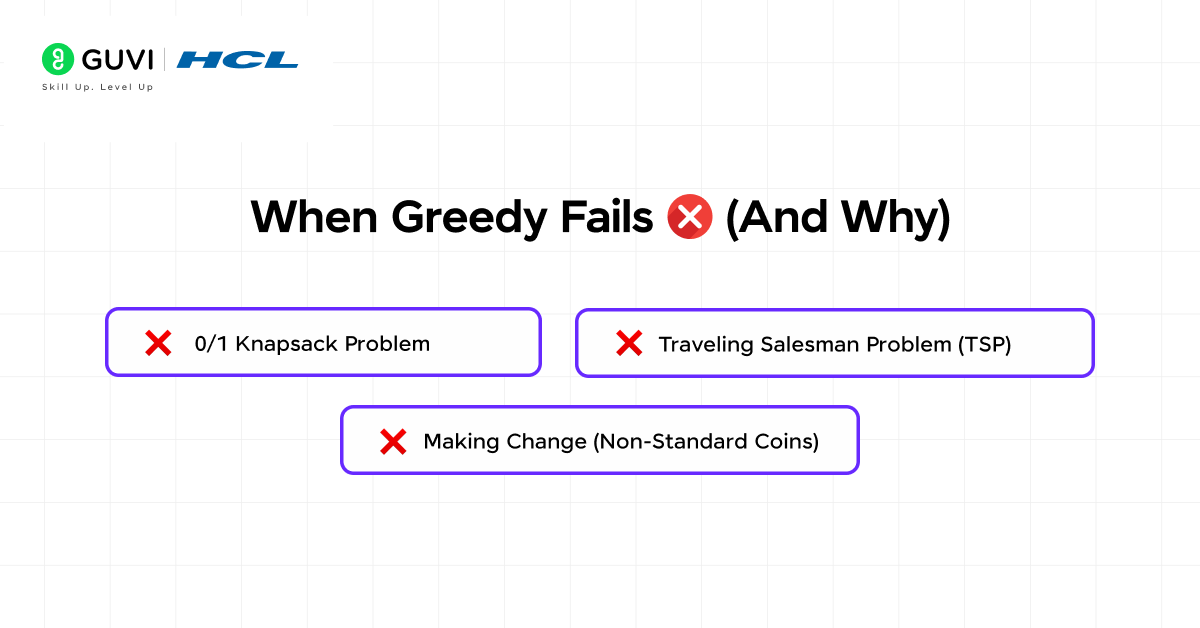

When Greedy Fails (And Why)

You can’t just throw greedy at any optimization problem and assume it will work. In fact, some famous problems are known specifically because greedy fails on them.

1. 0/1 Knapsack Problem

In this version, you cannot take fractions of items. It’s all or nothing. Greedy by value-to-weight ratio might pick a high ratio item that uses up most of the capacity, but blocks a better combination of smaller items. Dynamic programming is needed here because greedysearch cannot reconsider.

2. Traveling Salesman Problem (TSP)

The intuitive greedy strategy is to always go to the nearest unvisited city. Unfortunately, this often leads to getting stuck far from the remaining cities, forcing long, inefficient trips at the end. TSP requires careful global planning or advanced heuristics.

3. Making Change (Non-Standard Coins)

With standard coins like 1, 5, 10, 25, greedy works. But if the coin denominations are unusual, greedy might fail to find the minimum number of coins. This is a great example of why greedy depends on problem structure, not just logic.

Why Does Greedy Work in Some Problems but Not Others?

This is the million-dollar question. The key difference is whether the problem allows each greedy choice to be “safe.” A safe choice is one that leads to some optimal solution, not necessarily all optimal solutions, but at least one. When such safe moves exist and can be proven, greedy algorithms work. When they don’t, greedy can get trapped.

In problems like MST or Activity Selection, you can prove that certain local decisions don’t ruin global optimality. In problems like TSP or 0/1 Knapsack, a local decision can completely block the optimal path. So the real challenge is not coding greedy, but recognizing whether the problem supports safe local decisions.

If you want a platform that actually teaches DSA in a structured, beginner-friendly way while also giving you practical coding experience, consider enrolling in HCL GUVI’s DSA for Programmers Course that is designed specifically for learners who want clarity instead of confusion. It explains concepts in simple terms and guides you from the basics to advanced topics step-by-step.

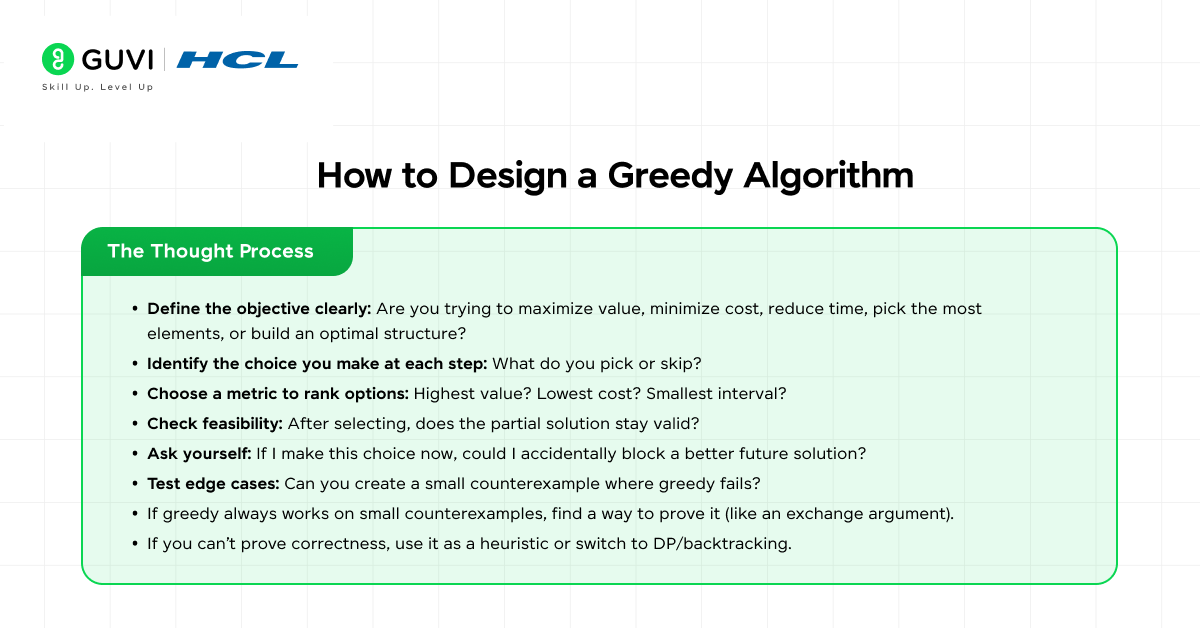

How to Design a Greedy Algorithm (The Thought Process)

If you’re trying to solve a problem and suspect greedy might work, here is how you should think about it:

- Define the objective clearly: Are you trying to maximize value, minimize cost, reduce time, pick the most elements, or build an optimal structure?

- Identify the choice you make at each step: What do you pick or skip?

- Choose a metric to rank options: Highest value? Lowest cost? Smallest interval?

- Check feasibility: After selecting, does the partial solution stay valid?

- Ask yourself: If I make this choice now, could I accidentally block a better future solution?

- Test edge cases: Can you create a small counterexample where greedy fails?

- If greedy always works on small counterexamples, find a way to prove it (like an exchange argument).

- If you can’t prove correctness, use it as a heuristic or switch to DP/backtracking.

This mental framework helps you move from guesswork to confident reasoning.

How Greedy Differs from Dynamic Programming and Backtracking

At first glance, greedy algorithms might look similar to dynamic programming or backtracking because all three are used to solve optimization problems. However, they think very differently.

- Dynamic programming explores many combinations but avoids repeated work by storing the results of subproblems. It guarantees correctness but can be slower.

- Backtracking tries multiple paths and abandons dead ends, allowing it to explore complex solutions but at a potentially high computational cost.

- Greedy, on the other hand, makes a single immediate decision and never looks back. It wins on speed but risks incorrectness unless the problem structure supports its logic.

Knowing which technique to use is a major part of algorithm design. If you can prove greedy works, always choose it for speed and clarity. If you have doubts, dynamic programming or backtracking may be safer choices.

Greedy algorithms appear in more places than you might expect, even beyond classical textbook problems. Dijkstra’s algorithm for shortest paths is actually a greedy algorithm in disguise; it always selects the nearest unvisited node and finalizes its distance. Huffman coding, a widely used compression technique, is entirely greedy and produces an optimal encoding tree.

If you’re serious about mastering DSA in software development and want to apply it in real-world scenarios, don’t miss the chance to enroll in HCL GUVI’s IITM Pravartak and MongoDB Certified Online AI Software Development Course. Endorsed with NSDC certification, this course adds a globally recognized credential to your resume, a powerful edge that sets you apart in the competitive job market.

Conclusion

In conclusion, greedy algorithms look deceptively simple, but mastering them requires deeper insight. The true skill lies in understanding when greedy thinking matches the structure of the problem. When it does, you get elegant, fast, optimal solutions. When it doesn’t, you risk missing the real answer entirely.

Keep practicing by asking “What’s the best move right now?” and then challenge yourself by asking “Could this ruin the future?” That balance of intuition and logic is the key to becoming confident with greedy algorithms. Once you understand when and why greedy works, you won’t just be memorizing solutions, you’ll be designing them with purpose.

FAQs

1. What is a greedy algorithm in simple terms?

A greedy algorithm builds a solution step by step by always choosing the most beneficial option at that moment. It never revisits earlier choices and relies on the idea that local best decisions lead to a global best result, which only works in certain problem types.

2. When does a greedy algorithm give the optimal solution?

Greedy works when the problem has the greedy choice property and optimal substructure, meaning each local choice safely contributes to the best overall solution. Classic examples include Activity Selection, Huffman Coding, and Minimum Spanning Tree.

3. What is the difference between greedy and dynamic programming?

Greedy makes one decision at a time and never looks back, while dynamic programming explores multiple possibilities and stores subproblem results. Greedy is faster but not always correct; DP is safer but usually more complex.

4. What are some real-life applications of greedy algorithms?

Greedy algorithms are used in scheduling, shortest path routing, data compression (Huffman coding), network design (MST), and resource allocation. They’re popular because they are efficient and often produce optimal or near-optimal results.

5. Why does the greedy algorithm fail sometimes?

It fails when a locally optimal choice blocks access to a better overall solution later. Problems like 0/1 Knapsack, TSP, or certain coin systems show that greedy can be shortsighted without proper problem structure.

Did you enjoy this article?