The Types of Clustering in Machine Learning Explained

Sep 09, 2025 (Last Updated)

Clustering is a powerful form of unsupervised machine learning. In supervised learning, you build models from labeled data; in clustering, you group unlabeled data into meaningful clusters of related data points based on their similarity.

Clustering is widely used in customer segmentation, image recognition, fraud detection, document categorization, and recommendation systems. Because it applies to so many domains, it is considered one of the core concepts in data science.

In this blog, we will discuss the different types of clustering in machine learning, look at popular algorithms such as K-Means, hierarchical clustering, DBSCAN, and fuzzy clustering, and compare their advantages, disadvantages, and use cases.

Table of contents

- What is Clustering in Machine Learning?

- Understanding Why Clustering is Important in Machine Learning

- Types of Clustering in Machine Learning

- Hard Clustering:

- Soft Clustering:

- Machine Learning Clustering Methods

- Partitioning Clustering Methods

- Hierarchical Clustering

- Density-Based Clustering in Machine Learning

- Fuzzy Clustering in Machine Learning

- Model-Based Clustering in Machine Learning

- Advantages and Disadvantages of Clustering in Machine Learning

- Applications of Clustering in Machine Learning

- Final thoughts

- Which clustering algorithm is most commonly used?

- What is the difference between hierarchical and partitioning clustering?

- Why use DBSCAN over K-means?

- What are real-world applications of clustering?

What is Clustering in Machine Learning?

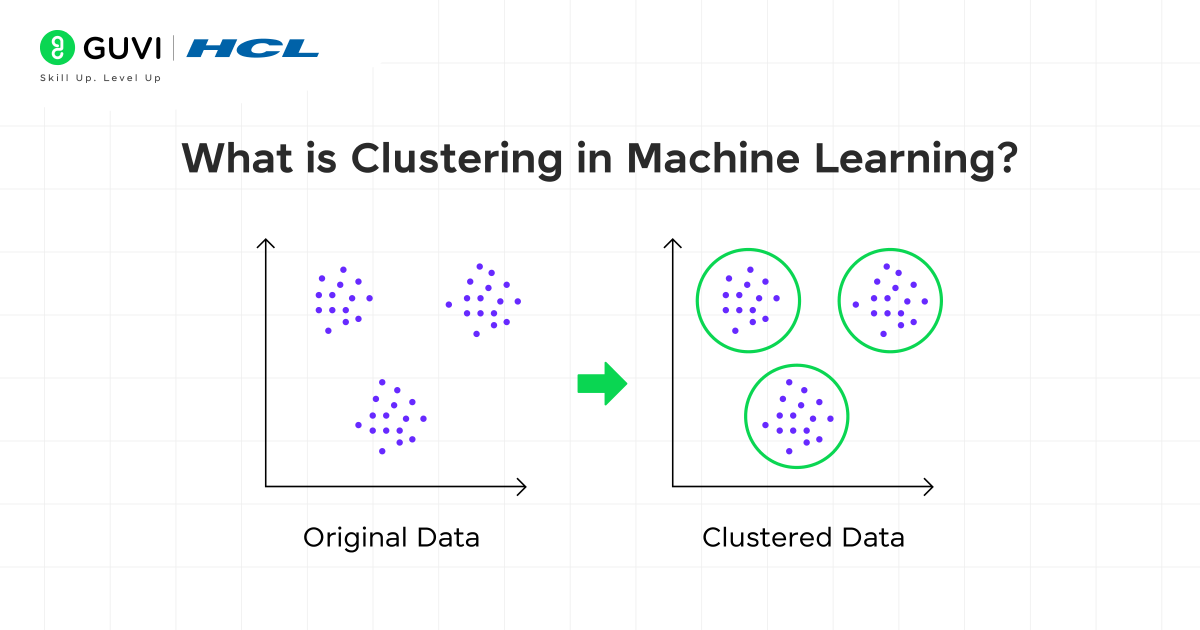

In machine learning, clustering is a way to group data points so that points in the same group (or cluster) are more similar to each other than to points in other groups. Clustering is an unsupervised learning method because it does not use predefined labels or categories.

Instead, the algorithm analyzes the data, identifies similarities and differences, and groups the points into clusters, as shown in the image above. Clustering methods are therefore useful for finding hidden patterns, structures, or relationships in large datasets.

Understanding Why Clustering is Important in Machine Learning

Before we look into clustering techniques, let's understand why clustering is important in machine learning.

- Unsupervised learning: It allows the discovery of hidden patterns when no labeled data is available.

- Data organization: It organizes data into a more manageable and interpretable structure for analysis and visualization.

- Business insights: Clustering can help companies with marketing campaigns, understanding customer behavior, and product analysis.

- Foundation for advanced ML tasks: Clustering is often a first step, or a preprocessing stage, for classification, recommendation, and anomaly detection.

Types of Clustering in Machine Learning

Broadly, there are two types of clustering, depending on how data points are assigned to clusters:

Hard Clustering:

In hard clustering, each data point belongs to exactly one cluster. The clusters are bounded and mutually exclusive: once the algorithm assigns a point to a cluster based on similarity or distance, that point cannot belong to any other cluster.

For example:

Each data point (P1, P2, P3, etc.) belongs strictly to one cluster only. As shown below, P1 and P2 are grouped into Cluster 1, while P3 and P4 go into Cluster 2, and P5 into Cluster 3.

| Data Point | Cluster Assigned |
|---|---|
| P1 | Cluster 1 (C1) |
| P2 | Cluster 1 (C1) |
| P3 | Cluster 2 (C2) |
| P4 | Cluster 2 (C2) |
| P5 | Cluster 3 (C3) |

Soft Clustering:

In soft clustering, data points are not allocated to a single cluster. Instead, each point is assigned a degree of membership in multiple clusters, often expressed as probabilities or weights. A data point can therefore partially belong to two or more clusters, with higher membership in some clusters than in others.

For example:

Here, each data point has a probability of belonging to clusters instead of being fixed to just one.

| Data Point | Probability of Belonging to C1 | Probability of Belonging to C2 | Assigned Cluster |
|---|---|---|---|
| P1 | 0.80 | 0.20 | C1 |
| P2 | 0.60 | 0.40 | C1 (but with overlap) |
| P3 | 0.30 | 0.70 | C2 |
| P4 | 0.50 | 0.50 | Overlap (C1 & C2) |
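The link between the two tables can be sketched in a few lines of Python: a hard assignment is obtained from soft memberships by picking the cluster with the highest value. The memberships are copied from the table above; reporting a tie as an overlap is an illustrative convention chosen to match the table, not a standard rule.

```python
# Soft memberships from the table above: point -> (P(C1), P(C2))
soft = {
    "P1": (0.80, 0.20),
    "P2": (0.60, 0.40),
    "P3": (0.30, 0.70),
    "P4": (0.50, 0.50),
}

def harden(memberships, labels=("C1", "C2")):
    """Turn one point's soft memberships into a single hard label.

    Ties between the top memberships are reported as an overlap
    (an illustrative choice, not a standard rule).
    """
    best = max(memberships)
    winners = [lab for lab, m in zip(labels, memberships) if m == best]
    return winners[0] if len(winners) == 1 else " & ".join(winners)

hard = {point: harden(m) for point, m in soft.items()}
print(hard)  # {'P1': 'C1', 'P2': 'C1', 'P3': 'C2', 'P4': 'C1 & C2'}
```

Note that hardening throws information away: P1 (0.80) and P2 (0.60) both become plain "C1".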

Machine Learning Clustering Methods

There are many clustering methods in machine learning, each based on different assumptions about what makes a group of points a cluster. Let’s break them down in detail:

1. Partitioning Clustering Methods

Partitioning clustering is one of the simplest and most popular clustering methods in machine learning. The dataset is divided into a predetermined number of clusters based on the attributes of, and similarity between, data points. Each data point belongs to exactly one cluster, so that points in the same cluster are more similar to each other than to points in different clusters.

The number of clusters must be chosen beforehand. Partitioning algorithms are usually iterative: they first place points into clusters and then repeatedly reassign points to different clusters whenever doing so improves the quality of the grouping. The quality criterion usually involves a distance measure, such as Euclidean distance or Manhattan distance, which determines how close a data point is to the center of a cluster. The most popular algorithms in this category are:

1.1 K-Means Clustering:

K-Means is one of the most common clustering techniques in machine learning. It is a partitioning approach that divides data into K clusters, where K is chosen before the algorithm runs. Each cluster is represented by a centroid, the average position of all the points in that cluster. The algorithm alternates between assigning each point to its nearest centroid and recomputing each centroid as the mean of its assigned points, until the assignments stop changing.
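Formally, K-Means looks for the assignment of points to clusters $C_1, \dots, C_K$ that minimizes the within-cluster sum of squared distances between each point and its cluster's centroid $\mu_k$:

$$
J = \sum_{k=1}^{K} \sum_{x_i \in C_k} \lVert x_i - \mu_k \rVert^2,
\qquad
\mu_k = \frac{1}{|C_k|} \sum_{x_i \in C_k} x_i
$$

Each assignment step and each centroid-update step can only keep $J$ the same or lower it, so the algorithm always converges, though possibly to a local minimum that depends on the starting centroids.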

Example:

Suppose each data point holds a student's marks in Math and Science. With K = 2, K-Means might split the students into two clusters: those who scored high in both subjects and those with lower scores. Each cluster is represented by the average marks of the students in it.
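Here is a minimal pure-Python sketch of K-Means (Lloyd's algorithm): it alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its points. The six students' marks are made up for illustration, and starting from randomly chosen data points is one common initialization, not the only one.

```python
import random

def nearest(p, centroids):
    """Index of the centroid with the smallest squared Euclidean distance to p."""
    dists = [sum((a - b) ** 2 for a, b in zip(p, c)) for c in centroids]
    return dists.index(min(dists))

def kmeans(points, k, max_iter=100, seed=0):
    """Plain K-Means (Lloyd's algorithm) on a list of equal-length tuples."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct data points
    for _ in range(max_iter):
        # Assignment step: every point joins the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p, centroids)].append(p)
        # Update step: move each centroid to the mean of its points
        # (an empty cluster keeps its previous centroid).
        new_centroids = [
            tuple(sum(dim) / len(cl) for dim in zip(*cl)) if cl else centroids[i]
            for i, cl in enumerate(clusters)
        ]
        if new_centroids == centroids:  # nothing moved, so we have converged
            break
        centroids = new_centroids
    labels = [nearest(p, centroids) for p in points]
    return centroids, labels

# Hypothetical (Math, Science) marks for six students.
students = [(92, 88), (85, 91), (78, 84), (45, 50), (38, 42), (52, 47)]
centroids, labels = kmeans(students, k=2)
print(labels)     # first three students share one label, last three the other
print(centroids)  # roughly (85.0, 87.7) and (45.0, 46.3), in some order
```

Which cluster gets label 0 depends on the random start, but for well-separated groups like these the final split is the same.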

1.2 K-medoids Clustering:

K-Medoids Clustering is a partitioning clustering method similar to K-Means, but instead of using the mean (centroid) of the data points, it uses an actual data point (called a medoid) to represent the center of each cluster. A medoid is the data point that is most centrally located within a cluster and has the lowest average dissimilarity to all other points in that cluster.

Example:

Using the same student marks data, K-Medoids also creates two clusters, but instead of using the average marks as each cluster's center, it uses one actual student’s score (the most representative one) as the center, as shown below.

| Student | Cluster | Cluster medoid |
|---|---|---|
| S1 (90 marks) | C1 | S3 (85 marks) |
| S2 (30 marks) | C2 | S4 (40 marks) |
| S3 (85 marks) | C1 | S3 (85 marks) |
| S4 (40 marks) | C2 | S4 (40 marks) |
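A minimal sketch of K-Medoids in Python, using the four marks from the table above and absolute difference as the dissimilarity. It uses a simple alternating scheme (assign to the closest medoid, then pick the most central member of each cluster) rather than the full PAM swap search; starting from the first k values is an illustrative assumption. With only two students per cluster, both members are equally central, so either is a valid medoid: the table picks S3 and S4, while this sketch's tie-break picks S1 and S2. The cluster memberships are the same.

```python
def kmedoids(values, k, max_iter=100):
    """K-Medoids on 1-D values with absolute difference as the dissimilarity.

    Alternates between assigning values to their closest medoid and choosing,
    within each cluster, the member with the smallest total dissimilarity.
    """
    medoids = list(values[:k])  # naive start: the first k values
    clusters = []
    for _ in range(max_iter):
        # Assignment step: each value joins the cluster of its closest medoid.
        clusters = [[] for _ in range(k)]
        for v in values:
            closest = min(range(k), key=lambda j: abs(v - medoids[j]))
            clusters[closest].append(v)
        # Update step: the new medoid is the member with the smallest total
        # distance to all other members (ties go to the first such member;
        # an empty cluster keeps its old medoid).
        new_medoids = [
            min(members, key=lambda cand: sum(abs(cand - x) for x in members))
            if members else medoids[j]
            for j, members in enumerate(clusters)
        ]
        if new_medoids == medoids:  # no medoid changed: converged
            break
        medoids = new_medoids
    return medoids, clusters

# Marks from the table above, in the order S1, S2, S3, S4.
marks = [90, 30, 85, 40]
medoids, clusters = kmedoids(marks, k=2)
print(clusters)  # [[90, 85], [30, 40]]
print(medoids)   # [90, 30]
```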

2. Hierarchical Clustering

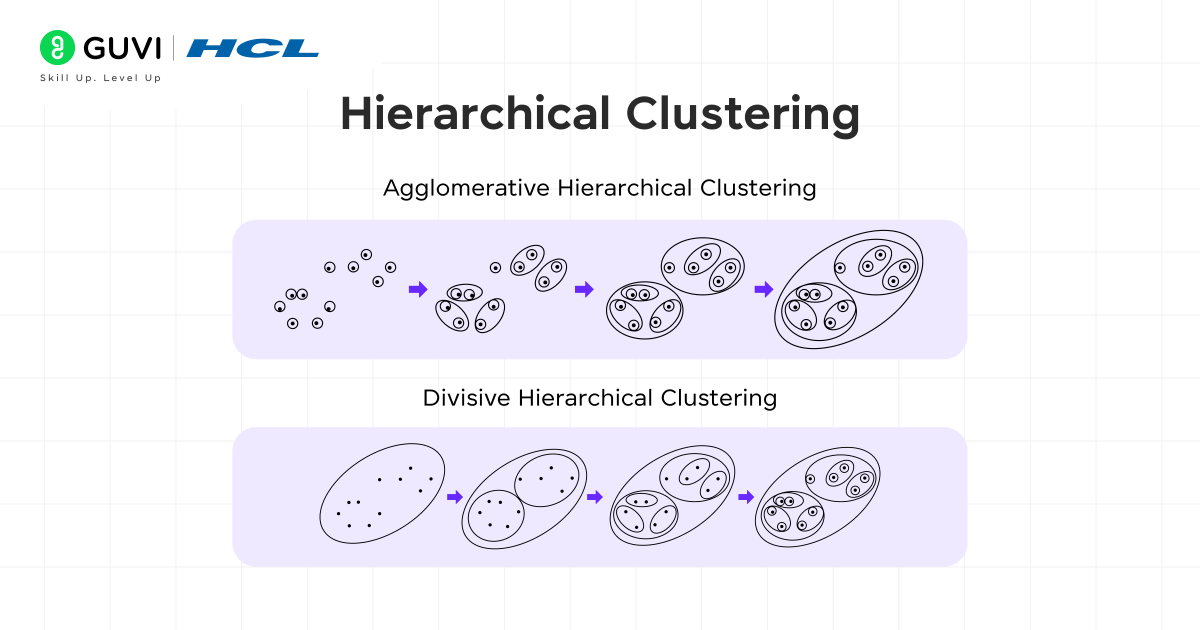

Hierarchical clustering is another popular clustering method in machine learning. Unlike partitioning methods, which require you to fix the number of clusters before running the algorithm, hierarchical clustering builds a tree of clusters called a dendrogram. The tree shows how clusters are merged or split at each step, which makes it easier to decide how many clusters to keep after inspecting it. Clusters are formed based on similarity, and the process can be either agglomerative (starting with each data point as its own cluster and merging them) or divisive (starting with one large cluster and splitting it).

2.1 Agglomerative Clustering:

The process begins with each data point treated as an individual cluster. At each step, the two closest clusters are merged. Closeness is measured with a distance metric such as Euclidean distance, combined with a linkage method that defines the distance between two clusters: single linkage (the closest pair of points), complete linkage (the farthest pair), or average linkage (the average over all pairs). The process repeats until all the points are merged into one large cluster. The result is a hierarchy that you can cut at any level to get the desired number of clusters.
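A naive Python sketch of agglomerative clustering, written for clarity rather than speed (each merge rescans every pair of clusters, so it only suits small datasets). The linkage function is a parameter, so single, complete, or average linkage can be swapped in. The points are made up, and `math.dist` requires Python 3.8+.

```python
import math
from itertools import combinations

def single_link(a, b):
    """Single linkage: distance between the closest pair of points across two clusters."""
    return min(math.dist(p, q) for p in a for q in b)

def complete_link(a, b):
    """Complete linkage: distance between the farthest pair of points."""
    return max(math.dist(p, q) for p in a for q in b)

def average_link(a, b):
    """Average linkage: mean distance over all cross-cluster pairs."""
    return sum(math.dist(p, q) for p in a for q in b) / (len(a) * len(b))

def agglomerative(points, n_clusters, linkage=single_link):
    """Bottom-up clustering: merge the two closest clusters until n_clusters remain.

    Returns the clusters and the linkage distance of each merge (the dendrogram heights).
    """
    clusters = [[p] for p in points]  # every point starts as its own cluster
    heights = []
    while len(clusters) > n_clusters:
        # Find the pair of clusters with the smallest linkage distance.
        d, i, j = min(
            (linkage(clusters[i], clusters[j]), i, j)
            for i, j in combinations(range(len(clusters)), 2)
        )
        heights.append(d)
        clusters[i] = clusters[i] + clusters[j]  # merge cluster j into cluster i
        del clusters[j]  # j > i, so index i is unaffected
    return clusters, heights

points = [(1, 1), (1.5, 1), (5, 5), (5, 6), (9, 1)]
clusters, heights = agglomerative(points, n_clusters=3)
print(clusters)  # [[(1, 1), (1.5, 1)], [(5, 5), (5, 6)], [(9, 1)]]
print(heights)   # [0.5, 1.0]
```

Stopping at `n_clusters=3` is the same as cutting the dendrogram at the level where three clusters remain; running down to `n_clusters=1` records every merge height.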

2.2 Divisive Clustering:

Divisive clustering is the opposite of agglomerative clustering. It begins with all data points in a single large cluster, which is then repeatedly split into smaller clusters based on dissimilarity. The process continues until every data point is in its own cluster or a target number of clusters is reached.
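Divisive algorithms differ mainly in how they choose each split; well-known options include DIANA and bisecting K-Means. The sketch below uses a much simpler rule that only makes sense for one-dimensional data: repeatedly split the cluster that contains the widest gap between neighboring sorted values, at that gap. It is meant to show the top-down idea, not to serve as a production method, and the marks are made up.

```python
def divisive_1d(values, n_clusters):
    """Top-down clustering of 1-D values.

    Start with every value in one cluster, then repeatedly split the cluster
    containing the widest gap between neighboring sorted values, at that gap.
    """
    clusters = [sorted(values)]  # all points begin in a single cluster
    while len(clusters) < n_clusters:
        best = None  # (gap size, cluster index, split position)
        for ci, c in enumerate(clusters):
            for pos in range(1, len(c)):
                gap = c[pos] - c[pos - 1]
                if best is None or gap > best[0]:
                    best = (gap, ci, pos)
        if best is None:  # every cluster is a single value; nothing left to split
            break
        _, ci, pos = best
        c = clusters.pop(ci)
        clusters[ci:ci] = [c[:pos], c[pos:]]  # replace it with its two halves
    return clusters

marks = [30, 85, 58, 90, 40, 62]  # hypothetical marks
print(divisive_1d(marks, 2))  # [[30, 40, 58, 62], [85, 90]]
print(divisive_1d(marks, 3))  # [[30, 40], [58, 62], [85, 90]]
```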

Applications of Hierarchical Clustering

- Customer segmentation in marketing

- Gene expression data analysis in bioinformatics

- Document or text clustering

3. Density-Based Clustering in Machine Learning

The main idea behind density-based clustering methods is that clusters are formed from dense regions of data points. Unlike partitioning and hierarchical methods, density-based methods do NOT require every data point to belong to a cluster, and they can label points that do not belong to any cluster as outliers or noise. Density-based clustering is particularly useful when clusters have irregular shapes and the data is noisy.

The most well-known density-based clustering algorithm is DBSCAN (Density-Based Spatial Clustering of Applications with Noise).

3.1 DBSCAN Clustering Algorithm:

DBSCAN clusters data points that are close together by looking at two parameters:

- Epsilon (ε): The maximum distance between two points for them to be considered neighbors.

- MinPts: The minimum number of points required to form a dense region.

How it Works:

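- It starts with a point that has not been visited.

- It counts the points that lie within distance ε of that point.

- If the count is at least MinPts, the point is a core point, and a new cluster is formed. Otherwise, the point is provisionally labeled as noise (it may later join a cluster as a border point).

- All points within ε of a core point are added to its cluster; any of them that are also core points have their own neighbors added in turn, so the cluster keeps expanding until no new points can be reached.

- Points that don’t belong to any cluster are considered noise.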
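The procedure above can be sketched in pure Python (3.8+ for `math.dist`). This version counts a point as its own neighbor when comparing against MinPts, following the original definition of an ε-neighborhood, and uses a brute-force neighbor search, which is fine for small examples but slow on large datasets. The sample points are made up.

```python
import math

NOISE = -1

def region_query(points, i, eps):
    """Indices of all points within eps of points[i], including i itself."""
    return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

def dbscan(points, eps, min_pts):
    """Return one label per point: a cluster id (0, 1, ...) or NOISE."""
    labels = [None] * len(points)  # None means "not visited yet"
    cluster_id = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbors = region_query(points, i, eps)
        if len(neighbors) < min_pts:
            labels[i] = NOISE  # provisional: may later become a border point
            continue
        cluster_id += 1  # i is a core point, so it starts a new cluster
        labels[i] = cluster_id
        queue = list(neighbors)
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster_id  # border point: reachable, but not core
            if labels[j] is not None:
                continue  # already assigned (or just relabeled from noise)
            labels[j] = cluster_id
            j_neighbors = region_query(points, j, eps)
            if len(j_neighbors) >= min_pts:
                queue.extend(j_neighbors)  # j is also core: keep expanding
    return labels

points = [(1, 1), (1.2, 1.1), (0.9, 1.3), (1.1, 0.8),
          (5, 5), (5.2, 5.1), (4.9, 5.3), (5.1, 4.8),
          (9, 0)]
labels = dbscan(points, eps=0.5, min_pts=3)
print(labels)  # [0, 0, 0, 0, 1, 1, 1, 1, -1]
```

The two tight groups become clusters 0 and 1, and the isolated point (9, 0) is labeled -1 (noise). Unlike K-Means, the number of clusters was never specified.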

4. Fuzzy Clustering in Machine Learning

Traditional clustering methods, such as partitioning and hierarchical clustering, are hard clustering methods. This means that every data point belongs to one, and only one, cluster. However, in many real-world cases, a data point could have characteristics of more than one cluster. This is where fuzzy clustering comes in.

In fuzzy clustering, each data point can belong to more than one cluster, with a degree of membership between 0 and 1 for each cluster (a point's degrees across all clusters sum to 1). This flexibility is useful for datasets whose cluster boundaries are not clearly defined.

4.1 Fuzzy C-Means Algorithm:

Fuzzy C-Means (FCM) is the most common fuzzy clustering algorithm.

How it works:

- Decide how many clusters (C) you want.

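- Assign an initial degree of membership for each data point in each cluster (for example, at random).

- Compute each cluster center (centroid) as the mean of all data points, weighted by their membership values.

- Update each data point's membership values based on its distances to the centers: the closer a point is to a center, the higher its membership in that cluster.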

- Repeat until the membership values stabilize.
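Here is a minimal pure-Python sketch of Fuzzy C-Means for one-dimensional data, following the steps above with the standard FCM update formulas and a fuzzifier m = 2 (a common default; larger values make memberships fuzzier). Starting from evenly spaced centers, rather than random memberships, is an assumption made here so the result is reproducible; the sample values are made up.

```python
def memberships(xs, centers, m):
    """Degree of membership of each value in each cluster; every row sums to 1."""
    u = []
    for x in xs:
        # The small floor avoids dividing by zero when x sits exactly on a center.
        d = [max(abs(x - c), 1e-12) for c in centers]
        u.append([
            1.0 / sum((d[j] / d[k]) ** (2.0 / (m - 1.0)) for k in range(len(centers)))
            for j in range(len(centers))
        ])
    return u

def fuzzy_c_means(xs, c, m=2.0, tol=1e-9, max_iter=300):
    """Fuzzy C-Means on 1-D data. u[i][j] is the membership of xs[i] in cluster j."""
    # Steps 1-2: spread c starting centers evenly over the data range and
    # derive the initial memberships from them (a deterministic choice).
    lo, hi = min(xs), max(xs)
    centers = [lo + (hi - lo) * j / max(c - 1, 1) for j in range(c)]
    u = memberships(xs, centers, m)
    for _ in range(max_iter):
        # Step 3: each center is the mean of all values, weighted by membership^m.
        centers = [
            sum(u[i][j] ** m * x for i, x in enumerate(xs))
            / sum(u[i][j] ** m for i in range(len(xs)))
            for j in range(c)
        ]
        # Step 4: closer centers get higher membership.
        new_u = memberships(xs, centers, m)
        # Step 5: stop once no membership value moves by more than tol.
        shift = max(abs(a - b) for row, new_row in zip(u, new_u) for a, b in zip(row, new_row))
        u = new_u
        if shift < tol:
            break
    return centers, u

xs = [0.8, 1.0, 1.2, 3.0, 4.8, 5.0, 5.2]
centers, u = fuzzy_c_means(xs, c=2)
for x, row in zip(xs, u):
    print(x, [round(v, 2) for v in row])
```

The value 3.0 sits halfway between the two groups, so it ends up with a membership of about 0.5 in each cluster, while the values near 1 and 5 belong almost entirely to their own cluster.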

5. Model-Based Clustering in Machine Learning

Model-based clustering assumes that the data is generated from a mixture of underlying probability distributions. Each cluster is represented by a statistical model, and the objective is to estimate the parameters of each model and then assign each data point to the cluster most likely to have generated it.

Gaussian Mixture Models (GMMs) are the most common model-based clustering approach in the field.

5.1 Gaussian Mixture Models (GMMs)

The Gaussian Mixture Model assumes that the data is generated from a mixture of several Gaussian (normal) distributions, each of which represents a cluster. K-Means (and similar methods) implicitly favor roughly spherical clusters, whereas GMMs can model ellipsoidal clusters of different sizes and orientations.
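In symbols, a GMM with $K$ components models the density of a point $x$ as a weighted sum of Gaussians with mixing weights $\pi_k$, means $\mu_k$, and covariance matrices $\Sigma_k$; the covariance matrices are what allow ellipsoidal clusters. The probability that point $x_i$ belongs to component $k$ (its responsibility $\gamma_{ik}$) then follows from Bayes' rule:

$$
p(x) = \sum_{k=1}^{K} \pi_k \, \mathcal{N}(x \mid \mu_k, \Sigma_k),
\qquad \pi_k \ge 0, \quad \sum_{k=1}^{K} \pi_k = 1
$$

$$
\gamma_{ik} = \frac{\pi_k \, \mathcal{N}(x_i \mid \mu_k, \Sigma_k)}{\sum_{l=1}^{K} \pi_l \, \mathcal{N}(x_i \mid \mu_l, \Sigma_l)}
$$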

How GMM Works:

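- Choose the number of Gaussian components.

- Use the Expectation-Maximization (EM) algorithm to estimate each component's parameters (mean, variance or covariance, and mixing weight). EM alternates between computing how likely each point is to come from each component (E-step) and re-estimating the parameters from those probabilities (M-step).

- Assign each point a probability of belonging to each cluster.

- Classify each point into the cluster with the highest probability; this gives the final clustering.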
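The steps above can be sketched in Python for one-dimensional data, where each component has a scalar variance instead of a full covariance matrix (so this sketch cannot show ellipsoidal clusters). The data points, the starting means, and the starting variance of 1.0 are hypothetical choices for illustration. Densities are handled in log space to avoid numerical underflow.

```python
import math

def log_normal(x, mean, var):
    """Log-density of a 1-D normal distribution."""
    return -0.5 * (math.log(2 * math.pi * var) + (x - mean) ** 2 / var)

def e_step(xs, weights, means, variances):
    """Responsibilities: P(component j | x) for every point, via Bayes' rule."""
    resp = []
    for x in xs:
        logs = [math.log(w) + log_normal(x, mu, v) for w, mu, v in zip(weights, means, variances)]
        top = max(logs)  # subtract the max before exponentiating to avoid underflow
        p = [math.exp(l - top) for l in logs]
        total = sum(p)
        resp.append([pj / total for pj in p])
    return resp

def gmm_em_1d(xs, init_means, n_iter=100, min_var=1e-6):
    """Fit a 1-D Gaussian mixture with EM, starting from the given means."""
    k, n = len(init_means), len(xs)
    means = list(init_means)
    variances = [1.0] * k    # assumed starting variance
    weights = [1.0 / k] * k  # start with equal mixing weights
    for _ in range(n_iter):
        resp = e_step(xs, weights, means, variances)  # E-step
        for j in range(k):                            # M-step
            nj = sum(r[j] for r in resp)
            weights[j] = nj / n
            means[j] = sum(r[j] * x for r, x in zip(resp, xs)) / nj
            variances[j] = max(sum(r[j] * (x - means[j]) ** 2 for r, x in zip(resp, xs)) / nj, min_var)
    resp = e_step(xs, weights, means, variances)
    labels = [max(range(k), key=lambda j: r[j]) for r in resp]  # highest probability wins
    return weights, means, variances, labels

xs = [1.0, 1.2, 0.8, 1.1, 5.0, 5.3, 4.7, 5.1]
weights, means, variances, labels = gmm_em_1d(xs, init_means=[0.0, 6.0])
print(labels)  # [0, 0, 0, 0, 1, 1, 1, 1]
print(means)   # close to [1.025, 5.025]
```

Because the two groups are well separated, the fitted means land at the group averages and each point's responsibility for its own group is close to 1.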

Advantages and Disadvantages of Clustering in Machine Learning

| S. No. | Advantages | Disadvantages |
|---|---|---|
| 1. | Requires no labeled data, which makes it well suited to unsupervised learning. | Many algorithms are sensitive to noise and outliers, which reduce clustering quality. |
| 2. | Discovers hidden patterns and natural groupings in data. | Performance depends heavily on the algorithm and its parameters (e.g., the number of clusters in K-Means). |
| 3. | Flexible and used across industries such as marketing, healthcare, cybersecurity, and retail. | Struggles with high-dimensional data, where distances between points become less meaningful. |
| 4. | Useful for data compression and summarization, as it can reduce large datasets into manageable groups. | Can be computationally expensive on large datasets, because many algorithms compute a large number of distances. |
| 5. | Supports decision-making by providing insight into customer behavior, anomalies, and system performance. | Some algorithms use random initialization and may give different results on each run. |

Clustering has been around for decades, and it powers many of the tools you interact with every day! Google News uses clustering to group similar stories together, while Netflix and Spotify rely on clustering to recommend what you might enjoy next. In the health sciences, scientists even use clustering techniques to study genes and identify new treatments. It’s incredible how one simple technique can link so many different fields, isn’t it?

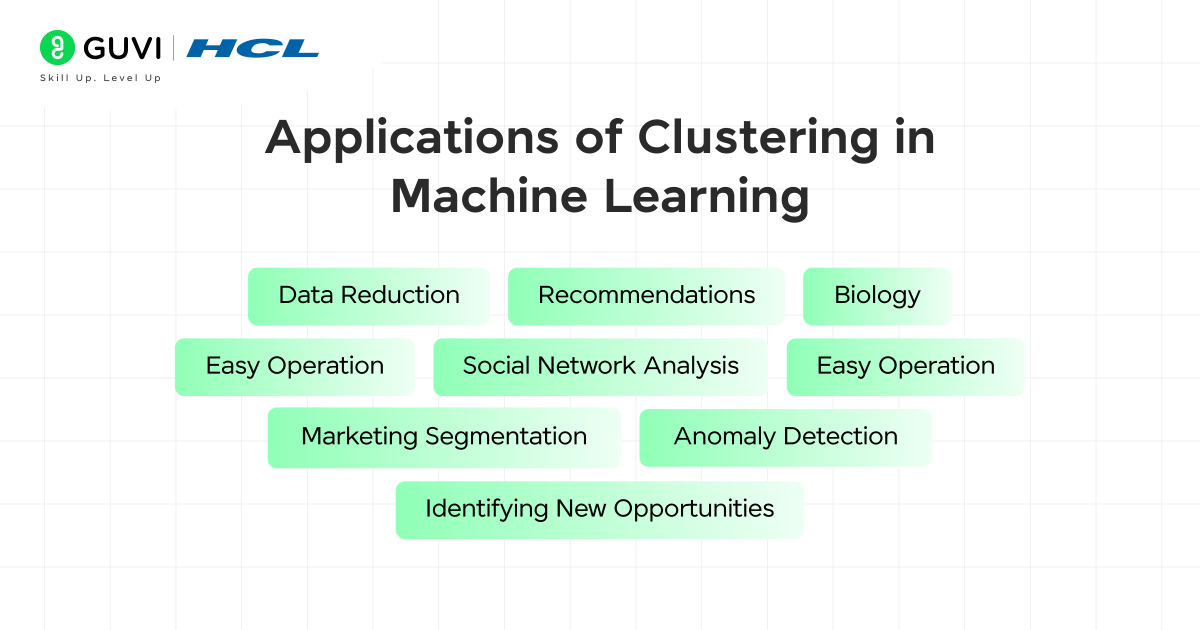

Applications of Clustering in Machine Learning

Clustering can be applied in numerous areas in the real world, as it can help with grouping and understanding data irrespective of any predefined labels. Below are some common examples of applications of clustering:

- Customer Segmentation – Companies can use clustering methods to group customers based on their buying behavior, preferences, or demographics. For example, e-commerce platforms group users into categories like “frequent buyers” or “discount seekers” in order to make recommendations.

- Market Research – Companies can cluster survey responses to identify distinct customer groups and design targeted marketing strategies.

- Image Segmentation – In computer vision, cluster analysis is a powerful tool for partitioning an image into segments (for example, separating the background, objects, and boundaries). This is useful in areas such as medical imaging and face recognition.

- Document and Text Clustering – Clustering can also be used to group similar documents or articles. For example, news websites automatically group their articles into categories such as sports, politics, or technology.

- Anomaly Detection – By clustering normal data patterns, it is possible to identify unusual or abnormal behavior. Fraud detection, cybersecurity, manufacturing quality control, and verification systems can all use anomaly detection through clustering.

- Healthcare and Biology – Medical researchers use clustering to group patients with similar symptoms or similar genetic profiles. Patient clustering helps with disease classification, drug discovery, and treatment planning.

- Search Engines – Clustering can group similar pages together in search results, which makes the results easier for users to navigate.
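As a sketch of the anomaly-detection idea above, the Python snippet below flags points that lie far from every cluster centroid. It assumes the centroids were already learned from normal data (for example with K-Means); the centroid values, the new observations, and the distance threshold are all hypothetical. Requires Python 3.8+ for `math.dist`.

```python
import math

def flag_anomalies(points, centroids, threshold):
    """Mark each point True if it lies farther than `threshold` from every centroid."""
    return [min(math.dist(p, c) for c in centroids) > threshold for p in points]

# Hypothetical centroids learned from normal data, and three new observations.
centroids = [(1.0, 1.0), (5.0, 5.0)]
new_points = [(1.2, 0.9), (4.8, 5.1), (9.0, 0.5)]
print(flag_anomalies(new_points, centroids, threshold=1.0))  # [False, False, True]
```

In practice the threshold might be derived from the distances observed on normal training data (for example, a high percentile); that choice is an assumption, not part of the method itself.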

Are you looking to channel your enthusiasm for clustering into a career in artificial intelligence and machine learning? If so, take a look at GUVI’s Artificial Intelligence and Machine Learning Course, certified by Intel and IIT-M Pravartak and developed by industry professionals to equip you with real-world skills for top tech jobs. No experience required.

Final thoughts

Clustering is an exciting concept within machine learning that enables us to organize large sets of data into meaningful groups. Methods such as K-Means, hierarchical clustering, DBSCAN, and fuzzy clustering show how machines can find patterns without any labeled data. Each method has its own strengths and weaknesses, but together they provide a toolkit for real-world problems in business, health, and technology.

If you are just beginning your machine learning journey, clustering is a great place to start because it builds a solid foundation in unsupervised learning. With continued practice, you will not only understand how these algorithms work but also learn how to apply them.

I hope you found this blog useful and that it makes your journey a little easier. Keep learning and keep practicing, and before long you will be confident in applying clustering techniques in machine learning!

1. Which clustering algorithm is most commonly used?

K-Means is the most commonly used clustering algorithm because it is simple to implement and computationally efficient.

2. What is the difference between hierarchical and partitioning clustering?

In simple terms, partitioning clustering (like K-means) groups the data into non-overlapping groups, while hierarchical clustering creates nested structures (tree-like structure).

3. Why use DBSCAN over K-means?

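DBSCAN handles irregularly shaped clusters and can flag outliers as noise, whereas K-Means assumes roughly spherical clusters and assigns every point, including outliers, to some cluster. DBSCAN also does not require the number of clusters to be set in advance.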

4. What are real-world applications of clustering?

Clustering is used in customer segmentation, healthcare analytics, fraud detection, search engines, and image recognition.
