Data Transformers: Roles and Responsibilities of Data Engineers

Oct 24, 2024 (Last Updated)

Have you ever stopped to think about how the apps on your phone seem to know what you want, or how businesses manage to predict your preferences so accurately? Much of that work rests on data engineers, who collect raw data and shape it to fit the company’s needs.

But who are they, and what exactly do they do? How do they turn raw data into insights that shape the world around us? In this article, we dive into the world of data engineers: their roles and responsibilities in today’s data-driven age, the tech stack they work with, and the educational background the career calls for.

Table of contents

- Roles and Responsibilities of Data Engineers
  - Data Pipeline Development
  - Data Modeling
  - Data Warehousing
  - Data Quality Assurance
  - Data Transformation
  - Data Security and Compliance
  - Performance Optimization
  - Collaboration
  - Technology Evaluation
  - Documentation
- Required Skills for Data Engineering
  - Programming Languages
  - Data Storage and Warehousing
  - Big Data Technologies
  - ETL and Data Modeling
  - Data Pipeline Orchestration
  - Cloud Platforms
  - Containerization and Orchestration
  - Automation and Scripting
- Education and Background for Data Engineering
  - Bachelor’s Degree
  - Master’s Degree (Optional but beneficial)
  - Online Courses and Bootcamps
  - Certifications
- Conclusion
- FAQ
  - What is a data engineer?
  - What are the key responsibilities of a data engineer?
  - How do data engineers ensure data quality?
  - What technologies do data engineers work with?

Roles and Responsibilities of Data Engineers

Data engineers play a critical role in the field of data management and analytics. They are responsible for designing, building, and maintaining the infrastructure necessary for handling and processing data efficiently.

Before we move on, it helps to have a solid grounding in data engineering concepts. You can consider enrolling in GUVI’s Big Data and Data Analytics Course, which offers practical experience through real-world projects and covers data cleaning, data visualization, infrastructure as code, databases, shell scripting, orchestration, cloud services, and more.

Alternatively, if you would like to explore Python through a self-paced course, try GUVI’s Python course.

Here are some of the key roles and responsibilities of data engineers:

1. Data Pipeline Development

Data pipeline development involves the design, creation, and management of a structured sequence of processes that facilitate the efficient and reliable flow of data from various sources to their destination, often a data warehouse, database, or analytics platform.

These pipelines play a crucial role in modern data-driven organizations, enabling them to gather, process, and analyze data from diverse sources to make informed business decisions.

Data pipeline development requires a combination of domain knowledge, data engineering expertise, and familiarity with relevant tools and technologies.

It involves collaboration between data engineers, data analysts, and domain experts to design a pipeline that meets the organization’s specific data processing needs while adhering to best practices for data quality, security, and efficiency.

The pipeline should be scalable to accommodate growing data volumes and adaptable to evolving business requirements, making it a foundational element of a data-driven organization’s infrastructure.
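As a rough illustration of the source-to-destination flow described above, here is a minimal sketch that uses only Python’s standard library. The sample CSV, the `orders` table, and the function names are hypothetical, and an in-memory SQLite database stands in for a real warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; in practice this would come from a file, API, or queue.
RAW_CSV = """order_id,customer,amount
1,alice,19.99
2,bob,
3,carol,5.00
"""

def extract(text):
    """Read raw CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Drop rows with a missing amount and cast fields to proper types."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # skip incomplete records
        cleaned.append((int(row["order_id"]), row["customer"], float(row["amount"])))
    return cleaned

def load(conn, records):
    """Write cleaned records into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()

def run_pipeline(conn, text=RAW_CSV):
    """Chain the three stages: extract, then transform, then load."""
    load(conn, transform(extract(text)))

conn = sqlite3.connect(":memory:")  # stand-in for a real warehouse
run_pipeline(conn)
print(conn.execute("SELECT COUNT(*), SUM(amount) FROM orders").fetchone())
```

Keeping each stage as its own function makes it easier to test the stages separately and to swap the source or destination later without touching the rest of the pipeline.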

2. Data Modeling

Data modeling is a structured process of creating visual representations and conceptual frameworks that depict how data elements are organized, related, and stored within a database or information system.

It serves as a crucial bridge between business requirements and technical implementation, helping to design databases that are efficient, well-organized, and capable of supporting the intended applications and analyses.

Data modeling is an iterative process that involves collaboration between business analysts, data architects, and database administrators.

It not only aids in database design but also serves as documentation that helps ensure a common understanding among team members and stakeholders. Effective data modeling leads to databases that are well-structured, easily maintainable, and capable of accommodating future changes and expansions in the data requirements of an organization.
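To make the step from conceptual model to technical implementation more concrete, the sketch below expresses a small hypothetical model (customers who place orders) as relational tables in SQLite. The entity names, columns, and constraints are assumptions chosen purely for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled

# Two entities and one relationship: each order belongs to exactly one customer.
conn.executescript("""
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    email       TEXT NOT NULL UNIQUE
);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
    total       REAL NOT NULL CHECK (total >= 0)
);
""")

conn.execute("INSERT INTO customers VALUES (1, 'Alice', 'alice@example.com')")
conn.execute("INSERT INTO orders VALUES (100, 1, 42.50)")

# An order pointing at a customer that does not exist violates the model.
try:
    conn.execute("INSERT INTO orders VALUES (101, 999, 10.00)")
    rejected = False
except sqlite3.IntegrityError:
    rejected = True

print("orphan order rejected:", rejected)
```

Encoding relationships and rules as constraints means the database itself rejects data that contradicts the model, rather than relying on every application to check.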

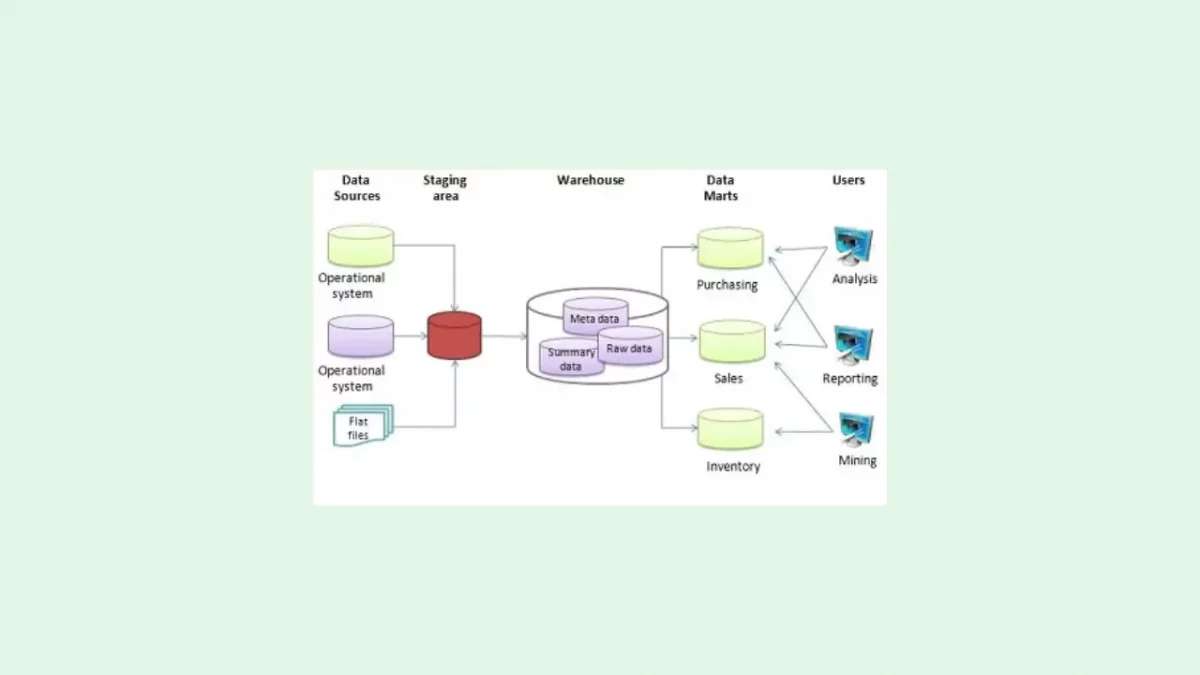

3. Data Warehousing

Data warehousing refers to the process of collecting, storing, and managing large volumes of structured and sometimes unstructured data from various sources within an organization to support business intelligence (BI) and data analysis activities.

It involves the creation of a centralized repository, known as a data warehouse, where data is organized and structured for efficient querying, reporting, and analysis.

Data warehousing plays a crucial role in enabling organizations to make informed decisions by providing a consolidated and historical view of their data.

It also supports data governance, as data quality, security, and access control can be managed centrally. A well-designed data warehouse becomes a valuable asset for organizations aiming to gain actionable insights from their data.
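One common way to organize warehouse data for querying and reporting is a star schema: a central fact table of measurements surrounded by dimension tables. The sketch below is a minimal, hypothetical example in SQLite; the table names and sample rows are invented, and a production warehouse would normally run on a dedicated platform rather than SQLite.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, category TEXT);
CREATE TABLE dim_date    (date_id INTEGER PRIMARY KEY, year INTEGER, month INTEGER);
CREATE TABLE fact_sales  (
    product_id INTEGER REFERENCES dim_product(product_id),
    date_id    INTEGER REFERENCES dim_date(date_id),
    revenue    REAL
);
INSERT INTO dim_product VALUES (1, 'books'), (2, 'games');
INSERT INTO dim_date    VALUES (20240101, 2024, 1), (20240201, 2024, 2);
INSERT INTO fact_sales  VALUES (1, 20240101, 10.0), (1, 20240201, 15.0), (2, 20240201, 30.0);
""")

# Typical reporting query: join facts to dimensions, then aggregate.
report = conn.execute("""
    SELECT p.category, d.month, SUM(f.revenue)
    FROM fact_sales f
    JOIN dim_product p ON p.product_id = f.product_id
    JOIN dim_date d    ON d.date_id = f.date_id
    GROUP BY p.category, d.month
    ORDER BY p.category, d.month
""").fetchall()
print(report)
```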

4. Data Quality Assurance

Data quality assurance is the process of systematically evaluating, maintaining, and improving the accuracy, completeness, consistency, reliability, and timeliness of data within an organization.

High-quality data is essential for making informed business decisions, as it ensures that the information used for analysis, reporting, and decision-making is trustworthy and reliable.

Improving data quality not only enhances decision-making accuracy but also boosts operational efficiency, reduces risk, and enhances customer satisfaction.

It’s a continuous effort that requires a combination of technology, processes, and a commitment to maintaining the integrity of an organization’s data assets.
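As a small illustration of what systematic evaluation can look like, the sketch below runs a few rule-based checks over a batch of records. The field names (`id`, `email`, `event_date`) and the rules themselves are hypothetical; real checks would be derived from the organization’s own data contracts.

```python
from datetime import date

def check_quality(rows, required, today):
    """Return a list of (row_index, problem) tuples for a batch of dict rows."""
    problems = []
    seen_ids = set()
    for i, row in enumerate(rows):
        # Completeness: every required field must be present and non-empty.
        for field in required:
            if row.get(field) in (None, ""):
                problems.append((i, f"missing {field}"))
        # Consistency: ids must be unique within the batch.
        if row.get("id") in seen_ids:
            problems.append((i, "duplicate id"))
        seen_ids.add(row.get("id"))
        # Accuracy: event dates cannot lie in the future.
        if row.get("event_date") and row["event_date"] > today:
            problems.append((i, "event_date in the future"))
    return problems

rows = [
    {"id": 1, "email": "a@example.com", "event_date": date(2024, 1, 5)},
    {"id": 1, "email": "", "event_date": date(2024, 1, 6)},
    {"id": 2, "email": "c@example.com", "event_date": date(2030, 1, 1)},
]
issues = check_quality(rows, required=["id", "email"], today=date(2024, 6, 1))
print(issues)
```

Returning the problems instead of raising on the first one lets a pipeline decide whether to quarantine bad rows, alert someone, or stop the run.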

5. Data Transformation

Data transformation is a critical process in data management and analysis that involves converting and restructuring data from its source format into a more suitable format for analysis, reporting, and storage.

It aims to enhance data quality, improve compatibility, and prepare data for specific business needs. Data transformation is often performed using tools such as Extract, Transform, and Load (ETL) platforms, scripting languages like Python or R, and data manipulation libraries like pandas or dplyr.
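The sketch below shows one possible transformation step: converting inconsistently formatted source records into typed values and then restructuring them into totals per region. It uses only the standard library so that it runs anywhere; with pandas the same idea would usually be written as column operations followed by a group-by. The sample records and helper names are hypothetical.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical source records: strings in inconsistent formats.
raw = [
    {"date": "2024-01-05", "region": " north ", "sales": "1,200.50"},
    {"date": "05/01/2024", "region": "North", "sales": "300"},
    {"date": "2024-01-06", "region": "SOUTH", "sales": "99.5"},
]

def parse_date(text):
    """Accept ISO (YYYY-MM-DD) or day-first (DD/MM/YYYY) dates."""
    for fmt in ("%Y-%m-%d", "%d/%m/%Y"):
        try:
            return datetime.strptime(text, fmt).date()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {text!r}")

def normalize(record):
    """Convert one raw record into typed, consistently formatted values."""
    return {
        "date": parse_date(record["date"]),
        "region": record["region"].strip().lower(),
        "sales": float(record["sales"].replace(",", "")),
    }

# Restructure: aggregate the cleaned rows into sales per region.
totals = defaultdict(float)
for rec in map(normalize, raw):
    totals[rec["region"]] += rec["sales"]

print(dict(totals))
```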

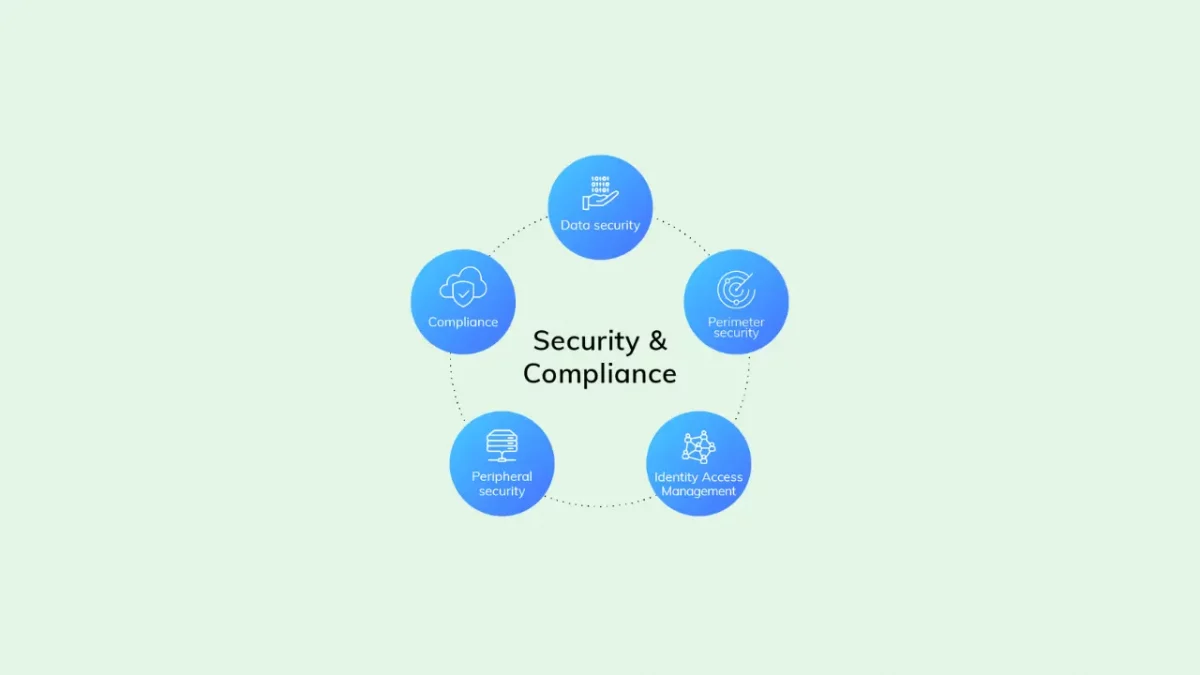

6. Data Security and Compliance

Data security and compliance refer to the set of practices, policies, and technologies implemented to protect sensitive information, maintain the privacy of individuals’ data, and adhere to regulatory requirements.

In today’s digital landscape, where data breaches and privacy concerns are prevalent, organizations must prioritize robust data security measures and ensure they comply with relevant data protection regulations.

Data security and compliance are essential not only for protecting sensitive information but also for maintaining trust with customers and stakeholders.
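One technique data engineers sometimes apply here is pseudonymizing or masking personal identifiers before data reaches analysts. The sketch below is a hedged example using Python’s standard `hmac` and `hashlib` modules; the key, field names, and masking rule are hypothetical, and whether this is sufficient depends on the regulation that applies.

```python
import hashlib
import hmac

# Hypothetical secret; in practice it would come from a secrets manager, not source code.
SECRET_KEY = b"replace-with-a-managed-secret"

def pseudonymize(value, key=SECRET_KEY):
    """Replace an identifier with a keyed SHA-256 digest: a stable token
    that cannot be recomputed without the key."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()

def mask_email(email):
    """Keep the domain for analysis but hide most of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"

record = {"email": "alice@example.com", "plan": "pro"}
safe = {
    "user_key": pseudonymize(record["email"]),
    "email_masked": mask_email(record["email"]),
    "plan": record["plan"],
}
print(safe)
```

Because the same input always yields the same token, pseudonymized keys can still be used to join datasets without exposing the original identifier.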

7. Performance Optimization

Performance optimization in the context of computing and software refers to the systematic process of enhancing the efficiency, speed, responsiveness, and resource utilization of applications, systems, or processes.

It involves identifying and addressing bottlenecks, reducing latency, improving throughput, and minimizing resource consumption. Performance optimization encompasses various techniques such as code optimization, algorithmic improvements, memory management, caching strategies, parallel processing, load balancing, and database tuning.

The goal is to deliver a seamless user experience, achieve faster processing times, and maximize the utilization of hardware and software resources while ensuring optimal scalability and responsiveness even under heavy workloads.
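Caching is one of the techniques listed above, and it is easy to demonstrate. In this minimal sketch, `slow_lookup` is a hypothetical stand-in for an expensive database or API call, and `functools.lru_cache` ensures each distinct input is computed only once.

```python
from functools import lru_cache

CALLS = {"count": 0}  # tracks how often the expensive function really runs

def slow_lookup(country_code):
    """Stand-in for an expensive call, e.g. a database or API lookup."""
    CALLS["count"] += 1
    return {"DE": "Germany", "FR": "France"}.get(country_code, "Unknown")

@lru_cache(maxsize=1024)
def cached_lookup(country_code):
    """Same result as slow_lookup, but repeated inputs are served from memory."""
    return slow_lookup(country_code)

codes = ["DE", "FR", "DE", "DE", "FR", "XX"]
names = [cached_lookup(c) for c in codes]
print(names, "expensive calls:", CALLS["count"])
```

Six lookups trigger only three expensive calls here, one per distinct code. The trade-off is memory use and the risk of serving stale values if the underlying data changes.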

8. Collaboration

Collaboration in data engineering refers to the cooperative effort and interaction between individuals with diverse skills and roles within a data engineering team or across departments to collectively design, develop, deploy, and maintain data pipelines, systems, and infrastructure.

Effective collaboration involves data engineers, data analysts, data scientists, domain experts, and stakeholders working together to define data requirements, design optimal data architectures, choose appropriate technologies, and ensure the quality, security, and reliability of the data ecosystem.

Collaborative tools, version control systems, and agile methodologies facilitate seamless communication, knowledge sharing, and the integration of insights from various perspectives, resulting in well-designed data solutions that meet business needs and support data-driven decision-making.

9. Technology Evaluation

Technology evaluation in data engineering involves the systematic assessment and analysis of various technologies, tools, frameworks, and platforms to determine their suitability for addressing specific data engineering challenges and requirements.

This process entails considering factors such as performance, scalability, ease of integration, compatibility with existing systems, cost-effectiveness, and support for required functionalities like data ingestion, processing, storage, and analysis.

Technology evaluation helps data engineering teams make informed decisions about selecting the most appropriate solutions that align with their project goals and business objectives, ensuring efficient data workflows, optimized resource utilization, and the ability to adapt to evolving data needs.
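One simple way to structure such an assessment is a weighted scoring matrix. The sketch below is only an illustration: the criteria weights, the two candidate tools, and their 1 to 5 scores are all made-up values that a team would replace with its own.

```python
# Hypothetical criteria weights (summing to 1.0) and 1-5 scores per candidate.
weights = {"performance": 0.3, "scalability": 0.3, "integration": 0.2, "cost": 0.2}

candidates = {
    "tool_a": {"performance": 4, "scalability": 5, "integration": 3, "cost": 2},
    "tool_b": {"performance": 3, "scalability": 3, "integration": 5, "cost": 5},
}

def weighted_score(scores, weights):
    """Sum of score * weight over all criteria."""
    return sum(scores[criterion] * weight for criterion, weight in weights.items())

# Rank candidates from highest to lowest weighted score.
ranking = sorted(
    candidates,
    key=lambda name: weighted_score(candidates[name], weights),
    reverse=True,
)
for name in ranking:
    print(name, round(weighted_score(candidates[name], weights), 2))
```

The numbers matter less than the discussion they force: agreeing on the weights up front makes the team state which factors it actually values most.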

10. Documentation

Documentation involves the comprehensive creation and maintenance of clear, organized, and structured records that describe all aspects of data pipelines, processes, architecture, and infrastructure.

This documentation serves as a valuable resource for data engineers, analysts, and stakeholders, providing insights into how data flows through the system, the transformations applied, the data sources and destinations, as well as any dependencies, configurations, and decisions made during the development process.

Proper documentation facilitates effective collaboration, troubleshooting, and knowledge sharing among team members, aids in onboarding new team members, and ensures the repeatability and reproducibility of data workflows, enhancing transparency, accountability, and the long-term maintainability of data engineering projects.

Required Skills for Data Engineering

Data engineering requires a diverse set of skills to effectively design, build, and maintain data pipelines and infrastructure. Here’s a detailed list of the skills a data engineer should have:

1. Programming Languages

Proficiency in programming languages is crucial for data engineers to develop and maintain data pipelines. Python is widely used due to its versatility and extensive libraries for data manipulation, while Java and Scala are preferred for large-scale data processing frameworks like Apache Hadoop and Apache Spark.

Strong programming skills enable data engineers to write efficient, scalable, and maintainable code for data transformation and processing tasks.

2. Data Storage and Warehousing

Understanding data warehousing concepts and techniques is crucial for designing efficient and optimized data storage solutions. This involves knowledge of data warehousing architectures, schema design (star, snowflake), and database normalization techniques.

Key competencies in this area include:

- Experience with relational databases (e.g., PostgreSQL, MySQL) and understanding of data modeling concepts.

- Familiarity with NoSQL databases (e.g., MongoDB, Cassandra) for handling unstructured or semi-structured data.

- Knowledge of data warehousing solutions like Amazon Redshift, Google BigQuery, or Snowflake.

3. Big Data Technologies

Familiarity with big data processing frameworks like Apache Hadoop and Apache Spark is important for handling large volumes of data efficiently.

These tools enable distributed data processing, making it possible to process and analyze data at scale. Data engineers need to understand concepts like MapReduce and Spark transformations to optimize data processing workflows.

In this domain, you should have:

- Working knowledge of distributed data processing frameworks like Apache Hadoop and Apache Spark.

- Familiarity with data storage formats such as Parquet, Avro, and ORC used in big data environments.
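To give a feel for the MapReduce concept mentioned above, here is a single-process word count in plain Python. It only mimics the three phases (map, shuffle, reduce); in Hadoop or Spark these phases run in parallel across many machines, and the function names here are illustrative rather than any framework’s API.

```python
from collections import defaultdict
from functools import reduce

documents = ["big data big pipelines", "data pipelines move data"]

def map_phase(doc):
    """Emit a (word, 1) pair for every word in one document."""
    return [(word, 1) for word in doc.split()]

def shuffle(pairs):
    """Group values by key, as a framework does between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(key, values):
    """Combine all values for one key into a single result."""
    return key, reduce(lambda a, b: a + b, values)

mapped = [pair for doc in documents for pair in map_phase(doc)]
counts = dict(reduce_phase(key, values) for key, values in shuffle(mapped).items())
print(counts)
```

Because each document is mapped independently and each key is reduced independently, both phases can be spread across workers, which is what makes the model scale.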

4. ETL and Data Modeling

Data modeling skills involve designing efficient data structures and schemas that align with business requirements. Extract, Transform, and Load (ETL) processes involve extracting data from various sources, transforming it into a suitable format, and loading it into a target system.

To work effectively with ETL and data modeling, you should have:

- Proficiency in designing and implementing ETL processes to transform and move data between systems.

- Experience with ETL and workflow tools like Apache NiFi, Talend, Apache Airflow, or commercial solutions.

5. Data Pipeline Orchestration

Data pipeline orchestration refers to the management and coordination of various data processing and transformation tasks within a data pipeline.

It involves scheduling, monitoring, and controlling the execution of these tasks to ensure they are carried out in the correct order, at the right time, and with the appropriate dependencies.

Data pipeline orchestration plays a critical role in optimizing the flow of data through the pipeline, improving efficiency, reliability, and maintainability.

To orchestrate data pipelines effectively, you should build the following skills:

- Ability to design and manage data workflows using orchestration tools like Apache Airflow or similar solutions.

- Understanding workflow scheduling, task dependencies, and error handling.
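The core ideas of task dependencies and error handling can be sketched without any orchestration tool. The example below uses `graphlib.TopologicalSorter` from Python’s standard library (3.9+) to order hypothetical tasks and adds a simple retry loop. It is not how Apache Airflow is implemented, just a small model of the same concepts.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# Hypothetical tasks: each maps to the set of tasks it depends on.
dependencies = {
    "extract": set(),
    "clean": {"extract"},
    "load": {"clean"},
    "report": {"load"},
}

attempts = {"clean": 0}

def run_task(name):
    """Stand-in for real work; 'clean' fails once to demonstrate retries."""
    if name == "clean":
        attempts["clean"] += 1
        if attempts["clean"] == 1:
            raise RuntimeError("transient failure")
    return f"{name} ok"

def run_with_retries(name, max_attempts=2):
    """Run a task, retrying on failure until max_attempts is reached."""
    for attempt in range(1, max_attempts + 1):
        try:
            return run_task(name)
        except RuntimeError:
            if attempt == max_attempts:
                raise  # give up and surface the error

executed = []
for task in TopologicalSorter(dependencies).static_order():
    run_with_retries(task)
    executed.append(task)

print(executed)
```

Real orchestrators add scheduling, parallel execution, logging, and alerting on top of this, but the dependency graph remains the central data structure.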

6. Cloud Platforms

Cloud platforms provide a dynamic and flexible environment for designing, building, and managing data pipelines and systems. These platforms offer a range of services for data storage, processing, analytics, and orchestration.

Data engineers can leverage cloud-native tools and services to store and process large volumes of data, scale resources as needed, implement data transformations, and orchestrate complex workflows.

Cloud platforms enable data engineers to focus on creating efficient and scalable data solutions without the burden of managing physical infrastructure, leading to streamlined data processing, enhanced collaboration, and optimized resource utilization.

When working with cloud platforms, focus on:

- Familiarity with cloud platforms such as AWS, Google Cloud Platform, or Microsoft Azure.

- Experience in setting up and managing cloud-based data services like Amazon S3, Google Cloud Storage, or Azure Data Lake.

7. Containerization and Orchestration

In data engineering, containerization involves packaging applications, dependencies, and configurations into isolated units called containers, which can be consistently deployed across various environments.

Containerization, often using tools like Docker, facilitates reproducibility and portability of data processing workflows. Orchestration, exemplified by platforms like Kubernetes, manages the deployment, scaling, and monitoring of these containers, enabling data engineers to efficiently manage complex distributed data processing pipelines.

This approach enhances flexibility, resource utilization, and scalability, allowing data engineering teams to focus on designing effective data solutions while ensuring reliable execution across diverse computing environments.

8. Automation and Scripting

In data engineering, automation and scripting involve the use of programming languages and scripts to create repeatable and efficient processes for tasks such as data extraction, transformation, loading, and orchestration.

Automation tools and scripting languages like Python, Bash, or Apache NiFi enable data engineers to streamline data workflows, reduce manual intervention, and ensure consistent data processing.

By automating routine tasks and complex data transformations, data engineering teams can improve productivity, enhance data quality, and respond more effectively to changing data requirements while maintaining a structured and scalable approach to data management.

For automation and scripting, you should have:

- Strong scripting skills for automating data processes and workflows.

- Knowledge of scripting languages such as Bash or PowerShell.
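As an example of the kind of routine task worth scripting, the sketch below moves processed CSV files from an inbox folder into a dated archive folder. The folder layout and the `archive_files` helper are hypothetical, and the demonstration runs inside a temporary directory so that nothing permanent is written to disk.

```python
import shutil
import tempfile
from datetime import date
from pathlib import Path

def archive_files(inbox, archive_root, run_date, pattern="*.csv"):
    """Move matching files from inbox into archive_root/YYYY-MM-DD and return their new paths."""
    target = Path(archive_root) / run_date.isoformat()
    target.mkdir(parents=True, exist_ok=True)
    moved = []
    for path in sorted(Path(inbox).glob(pattern)):
        destination = target / path.name
        shutil.move(str(path), str(destination))
        moved.append(destination)
    return moved

# Demonstration in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    inbox = Path(tmp) / "inbox"
    inbox.mkdir()
    (inbox / "sales.csv").write_text("id,amount\n1,10\n")
    (inbox / "notes.txt").write_text("not a data file")
    moved = archive_files(inbox, Path(tmp) / "archive", date(2024, 1, 5))
    moved_names = [p.name for p in moved]
    archive_folder = moved[0].parent.name
    remaining = sorted(p.name for p in inbox.iterdir())

print(moved_names, archive_folder, remaining)
```

A script like this would typically be triggered by a scheduler such as cron or by an orchestration tool rather than run by hand.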

Keep in mind that the specific skills required may vary based on the organization’s technology stack, the nature of the data being handled, and the complexity of the data engineering tasks. Continuously expanding and refining these skills will help data engineers excel in their roles and contribute effectively to data-driven initiatives.

Education and Background for Data Engineering

To become a data engineer, you typically need a combination of education, skills, and practical experience. While there’s no strict educational path, certain degrees and fields of study can provide a strong foundation for pursuing a career as a data engineer. Here are the common educational routes:

1. Bachelor’s Degree

A bachelor’s degree in a related field is often the starting point for a career as a data engineer. Relevant fields of study include:

- Computer Science

- Software Engineering

- Information Technology

- Computer Engineering

- Electrical Engineering (with a focus on computer systems)

- Data Science

- Mathematics or Statistics (if combined with programming skills)

During your bachelor’s degree, you’ll gain fundamental knowledge in programming, algorithms, databases, and computer systems, which are essential for a data engineering role.

2. Master’s Degree (Optional but beneficial)

While not always required, a master’s degree can enhance your expertise and make you a more competitive candidate for advanced data engineering roles or leadership positions. Some relevant master’s degrees include:

- Master’s in Computer Science

- Master’s in Data Engineering

- Master’s in Data Science

- Master’s in Software Engineering

A master’s degree program can provide in-depth knowledge of advanced topics in data management, distributed computing, and big data technologies.

3. Online Courses and Bootcamps

If you already have a bachelor’s degree but want to transition into a data engineering role, there are many online courses and bootcamps in big data and data analytics that offer focused training in data engineering skills. These programs can be a faster way to acquire the necessary skills and start your career in data engineering.

4. Certifications

While not a substitute for a formal degree, certifications can validate your skills and knowledge in specific areas of data engineering. Some relevant certifications include:

- Coursera Certified Data Engineer

Certifications can be especially helpful if you’re looking to demonstrate expertise with specific tools or platforms.


Conclusion

In conclusion, data engineers serve as the backbone of modern data-driven enterprises, facilitating the seamless flow of information from diverse sources to meaningful insights.

The role encompasses designing, building, and maintaining robust data pipelines, ensuring the accuracy and reliability of data, and optimizing systems for efficiency.

With proficiency in programming, database management, and data integration, data engineers enable organizations to unlock the full potential of their data, empowering data scientists, analysts, and business stakeholders to make informed decisions.

As the demand for data-driven insights continues to grow, the pivotal roles and responsibilities of data engineers will remain at the forefront of shaping successful data strategies across industries.

FAQ

What is a data engineer?

A data engineer is a professional responsible for designing, constructing, and maintaining systems for collecting, storing, and processing data to enable effective data analysis and insights.

What are the key responsibilities of a data engineer?

Data engineers are responsible for designing and building data pipelines, ensuring data quality, transforming raw data into usable formats, setting up and managing data storage solutions, and collaborating with other teams to meet data-related needs.

How do data engineers ensure data quality?

Data engineers implement data validation, data cleaning, and transformation processes to ensure that the data is accurate, consistent, and reliable throughout its lifecycle.

What technologies do data engineers work with?

Data engineers work with a range of technologies including databases (SQL, NoSQL), big data frameworks (Hadoop, Spark), cloud platforms (AWS, GCP, Azure), ETL tools (Airflow, NiFi), and version control (Git).
