Understanding the 9 Key Limitations of Artificial Intelligence

Last Updated: Aug 29, 2025 · 5 Min Read

We often hear how artificial intelligence is changing the world, automating tasks, driving innovation, and even outperforming humans in certain areas. But here’s the real question: Is AI truly limitless, or are there boundaries it just can’t cross?

If you’ve ever wondered why AI stumbles over basic logic or why it needs enormous amounts of data to function, you’re not alone. Understanding where AI falls short is just as important as knowing what it can do, especially if you’re planning to build, use, or rely on it.

That’s why, to ease your AI learning process, we’ve compiled a list of well-known and lesser-known limitations of artificial intelligence. They will help you understand where things can go wrong when using AI. So, without further ado, let’s get started!

Table of contents

- What is Artificial Intelligence?
- Why Knowing AI’s Limits Matters
- 9 Key Limitations of Artificial Intelligence
  - Lack of Common Sense
  - Data Dependency
  - Limited Generalization
  - High Resource Requirements
  - Explainability Issues (Black Box Problem)
  - Vulnerability to Attacks
  - No Emotional Intelligence
  - Legal and Ethical Challenges
  - Job Displacement (Not Full Replacement)
- Real-World Use Cases Where AI Fails
  - IBM Watson in Healthcare
  - Amazon’s Hiring AI
  - Tesla Autopilot Failures
  - Google Photos Tagging Error
  - Chatbot Meltdowns (e.g., Microsoft Tay)
- Quick Challenge – Can You Spot the Limitation?
- Where We Go From Here?
- Conclusion
- FAQs
  - Why can’t AI understand emotions like humans?
  - Is AI dangerous if it becomes too powerful?
  - Can AI ever have common sense?
  - Why is AI often biased?
  - What’s the biggest barrier to using AI in real life?

What is Artificial Intelligence?

Before diving into its flaws, let’s quickly recap what AI is. At its core, artificial intelligence refers to machines or systems that mimic aspects of human intelligence: learning from data, recognizing patterns, making decisions, and improving over time.

From voice assistants like Alexa and Siri to recommendation engines on Netflix, AI is embedded in many parts of our lives. But here’s the thing: it’s still not truly intelligent in the human sense.

Why Knowing AI’s Limits Matters

You can’t use a tool effectively unless you know where it breaks. This is especially true with AI. If you’re building, using, or even just interacting with AI-powered tools, knowing their limitations will help you:

- Avoid over-reliance

- Set realistic expectations

- Prevent ethical and operational risks

- Design better systems

Let’s break down these limitations one by one.

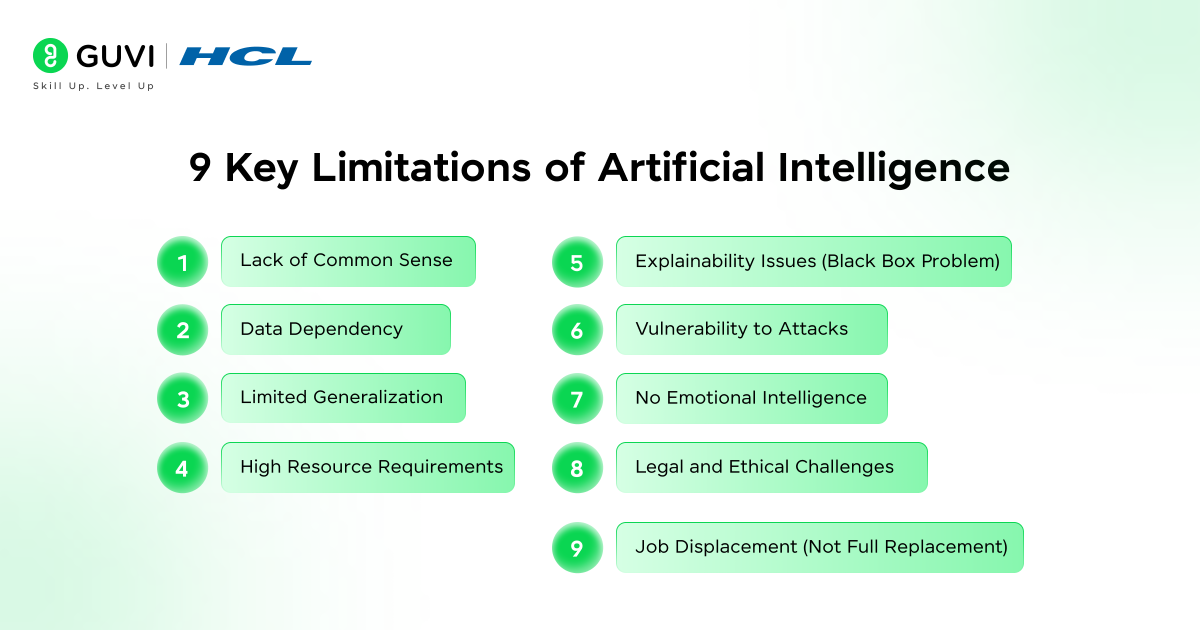

9 Key Limitations of Artificial Intelligence

While AI can solve complex problems and automate routine tasks, it still struggles with a range of fundamental issues. These limitations stem from how AI is trained, what it’s trained on, and how it’s deployed in the real world.

1. Lack of Common Sense

AI doesn’t truly understand the world; it identifies patterns but lacks real-world reasoning. Unlike humans, who draw on intuition and lived experience, AI can miss simple absurdities or obvious contradictions.

Example:

If you ask an AI, “Can I put my phone in the microwave to charge it?”, a human will immediately say no, but an AI might give a dangerously neutral answer if it hasn’t seen enough similar examples during training.

Why This Matters:

In mission-critical systems like legal advisors, healthcare assistants, or emergency bots, this lack of grounding in reality can lead to embarrassing or even dangerous results.

2. Data Dependency

AI doesn’t learn like humans do. It typically needs large, well-structured, and often labeled datasets. If those datasets are incomplete, biased, or outdated, the AI will reflect those same flaws.

Example:

An AI model trained on past hiring decisions might learn that certain demographic groups are less likely to be hired simply because that was the historical pattern, and then repeat it, even though such discrimination is illegal or unethical.

Why This Matters:

Bad data leads to bad predictions. AI trained on flawed data can reinforce existing biases in hiring, loan approvals, medical diagnostics, and even policing.
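To make this concrete, here is a minimal sketch in Python, assuming an entirely made-up set of historical hiring records (`history`) in which group 1 candidates needed a higher skill score than group 0 candidates to be hired. A plain logistic regression, trained with hand-rolled gradient descent so that only the standard library is needed, picks up the same double standard: two candidates with identical skill get different scores purely because of their group. The data, feature names, and helper functions are all hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical historical records: (skill score 0-9, group 0 or 1, hired 0 or 1).
# The past decisions were biased: group 1 needed a skill of 8 to be hired, group 0 only 5.
history = []
for skill in range(10):
    history.append((skill, 0, 1 if skill >= 5 else 0))
    history.append((skill, 1, 1 if skill >= 8 else 0))


def train_logistic_regression(records, lr=0.05, epochs=5000):
    """Fit (w_skill, w_group, bias) with full-batch gradient descent on log loss."""
    w_skill = w_group = bias = 0.0
    n = len(records)
    for _ in range(epochs):
        g_skill = g_group = g_bias = 0.0
        for skill, group, hired in records:
            error = sigmoid(w_skill * skill + w_group * group + bias) - hired
            g_skill += error * skill
            g_group += error * group
            g_bias += error
        w_skill -= lr * g_skill / n
        w_group -= lr * g_group / n
        bias -= lr * g_bias / n
    return w_skill, w_group, bias


def hire_probability(weights, skill, group):
    w_skill, w_group, bias = weights
    return sigmoid(w_skill * skill + w_group * group + bias)


weights = train_logistic_regression(history)
# Two candidates with the same skill, differing only in group membership.
p_group0 = hire_probability(weights, skill=6, group=0)
p_group1 = hire_probability(weights, skill=6, group=1)
print(f"group 0: {p_group0:.2f}  group 1: {p_group1:.2f}  (learned group weight {weights[1]:.2f})")
```

Nothing in the training code treats the groups differently; the model picks the pattern up purely from the historical labels it was given.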

3. Limited Generalization

AI systems usually perform well on the specific task they were trained for, but they usually fail to transfer that skill to different domains.

Example:

A model trained to identify house cats in images won’t recognize a lion as a feline unless it’s explicitly trained on lion examples labeled that way.

Why This Matters:

AI can’t “think outside the box” the way humans naturally do. That’s a major roadblock for building flexible, multi-purpose systems.
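A tiny illustration, under made-up assumptions: the nearest-centroid classifier below is a deliberately simple stand-in for a trained model, and its two features (`weight_kg`, `retractable_claws`) and training animals are hypothetical. Because it only knows the labels it was trained on, it has no way to answer “something new”; shown a lion, it confidently picks the closest known category, which here is the wrong one.

```python
import math

# Hypothetical training data: (weight_kg, retractable_claws) feature pairs per label.
# No lions (or any big cats) were included.
training_data = {
    "house cat": [(4.0, 1.0), (5.0, 1.0)],
    "dog": [(30.0, 0.0), (25.0, 0.0)],
}


def centroid(points):
    """Average each feature across the examples of one label."""
    return tuple(sum(values) / len(points) for values in zip(*points))


centroids = {label: centroid(points) for label, points in training_data.items()}


def classify(features):
    """Return the known label whose centroid is closest; 'none of these' is not an option."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


lion = (190.0, 1.0)  # far heavier than anything seen in training, but has retractable claws
print(classify(lion))  # → dog
```

Real image models are far more sophisticated, but a standard classifier shares the same structural constraint: every input, however unfamiliar, is mapped to one of the labels seen during training.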

4. High Resource Requirements

AI models, especially deep learning ones, demand serious computing power, electricity, memory, and time.

Example:

Training GPT-3 took thousands of petaflop/s-days of computation and a multimillion-dollar budget, not something your college lab can replicate overnight.

Why This Matters:

AI development is often limited to tech giants or well-funded institutions. This creates an uneven playing field and slows down innovation in smaller ecosystems.
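The “thousands of petaflop/s-days” figure can be sanity-checked with back-of-the-envelope arithmetic. The sketch below uses the widely cited rule of thumb that training a transformer costs roughly 6 FLOPs per parameter per training token, together with GPT-3’s reported 175 billion parameters and roughly 300 billion training tokens. The per-accelerator throughput is a hypothetical round number, not a measured value.

```python
# Back-of-the-envelope training compute using the rule of thumb C ~ 6 * N * D FLOPs,
# where N = parameter count and D = number of training tokens.
N_PARAMS = 175e9                  # GPT-3's reported parameter count
N_TOKENS = 300e9                  # roughly the number of tokens GPT-3 was trained on
SECONDS_PER_DAY = 86_400
PFS_DAY = 1e15 * SECONDS_PER_DAY  # FLOPs in one petaflop/s-day

# Hypothetical sustained throughput of a single accelerator (100 TFLOP/s);
# real utilization varies widely with hardware and software.
ASSUMED_GPU_FLOPS = 100e12

total_flops = 6 * N_PARAMS * N_TOKENS
pfs_days = total_flops / PFS_DAY
gpu_days = total_flops / (ASSUMED_GPU_FLOPS * SECONDS_PER_DAY)

print(f"total compute: {total_flops:.2e} FLOPs")
print(f"= {pfs_days:,.0f} petaflop/s-days")
print(f"= {gpu_days:,.0f} days on a single accelerator at the assumed rate")
```

Treat the result as an order-of-magnitude estimate: it lands at roughly 3,600 petaflop/s-days, consistent with the figure above, or about a century of work for one accelerator at the assumed rate, which is why large training runs are spread across many accelerators in parallel.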

5. Explainability Issues (Black Box Problem)

Many AI models, especially neural networks, are so complex that even their developers can’t fully explain how they arrived at a decision.

Example:

An AI might reject a home loan application, but when asked why, the system can’t provide a clear or logical reason, just a probability score.

Why This Matters:

In regulated industries, a lack of transparency can lead to non-compliance, lawsuits, and a total breakdown of user trust.
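When a model only hands back a probability, one crude way to get a hint of its reasoning is to probe it from the outside. The sketch below is an assumption-laden illustration, not a standard explainability tool: `black_box_loan_model` is a hypothetical stand-in (in a real system it might have millions of parameters, and here we pretend we can only call it), and the sensitivity probe swaps each feature for a hypothetical “baseline” applicant’s value, one at a time, to see which change moves the score most.

```python
import math


def black_box_loan_model(applicant: dict) -> float:
    """Hypothetical opaque model: callers see only the returned approval probability."""
    z = (0.03 * applicant["income_k"] - 4.0 * applicant["debt_ratio"]
         + 0.1 * applicant["years_employed"] - 1.0)
    return 1.0 / (1.0 + math.exp(-z))


def sensitivity(model, applicant, baseline):
    """Swap each feature for its baseline value, one at a time, and record the score change."""
    original = model(applicant)
    effects = {}
    for feature in applicant:
        probe = dict(applicant)
        probe[feature] = baseline[feature]
        effects[feature] = model(probe) - original
    return original, effects


applicant = {"income_k": 50, "debt_ratio": 0.6, "years_employed": 2}
baseline = {"income_k": 60, "debt_ratio": 0.2, "years_employed": 5}  # hypothetical reference

score, effects = sensitivity(black_box_loan_model, applicant, baseline)
top_feature = max(effects, key=lambda f: abs(effects[f]))
print(f"approval probability {score:.2f}; most influential feature: {top_feature}")
```

A probe like this says which input mattered for one decision, not why the model weighs it that way, which is part of why explainability remains an open problem for complex models.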

6. Vulnerability to Attacks

AI systems can be surprisingly fragile. Small, deliberately crafted changes to an input, known as adversarial attacks, can confuse them completely.

Example:

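By subtly altering the surface texture of a 3D-printed toy turtle, researchers tricked an image classifier into identifying it as a rifle. Other studies have fooled models by changing just a few pixels in an image.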

Why This Matters:

This is dangerous in autonomous vehicles, military systems, or medical diagnostics, where mistakes can cost lives.
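Why can such tiny changes matter? It is easiest to see with a linear model. The sketch below applies a perturbation in the style of the fast gradient sign method (FGSM) to a hypothetical linear “turtle vs. rifle” classifier over 100 made-up pixel features. Every pixel moves by at most 0.03 on a 0–1 scale, yet because each nudge pushes the score in the same direction, the small changes accumulate and flip the prediction. Real attacks target deep networks and real images; the classifier, weights, and labels here are purely illustrative.

```python
# Hypothetical linear classifier over 100 pixel features in [0, 1].
N_PIXELS = 100
weights = [0.1 if i % 2 == 0 else -0.1 for i in range(N_PIXELS)]
bias = 0.2


def score(x):
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def predict(x):
    return "turtle" if score(x) > 0 else "rifle"


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(x, epsilon):
    """Move each pixel by epsilon against the sign of the score's gradient.

    For a linear score the gradient with respect to x is just `weights`;
    results are clipped to stay valid pixel values.
    """
    return [min(1.0, max(0.0, xi - epsilon * sign(w))) for xi, w in zip(x, weights)]


image = [0.5] * N_PIXELS
adversarial = fgsm(image, epsilon=0.03)
largest_change = max(abs(a - b) for a, b in zip(image, adversarial))

print(predict(image), "->", predict(adversarial), f"(max pixel change {largest_change:.2f})")
```

For a neural network, the gradient would come from backpropagation instead of being read directly off the weights, but the idea of many small, coordinated nudges is the same.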

7. No Emotional Intelligence

AI might understand words like “happy” or “anxious,” but it doesn’t feel anything. It can’t empathize, offer moral guidance, or understand subtle social dynamics.

Example:

A mental health chatbot might fail to detect suicidal ideation in a user’s tone and offer a generic “Have a nice day” response.

Why This Matters:

For roles requiring emotional nuance, such as counseling, teaching, and negotiation, AI just doesn’t cut it.

8. Legal and Ethical Challenges

The law is still catching up with AI. Questions like “Who’s liable when AI fails?” remain largely unanswered.

Example:

If an autonomous delivery drone crashes and injures someone, it’s unclear whether the blame lies with the software developer, hardware manufacturer, or operator.

Why This Matters:

This legal ambiguity creates risk for businesses and makes regulators hesitant to approve AI deployments in critical sectors.

9. Job Displacement (Not Full Replacement)

AI doesn’t take jobs; it takes tasks. But that still impacts livelihoods, especially for people in roles that rely on routine or predictable workflows.

Example:

AI tools now handle basic customer support, invoice processing, and even some coding tasks.

Why This Matters:

Without proper reskilling programs, workers can be pushed out of the job market faster than they can adapt.

In a 2023 study, over 70% of surveyed AI professionals admitted they couldn’t fully explain how their deep learning models worked, highlighting the growing gap between performance and transparency.

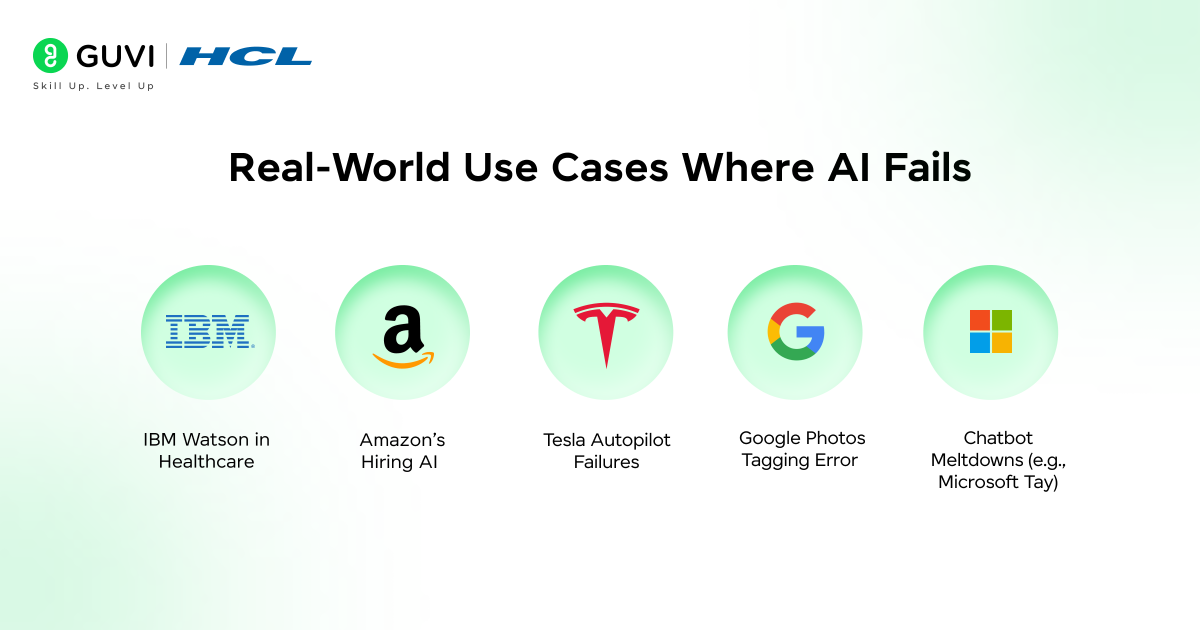

Real-World Use Cases Where AI Fails

Now that you’ve seen the theoretical side, let’s get into some real-world failures that highlight these limitations in action.

1. IBM Watson in Healthcare

What happened:

IBM’s Watson was supposed to revolutionize cancer diagnosis and treatment recommendations. Instead, it gave “unsafe and incorrect” suggestions in several cases due to poor training data and flawed logic.

Limitation exposed:

Lack of common sense, data dependency, and poor generalization.

Lesson:

Even in a well-funded, data-rich environment like medicine, AI can’t replace expert human judgment.

2. Amazon’s Hiring AI

What happened:

Amazon built an AI tool to screen resumes but had to scrap it after discovering it penalized female applicants.

Limitation exposed:

Bias in training data and lack of explainability.

Lesson:

AI learns from past patterns. If those patterns are biased, the AI will carry them forward, sometimes invisibly.

3. Tesla Autopilot Failures

What happened:

Tesla’s Autopilot driver-assistance system has been involved in several fatal crashes. In one widely reported 2016 case, it failed to recognize the white side of a tractor-trailer against a brightly lit sky.

Limitation exposed:

Inability to handle edge cases and vulnerability to unexpected input.

Lesson:

Even advanced computer vision systems can fail in real-world conditions such as unusual lighting or rare scenarios.

4. Google Photos Tagging Error

What happened:

In 2015, Google Photos labeled images of Black individuals as “gorillas.” After public backlash, Google apologized and blocked the “gorilla” label from its image tagging.

Limitation exposed:

Severe bias and a lack of contextual understanding.

Lesson:

AI doesn’t understand race, culture, or human dignity. It’s up to developers to test thoroughly and design responsibly.

5. Chatbot Meltdowns (e.g., Microsoft Tay)

What happened:

Microsoft’s chatbot Tay learned from Twitter users and turned into a racist, offensive bot within hours.

Limitation exposed:

Blind pattern imitation and lack of ethical safeguards.

Lesson:

Without guardrails, AI will mirror the worst of the internet, and fast.

These failures aren’t outliers. They are signs that AI, while powerful, still needs human oversight, context, and conscience.

Quick Challenge – Can You Spot the Limitation?

Here’s a quick scenario for you:

An AI is trained to detect spam emails. It has 99% accuracy in tests. But when deployed in the real world, it starts marking client emails as spam.

What limitation is being exposed here?

A) Emotional Intelligence

B) Data Bias or Overfitting

C) Lack of Legal Framework

D) Energy Consumption

Answer: B – Data Bias or Overfitting.

The AI was likely trained on a dataset that didn’t reflect real-world variation, leading it to misclassify legitimate emails.
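Here is a toy version of that scenario, as a minimal sketch under made-up assumptions: a small naive Bayes spam filter (with Laplace smoothing, written from scratch) trained on eight hypothetical emails in which the word “invoice” only ever appears in spam. It handles held-out emails that look like its training data, then flags a legitimate client email about an invoice, because the training set never showed it that invoices can be normal business mail.

```python
import math
from collections import Counter

# Hypothetical training emails. In this data set, "invoice" only ever appears in spam.
training_emails = [
    ("urgent invoice payment required", "spam"),
    ("claim your prize now", "spam"),
    ("overdue invoice click link", "spam"),
    ("win a free prize", "spam"),
    ("team meeting moved to friday", "ham"),
    ("lunch on thursday", "ham"),
    ("project notes attached", "ham"),
    ("see you at the meeting", "ham"),
]


def fit(examples):
    """Count word frequencies and documents per label."""
    counts = {"spam": Counter(), "ham": Counter()}
    docs = Counter()
    for text, label in examples:
        counts[label].update(text.split())
        docs[label] += 1
    vocab = set(counts["spam"]) | set(counts["ham"])
    return counts, docs, vocab


def classify(model, text):
    """Pick the label with the highest naive Bayes log-probability."""
    counts, docs, vocab = model
    total_docs = sum(docs.values())
    best_label, best_score = None, -math.inf
    for label in counts:
        n_words = sum(counts[label].values())
        score = math.log(docs[label] / total_docs)
        for word in text.split():
            if word in vocab:  # ignore words never seen in training
                score += math.log((counts[label][word] + 1) / (n_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


model = fit(training_emails)
print(classify(model, "claim a free prize"))                    # held-out spam
print(classify(model, "meeting notes attached"))                # held-out ham
print(classify(model, "invoice and payment details attached"))  # legitimate client email
```

The filter scores well on emails that resemble its training set, but that accuracy says little about real-world traffic the training data never represented.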

Where We Go From Here?

Understanding AI’s limitations doesn’t mean we stop using it. Instead, it means we use it wisely. Here’s what you can do as a student or early professional:

- Stay curious: Learn not just how to build AI, but how to evaluate and question it.

- Think ethically: Ask who might be affected when AI fails.

- Upskill constantly: The best way to future-proof yourself is to blend technical know-how with problem-solving and people skills.

If you’re serious about mastering artificial intelligence and want to apply it in real-world scenarios, don’t miss the chance to enroll in HCL GUVI’s Intel & IITM Pravartak Certified Artificial Intelligence & Machine Learning course. Endorsed with Intel certification, this course adds a globally recognized credential to your resume, a powerful edge that sets you apart in the competitive AI job market.

Conclusion

In conclusion, artificial intelligence is a game-changing tool, but it’s not a magic wand. Its limitations aren’t just technical quirks; they’re deeply tied to how we train, deploy, and interact with these systems.

As a student stepping into the tech world, your edge lies not in blindly adopting AI, but in knowing when to trust it, when to question it, and when to step in with good old human judgment. The future of AI isn’t just about smarter machines; it’s about smarter humans designing them.

FAQs

1. Why can’t AI understand emotions like humans?

AI can detect emotion based on text or facial expressions, but it doesn’t feel or understand them. It lacks consciousness and emotional context.

2. Is AI dangerous if it becomes too powerful?

AI itself isn’t inherently dangerous, but misuse or over-reliance without safeguards can lead to risks, especially in defense, finance, or healthcare.

3. Can AI ever have common sense?

Researchers are working on it, but current models are still far from achieving human-level common sense reasoning.

4. Why is AI often biased?

Because AI learns from historical data, any existing bias in the data gets replicated or amplified in the model unless corrected.

5. What’s the biggest barrier to using AI in real life?

There’s no single barrier: a mix of data quality issues, lack of explainability, ethical concerns, and high resource requirements makes large-scale adoption difficult.
