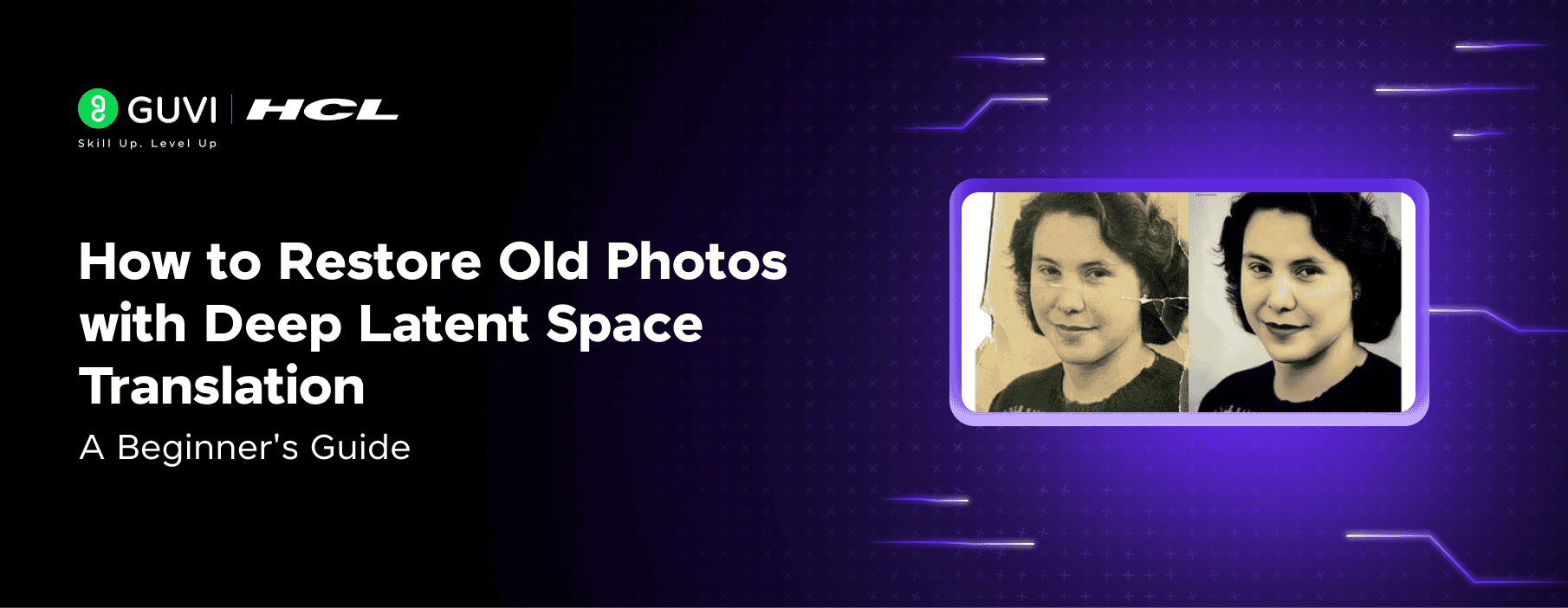

How to Restore Old Photos with Deep Latent Space Translation: A Beginner’s Guide [2025]

Sep 10, 2025 (Last Updated) · 3 Min Read

Old photographs are windows to our past: moments that define family history and heritage, and that tell us who we are and where we come from. Yet over time, prints fade, tear, and collect scratches, robbing them of their vibrancy. What if technology like deep latent space translation could restore these treasured memories to their original glory, with no Photoshop or graphic design skills required?

And what if you could do it yourself, right at home? With Deep Latent Space Translation, a breakthrough in deep learning, that's now possible. The process is a little tricky, though, so follow along carefully.

In this blog, we will walk through this technique, which teaches AI to encode a damaged photo into a compact latent representation, correct the damage there, and decode a clean image, removing noise, repairing scratches and tears, and reviving faded colors along the way. From vintage portraits to historical archives, here's how you can bring old images back to life using state-of-the-art AI. Let's begin!

Table of contents

- What Is Deep Latent Space Translation?

- How It Works (Step-by-Step)

- Why It’s Better Than Traditional Methods

- How to Use It Today

- Real-World Applications

- Concluding Thoughts…

What Is Deep Latent Space Translation?

At its core, the technique trains neural networks to map degraded old photos into a latent space, a compact encoded form that captures the essential visual information. Inside that space, the degraded representation is translated into a clean one, which is then decoded back into a high-quality image. This involves:

![How to Restore Old Photos with Deep Latent Space Translation: A Beginner’s Guide [2025] 1 What Is Deep Latent Space Translation](https://www.guvi.in/blog/wp-content/uploads/2025/07/What-Is-Deep-Latent-Space-Translation_.png)

- Training two Variational Autoencoders (VAEs): one shared by real old photos and synthetically degraded images (with scratches, blur, etc.), and one for clean images.

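- Learning a translation between the two latent spaces using pairs of synthetically degraded and clean images. Because real old photos and synthetic images are encoded into the same latent space, a mapping learned on synthetic pairs also applies to real damage. This "triplet domain" translation (real old photos, synthetic images, clean images) bridges the gap between artificial and real-world degradation.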
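
To make the encode, translate, decode idea concrete, here is a deliberately simplified linear sketch in Python with NumPy. It is a stand-in under heavy assumptions, not the method from the original research: PCA replaces each VAE (one fitted on degraded images, one on clean images), a least-squares affine map replaces the deep mapping network, and the "photos" are toy 64-pixel vectors with fading and noise. All function names are hypothetical.

```python
import numpy as np


def fit_linear_autoencoder(images, latent_dim):
    """PCA as a linear stand-in for a VAE: returns (mean, basis)."""
    mean = images.mean(axis=0)
    # Rows of vt are principal directions, sorted by explained variance.
    _, _, vt = np.linalg.svd(images - mean, full_matrices=False)
    return mean, vt[:latent_dim]


def encode(images, model):
    mean, basis = model
    return (images - mean) @ basis.T          # (n, d) -> (n, latent_dim)


def decode(latents, model):
    mean, basis = model
    return latents @ basis + mean             # (n, latent_dim) -> (n, d)


def _with_bias(z):
    # Append a constant column so the latent mapping can include an offset.
    return np.hstack([z, np.ones((len(z), 1))])


def fit_latent_mapping(z_old, z_clean):
    """Least-squares affine map from old-image latents to clean-image latents."""
    mapping, *_ = np.linalg.lstsq(_with_bias(z_old), z_clean, rcond=None)
    return mapping


def restore(old_images, old_model, mapping, clean_model):
    """Encode with the 'old' model, translate in latent space, decode with the 'clean' model."""
    z_translated = _with_bias(encode(old_images, old_model)) @ mapping
    return decode(z_translated, clean_model)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    d, k, n = 64, 8, 500                      # pixels per toy "image", latent size, samples
    # Toy clean images lying on a k-dimensional subspace (pixel variance about 1).
    clean = rng.normal(size=(n, k)) @ rng.normal(size=(k, d)) / np.sqrt(k)
    # Synthetic degradation: faded contrast, shifted brightness, additive noise.
    old = 0.6 * clean + 0.2 + 0.3 * rng.normal(size=clean.shape)

    # Fit both "autoencoders" and the latent mapping on the first 400 synthetic pairs.
    old_model = fit_linear_autoencoder(old[:400], k)
    clean_model = fit_linear_autoencoder(clean[:400], k)
    mapping = fit_latent_mapping(encode(old[:400], old_model),
                                 encode(clean[:400], clean_model))

    # Restore the 100 held-out damaged images and compare errors.
    restored = restore(old[400:], old_model, mapping, clean_model)
    print("MSE, damaged vs clean: ", np.mean((old[400:] - clean[400:]) ** 2))
    print("MSE, restored vs clean:", np.mean((restored - clean[400:]) ** 2))
```

Because the mapping operates on latent codes rather than raw pixels, most of the noise falls outside the learned subspace and is discarded during encoding, so the restored error comes out well below the damaged error. The toy has no separate "real old photo" domain, so it does not illustrate the domain-gap part of the method; that relies on the shared VAE.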

How It Works (Step-by-Step)

![How to Restore Old Photos with Deep Latent Space Translation: A Beginner’s Guide [2025] 2 How It Works Step by Step](https://www.guvi.in/blog/wp-content/uploads/2025/07/How-It-Works-Step-by-Step.png)

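- Dataset Preparation: Real old photos rarely come with clean originals, so training pairs are created synthetically: clean, high-quality images are artificially degraded (scratches, noise, blur, fading) to produce matching damaged versions. A separate set of real old photos teaches the model what genuine damage looks like.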

- Feature Encoding: Each photo passes through a VAE that transforms it into a compact latent representation, with one VAE for old (real and synthetic) images and another for clean ones.

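- Latent Translation Network: A mapping network learns to convert old-image latents into clean-image latents using the synthetic pairs. Because it works in a latent space that real and synthetic photos share, the mapping generalizes to real damage instead of overfitting to synthetic degradations.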

- Dual Restoration Branches: the mapping network processes features in two branches whose outputs are fused in the latent space.

  - Global branch: a partial non-local block addresses structured defects like scratches and spots, filling damaged regions with context drawn from intact parts of the image.

  - Local branch: handles unstructured issues such as blur and noise.

- Face Refinement Module: A dedicated sub-network enhances facial details, aiming for lifelike clarity while preserving each person's identity.
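
The "partial" in the global branch's partial non-local block means that when a position gathers context from the rest of the image, damaged positions are excluded, so scratches are filled from intact regions. Below is a minimal NumPy sketch of that masking idea under simplifying assumptions: features are a flat (positions × channels) array, similarity is a plain dot product instead of learned embeddings, and the scratch mask is given (in practice it would come from a scratch-detection step). The function name is hypothetical.

```python
import numpy as np


def partial_nonlocal(features, mask):
    """Simplified partial non-local block with no learned weights.

    features: (N, C) array, one feature vector per spatial position.
    mask:     (N,) array, 1.0 where a position is damaged, 0.0 where it is intact.
              At least one position must be intact.
    Damaged positions are replaced by a similarity-weighted average of intact
    positions only; intact positions are returned unchanged.
    """
    intact = 1.0 - mask
    # Dot-product similarity between every pair of positions.
    scores = features @ features.T
    # The "partial" part: damaged positions may not serve as context.
    scores = np.where(intact[None, :] > 0, scores, -np.inf)
    scores = scores - scores.max(axis=1, keepdims=True)   # numerically stable softmax
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)
    attended = weights @ features
    # Keep intact features; fill damaged ones from the attended context.
    return intact[:, None] * features + mask[:, None] * attended


if __name__ == "__main__":
    # Toy 1-D "image": two textures, A = [3, 0] and B = [0, 3].
    feats = np.array([[3.0, 0.0]] * 10 + [[0.0, 3.0]] * 10)
    feats[3] = [2.0, 1.0]                     # a scratch corrupts position 3 (inside texture A)
    mask = np.zeros(20)
    mask[3] = 1.0
    out = partial_nonlocal(feats, mask)
    print("repaired feature at position 3:", out[3])
```

In the toy run, the corrupted feature is still most similar to texture A, so the repaired feature at position 3 ends up close to A's [3, 0], while every intact position passes through untouched.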

Put simply, here are the steps involved in old photo restoration using deep latent space translation, and how you should go about each one:

![How to Restore Old Photos with Deep Latent Space Translation: A Beginner’s Guide [2025] 3 old photo restoration using deep latent space translation](https://www.guvi.in/blog/wp-content/uploads/2025/07/image-8.png)

- Collect a dataset of old photographs and high-quality images to use for training your model.

- Then, you must preprocess the data by resizing the images and normalizing the pixel values.

- After that, build a deep convolutional neural network (DCNN), called a generator, to perform the image translation: it takes old photos as input and produces high-quality images as output.

- Then train the generator on old photos paired with their high-quality versions. During training, it learns to produce images that are very close to the real high-quality images.

- Next, evaluate the trained model on a new set of old photos it has not seen during training. It should produce a high-quality restoration of each one.

- Finally, use the trained model to restore your old, damaged photographs. The model takes each photo as input and returns a high-quality version with noise, scratches, and other imperfections removed.
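
The data-collection, preprocessing, and evaluation steps above can be sketched with a few NumPy helpers. This is a minimal illustration under stated assumptions, not code from the original project: images are grayscale arrays, pixels are normalized to [-1, 1] (a common convention for generator networks, assumed here), synthetic damage covers only fading, noise, and straight vertical scratches, and PSNR stands in for whatever evaluation metric you choose. Resizing is left to an image library such as Pillow. All function names and default values are hypothetical.

```python
import numpy as np


def normalize(image_uint8):
    """Map 8-bit pixel values [0, 255] to floats in [-1, 1]."""
    return image_uint8.astype(np.float32) / 127.5 - 1.0


def synthetic_degrade(clean, rng, noise_std=0.1, fade=0.7, n_scratches=3):
    """Make a damaged copy of a normalized grayscale image (H, W) in [-1, 1].

    Applies fading (reduced contrast, slight brightening), Gaussian noise,
    and a few one-pixel-wide white vertical 'scratches'.
    Returns (degraded, scratch_mask) so the pair can be used for training.
    """
    h, w = clean.shape
    degraded = fade * clean + (1.0 - fade) * 0.3          # washed-out contrast
    degraded = degraded + rng.normal(0.0, noise_std, size=clean.shape)
    mask = np.zeros_like(clean)
    for col in rng.choice(w, size=n_scratches, replace=False):
        degraded[:, col] = 1.0                             # scratch: saturated white line
        mask[:, col] = 1.0
    return np.clip(degraded, -1.0, 1.0), mask


def psnr(restored, reference, data_range=2.0):
    """Peak signal-to-noise ratio in dB; data_range is 2 for images in [-1, 1]."""
    mse = np.mean((restored - reference) ** 2)
    return float("inf") if mse == 0 else 10.0 * np.log10(data_range ** 2 / mse)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Placeholder "photo": a horizontal gradient instead of a real scanned image.
    photo = np.tile(np.linspace(0, 255, 64), (64, 1)).astype(np.uint8)
    clean = normalize(photo)
    degraded, mask = synthetic_degrade(clean, rng)
    print(f"PSNR of the damaged copy: {psnr(degraded, clean):.1f} dB")
```

A higher PSNR on held-out photos means the model's output is closer to the reference; a restoration model should score well above the damaged input it was given.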


Why It’s Better Than Traditional Methods

- Handles mixed degradation types—from dust and tears to fading and blur.

- Operates in latent space, making it resilient to domain differences between synthetic and real photos.

- In the original authors' comparisons, it produced more realistic, higher-quality results than traditional restoration tools and earlier GAN-based methods.

How to Use It Today

- Microsoft Research GitHub: Clone the official microsoft/Bringing-Old-Photos-Back-to-Life repository and run its pretrained models to restore your own images.

- Keras/TensorFlow Reimplementation: Use the OldPhotoRestorationDLST project for smoother integration with your Python environment.

- Hands-on in Linux: Follow comprehensive steps on LinuxLinks for installation and GUI-based use.

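NOTE: Once you have cloned the repository and downloaded its pretrained models (the project README explains how), a GPU and a single command are enough to restore a whole folder of vintage photos: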

python run.py --input_folder ./old_photos --output_folder ./restored_photos --with_scratch
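
If you want to launch the restoration from your own Python scripts (for example, to process several folders), a small wrapper around that command can help. This is a hypothetical helper, not part of the project: it assumes you have cloned the repository, downloaded its pretrained checkpoints, and that run.py accepts the --input_folder, --output_folder, and --with_scratch flags from the command above. Check any other option against the project README.

```python
import subprocess
import sys
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp", ".tif", ".tiff"}


def build_restore_command(input_folder, output_folder, with_scratch=True):
    """Build the run.py command as an argument list (no shell quoting needed)."""
    cmd = [sys.executable, "run.py",
           "--input_folder", str(input_folder),
           "--output_folder", str(output_folder)]
    if with_scratch:
        cmd.append("--with_scratch")    # enable scratch handling for damaged prints
    return cmd


def restore_folder(input_folder, output_folder, repo_dir=".", with_scratch=True, dry_run=False):
    """Check the input folder for images, then run run.py from inside repo_dir."""
    images = [p for p in Path(input_folder).iterdir() if p.suffix.lower() in IMAGE_SUFFIXES]
    if not images:
        raise FileNotFoundError(f"no images found in {input_folder}")
    Path(output_folder).mkdir(parents=True, exist_ok=True)
    # Absolute paths, because the script runs with repo_dir as its working directory.
    cmd = build_restore_command(Path(input_folder).resolve(),
                                Path(output_folder).resolve(), with_scratch)
    if not dry_run:
        subprocess.run(cmd, cwd=repo_dir, check=True)   # raises if run.py fails
    return cmd


if __name__ == "__main__":
    # Print the command that would be run, without executing it.
    print(" ".join(build_restore_command("./old_photos", "./restored_photos")))
```

Passing an argument list to subprocess.run (rather than a shell string) avoids quoting problems with folder names that contain spaces, and check=True makes a failed run raise an error instead of passing silently.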

Real-World Applications

![How to Restore Old Photos with Deep Latent Space Translation: A Beginner’s Guide [2025] 4 Real World Applications](https://www.guvi.in/blog/wp-content/uploads/2025/07/Real-World-Applications.png)

- Family Archives: Bring multi-generational photos back to life.

- Historical Preservation: Restore archives and museum collections.

- Creative Projects: Use vivid imagery for documentaries, prints, or NFTs.

If you’re fascinated by how deep learning can restore old photos and want to build such powerful AI models yourself, check out HCL GUVI’s Artificial Intelligence and Machine Learning Course. This industry-relevant course offers hands-on projects, mentorship from experts, and certification from IIT-M Pravartak—perfect for mastering AI from scratch.


Concluding Thoughts…

Deep Latent Space Translation is more than just a tech innovation—it’s a way to revive history, reconnect with your past, and preserve memories for future generations. Whether you’re restoring your grandparents’ wedding photo or digitizing a rare family portrait, this tech makes it possible without manual editing or artistic skills.

Ready to breathe life into your old photos? Clone the repo, run the model, and watch your memories transform. Try it yourself to learn how it works firsthand, and if you have any questions, leave them in the comments section below.
