Have you ever wondered how a machine learning model learns to make accurate predictions? Behind every intelligent AI system, from spam filters to recommendation engines, there lies one simple concept: models learn from data. However, not all data is treated the same way. To train a model effectively and evaluate its performance, the dataset is divided into two parts: training data and testing data.

Training and testing data play a crucial role in building dependable models. While the training data helps the model identify patterns and relationships, the testing data checks whether the model can apply that learning to new, unseen situations. In this blog, we’ll explore what these datasets are, why they’re important, how to split them correctly, and how they work together to create accurate, data-driven predictions.

Table of contents

- What Is Training Data?

- What Is Testing Data?

- Difference Between Training and Testing Data

- Why Do We Need Both Training and Testing Data?

- How Is Data Split into Training and Testing Sets?

- Common Methods for Splitting Data

  - Hold-Out Method

  - K-Fold Cross-Validation

  - Stratified K-Fold Cross-Validation

  - Leave-One-Out Cross-Validation (LOOCV)

- Practical Example: Splitting a Real Dataset

- Common Mistakes to Avoid

- Conclusion

- FAQs

  - Can I use the same dataset for training and testing?

  - What if my dataset is too small to split?

  - How can I know if my model is overfitting?

  - Can I use a different split ratio than 80/20?

  - Should I include outliers in training and testing data?

What Is Training Data?

Training data is the dataset used to teach a machine learning model. It contains examples that help the model understand patterns, relationships, and trends. The model analyzes this data repeatedly, adjusting its parameters to reduce errors and improve accuracy.

Key points about training data:

- It typically makes up about 70–80% of the total dataset, though the exact share depends on how much data you have.

- It contains both input features (like age, experience, salary) and output labels (like promotion: yes or no).

- The model uses this data to learn correlations and predict outcomes.

Example:

If you’re training a model to predict employee promotions, the training data will include employee details (experience, salary, performance score) and whether they were promoted. The model learns from these examples before it sees any new data.

What Is Testing Data?

Testing data is the dataset used to evaluate how well the trained model performs on unseen data. It helps determine whether the model can make accurate predictions outside of what it has already learned.

Key points about testing data:

- It makes up around 20–30% of the dataset.

- It is not shown to the model during training.

- It helps measure how well the model generalizes to real-world data.

Example:

After training your promotion prediction model, you test it on employee records it has never seen. If the model predicts promotions correctly for most of these unseen cases, that is good evidence it learned general patterns rather than overfitting to the training data. The more test records you have, the more you can trust that evidence.

Difference Between Training and Testing Data

When working with machine learning models, it’s important to understand how training data and testing data differ in purpose and use. Both come from the same dataset but serve completely different goals in the model development process.

In this section, we’ll look at the key differences between training and testing data, including their purpose, size, usage, and how each contributes to building accurate and reliable machine learning models.

| Aspect | Training Data | Testing Data |
| --- | --- | --- |
| Purpose | Used to teach the model and help it learn patterns, relationships, and features from the data. | Used to evaluate how well the trained model performs on new, unseen data. |
| Usage | The model adjusts its parameters based on this data to minimize errors. | The model predicts outcomes without changing its parameters. |
| Size | Usually takes up a larger portion of the dataset (around 70–80%). | Takes up a smaller portion (around 20–30%). |
| Goal | Improve the model’s learning ability and accuracy during training. | Measure the model’s performance and generalization ability. |
| Examples | Training a model to identify spam emails based on known examples. | Checking if the trained model can correctly identify new spam emails it has never seen before. |

In short, the training data helps the model learn, while the testing data checks if it has truly understood. Both work together to build models that are not just accurate but also reliable in real-world situations.

Why Do We Need Both Training and Testing Data?

Using both training and testing data lets you check whether a machine learning model has truly learned rather than just memorized the data it has seen. This separation helps you build models that perform well not only on the data they were trained on but also on completely new inputs.

Here’s why splitting the data is important:

- Detects overfitting: A model that memorizes specific examples scores well on training data but poorly on held-out testing data, so the gap between the two scores exposes the problem.

- Ensures fairness: The testing data checks how well the model performs on unseen data, ensuring unbiased evaluation.

- Improves evaluation: With separate testing data, you can accurately measure how effective the model is using metrics like accuracy, recall, or precision.

- Builds reliability: It ensures that your model can handle real-world data confidently, not just the examples it was trained on.
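The points above can be seen in a short experiment. This is a minimal sketch, assuming scikit-learn is installed; the synthetic dataset, the noise level, and the choice of an unconstrained decision tree are illustrative assumptions, not something prescribed by this article.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic data with some randomly flipped labels (flip_y),
# so memorizing the training rows is not the same as learning.
X, y = make_classification(n_samples=1000, n_features=20, n_informative=5,
                           flip_y=0.2, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0)

# A tree with no depth limit can memorize every training row.
model = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)

train_acc = model.score(X_train, y_train)
test_acc = model.score(X_test, y_test)
print(f"train accuracy: {train_acc:.2f}")
print(f"test accuracy:  {test_acc:.2f}")
```

Without the held-out test set, only the training score would be visible, and it would look far better than the model deserves.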

How Is Data Split into Training and Testing Sets?

Splitting data is a critical step that determines how well your model will perform in real-world conditions. It’s usually done randomly to make sure both sets represent the entire dataset fairly.

Common Split Ratios:

- 80% training / 20% testing

- 70% training / 30% testing

- 60% training / 40% testing

Example:

Imagine you have a dataset of 1,000 customer records for a churn prediction model. If you use an 80/20 split, 800 records will be used for training the model to learn patterns, and the remaining 200 records will be used for testing how accurately it predicts customer churn on unseen data.

You can easily perform this split using Python’s scikit-learn library:

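```python
from sklearn.model_selection import train_test_split

# X holds the input features and y the labels, loaded beforehand.
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
```

This call shuffles the rows, places 20% of them in the testing set (test_size=0.2), and keeps the remaining 80% for training. Fixing random_state makes the split reproducible.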
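The snippet above expects X and y to exist already. The sketch below fills them with random stand-in values (hypothetical data, not a real churn dataset) so the 1,000-record example can be run end to end and the 800/200 sizes checked.

```python
import numpy as np
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(42)

# Hypothetical stand-in for 1,000 customer records with 5 numeric features each.
X = rng.normal(size=(1000, 5))
# Hypothetical churn labels (1 = churned, 0 = stayed).
y = rng.integers(0, 2, size=1000)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42)

print(X_train.shape, X_test.shape)  # (800, 5) (200, 5)
```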

Common Methods for Splitting Data

While random splitting is most common, there are several structured methods to ensure reliable evaluation.

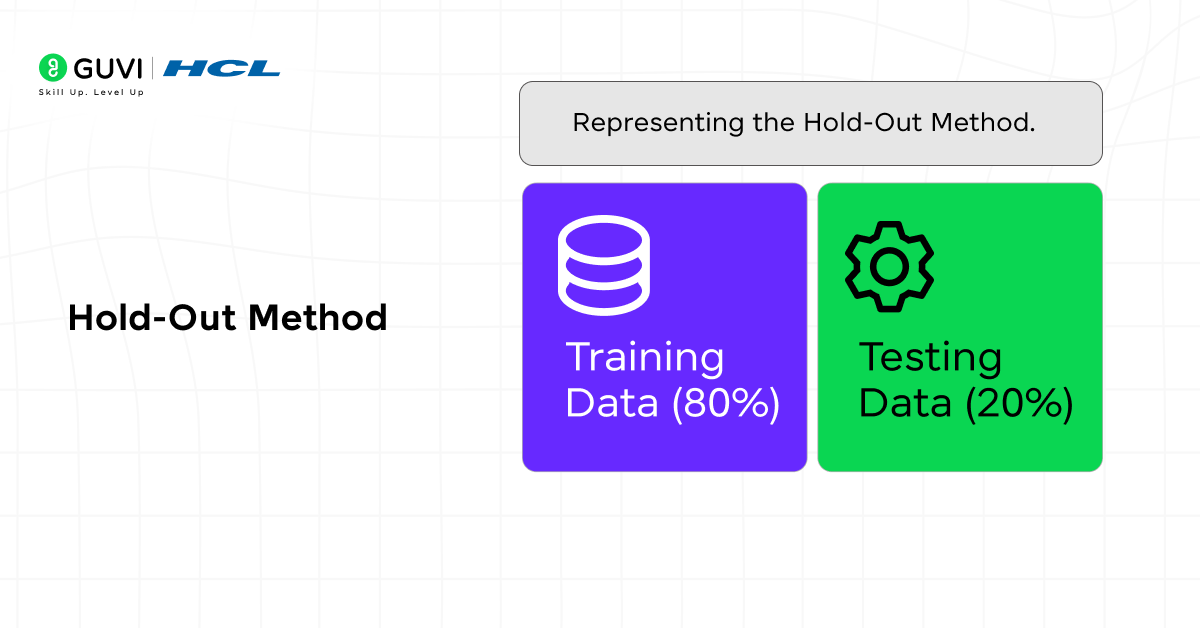

1. Hold-Out Method

- The simplest approach: the data is divided once into two parts, training and testing (e.g., 80/20 or 70/30).

- Works well for large datasets.

- Quick and efficient, but on small datasets the score can change noticeably depending on which rows land in the testing set.

2. K-Fold Cross-Validation

- The dataset is divided into K equal parts (folds).

- The model is trained K times — each time using a different fold for testing and the rest for training.

- The final score is the average performance across all K runs.

- Common values for K are 5 or 10.

- This method gives a more stable performance estimate than a single hold-out split, because every sample is used for testing exactly once.
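The K-Fold steps above can be sketched with scikit-learn's KFold and cross_val_score. The synthetic dataset and the logistic regression model are assumptions chosen only to keep the example small.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, cross_val_score

X, y = make_classification(n_samples=500, n_features=10, random_state=0)

# K = 5: each fold serves as the test set exactly once.
kfold = KFold(n_splits=5, shuffle=True, random_state=0)
model = LogisticRegression(max_iter=1000)

# One accuracy score per fold; the final score is their average.
scores = cross_val_score(model, X, y, cv=kfold, scoring="accuracy")
print("per-fold accuracy:", scores.round(3))
print("mean accuracy:", round(scores.mean(), 3))
```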

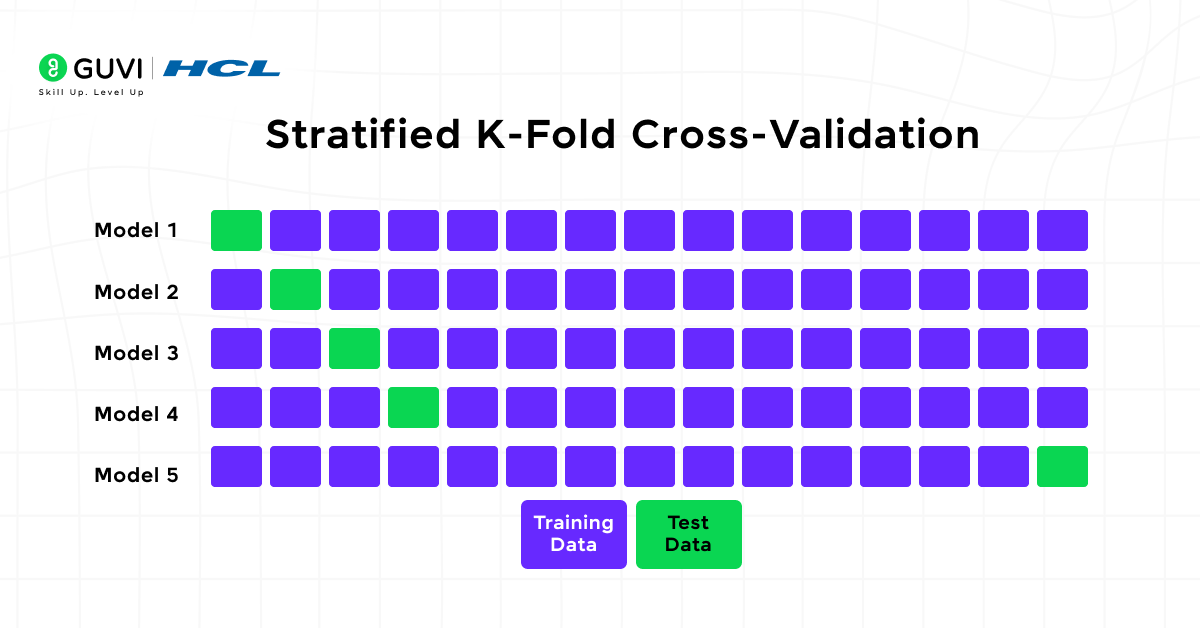

3. Stratified K-Fold Cross-Validation

- A variation of K-Fold used when datasets are imbalanced (for example, more “No” than “Yes” labels).

- Ensures each fold maintains the same proportion of class labels as the original dataset.

- This provides a fair evaluation across all categories.
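To see the proportions being preserved, here is a small sketch with hypothetical labels (80 "No" and 20 "Yes"). It uses scikit-learn's StratifiedKFold and records the share of "Yes" labels in each test fold.

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold

# Hypothetical imbalanced labels: 80 "No" (0) and 20 "Yes" (1).
y = np.array([0] * 80 + [1] * 20)
X = np.arange(len(y)).reshape(-1, 1)  # placeholder feature column

skf = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
fold_yes_rates = []
for train_idx, test_idx in skf.split(X, y):
    # Share of "Yes" labels in this fold's test portion.
    fold_yes_rates.append(float(y[test_idx].mean()))

print(fold_yes_rates)  # [0.2, 0.2, 0.2, 0.2, 0.2]
```

A plain K-Fold split on the same labels could leave some folds with very few "Yes" rows, which makes per-fold scores harder to compare.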

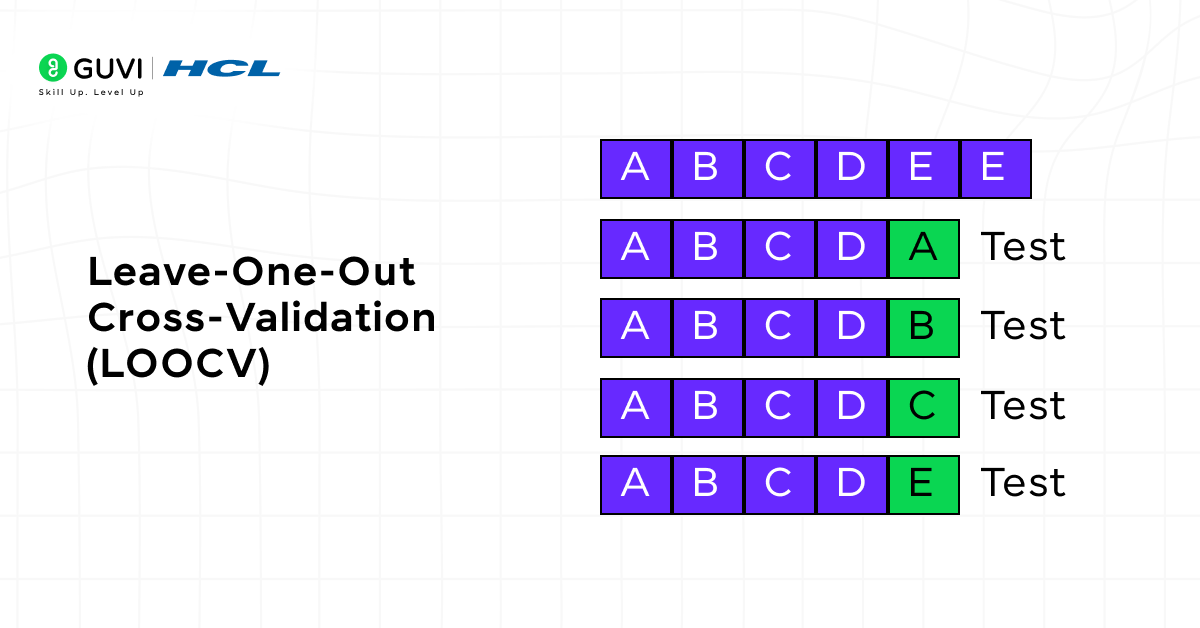

4. Leave-One-Out Cross-Validation (LOOCV)

- A special case of K-Fold where K equals the number of data points.

- Each sample is used once for testing, while all others are used for training.

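- Trains on almost all of the data in every run, which is helpful for very small datasets, but it requires one model fit per sample and is computationally expensive for large datasets.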
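A minimal LOOCV sketch, again on an assumed synthetic dataset, kept deliberately small because the number of model fits equals the number of samples:

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import LeaveOneOut, cross_val_score

# Kept small on purpose: LOOCV fits one model per sample.
X, y = make_classification(n_samples=40, n_features=5, random_state=0)

loo = LeaveOneOut()
scores = cross_val_score(LogisticRegression(max_iter=1000), X, y, cv=loo)

# Each score is 1.0 (held-out sample predicted correctly) or 0.0.
print("number of fits:", len(scores))  # 40, one per data point
print("LOOCV accuracy:", scores.mean())
```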

Practical Example: Splitting a Real Dataset

Let’s take a small dataset to clearly understand how training and testing data are separated before building a machine learning model.

Sample Dataset:

| ID | Age | Experience (Years) | Salary | Promotion (Yes/No) |
| --- | --- | --- | --- | --- |
| 1 | 24 | 1 | 30000 | No |
| 2 | 29 | 4 | 48000 | Yes |
| 3 | 31 | 6 | 52000 | Yes |
| 4 | 26 | 2 | 34000 | No |
| 5 | 33 | 7 | 60000 | Yes |
| 6 | 28 | 3 | 41000 | No |
| 7 | 35 | 10 | 75000 | Yes |
| 8 | 27 | 2 | 36000 | No |
| 9 | 30 | 5 | 50000 | Yes |
| 10 | 25 | 1 | 31000 | No |

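Data Split Example:

If we split this dataset into 80% training data and 20% testing data, taking the rows in order to keep the illustration simple (in practice you would shuffle them first), we’ll have: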

- Training Data (Rows 1–8): Used to teach the model the relationship between input features (Age, Experience, Salary) and output (Promotion).

- Testing Data (Rows 9–10): Used to check how well the model performs on unseen data.

| Training Set → Rows 1–8 | Testing Set → Rows 9–10 |
| --- | --- |
| Helps the model learn patterns and relationships | Checks model accuracy on new data |

This split ensures that the model learns from most of the dataset but is still tested on fresh examples. It helps measure accuracy, generalization, and real-world performance — key aspects of reliable machine learning.
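The same split can be reproduced in code. This sketch assumes pandas and scikit-learn are available and rebuilds the sample table by hand. Unlike the row-order illustration above, train_test_split shuffles by default, so the two test rows will usually not be rows 9 and 10. The stratify argument is an optional extra that keeps one "Yes" and one "No" in the test set.

```python
import pandas as pd
from sklearn.model_selection import train_test_split

df = pd.DataFrame({
    "ID": range(1, 11),
    "Age": [24, 29, 31, 26, 33, 28, 35, 27, 30, 25],
    "Experience": [1, 4, 6, 2, 7, 3, 10, 2, 5, 1],
    "Salary": [30000, 48000, 52000, 34000, 60000,
               41000, 75000, 36000, 50000, 31000],
    "Promotion": ["No", "Yes", "Yes", "No", "Yes",
                  "No", "Yes", "No", "Yes", "No"],
})

X = df[["Age", "Experience", "Salary"]]  # input features
y = df["Promotion"]                      # output label

# 80/20 split; stratify=y preserves the Yes/No balance in both sets.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42)

print(len(X_train), len(X_test))  # 8 2
print(sorted(y_test))             # ['No', 'Yes']
```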

Common Mistakes to Avoid

While splitting and using data, many beginners make errors that reduce model quality. Here are some common mistakes to watch out for:

- Using the same data for both training and testing.

- Not shuffling data before splitting, leading to biased results.

- Letting the class distribution differ sharply between the training and testing sets (for example, a testing set with almost no “Yes” labels).

- Using a very small testing set that doesn’t represent real-world data.

- Forgetting to evaluate models using proper metrics (like accuracy, recall, or F1-score).

Avoiding these mistakes ensures that your model performs well not just in theory, but also in practice.
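For the last point in the list, here is a short sketch of computing several metrics at once with sklearn.metrics. The labels and predictions are made up for illustration and stand in for a real test set.

```python
from sklearn.metrics import accuracy_score, f1_score, precision_score, recall_score

# Hypothetical test-set labels and model predictions (1 = positive class).
y_true = [1, 0, 1, 1, 0, 0, 1, 0, 0, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0, 0, 0]

# 3 true positives, 1 false negative, 1 false positive, 5 true negatives.
accuracy = accuracy_score(y_true, y_pred)    # 8 correct out of 10
precision = precision_score(y_true, y_pred)  # 3 / (3 + 1)
recall = recall_score(y_true, y_pred)        # 3 / (3 + 1)
f1 = f1_score(y_true, y_pred)

print(f"accuracy={accuracy:.2f} precision={precision:.2f} "
      f"recall={recall:.2f} f1={f1:.2f}")
# accuracy=0.80 precision=0.75 recall=0.75 f1=0.75
```

Accuracy alone can look healthy on imbalanced data, which is why precision, recall, and F1-score are worth reporting alongside it.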

Conclusion

Understanding the difference between training and testing data is a key step toward building effective machine learning models. The training data teaches the model to recognize patterns, while the testing data ensures it can apply that knowledge to new and unseen information.

When data is properly prepared and split, it helps create models that perform accurately, adapt well to real-world data, and make reliable predictions — forming the foundation of successful data science and AI systems.

FAQs

1. Can I use the same dataset for training and testing?

No. Testing on the data the model was trained on rewards memorization, so the score looks better than it really is and tells you little about real-world performance.

2. What if my dataset is too small to split?

In that case, you can use cross-validation, which rotates the testing set across several folds so that every sample is used for both training and evaluation.

3. How can I know if my model is overfitting?

If your model performs very well on training data but poorly on testing data, it’s likely overfitting.

4. Can I use a different split ratio than 80/20?

Yes, depending on your dataset size. For large datasets, even 90/10 can work well.

5. Should I include outliers in training and testing data?

You can include them, but always analyze their impact — sometimes they improve learning, other times they distort results.
